Authors:

Ananya Pandey、Dinesh Kumar Vishwakarma

Paper:

https://arxiv.org/abs/2408.10246

Introduction

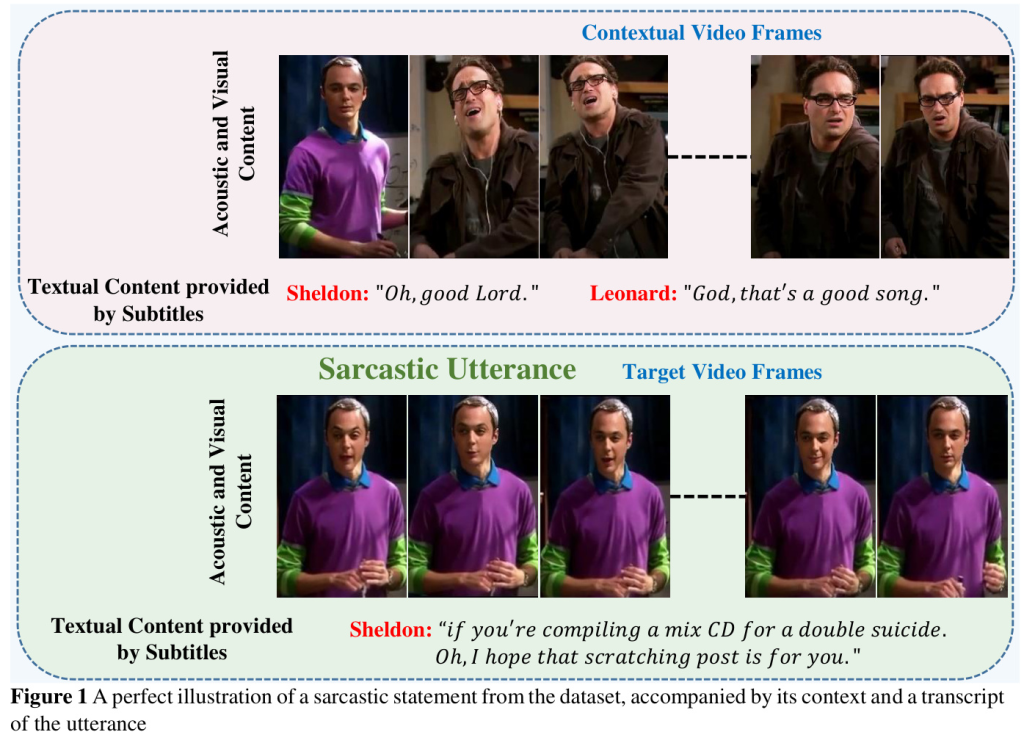

Sarcasm is a complex form of communication often conveyed through a combination of linguistic and non-linguistic cues. Recognizing sarcasm in conversations is a challenging task for computer vision and natural language processing systems. Traditional sarcasm recognition methods have primarily focused on text, but for more reliable identification, it is essential to consider visual, acoustic, and textual information. This blog explores VyAnG-Net, a novel multi-modal sarcasm recognition model that integrates visual, acoustic, and glossary features to enhance sarcasm detection accuracy.

Related Work

Unimodal Sarcasm Recognition

Text-Based Sarcasm Recognition

Early research in sarcasm detection focused on text-based content using lexicon or rule-based approaches. Twitter has been a primary data source, with human annotations and hashtag-based supervision being common methods. Classical machine learning algorithms like Naive Bayes, SVM, and logistic regression were initially used, but deep learning models such as GRU, LSTM, and ConvNets have shown better performance in handling large datasets.

Audio-Based Sarcasm Recognition

Sarcasm detection in audio primarily focuses on prosodic signals like speech rate and frequency. Studies have shown that slower speech rates and higher frequencies are indicative of sarcasm. Prosodic and spectral features, such as stress and intonation, are reliable predictors of sarcastic speech.

Multi-Modal Sarcasm Recognition

Image-Text Pairs

Recent research has explored sarcasm detection using image-text pairs, leveraging deep learning frameworks with attention mechanisms to improve accuracy. Studies have used Bi-GRU for text feature extraction and VGG-16 for visual features, with OCR for text within images.

Videos

The MUStARD dataset, the first multi-modal video dataset for sarcasm detection, has been pivotal in advancing this field. Researchers have used various deep learning architectures and fusion strategies to integrate visual, acoustic, and textual features for better sarcasm recognition.

Research Methodology

Objective

The goal of VyAnG-Net is to recognize sarcasm in video utterances by learning a mapping function from multi-modal training examples. The model aims to integrate visual, acoustic, and glossary (textual) information to accurately classify utterances as sarcastic or non-sarcastic.

VyAnG-Net Framework

VyAnG-Net consists of three main components:

1. Glossary Branch: Uses an attention-based tokenization approach to extract contextual features from the textual content provided by video subtitles.

2. Visual Branch: Incorporates a lightweight depth attention module to capture prominent features from video frames.

3. Multi-Headed Attention-Based Feature Fusion: Integrates features obtained from each modality to form a comprehensive multi-modal feature representation.

Experimental Design

Dataset

The MUStARD dataset, containing 690 video clips labeled as sarcastic or non-sarcastic, was used for training and evaluation. The dataset includes utterances from popular TV series like Friends and The Golden Girls. Two experimental setups were used: speaker-dependent and speaker-independent configurations.

Experimental Setup

The model was implemented using Keras and PyTorch frameworks. Evaluation metrics included accuracy, precision, recall, and F1 score. The model was trained for 200 epochs using the Adam optimizer with a learning rate of 0.001 and a batch size of 32. High-end GPU systems were used for training.

Results and Analysis

Performance Evaluation

VyAnG-Net was evaluated using unimodal, bimodal, and trimodal inputs. The trimodal approach outperformed unimodal and bimodal approaches in both speaker-dependent and speaker-independent configurations.

Speaker-Dependent Configuration

VyAnG-Net achieved a precision of 78.83%, recall of 78.21%, and F1 score of 78.52%, surpassing existing methods by a significant margin.

Speaker-Independent Configuration

VyAnG-Net exhibited exceptional performance with a precision of 75.69%, recall of 75.52%, and F1 score of 75.6%.

Comparative Analysis

VyAnG-Net outperformed baseline models in both configurations, demonstrating its effectiveness in integrating multi-modal features for sarcasm recognition.

Ablation Study

Ablation experiments showed that each component of VyAnG-Net contributed significantly to its overall performance. Removing any single module resulted in decreased accuracy and predictive power.

Generalization Study

VyAnG-Net’s generalizability was tested using the MUStARD++ dataset. The model trained on MUStARD and tested on MUStARD++ demonstrated its robustness and adaptability to unseen data.

Overall Conclusion

VyAnG-Net is a novel multi-modal sarcasm recognition model that effectively integrates visual, acoustic, and glossary features. The model outperforms existing methods and demonstrates strong generalizability across different datasets. Future research can explore advanced fusion strategies, transfer learning, and the development of visual sarcasm recognition datasets to further enhance sarcasm detection capabilities.