Authors:

Robert Geirhos, Priyank Jaini, Austin Stone, Sourabh Medapati, Xi Yi, George Toderici, Abhijit Ogale, Jonathon Shlens

Paper:

https://arxiv.org/abs/2408.08172

Towards Flexible Perception with Visual Memory: A Detailed Exploration

Introduction

The traditional machine learning workflow runs through data collection, preprocessing, model selection, training, evaluation, and deployment. Once a deep learning model is trained, however, its knowledge is static, which makes it hard to adapt to an ever-changing real world. This paper combines the representational power of deep neural networks with the flexibility of a database to create a visual memory system. The system addresses the limitations of static knowledge representation by allowing data to be added, removed, and controlled flexibly.

Building a Retrieval-Based Visual Memory for Classification

Building a Visual Memory

The visual memory system retrieves (image, label) pairs from an image dataset based on their similarity to a query image. A pre-trained image encoder extracts a feature vector for each image in the dataset. These feature vectors, along with their corresponding labels, are stored in a database, which forms the visual memory. For the experiments, the visual memory was built from datasets such as ImageNet-1K, using encoders such as DinoV2 and CLIP.

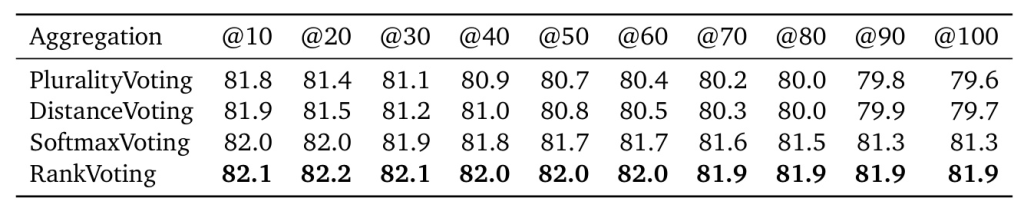
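The construction step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: `build_memory`, `l2_normalize`, and `toy_encoder` are hypothetical names, and the toy encoder simply flattens an array where a real system would call a pre-trained model such as DinoV2 or CLIP. Features are stored with unit norm so that a dot product later equals cosine similarity.

```python
import numpy as np

def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products equal cosine similarity."""
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    return x / np.maximum(norms, eps)

def build_memory(images, labels, encoder):
    """Encode every image and store the unit-norm features next to the labels."""
    features = np.stack([encoder(img) for img in images]).astype(np.float32)
    return {"features": l2_normalize(features), "labels": np.asarray(labels)}

def toy_encoder(image):
    """Hypothetical stand-in for a pre-trained encoder: flattens the image."""
    return np.asarray(image, dtype=np.float32).ravel()

# Six random 4x4 "images" with three classes, purely for illustration.
rng = np.random.default_rng(0)
images = rng.normal(size=(6, 4, 4))
labels = [0, 0, 1, 1, 2, 2]
memory = build_memory(images, labels, toy_encoder)
```

The memory here is just a pair of aligned arrays; any store that keeps features and labels aligned by index would serve the same purpose.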

Retrieval-Based Classification Using Visual Memory

Given a query image, its feature vector is extracted with the same encoder and compared with the feature vectors in the visual memory using cosine distance. The nearest neighbors are retrieved, and their labels are aggregated to classify the query image. Two approaches were implemented for fast inference: matrix multiplication on GPUs/TPUs for smaller datasets, and ScaNN for scalable nearest neighbor search on larger ones.
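The matrix-multiplication variant of this retrieval step might look like the sketch below. It assumes unit-norm features, so ranking by dot product is equivalent to ranking by cosine distance, and it uses a simple plurality vote as the aggregation rule. The function names and the toy 2-D features are illustrative assumptions, and the exact search shown here stands in for what ScaNN would do approximately at larger scale.

```python
import numpy as np

def l2_normalize(x):
    """Scale each row to unit length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def knn_classify(queries, mem_features, mem_labels, k=3):
    """Classify unit-norm queries by plurality vote over the k nearest entries."""
    sims = queries @ mem_features.T             # cosine similarity, shape (Q, N)
    nn_idx = np.argsort(-sims, axis=1)[:, :k]   # k most similar entries per query
    nn_labels = mem_labels[nn_idx]              # labels of those entries, shape (Q, k)
    num_classes = int(mem_labels.max()) + 1
    votes = np.stack([np.bincount(row, minlength=num_classes) for row in nn_labels])
    return votes.argmax(axis=1), nn_idx

# Toy memory: class 0 points near the x-axis, class 1 points near the y-axis.
mem_features = l2_normalize(np.array(
    [[1.0, 0.0], [0.9, 0.1], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9], [0.2, 0.8]]))
mem_labels = np.array([0, 0, 0, 1, 1, 1])

queries = l2_normalize(np.array([[1.0, 0.05], [0.05, 1.0]]))
predictions, neighbor_idx = knn_classify(queries, mem_features, mem_labels, k=3)
```

Returning `neighbor_idx` alongside the predictions keeps track of which memory entries produced each decision.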

The reliability of retrieved memory samples was also analyzed. The accuracy of a neighbor's label decreases as its rank increases (the first neighbor is more reliable than the tenth, for example), but it remains above chance even for distant neighbors. This suggests that aggregating labels across several neighbors, rather than relying on the single nearest one, can improve the final decision.
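One way to act on this observation is to let closer neighbors count for more when votes are aggregated. The sketch below uses a weight of `1 / (offset + rank)`; this weighting, the `offset` value, and the function name are assumptions chosen for illustration, since the summary above does not state which aggregation rule was used.

```python
import numpy as np

def rank_weighted_vote(neighbor_labels, num_classes, offset=2.0):
    """Aggregate labels ordered nearest-first, weighting rank r by 1 / (offset + r)."""
    scores = np.zeros(num_classes)
    for rank, label in enumerate(neighbor_labels):
        scores[label] += 1.0 / (offset + rank)
    return int(scores.argmax()), scores

# Two close neighbors say class 1, three more distant ones say class 0.
neighbor_labels = [1, 1, 0, 0, 0]
plurality_prediction = int(np.bincount(neighbor_labels).argmax())
weighted_prediction, scores = rank_weighted_vote(neighbor_labels, num_classes=2)
```

In this toy case the plurality vote picks class 0 because it has three votes, while the rank-weighted vote picks class 1 because its two votes come from the closest neighbors.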

Capabilities of a Visual Memory

Flexible Lifelong Learning: Adding Novel OOD Classes

A retrieval-based visual memory can naturally handle new information, aligning with the requirements of lifelong learning. By adding data for new classes from the NINCO dataset to the visual memory of a pre-trained model, high accuracy on new out-of-distribution (OOD) classes was achieved without affecting the in-distribution accuracy.
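Adding a class amounts to appending rows to the memory. The sketch below assumes a memory held as two aligned NumPy arrays and uses a 1-nearest-neighbor lookup for brevity; `add_to_memory`, `nearest_label`, and the toy 3-D features are hypothetical and only illustrate the mechanism.

```python
import numpy as np

def add_to_memory(mem_features, mem_labels, new_features, new_labels):
    """Append unit-normalized features and labels for new data; nothing is retrained."""
    new_features = new_features / np.linalg.norm(new_features, axis=1, keepdims=True)
    return (np.concatenate([mem_features, new_features]),
            np.concatenate([mem_labels, new_labels]))

def nearest_label(query, mem_features, mem_labels):
    """Return the label of the single most similar memory entry."""
    query = query / np.linalg.norm(query)
    return int(mem_labels[np.argmax(mem_features @ query)])

# Memory that initially knows two classes.
mem_features = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
mem_labels = np.array([0, 1])

novel_query = np.array([0.1, 0.0, 1.0])   # belongs to an unseen class
known_query = np.array([1.0, 0.1, 0.0])   # belongs to class 0

before_novel = nearest_label(novel_query, mem_features, mem_labels)
before_known = nearest_label(known_query, mem_features, mem_labels)

# Add two examples of a new class with label 2.
mem_features, mem_labels = add_to_memory(
    mem_features, mem_labels,
    np.array([[0.0, 0.0, 1.0], [0.1, 0.0, 0.9]]), np.array([2, 2]))

after_novel = nearest_label(novel_query, mem_features, mem_labels)
after_known = nearest_label(known_query, mem_features, mem_labels)
```

Before the update the novel query is forced onto an existing class; afterwards it maps to the new class, while the prediction for the known query is unchanged.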

Flexibly Trading Off Compute and Memory

The scaling behavior of visual memory with increasing memory size was studied. Larger models required fewer examples in memory to represent different concepts, indicating a flexible trade-off between model size and memory size. This was demonstrated using models of different sizes like DinoV2 ViT and CLIP ViT.

Flexibly Adding Billion-Scale Data Without Training

The performance of visual memory was tested with billion-scale data by combining ImageNet-1K and JFT-3B datasets. The results showed that validation error decreases with increasing memory size, even in the billion-scale data regime, without any additional training.

Flexible Removal of Data: Machine Unlearning

Machine unlearning, the process of removing the influence of specific training data, becomes straightforward with a visual memory system. Deleting a data point from the visual memory removes its influence on the model's decisions directly, because a deleted sample can no longer be retrieved as a neighbor.
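As a minimal sketch, assuming the memory is stored as aligned feature and label arrays, deletion is a boolean mask over rows. The helper names are hypothetical, and a production system built on an approximate nearest neighbor index would also need to update that index.

```python
import numpy as np

def remove_from_memory(mem_features, mem_labels, indices_to_forget):
    """Drop the given rows so they can never be retrieved again."""
    keep = np.ones(len(mem_labels), dtype=bool)
    keep[indices_to_forget] = False
    return mem_features[keep], mem_labels[keep]

def nearest_label(query, mem_features, mem_labels):
    """Return the label of the single most similar memory entry."""
    return int(mem_labels[np.argmax(mem_features @ query)])

# Unit-norm toy features with one entry per class.
mem_features = np.array([[1.0, 0.0], [0.0, 1.0], [0.6, 0.8]])
mem_labels = np.array([0, 1, 2])
query = np.array([0.0, 1.0])

label_before = nearest_label(query, mem_features, mem_labels)
mem_features, mem_labels = remove_from_memory(mem_features, mem_labels, [1])
label_after = nearest_label(query, mem_features, mem_labels)
```

After the deletion, the forgotten entry no longer contributes to any prediction; the query falls back to the next most similar remaining entry.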

Flexible Data Selection: Memory Pruning

Memory pruning retains only useful samples and removes those with a neutral or harmful effect on model quality. The quality of each sample was estimated from its contribution to decision-making; low-quality samples were then either removed from the memory (hard pruning) or given a reduced weight (soft pruning). Both hard and soft pruning improved ImageNet validation accuracy.
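The sketch below shows one possible way to implement both variants. The reliability score used here (the fraction of times a memory sample's label agrees with the true label of a held-out query that retrieved it) is an assumption made for illustration, as are the function names, the 0.5 threshold, and the toy data; the summary above does not specify the exact quality estimate.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)

def estimate_reliability(mem_features, mem_labels, val_features, val_labels, k=2):
    """Fraction of retrievals in which a memory sample agreed with the query's label."""
    sims = val_features @ mem_features.T
    nn_idx = np.argsort(-sims, axis=1)[:, :k]
    retrieved = np.zeros(len(mem_labels))
    agreed = np.zeros(len(mem_labels))
    for neighbors, true_label in zip(nn_idx, val_labels):
        for j in neighbors:
            retrieved[j] += 1
            agreed[j] += mem_labels[j] == true_label
    # Samples that were never retrieved keep a neutral reliability of 1.0.
    return np.where(retrieved > 0, agreed / np.maximum(retrieved, 1), 1.0)

def soft_vote(neighbor_idx, mem_labels, weights, num_classes):
    """Soft pruning: each neighbor votes with its reliability as weight."""
    scores = np.zeros(num_classes)
    for j in neighbor_idx:
        scores[mem_labels[j]] += weights[j]
    return int(scores.argmax())

# Entry 1 looks like class 0 but carries label 1 (a harmful sample).
mem_features = l2_normalize(np.array(
    [[1.0, 0.0], [0.95, 0.05], [0.0, 1.0], [0.05, 0.95]]))
mem_labels = np.array([0, 1, 1, 1])
val_features = l2_normalize(np.array([[1.0, 0.02], [0.02, 1.0]]))
val_labels = np.array([0, 1])

reliability = estimate_reliability(mem_features, mem_labels, val_features, val_labels)

# Hard pruning: drop samples below the (assumed) threshold.
keep = reliability >= 0.5
pruned_features, pruned_labels = mem_features[keep], mem_labels[keep]

# Soft pruning: keep everything, but down-weight unreliable neighbors.
soft_prediction = soft_vote([0, 1], mem_labels, reliability, num_classes=2)
```

Hard pruning shrinks the memory, whereas soft pruning keeps every sample available and only changes how much each one counts in the vote.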

Flexibly Increasing Dataset Granularity

A visual memory model can refine its visual understanding as more information becomes available. This was tested using the iNaturalist21 dataset, where adding more samples of a newly discovered species improved accuracy across all levels of the taxonomic hierarchy.

Interpretable & Attributable Decision-Making

A visual memory system offers a natural way to understand model predictions by attributing them to training data samples. This was demonstrated by visualizing misclassified examples from the ImageNet-A dataset, showing that many errors appear sensible given the data, raising questions about label quality rather than model quality.

Discussion

Summary

The static nature of traditional neural networks limits their potential in real-world settings. Incorporating a visual memory enables a range of flexible capabilities, including lifelong learning, machine unlearning, memory pruning, and interpretable decision-making. The proposed visual memory system, which combines image similarity from a pre-trained encoder with fast nearest neighbor search, provides these capabilities without additional training, and memory pruning further improved ImageNet validation accuracy.

Limitations and Future Work

The current approach focuses on image classification. Extending this approach to other visual tasks like object detection, image segmentation, and instance recognition could be beneficial. Additionally, the reliance on a fixed, pre-trained embedding model may require updates to handle strong distribution shifts. Exploring the potential of smaller models with larger memory databases could also reduce computational footprints.

Outlook

As deep learning becomes more widely deployed, its limitations become more apparent. Incorporating an explicit visual memory offers a promising solution for real-world tasks where flexibility is key. The demonstrated capabilities of the visual memory system highlight its potential to inspire further research and discussions on knowledge representation in deep vision models.

In conclusion, the integration of visual memory with deep learning models presents a compelling alternative to static knowledge representation, enabling flexible and interpretable decision-making in dynamic real-world environments.