Authors:

Qiushuo Cheng, Catherine Morgan, Arindam Sikdar, Alessandro Masullo, Alan Whone, Majid Mirmehdi

Paper:

https://arxiv.org/abs/2408.08182

Introduction

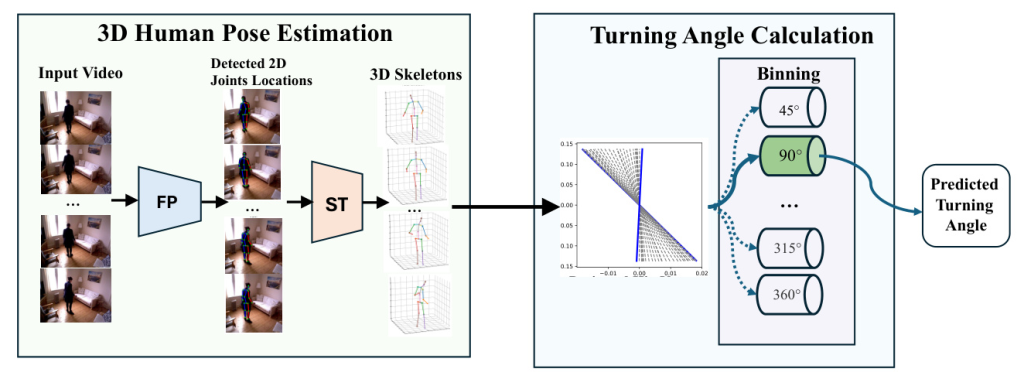

Parkinson’s disease (PD) is a progressive neurodegenerative disorder characterized by symptoms such as slowness of movement and gait dysfunction. These symptoms fluctuate throughout the day but progress slowly over years. Current treatments focus on symptom relief; no available therapy modifies the course of the disease. The development of disease-modifying treatments (DMTs) is hindered by the lack of sensitive, frequent, and objective biomarkers for measuring PD progression. The gold-standard clinical rating scale, MDS-UPDRS, relies on subjective questionnaires and clinician ratings of scripted activities, which are limited to clinical settings and short durations.

This paper presents a deep learning-based approach that automatically quantifies turning angles by extracting 3D skeletons from videos and calculating the rotation of the hip and knee joints. The study applies state-of-the-art human pose estimation models, FastPose and Strided Transformer, to turning video clips of PD patients and healthy controls in real-world settings.

Literature Review

Turning Angle Estimation

Objective quantitative turning parameters for human motion analysis have been explored using inertial sensors and floor pressure sensors. Inertial sensors, consisting of gyroscopes and accelerometers, have been validated against gold-standard motion capture systems or human raters, but only in laboratory environments. Wearable sensors face issues of acceptability, usability, power consumption, and memory storage, which hinder their use in free-living environments. Video-based markerless approaches offer a passive and less obtrusive alternative but face challenges in accuracy and reproducibility outside laboratory settings.

Gait Analysis for Parkinson’s Disease

Gait analysis is crucial in clinical applications and is closely studied in PD. Most state-of-the-art works analyze patient videos using deep learning models and compare outcomes against clinician annotations. However, these studies are confined within the limitations of traditional clinical rating scales, which cannot sensitively detect disease progression. Accurate measurement of absolute gait parameters like turning angle in real-world settings is essential for building sensitive markers to track changes in gait over time.

Datasets

Turn-REMAP

Turn-REMAP is a subset of the REMAP dataset, comprising turning actions recorded in a home environment. It covers 12 pairs of PD patients and healthy controls, and clinicians annotated each video with the turning angle and duration. The dataset contains 1386 turning video clips, with angles quantized into 45° bins.
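To compare a continuous angle estimate with 45° bin labels, the estimate has to be snapped to a bin. The following is a minimal sketch, assuming bins are centred on multiples of 45° and that ties round up. The function name `quantize_angle` and the binning rule are illustrative assumptions, not the dataset's documented procedure.

```python
import math


def quantize_angle(angle_deg: float, bin_width: float = 45.0) -> float:
    """Snap a turning-angle magnitude to the nearest multiple of bin_width.

    Assumption (not from the dataset documentation): bins are centred on
    multiples of bin_width and ties round up.
    """
    magnitude = abs(angle_deg)  # direction of the turn is ignored here
    # floor(x + 0.5) avoids the round-half-to-even behaviour of round().
    return math.floor(magnitude / bin_width + 0.5) * bin_width
```

Under this rule an estimate of 100° falls in the 90° bin and an estimate of 170° falls in the 180° bin.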

Turn-H3.6M

Turn-H3.6M is a curated subset of the Human3.6M benchmark, consisting of turning actions performed by professional actors in a lab environment. The dataset includes 619 turning video clips with 3D ground truth data, allowing for the calculation of actual turning angles and speeds.

Methodology

3D Human Joints Estimation

The proposed framework consists of two stages: 3D human joint estimation and turning angle calculation. In the first stage, FastPose detects 2D joint locations in each video frame, mapping the input frames to per-frame 2D keypoint coordinates. The Strided Transformer then lifts these 2D keypoints into 3D space.
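The data flow of this stage can be sketched as a composition of two callables. This is only an outline under stated assumptions: `detect_2d` and `lift_to_3d` are hypothetical stand-ins for a 2D keypoint detector and a 2D-to-3D lifting model, and they do not reflect the actual FastPose or Strided Transformer interfaces.

```python
from typing import Callable, List, Sequence, Tuple

Keypoints2D = List[Tuple[float, float]]
Keypoints3D = List[Tuple[float, float, float]]


def estimate_3d_joints(
    frames: Sequence[object],
    detect_2d: Callable[[object], Keypoints2D],  # hypothetical detector wrapper
    lift_to_3d: Callable[[List[Keypoints2D]], List[Keypoints3D]],  # hypothetical lifter wrapper
) -> List[Keypoints3D]:
    """Return one list of 3D joints per input frame."""
    # Stage 1: run the 2D detector independently on every frame.
    keypoints_2d = [detect_2d(frame) for frame in frames]
    # Stage 2: pass the whole 2D sequence to the lifter in one call,
    # so a sequence model can use temporal context across frames.
    return lift_to_3d(keypoints_2d)
```

Keeping the two stages behind separate callables makes it easy to swap the 2D detector without touching the lifting step.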

Turning Angle Estimation

The turning angle is calculated from the orientation of the hip and knee joints on the frontal plane. For each joint pair, the angle between its vectors in consecutive frames is summed over the clip, and the per-pair totals are averaged to give the turning angle. Angular speed is the turning angle divided by the duration of the turning motion.
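One way to read this calculation is sketched below. It is a minimal illustration, not the authors' implementation, and it makes several assumptions: each frame is a dictionary of 3D joint positions with hypothetical key names, the left-to-right joint vector is projected to 2D by dropping one coordinate axis (`drop_axis`, chosen arbitrarily here), and the per-pair totals are averaged across joint pairs.

```python
import math
from typing import Dict, Sequence, Tuple

Point3D = Tuple[float, float, float]
Frame = Dict[str, Point3D]  # joint name -> (x, y, z); key names are assumptions

DEFAULT_PAIRS = (("left_hip", "right_hip"), ("left_knee", "right_knee"))


def _projected(frame: Frame, left: str, right: str, drop_axis: int) -> Tuple[float, float]:
    """Left-to-right joint vector with one coordinate axis dropped."""
    keep = [i for i in range(3) if i != drop_axis]
    l, r = frame[left], frame[right]
    return (r[keep[0]] - l[keep[0]], r[keep[1]] - l[keep[1]])


def _signed_angle(u: Tuple[float, float], v: Tuple[float, float]) -> float:
    """Signed angle in degrees from 2D vector u to 2D vector v."""
    cross = u[0] * v[1] - u[1] * v[0]
    dot = u[0] * v[0] + u[1] * v[1]
    return math.degrees(math.atan2(cross, dot))


def turning_angle(frames: Sequence[Frame], pairs=DEFAULT_PAIRS, drop_axis: int = 1) -> float:
    """Sum frame-to-frame rotation per joint pair, then average over pairs."""
    totals = []
    for left, right in pairs:
        vecs = [_projected(f, left, right, drop_axis) for f in frames]
        totals.append(sum(_signed_angle(a, b) for a, b in zip(vecs, vecs[1:])))
    return abs(sum(totals) / len(totals))


def angular_speed(angle_deg: float, duration_s: float) -> float:
    """Turning angle divided by the duration of the turn, in degrees per second."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return angle_deg / duration_s
```

Summing signed frame-to-frame angles, rather than comparing only the first and last frame, lets the total exceed 180° for larger turns. Other joint pairs, such as the shoulders, can be passed through `pairs`.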

Experiments

Implementation and Evaluation

The experiments are implemented in PyTorch and run on a single NVIDIA 4060Ti GPU and a 12-core AMD Ryzen 5 5500 CPU. The FastPose model is pretrained on the MSCOCO dataset, and the Strided Transformer is pretrained on Human3.6M. The evaluation metrics are accuracy, Mean Absolute Error (MAE), and weighted precision (WPrec).
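The three metrics can be sketched in plain Python as follows. This is an illustration under assumptions: labels and predictions are taken to be binned angles in degrees, and weighted precision is taken to mean per-class precision averaged with weights proportional to each class's number of true samples, which is the common definition but is not confirmed by the text above.

```python
from collections import Counter
from typing import Sequence


def accuracy(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Fraction of clips whose predicted bin equals the annotated bin."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average absolute difference in degrees."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_precision(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Per-class precision, weighted by each class's share of true labels (assumed definition)."""
    support = Counter(y_true)    # number of true samples per class
    predicted = Counter(y_pred)  # number of predictions per class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    total = len(y_true)
    score = 0.0
    for label, n in support.items():
        # A class that is never predicted contributes a precision of 0.
        precision = correct[label] / predicted[label] if predicted[label] else 0.0
        score += precision * n / total
    return score
```

For example, with annotations `[45, 90, 90, 180]` and predictions `[45, 90, 45, 180]`, accuracy is 0.75, MAE is 11.25° and weighted precision is 0.875.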

Results on Turn-REMAP

The proposed method achieves an accuracy of 41.6%, an MAE of 34.7°, and a WPrec of 68.3% on Turn-REMAP. The performance varies across different scenarios, locations, and subject conditions.

Results on Turn-H3.6M

On Turn-H3.6M, the method achieves an accuracy of 73.5%, an MAE of 18.5°, and a WPrec of 86.2%. The performance is consistent across different turning angle bins, subjects, and actions.

Ablations

Effect of Different 2D Keypoints

The performance of turning angle estimation is evaluated using different 2D keypoint detectors: SimplePose, HRNet, and FastPose. FastPose offers the highest accuracy and significantly reduces computational costs.

Effect of Using Different Joints

The turning angle is calculated using different combinations of knee, shoulder, and hip joints. The combination of hip and knee joints yields the best accuracy, while averaging all three sets of joints gives the lowest MAE.

Discussion

The study establishes a baseline for video-based analysis of turning movements in free-living environments. The performance on Turn-REMAP is not yet robust enough for clinical diagnosis, but it provides valuable insights for future research. The challenges in generalizing pretrained models to real-world datasets highlight the need for domain adaptation strategies and cross-dataset validation.

Conclusion and Future Work

This study presents the first effort to detect fine-grained turning angles in gait using video data in a home setting. The proposed framework is applied to the Turn-REMAP and Turn-H3.6M datasets, establishing a baseline for future research. Future work includes extending the methods to untrimmed videos, inferring additional turning metrics, and exploring alternative models for more accurate turning angle estimation.