Authors:

Jun Yan, Pengyu Wang, Danni Wang, Weiquan Huang, Daniel Watzenig, Huilin Yin

Paper:

https://arxiv.org/abs/2408.09839

Introduction

Background

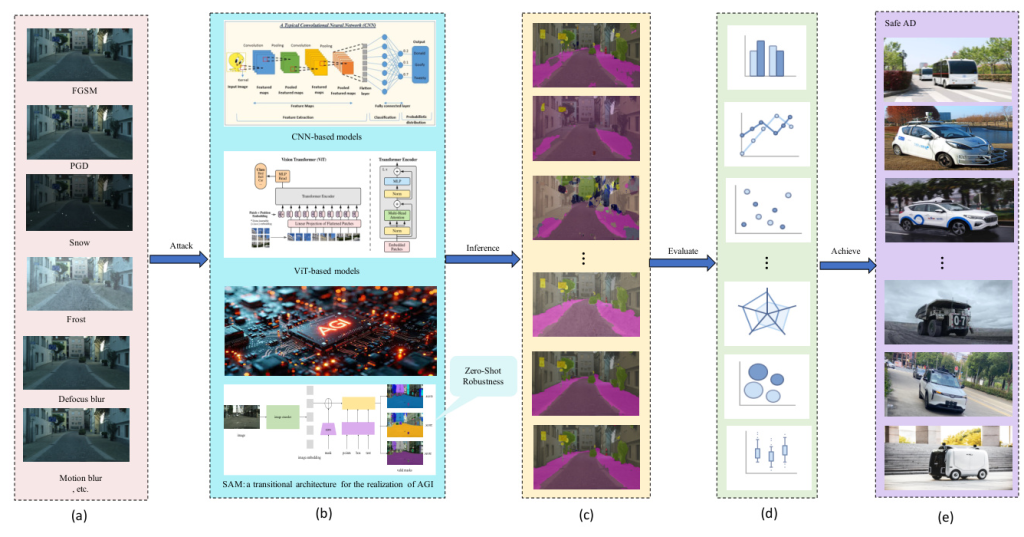

Semantic segmentation is a critical perception task in autonomous driving. It underpins environmental perception, path planning, decision-making, obstacle avoidance, collision prevention, precise localization, and human-computer interaction. Its performance is crucial for ensuring the Safety of the Intended Functionality (SOTIF) in autonomous driving systems. Over the past decade, deep learning has significantly advanced semantic segmentation models, moving from convolutional neural networks (CNNs) to vision transformers (ViTs) and now to foundation models such as the Segment Anything Model (SAM).

Problem Statement

Despite these advances, semantic segmentation models remain vulnerable to adversarial examples: tiny perturbations that can deceive neural networks into making incorrect predictions. This vulnerability poses a significant security risk in autonomous driving. The study investigates the zero-shot adversarial robustness of SAM in the context of autonomous driving, covering both black-box corruptions and white-box adversarial attacks.

Related Work

Deep-learning-based Semantic Segmentation Models

The development of deep-learning-based semantic segmentation models began with CNNs, including Fully Convolutional Networks (FCN), SegNet, Pyramid Scene Parsing Network (PSPNet), and DeepLabV3+. These models have been widely used but are sensitive to adversarial attacks.

ViTs, such as SegFormer and OneFormer, have introduced a new paradigm by capturing global relational and contextual information through self-attention mechanisms. These models have shown improved performance and robustness compared to CNNs.

Adversarial Examples in Vision Tasks

Adversarial examples are crafted perturbations that can cause neural networks to make incorrect predictions. Various attack methods, including Fast Gradient Sign Method (FGSM), Projected Gradient Descent (PGD), and Carlini and Wagner attacks (C&W), have been developed to test the robustness of models. Black-box attacks, such as Dense Adversary Generation (DAG) and procedural noise functions, simulate real-world conditions like adverse weather and sensor noise.
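
To make the idea of a gradient-based attack concrete, here is a minimal sketch of one-step FGSM. It assumes a toy logistic classifier rather than a segmentation network; the weights, input, and `eps` value are hypothetical and are not taken from the paper.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_grad(x, y, w, b):
    """Binary cross-entropy of a toy logistic model and its gradient w.r.t. the input x."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad = [(p - y) * wi for wi in w]  # d(loss)/dx for a linear logit
    return loss, grad

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(x, y, w, b, eps):
    """One-step FGSM: shift each input value by eps in the direction that raises the loss."""
    _, grad = loss_and_grad(x, y, w, b)
    x_adv = [xi + eps * sign(gi) for xi, gi in zip(x, grad)]
    return [min(1.0, max(0.0, v)) for v in x_adv]  # keep values in the pixel range [0, 1]

# Hypothetical toy model and input (assumptions for illustration only).
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1
x_adv = fgsm(x, y, w, b, eps=0.1)
clean_loss, _ = loss_and_grad(x, y, w, b)
adv_loss, _ = loss_and_grad(x_adv, y, w, b)
```

The perturbation never exceeds `eps` per input value, yet the loss on the perturbed input is higher than on the clean one. For a segmentation model the same step would be applied to every pixel, using the gradient of the per-pixel loss.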

Research Methodology

SAM Based on the Open-Set Category Encoder

SAM is a powerful foundation model capable of segmenting arbitrary objects. It leverages the SA-1B dataset, the largest segmentation dataset to date, and the Contrastive Language-Image Pre-Training (CLIP) model, which maps images and text into a common feature space. The combination of SAM and CLIP enables zero-shot adversarial robustness by integrating visual and language information.
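
The shared feature space can be illustrated with a small sketch: a segmented region is labeled with the category whose text embedding is most similar to the region's image embedding. The three-dimensional vectors and the `zero_shot_label` helper below are hypothetical stand-ins; a real CLIP encoder produces much longer vectors, and this summary does not specify exactly how the paper combines the two models.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def zero_shot_label(region_embedding, text_embeddings):
    """Return the class name whose text embedding is closest to the region embedding."""
    return max(text_embeddings, key=lambda name: cosine(region_embedding, text_embeddings[name]))

# Hypothetical embeddings, made up for illustration.
text_embeddings = {
    "road": [0.9, 0.1, 0.0],
    "car": [0.1, 0.9, 0.1],
    "person": [0.0, 0.2, 0.9],
}
mask_embedding = [0.2, 0.8, 0.1]  # stand-in for the image embedding of one segmented region
label = zero_shot_label(mask_embedding, text_embeddings)
```

Because the label set is just a dictionary of text embeddings, new categories can be added without retraining, which is what makes the setup zero-shot.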

Research Questions

- What kind of model architecture can guarantee the adversarial robustness of semantic segmentation models?

- Can the combination of a ViT-based foundation model and CLIP achieve better adversarial robustness in autonomous driving?

Experimental Design

Dataset

The Cityscapes dataset, containing high-resolution images from urban environments, is used for evaluating the models. The dataset includes categories such as road, sidewalk, building, vehicle, and pedestrian.

Evaluation Metrics

The performance of semantic segmentation models is evaluated using metrics like recall, precision, F1-score, and intersection-over-union (IoU). The mean IoU (mIoU) is calculated as the average IoU across all categories.
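
For one class, IoU is the number of pixels that are correctly assigned to that class divided by the number of pixels assigned to it in either the prediction or the ground truth, that is TP / (TP + FP + FN). A minimal sketch of the computation on flat label lists follows; the six-pixel example is hypothetical, and skipping classes that appear in neither list is one common convention, not necessarily the paper's.

```python
def per_class_iou(pred, target, num_classes):
    """IoU per class from flat lists of predicted and ground-truth label ids."""
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        ious.append(inter / union if union else None)  # None: class absent from both lists
    return ious

def mean_iou(pred, target, num_classes):
    """Average IoU over the classes that occur in the prediction or the ground truth."""
    valid = [v for v in per_class_iou(pred, target, num_classes) if v is not None]
    return sum(valid) / len(valid)

# Hypothetical 6-pixel example with 3 classes.
target = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
ious = per_class_iou(pred, target, 3)   # roughly [1/3, 2/3, 1/2]
miou = mean_iou(pred, target, 3)        # roughly 0.5
```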

Models

The study evaluates various CNN-based models (e.g., FCNs, DeepLabV3+, SegNet, PSPNet) and ViT-based models (e.g., SegFormer, OneFormer, OCRNet, ISANet). SAM and its variants (vanilla SAM and MobileSAM) are also evaluated, with SegFormer and OneFormer as backbones.

Adversarial Attack Methods

Both white-box (e.g., FGSM, PGD) and black-box (e.g., DAG, image corruptions) attack methods are used to test the robustness of the models. Black-box attacks simulate real-world conditions like adverse weather and sensor noise.
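
An image corruption needs no access to the model, only to the input. The sketch below shows one simple example, additive Gaussian noise as a stand-in for sensor noise, applied at several severity levels. The `sigma` values and the tiny grayscale image are illustrative assumptions, not the paper's settings.

```python
import random

def gaussian_noise(image, sigma, seed=0):
    """Add zero-mean Gaussian noise to a grayscale image (rows of floats in [0, 1]) and clip."""
    rng = random.Random(seed)  # fixed seed so the corruption is reproducible
    return [[min(1.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row] for row in image]

# Hypothetical severity levels and input image.
severities = [0.04, 0.08, 0.12]
image = [[0.2, 0.5, 0.8], [0.1, 0.9, 0.4]]
corrupted = [gaussian_noise(image, s) for s in severities]
```

A robustness study would then run the segmentation model on each corrupted copy and report how the mIoU changes with severity.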

Results and Analysis

Robustness Study of Black-box Corruptions

The SAM model demonstrates significant robustness under black-box corruptions, even without additional training on the Cityscapes dataset. The performance of SAM exceeds that of most CNN-based models and some ViT-based models.

Robustness under the FGSM Attacks

SAM models maintain zero-shot adversarial robustness under FGSM attacks, outperforming many supervised learning models. The robustness of SAM-OneFormer and MobileSAM-OneFormer even improves with larger perturbation budgets.

Robustness under the PGD Attacks

SAM models show considerable robustness under PGD attacks, with SAM-OneFormer maintaining higher mIoU values compared to SAM-SegFormer across all attack iterations.
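
PGD repeats a small signed-gradient step and, after each step, projects the result back into the allowed perturbation range, which is why its strength depends on the number of iterations. A minimal sketch on a toy logistic classifier follows. The model, `eps`, `alpha`, and step count are hypothetical, and the attack starts from the clean input rather than from a random point, which is a simplification.

```python
import math

def input_gradient(x, y, w, b):
    """Gradient of the binary cross-entropy w.r.t. x for a toy logistic model."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    p = 1.0 / (1.0 + math.exp(-logit))
    return [(p - y) * wi for wi in w]

def pgd(x, y, w, b, eps, alpha, steps):
    """Iterated signed-gradient ascent, projected into the L-inf ball of radius eps around x."""
    x_adv = list(x)
    for _ in range(steps):
        grad = input_gradient(x_adv, y, w, b)
        stepped = [xi + alpha * ((g > 0) - (g < 0)) for xi, g in zip(x_adv, grad)]
        # Project onto the eps-ball around the clean input, then onto the pixel range [0, 1].
        x_adv = [min(1.0, max(0.0, min(x0 + eps, max(x0 - eps, v))))
                 for v, x0 in zip(stepped, x)]
    return x_adv

# Hypothetical toy model and input (assumptions for illustration only).
w, b = [2.0, -1.0, 0.5], 0.1
x, y = [0.6, 0.2, 0.4], 1
x_adv = pgd(x, y, w, b, eps=0.1, alpha=0.03, steps=10)
```

With a step size `alpha` smaller than `eps`, several iterations are needed before the perturbation reaches the boundary of the budget, so evaluating mIoU after each iteration shows how quickly a model degrades.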

Discussion

The experimental results highlight the zero-shot adversarial robustness of SAM models in autonomous driving. Robustness under black-box corruptions matters for SOTIF, while robustness under white-box attacks matters for security in the Internet of Vehicles. The study also discusses the trade-off between robustness and computational cost, with MobileSAM being more suitable for edge devices.

Overall Conclusion

This study explores the zero-shot adversarial robustness of SAM architectures in semantic segmentation for autonomous driving. The findings reveal that SAM models exhibit robustness under both black-box and white-box attacks, providing valuable insights into the safety and security of autonomous driving systems. Future research will focus on expanding the test scale, integrating test-time defense methods, and exploring the deployment of SAM models in real-world applications to build trustworthy AGI systems.

Acknowledgments

This work was supported by the Shanghai International Science and Technology Cooperation Project and the Special Funds of Tongji University for “Sino-German Cooperation 2.0 Strategy.” The authors thank TÜV SÜD and colleagues from the Sino-German Center of Intelligent Systems for their support.

Code:

https://github.com/momo1986/robust_sam_iv