Authors:

Qiao Li, Cong Wu, Jing Chen, Zijun Zhang, Kun He, Ruiying Du, Xinxin Wang, Qingchuang Zhao, Yang Liu

Paper:

https://arxiv.org/abs/2408.10647

Introduction

Deep neural networks (DNNs) have become integral to critical applications such as identity authentication and autonomous driving. However, they are vulnerable to adversarial attacks, in which minor perturbations to the input cause significant prediction errors, and this poses a substantial risk. Traditional defense mechanisms often require detailed model information, which raises privacy concerns. Existing black-box defense methods do not require such information, but they fail to provide a universal defense against diverse adversarial attacks. This study introduces DUCD, a universal black-box defense method that preserves data privacy while offering robust defense against a wide range of adversarial attacks.

Related Work

Empirical Defenses

Empirical defenses, such as adversarial detection and adversarial training, have been effective in enhancing DNN robustness. However, they remain susceptible to adaptive attacks and lack provable robustness guarantees.

Certified Defenses

Certified defenses offer provable robustness by guaranteeing that no perturbation within a certified ℓp radius can change the model’s prediction. Most certified defenses require access to white-box models, which is not always feasible due to privacy and security concerns.
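The guarantee described above can be written compactly. The notation here is introduced only for illustration and is not taken from the paper: f_c(x) is the classifier's score for class c on input x, δ is a perturbation, and R is the certified radius.

```latex
\forall\, \delta \ \text{with}\ \|\delta\|_p \le R:\quad
\arg\max_{c} f_c(x + \delta) \;=\; \arg\max_{c} f_c(x)
```

In words: every input inside the ℓp ball of radius R around x receives the same predicted class as x itself.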

Black-box Certified Defenses

Recent studies have focused on providing provable robustness in black-box settings. However, these methods often assume specific model structures or are limited to certain ℓp-norm constraints, making them less practical.

Research Methodology

Overview of DUCD

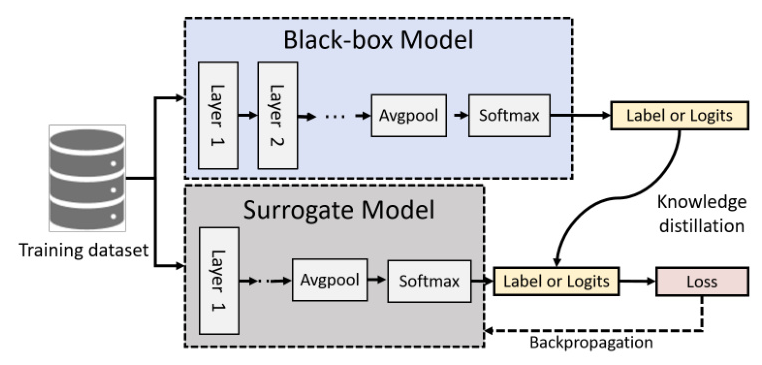

DUCD (Distillation-based Universal Black-box model Defense) employs a query-based approach to generate a surrogate model of the target black-box model. This surrogate model is then enhanced using randomized smoothing and optimized noise selection to provide a robust defense against various adversarial attacks.

Distillation-based Surrogate Model Generation

The surrogate model is generated by querying the target model and using its responses to train a white-box model. This process, known as knowledge distillation, trains the surrogate to mimic the target model’s predictions as closely as possible.
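A minimal sketch of the distillation objective may help make this concrete. It assumes the black-box model returns class probabilities and that the surrogate is trained to minimize the KL divergence to them. The summary does not state which response type or loss DUCD uses, so both are assumptions, and `query_target` is a hypothetical stand-in for the black-box API.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_probs, student_logits, temperature=1.0):
    """KL(teacher || student) for a single sample."""
    student_probs = softmax(student_logits, temperature)
    return sum(
        p * math.log(p / q)
        for p, q in zip(teacher_probs, student_probs)
        if p > 0.0  # terms with p == 0 contribute nothing
    )


def query_target(x):
    """Hypothetical black-box query: returns class probabilities for x."""
    return [0.7, 0.2, 0.1]


teacher = query_target(None)
# A student whose logits reproduce the teacher exactly has (near) zero loss.
good_student = [math.log(p) for p in teacher]
# A student that confidently predicts the wrong class has a large loss.
bad_student = [0.0, 0.0, 3.0]

print(distillation_loss(teacher, good_student))
print(distillation_loss(teacher, bad_student))
```

In a real pipeline the loss would be averaged over many queried samples and minimized with a gradient-based optimizer. Only the per-sample objective is shown here.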

Universal Black-box Defense Using Surrogate Models

The surrogate model is then hardened with randomized smoothing, a technique that adds random noise to input samples and predicts the class the model returns most often over the noisy copies. The noise parameters are optimized to maximize the certified radius, providing a robust defense against a wide range of adversarial attacks.
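The following sketch shows the standard Gaussian, ℓ2 form of randomized smoothing, where the certified radius is σ·Φ⁻¹(p_lower) and p_lower is a lower confidence bound on the top-class probability under noise. It is not the paper's exact procedure. The one-dimensional `base_classifier` is a toy stand-in for the surrogate, and a Hoeffding bound is swapped in for the tighter Clopper-Pearson bound usually used, to keep the example dependency-free.

```python
import math
import random
from statistics import NormalDist


def base_classifier(x):
    """Toy 1-D stand-in for the surrogate model: class 1 if x > 0, else 0."""
    return 1 if x > 0 else 0


def sample_counts(x, sigma, n, rng):
    """Classify n Gaussian-noised copies of x and count votes per class."""
    counts = {}
    for _ in range(n):
        label = base_classifier(x + rng.gauss(0.0, sigma))
        counts[label] = counts.get(label, 0) + 1
    return counts


def certify(x, sigma, n0=100, n=2000, alpha=0.001, seed=0):
    """Return (label, l2_radius), or (None, 0.0) to abstain."""
    rng = random.Random(seed)
    # Step 1: guess the top class from a small batch of noisy samples.
    guess_counts = sample_counts(x, sigma, n0, rng)
    guess = max(guess_counts, key=guess_counts.get)
    # Step 2: estimate its probability on fresh samples.
    est_counts = sample_counts(x, sigma, n, rng)
    p_hat = est_counts.get(guess, 0) / n
    # One-sided Hoeffding lower bound, valid with probability >= 1 - alpha.
    p_lower = p_hat - math.sqrt(math.log(1.0 / alpha) / (2 * n))
    if p_lower <= 0.5:
        return None, 0.0  # cannot certify: abstain
    return guess, sigma * NormalDist().inv_cdf(p_lower)


print(certify(1.0, sigma=0.5))  # far from the decision boundary
print(certify(0.0, sigma=0.5))  # on the boundary: expected to abstain
```

For the toy classifier the true distance from x = 1.0 to the decision boundary is 1.0, so any certified radius must stay below that value.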

Experimental Design

Datasets

The proposed DUCD method is evaluated on three benchmark datasets: MNIST, SVHN, and CIFAR10. Additionally, CIFAR-S and CIFAR10.1 are used to simulate scenarios where the model owner is unwilling to disclose the original dataset.

Metrics

The evaluation metrics include absolute accuracy, relative accuracy, and certified accuracy. Certified accuracy is the percentage of test samples that are classified correctly and whose certified radius exceeds a specified threshold.
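As a small illustration of the certified accuracy definition, the sketch below counts samples that are both correct and certified beyond a threshold. The function name and the sample values are made up for the example, and an abstention is represented as a prediction of `None`.

```python
def certified_accuracy(predictions, labels, radii, threshold):
    """Percentage of samples that are correct AND certified beyond `threshold`."""
    if not (len(predictions) == len(labels) == len(radii)):
        raise ValueError("predictions, labels and radii must have equal length")
    hits = sum(
        1
        for pred, label, radius in zip(predictions, labels, radii)
        if pred == label and radius > threshold
    )
    return 100.0 * hits / len(labels)


# Illustrative values only; None marks an abstained prediction.
predictions = [1, 0, 2, None]
labels = [1, 0, 1, 2]
radii = [0.8, 0.3, 0.9, 0.0]

for threshold in (0.25, 0.5, 1.0):
    print(threshold, certified_accuracy(predictions, labels, radii, threshold))
```

Sweeping the threshold this way produces the certified accuracy curve commonly reported for smoothing-based defenses. The third sample never counts, despite its large radius, because its prediction is wrong.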

Experimental Setup

All experiments are conducted on an Ubuntu 20.04 system with an Intel Xeon CPU and an NVIDIA GeForce RTX 4090 GPU. The experiments are implemented in Python with PyTorch and the adversarial-robustness-toolbox.

Results and Analysis

Surrogate Model Evaluation

The surrogate models generated by DUCD demonstrate high accuracy, closely matching the target models’ performance across various datasets. This indicates the effectiveness of the distillation process in creating accurate surrogate models.

Universality Evaluation

The noise selection process optimizes the noise parameters to maximize the certified radius. The results show that Gaussian noise generally yields the largest certified radii across different ℓp norms.
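One simple way to picture noise selection is a grid search that keeps the noise level with the largest mean certified radius. The sketch below does this for Gaussian noise only, using the ℓ2 radius σ·Φ⁻¹(p_lower). The paper's actual optimization, which also compares noise distributions, is not described in this summary, so the search strategy is an assumption, and the probability bounds are hypothetical inputs rather than measured results.

```python
from statistics import NormalDist


def mean_certified_radius(sigma, p_lower_bounds):
    """Mean Gaussian l2 radius; samples with p_lower <= 0.5 get radius 0."""
    inv_cdf = NormalDist().inv_cdf
    radii = [sigma * inv_cdf(p) if p > 0.5 else 0.0 for p in p_lower_bounds]
    return sum(radii) / len(radii)


def select_sigma(candidates):
    """Pick the sigma whose per-sample bounds give the largest mean radius."""
    return max(candidates, key=lambda s: mean_certified_radius(s, candidates[s]))


# Hypothetical lower bounds on the top-class probability for three samples,
# one list per candidate noise level. More noise lowers the bounds.
hypothetical_bounds = {
    0.25: [0.99, 0.98, 0.97],
    0.50: [0.96, 0.92, 0.70],
    1.00: [0.70, 0.55, 0.45],
}

best_sigma = select_sigma(hypothetical_bounds)
print(best_sigma)
```

The example reflects the usual trade-off: a larger σ scales the radius up but makes the smoothed prediction less confident, so the best value tends to sit between the extremes.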

Defense Performance

DUCD significantly outperforms existing black-box defense methods in both certified accuracy and robustness score. It also demonstrates competitive performance compared to white-box defense methods.

Adaptive Attacks

DUCD maintains a low attack success rate (ASR) under various white-box and black-box adversarial attacks, demonstrating its robustness. However, the defense performance against ℓ∞ attacks remains suboptimal, highlighting an area for future improvement.

Purification

The purification process filters out uncertified inputs, ensuring that only certified samples are forwarded to the classifier. This enhances the robustness of the target classifier.
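The filtering step can be sketched as a gate in front of the target classifier. Everything named here is hypothetical: `toy_certify` stands in for a smoothing-based certifier, `toy_target` for the black-box model, and `min_radius` for whatever acceptance rule the paper applies.

```python
def purify_and_classify(inputs, certify, target_model, min_radius=0.0):
    """Forward only inputs certified beyond min_radius; reject the rest."""
    results = []
    for x in inputs:
        _, radius = certify(x)
        if radius > min_radius:
            results.append(target_model(x))  # certified: forward to the target
        else:
            results.append(None)  # uncertified: filtered out
    return results


def toy_target(x):
    """Hypothetical black-box classifier on a 1-D input."""
    return 1 if x > 0 else 0


def toy_certify(x):
    """Hypothetical certifier: radius is the distance to the boundary at 0."""
    return toy_target(x), abs(x)


outputs = purify_and_classify([1.0, 0.05, -0.8], toy_certify, toy_target, min_radius=0.1)
print(outputs)  # [1, None, 0]
```

The middle input sits too close to the decision boundary to be certified, so it is rejected rather than passed on.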

Privacy Evaluation

DUCD significantly reduces the success rate of membership inference attacks, demonstrating its effectiveness in preserving data privacy.

Overall Conclusion

This study presents DUCD, a universal black-box defense method that preserves data privacy while offering robust defense against a wide range of adversarial attacks. By generating a surrogate model through knowledge distillation and enhancing it with randomized smoothing, DUCD achieves high certified accuracy and robustness. However, the defense performance against ℓ∞ attacks remains an area for future exploration. Overall, DUCD represents a significant advancement in the field of adversarial defense for black-box models.