Authors:

Yisong Fu, Fei Wang, Zezhi Shao, Chengqing Yu, Yujie Li, Zhao Chen, Zhulin An, Yongjun Xu

Paper:

https://arxiv.org/abs/2408.09695

Introduction

Accurate weather forecasting is crucial for various sectors, including agriculture, transportation, energy, and economics. The proliferation of automatic weather stations has significantly enhanced modern meteorology by providing high-resolution meteorological data. However, the complex spatial-temporal patterns of global weather data pose significant challenges for accurate forecasting.

Recent advancements in deep learning (DL) have shown promise in leveraging historical data for improved weather forecasting. Transformers, in particular, have gained popularity due to their ability to capture long-term spatial-temporal correlations. However, their complex architectures result in large parameter counts and extended training times, limiting their scalability and practical application.

This study introduces LightWeather, a lightweight and efficient model for global weather forecasting. The key innovation lies in the use of absolute positional encoding, which effectively models spatial-temporal correlations without the need for attention mechanisms. This approach significantly reduces the model’s complexity while maintaining state-of-the-art performance.

Related Work

DL Methods for Station-based Weather Prediction

While radar- or reanalysis-based DL methods have achieved success, they are limited to processing gridded data and are incompatible with station-based forecasting. Spatial-temporal graph neural networks (STGNNs) have shown effectiveness in modeling weather data but are typically limited to short-term forecasting.

Transformer-based approaches have gained traction for their ability to capture long-term correlations. However, their quadratic computational complexity makes them impractical for global-scale forecasting. Efforts to enhance the efficiency of attention mechanisms, such as AirFormer and Corrformer, have resulted in limited improvements.

Studies on the Effectiveness of Transformers

The effectiveness of Transformers has been extensively studied in computer vision (CV) and natural language processing (NLP). In time series forecasting (TSF), simpler models such as LTSF-Linear have outperformed Transformer-based methods, exposing issues such as overfitting. Recent studies have questioned the necessity of attention mechanisms, suggesting that simpler architectures can achieve comparable performance.

Research Methodology

Problem Formulation

The problem involves forecasting weather data for N stations, each collecting C meteorological variables. The observed data at time t is denoted as (X_t \in \mathbb{R}^{N \times C}). The goal is to learn a function (F(\cdot)) that forecasts the future values (Y_{t:t+T_f}) from the historical observations (X_{t-T_h:t}) and the geographical coordinates (\Theta \in \mathbb{R}^{3 \times N}), where T_h and T_f are the lengths of the history and forecast windows.
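To make the input and output shapes concrete, here is a minimal NumPy sketch of how a long recording could be cut into (history, future) training pairs. The function name `make_windows`, the toy array sizes, and the stride of one step are assumptions made for illustration; the paper's actual data pipeline may differ.

```python
import numpy as np

def make_windows(series, t_h, t_f):
    """Split a (T, N, C) array into sliding (history, future) pairs.

    history[k] has shape (t_h, N, C) and future[k] has shape (t_f, N, C);
    future[k] starts right where history[k] ends.
    """
    total = series.shape[0]
    n_samples = total - t_h - t_f + 1
    if n_samples <= 0:
        raise ValueError("series is shorter than t_h + t_f")
    history = np.stack([series[k:k + t_h] for k in range(n_samples)])
    future = np.stack([series[k + t_h:k + t_h + t_f] for k in range(n_samples)])
    return history, future

# Toy data (hypothetical): 100 time steps, N = 5 stations, C = 1 variable.
series = np.arange(100 * 5 * 1, dtype=float).reshape(100, 5, 1)
history, future = make_windows(series, t_h=48, t_f=24)
print(history.shape, future.shape)  # (29, 48, 5, 1) (29, 24, 5, 1)
```

The geographical coordinates do not change over time, so they would be stored once per station rather than once per window.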

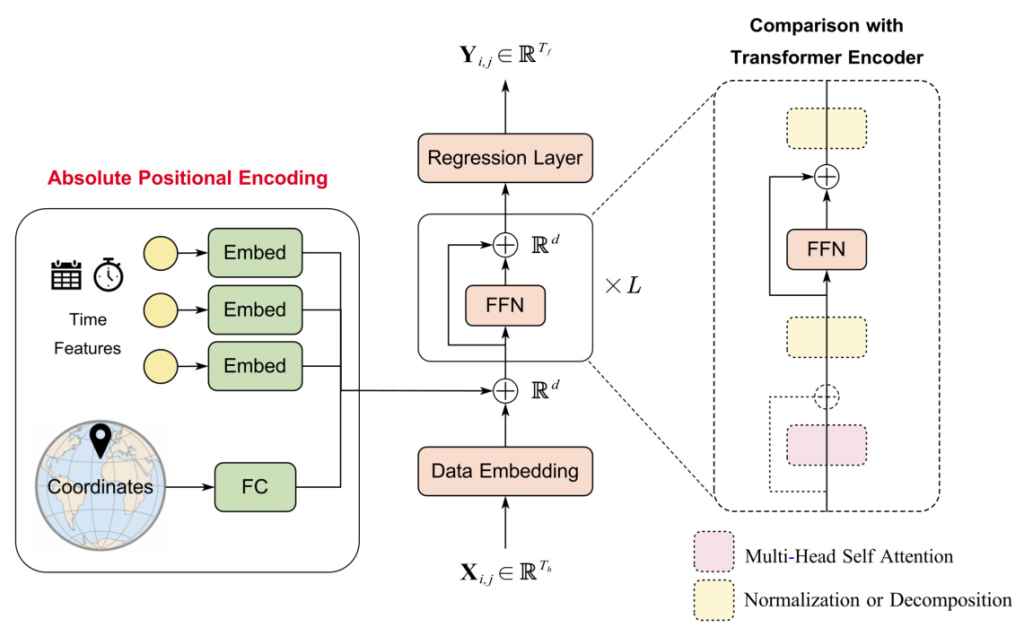

Overview of LightWeather

LightWeather consists of four components: a data embedding layer, an absolute positional encoding layer, an MLP encoder, and a regression layer. The model replaces the complex structures of Transformer-based models with a simple MLP, improving efficiency without compromising performance.

Data Embedding

The data embedding layer maps historical time series (X_{i,j}) to an embedding (E_{i,j} \in \mathbb{R}^d) in latent space using a fully connected layer.
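The embedding step can be sketched as a single matrix multiplication applied to every (station, variable) series. This is an illustrative NumPy version, not the authors' PyTorch code; the sizes, the random initialization, and the names `W_embed`, `b_embed`, and `embed` are all assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
T_h, N, C, d = 48, 5, 1, 64  # hypothetical sizes

# One fully connected layer, shared by every (station, variable) pair.
W_embed = rng.normal(scale=0.02, size=(T_h, d))
b_embed = np.zeros(d)

def embed(x_hist):
    """Map a history of shape (T_h, N, C) to embeddings of shape (N, C, d)."""
    # Move time to the last axis so each series X_{i,j} is a length-T_h vector.
    series = np.transpose(x_hist, (1, 2, 0))  # (N, C, T_h)
    return series @ W_embed + b_embed          # (N, C, d)

x_hist = rng.normal(size=(T_h, N, C))
E = embed(x_hist)
print(E.shape)  # (5, 1, 64)
```

Because the layer acts on the time axis only, its parameter count does not grow with the number of stations.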

Absolute Positional Encoding

Absolute positional encoding captures spatial-temporal correlations by injecting geographical and temporal knowledge into the embeddings. It has two parts: a spatial encoding derived from each station's geographical coordinates, and a temporal encoding derived from real-world time features.
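One way to picture the two encodings is shown below. The sketch assumes that the spatial encoding is a linear map of the three coordinates and that the temporal encoding sums lookup-table rows for hour of day, day of month, and month of year. Those specific choices, along with every name and size in the code, are assumptions for illustration and are not confirmed by this summary.

```python
import numpy as np
from datetime import datetime

rng = np.random.default_rng(0)
N, C, d = 5, 1, 64  # hypothetical sizes

# Spatial part: a linear map from 3 coordinates per station to d dimensions.
W_space = rng.normal(scale=0.02, size=(3, d))
# Temporal part: one lookup table per calendar feature (assumed feature set).
hour_table = rng.normal(scale=0.02, size=(24, d))
day_table = rng.normal(scale=0.02, size=(31, d))
month_table = rng.normal(scale=0.02, size=(12, d))

def spatial_encoding(theta):
    """theta has shape (3, N); returns one d-dimensional vector per station."""
    return theta.T @ W_space  # (N, d)

def temporal_encoding(ts):
    """Sum the table rows selected by a timestamp; returns shape (d,)."""
    return hour_table[ts.hour] + day_table[ts.day - 1] + month_table[ts.month - 1]

def add_positional_encoding(E, theta, ts):
    """E has shape (N, C, d); both encodings broadcast over the other axes."""
    S = spatial_encoding(theta)[:, None, :]  # (N, 1, d)
    T = temporal_encoding(ts)                # (d,)
    return E + S + T

theta = rng.uniform(-1.0, 1.0, size=(3, N))  # placeholder, pre-normalized coordinates
E = rng.normal(size=(N, C, d))
Z = add_positional_encoding(E, theta, datetime(2020, 1, 15, 6))
print(Z.shape)  # (5, 1, 64)
```

The encoding is "absolute" in the sense that a station's vector depends only on its own coordinates and the timestamp, not on pairwise comparisons with other stations, so no N-by-N attention matrix is needed.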

Encoder

The encoder is a multi-layer perceptron (MLP) that learns representations from the embedded data. Each MLP layer includes residual connections to enhance learning.
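A residual MLP encoder can be sketched in a few lines. The two-linear-layer block, the ReLU activation, and the weight names are assumptions; the summary only states that the encoder is an MLP whose layers use residual connections.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n_layers = 64, 2  # hypothetical hidden size; two layers as in the setup

def relu(x):
    return np.maximum(x, 0.0)

# Each layer holds two linear maps (assumed block layout).
layers = [
    {"W1": rng.normal(scale=0.02, size=(d, d)), "b1": np.zeros(d),
     "W2": rng.normal(scale=0.02, size=(d, d)), "b2": np.zeros(d)}
    for _ in range(n_layers)
]

def encoder(z):
    """Apply the residual MLP layers to an array whose last axis has size d."""
    for p in layers:
        hidden = relu(z @ p["W1"] + p["b1"])
        z = z + (hidden @ p["W2"] + p["b2"])  # residual connection
    return z

z = rng.normal(size=(5, 1, d))
h = encoder(z)
print(h.shape)  # (5, 1, 64)
```

The skip path means each layer only has to learn a correction to its input, which is the usual motivation for residual connections.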

Regression Layer

A linear layer maps the learned representations to the specified dimension, yielding the prediction (\hat{Y} \in \mathbb{R}^{T_f \times N \times C}).
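The shape bookkeeping of the regression step can be sketched as follows. The layout of the encoder output as (N, C, d), the sizes, and the names `W_out`, `b_out`, and `regress` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
T_f, N, C, d = 24, 5, 1, 64  # hypothetical sizes

W_out = rng.normal(scale=0.02, size=(d, T_f))
b_out = np.zeros(T_f)

def regress(h):
    """Map representations of shape (N, C, d) to forecasts of shape (T_f, N, C)."""
    out = h @ W_out + b_out               # (N, C, T_f)
    return np.transpose(out, (2, 0, 1))   # (T_f, N, C)

h = rng.normal(size=(N, C, d))
y_hat = regress(h)
print(y_hat.shape)  # (24, 5, 1)
```

All forecast steps are produced in a single pass here, rather than one step at a time.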

Loss Function

The Mean Absolute Error (MAE) is used as the loss function to measure the discrepancy between the prediction (\hat{Y}) and the ground truth (Y).

Experimental Design

Datasets

Experiments were conducted on five datasets, including global and national weather data:

- GlobalWind and GlobalTemp: Hourly averaged wind speed and temperature of 3,850 stations worldwide over two years.

- Wind CN and Temp CN: Daily averaged wind speed and temperature of 396 stations in China over ten years.

- Wind US: Hourly averaged wind speed of 27 stations in the US over 62 months.

Baselines

LightWeather was compared with three categories of baselines:

- Classic methods: HI, ARIMA.

- Universal DL methods: Informer, FEDformer, DSformer, PatchTST, TimesNet, GPT4TS, Time-LLM, DLinear.

- Weather forecasting specialized DL methods: AirFormer, Corrformer, MRIformer.

Evaluation Metrics

Performance was evaluated using Mean Absolute Error (MAE) and Mean Squared Error (MSE).
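Both metrics are simple averages over every forecast entry. The sketch below uses the standard definitions with a small hand-checkable example; averaging uniformly over all time steps, stations, and variables is an assumption about how the scores are aggregated.

```python
import numpy as np

def mae(y_hat, y):
    """Mean Absolute Error over all entries."""
    return float(np.mean(np.abs(y_hat - y)))

def mse(y_hat, y):
    """Mean Squared Error over all entries."""
    return float(np.mean((y_hat - y) ** 2))

y = np.array([[1.0, 2.0], [3.0, 4.0]])
y_hat = np.array([[1.5, 2.0], [2.0, 6.0]])
print(mae(y_hat, y))  # 0.875
print(mse(y_hat, y))  # 1.3125
```

The errors in this example are 0.5, 0, -1, and 2: MSE is dominated by the single error of 2, while MAE weights all errors linearly.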

Implementation Details

The input length was set to 48 and the predicted length to 24. The Adam optimizer was used for training. The number of MLP layers was set to 2, with hidden dimensions ranging from 64 to 2048. The batch size was 32, and the learning rate was 5e-4. All models were implemented with PyTorch and tested on an NVIDIA RTX 3090 GPU.
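For readers who want to reproduce the setup, the reported settings can be collected in one place. The `TrainConfig` class and its field names are hypothetical; only the values come from the description above, and the default `hidden_dim` is just one value from the reported 64 to 2048 range.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters as reported in the text (field names are hypothetical)."""
    input_len: int = 48          # history length
    pred_len: int = 24           # forecast length
    num_mlp_layers: int = 2
    hidden_dim: int = 64         # one value from the reported 64 to 2048 range
    batch_size: int = 32
    learning_rate: float = 5e-4
    optimizer: str = "adam"

    def __post_init__(self):
        # Guard against values outside the range described in the text.
        if not 64 <= self.hidden_dim <= 2048:
            raise ValueError("hidden_dim must be between 64 and 2048")

cfg = TrainConfig()
print(cfg)
```

A frozen dataclass keeps the configuration immutable, so a run cannot silently change its own settings partway through training.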

Results and Analysis

Main Results

LightWeather consistently achieved state-of-the-art performance across all datasets, outperforming other baselines with a simple MLP-based architecture. Notably, it showed significant performance advantages on the Temp CN dataset.

Efficiency Analysis

LightWeather demonstrated superior efficiency compared to other DL methods, with significantly lower parameter counts, epoch times, and GPU memory usage.

Ablation Study

Experiments showed that both spatial and temporal encodings are beneficial. Absolute positional encoding outperformed relative positional encoding, highlighting its effectiveness.

Hyperparameter Study

The model achieved the best performance with two MLP layers and a hidden dimension of 64. Increasing the hidden dimension beyond 1024 led to diminishing returns.

Generalization of Absolute Positional Encoding

Applying absolute positional encoding to Transformer-based models significantly enhanced their performance, achieving near state-of-the-art results.

Visualization

Forecasting results closely aligned with ground-truth values, demonstrating LightWeather’s ability to capture spatial-temporal patterns. Visualization of positional encoding revealed distinct clustering and periodic patterns, consistent with real-world weather dynamics.

Overall Conclusion

This study highlights the importance of absolute positional encoding in Transformer-based weather forecasting models. By integrating geographical coordinates and real-world time features, LightWeather effectively captures spatial-temporal correlations without the need for complex architectures. The model achieves state-of-the-art performance with high efficiency and scalability, offering a promising approach for global weather forecasting and other applications involving geographical information.

Future work will focus on integrating physical principles to enhance the model’s interpretability and extend its applicability to other domains such as air quality and marine hydrology.