Authors:

Akane Sano, Judith Amores, Mary Czerwinski

Paper:

https://arxiv.org/abs/2408.07822

Exploration of LLMs, EEG, and Behavioral Data to Measure and Support Attention and Sleep

Introduction

Human altered states such as attention and sleep play significant roles in health, safety, and productivity. By precisely measuring these states, we can design adaptive tools and interfaces that respond effectively to users and help promote their health. Human attention states have been measured using physiological and behavioral data such as electroencephalogram (EEG), facial expressions, and eye tracking. Measuring human attention states can help design systems that enhance driver alertness, minimize interruptions during focus, or promote relaxation before sleep.

Extensive research has explored computational methods for measuring, evaluating, and improving sleep. For example, many algorithms have been developed to estimate sleep quality and stages using human physiological and behavioral sensor data including EEG and motion. Computational systems have been designed to promote better sleep.

Recent advances in natural language processing have leveraged massive textual data to train large language models (LLMs). Some studies have used LLMs to interpret human physiological and behavioral data and to design health applications, including EEG abnormality detection and wearable sensor-based sleep quality detection. LLMs hold promise for health applications such as human altered state detection and personalized feedback delivery. However, their potential, accuracy, limitations, and reliability have not been rigorously evaluated, particularly when different types of human physiological and behavioral data are integrated into them.

In this paper, we evaluate LLMs for detecting and supporting human attention and sleep. Our ultimate goal is to create personalized, adaptive systems that enhance individuals’ attention and sleep. To achieve this, we conduct early explorations by integrating biobehavioral data into LLMs to understand their capabilities. We ask the following two research questions in the paper:

1. Can LLMs interpret/sense attentive states, sleep stages, and sleep quality?

2. Can LLMs provide personalized and adaptive feedback to help improve sleep?

We investigate how various time scales and input modalities (EEG, motion, and textual data) affect LLMs’ performance, reasoning, and generated responses when detecting attention states, sleep stages, and sleep quality, and when generating suggestions to improve sleep.

Methods

We describe experiments and datasets for 1) user state detection and 2) sleep improvement suggestion generation to address our research questions.

Experiment 1: User State Detection

We conduct three different detection tasks: attention detection, sleep stage detection, and sleep quality detection.

Datasets & Data Processing

- Mental Attention State: This dataset contains 25 hours of EEG data collected using a 14-channel Emotiv headset. Five participants were engaged in a low-intensity task of controlling a computer-simulated train. Three mental states were observed: focused, unfocused, and drowsy. We merged unfocused and drowsy into a single unfocused state. We prepared three types of input for attention detection: filtered EEG data, time-frequency spectrograms, and 11 features including power spectral density, amplitude, standard deviation, kurtosis, and various ratios. The data were segmented into 10-sec intervals, resulting in 919 training samples, 230 validation samples, and 287 test samples.

- Sleep EDF Expanded: This dataset contains 197 nights of polysomnography data collected from individuals aged 18-101 years. The data include EEG from two channels, EOG, EMG, and event markers. Sleep stages are labeled as follows: Wake, stage 1, stage 2, stages 3 & 4, and REM sleep. The data were segmented into 30-sec epochs. We used the same input types as those used for mental attention states.

- Student Life: This dataset contains mobile phone sensor and survey data collected from 46 college students. We used the Pittsburgh Sleep Quality Index (PSQI), administered both before and after the study, and its scoring rules to categorize each participant as a poor or good sleeper. We assess sleep quality detection using participants’ textual responses to PSQI questions, physical activity-based actograms, and physical activity-based hourly averaged graphs.
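
The segmentation and feature extraction described for the EEG datasets can be sketched roughly as follows. This is a minimal illustration, not the authors' pipeline: the 128 Hz sampling rate, the synthetic sine trace, and the function names `segment` and `window_features` are assumptions, and only three of the listed features (amplitude, standard deviation, kurtosis) are shown. It uses only the standard library for brevity.

```python
import math
from statistics import fmean, pstdev


def segment(signal, fs, window_sec):
    """Split a 1-D signal into non-overlapping windows of window_sec seconds."""
    size = int(fs * window_sec)
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, size)]


def window_features(window):
    """Peak-to-peak amplitude, standard deviation, and kurtosis of one window."""
    mean = fmean(window)
    std = pstdev(window)
    # Pearson kurtosis: fourth central moment divided by squared variance.
    m4 = fmean([(x - mean) ** 4 for x in window])
    kurtosis = m4 / std ** 4 if std > 0 else float("nan")
    return {
        "amplitude": max(window) - min(window),
        "std": std,
        "kurtosis": kurtosis,
    }


# Synthetic stand-in for one EEG channel: 25 s of a 10 Hz sine at an assumed 128 Hz.
FS = 128
trace = [math.sin(2 * math.pi * 10 * n / FS) for n in range(25 * FS)]

windows = segment(trace, FS, window_sec=10)   # 10-sec intervals, as for attention data
features = [window_features(w) for w in windows]
print(len(windows), features[0])
```

The trailing 5 seconds that do not fill a complete window are dropped here; passing `window_sec=30` would give the 30-sec epochs used for sleep staging.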

Models

We compare various LLMs and traditional machine learning models.

- LLMs:

  - Zero-shot learning: We feed data into LLMs without task-specific training, leveraging their pre-existing knowledge.

  - In-context learning: We include input data and label examples in prompts so that LLMs learn from context and adapt their responses accordingly.

  - Fine-tuned LLM: We fine-tune LLMs using the training and validation datasets.

- Traditional Machine Learning Model: XGBoost uses gradient boosting, which combines simple decision trees for accurate predictions. We also analyze feature importance by counting the number of times each feature is used in the trees.

- Baseline (majority vote): Simply predicts the majority class for all test samples, serving as a basic reference point to evaluate the performance of other models.
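
The difference between the zero-shot and in-context settings comes down to whether labeled examples are placed in the prompt. The sketch below shows one way such prompts might be assembled from feature values. The prompt wording, the feature names, and the helpers `format_sample` and `build_prompt` are hypothetical and are not the prompts used in the paper.

```python
def format_sample(features):
    """Render a feature dict as a compact 'name=value' line."""
    return ", ".join(f"{name}={value:.2f}" for name, value in features.items())


def build_prompt(query, labels, examples=()):
    """Zero-shot prompt when examples is empty; in-context prompt otherwise."""
    lines = [f"Classify the EEG segment as one of: {', '.join(labels)}."]
    for feats, label in examples:
        # Each labeled example is shown in the same layout as the final query.
        lines.append(f"Features: {format_sample(feats)}")
        lines.append(f"Label: {label}")
    lines.append(f"Features: {format_sample(query)}")
    lines.append("Label:")
    return "\n".join(lines)


# Made-up feature values for illustration only.
labels = ["focused", "unfocused"]
query = {"std": 12.4, "kurtosis": 3.1}
examples = [
    ({"std": 9.8, "kurtosis": 2.9}, "focused"),
    ({"std": 15.2, "kurtosis": 4.0}, "unfocused"),
]

zero_shot_prompt = build_prompt(query, labels)
in_context_prompt = build_prompt(query, labels, examples)
print(in_context_prompt)
```

Keeping the example layout identical to the query layout means the model only has to complete the final `Label:` line.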

We evaluate model performance using accuracy and weighted F1 score.
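
For reference, the two metrics and the majority-vote baseline can be written out in a few lines. This is a plain-Python sketch with made-up labels; the function names are illustrative, and weighted F1 here means per-class F1 averaged with weights equal to each class's share of the true labels.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true, y_pred):
    """Per-class F1 averaged with weights equal to each class's support."""
    support = Counter(y_true)
    total = 0.0
    for cls, n in support.items():
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = n - tp
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        total += n * f1
    return total / len(y_true)


def majority_baseline(y_train, n_test):
    """Predict the most frequent training label for every test sample."""
    most_common = Counter(y_train).most_common(1)[0][0]
    return [most_common] * n_test


# Made-up labels for illustration only.
y_train = ["unfocused"] * 6 + ["focused"] * 4
y_test = ["unfocused", "unfocused", "unfocused", "focused", "focused"]
y_pred = majority_baseline(y_train, len(y_test))

print(accuracy(y_test, y_pred))     # 0.6
print(weighted_f1(y_test, y_pred))  # 0.45
```

The example shows why both metrics are reported: the baseline reaches 60% accuracy on this imbalanced toy set, while its weighted F1 is lower because the minority class is never predicted.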

Experiment 2: Personalized and Adaptive Sleep Improvement Feedback

We explore whether LLMs can generate personalized content for sleep improvement, focusing on generating sleep improvement suggestions and guided imagery scripts. To generate the sleep suggestions, we feed LLMs various user context or profile information including EEG features, PSQI answers, physical activity-based actograms, gender, age group, ethnicity, health issues, and user preferences. To generate scripts to help a person sleep, we prompt the LLM to leverage a Guided Imagery technique.

Results

User State Detection

Attention Detection

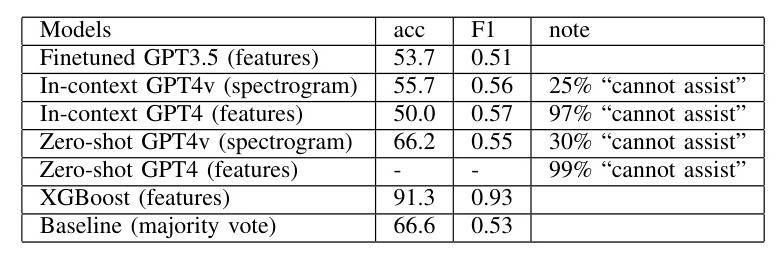

Traditional machine learning models outperformed LLM-based models for attention detection. Among the LLM-based models, fine-tuned GPT-3.5 models demonstrated the best performance. However, the GPT-4 vision model faced limitations, often returning a generic response such as “As a text-based AI, I do not have the capability to process images and I cannot assist with this request”. When fed high-dimensional EEG features, the GPT model responded that the task requires machine learning models and did not infer user states.

Sleep Stage Detection

LLM-based sleep stage detection shows lower performance than ML-based models. GPT-4 vision models often return a message that they cannot assist with the task. In-context learning with EEG waveforms performs worse than zero-shot learning with EEG waveforms, whereas in-context learning with EEG features seems more effective than zero-shot learning with EEG features. Fine-tuned GPT models show higher misclassification rates than XGBoost.

Sleep Quality Detection

We compare sleep quality labels obtained in two ways: scored by the standard PSQI scoring procedure vs. inferred by GPT-4. The overall accuracy of the GPT-4 model is 85.7%. GPT-4 demonstrates relatively good sleep quality detection performance based on textual answers to the PSQI; however, it struggles to recognize sleep quality accurately when participants show poor sleep behavior but good self-reported sleep quality. LLMs detect sleep and active periods and regular sleep patterns from the physical activity actograms and averaged graphs provided; however, they label the image inputs as poor sleep.
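
The reference labels in this comparison come from PSQI scoring. The sketch below assumes the seven PSQI component scores (each 0 to 3) have already been derived from the questionnaire and applies the conventional cutoff of a global score above 5 for poor sleepers. The source does not state which cutoff was used, so the threshold is an assumption, and the component scores and model labels are made up.

```python
def psqi_category(component_scores, cutoff=5):
    """Label a participant from seven PSQI component scores (each 0-3)."""
    if len(component_scores) != 7 or any(not 0 <= s <= 3 for s in component_scores):
        raise ValueError("expected seven component scores in the range 0-3")
    # The global score is the sum of the components (range 0-21).
    # cutoff=5 is the conventional threshold and is assumed here.
    return "poor" if sum(component_scores) > cutoff else "good"


def agreement(reference, predicted):
    """Fraction of participants on which two labelings agree."""
    return sum(r == p for r, p in zip(reference, predicted)) / len(reference)


# Made-up component scores for four hypothetical participants.
participants = [
    [1, 0, 1, 0, 1, 0, 1],  # global score 4
    [2, 1, 2, 1, 1, 0, 2],  # global score 9
    [1, 1, 1, 1, 1, 0, 0],  # global score 5
    [3, 2, 2, 1, 2, 1, 2],  # global score 13
]
reference = [psqi_category(p) for p in participants]
llm_labels = ["good", "poor", "poor", "good"][:3] + ["poor"]  # hypothetical model output

print(reference)                         # ['good', 'poor', 'good', 'poor']
print(agreement(reference, llm_labels))  # 0.75
```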

Sleep Improvement Feedback Generation

LLMs modify suggestions and guided imagery scripts based on user input and profiles. LLMs generate sleep improvement suggestions that align with cognitive behavioral therapy for insomnia (CBT-I). LLMs also weave user profiles into generated guided imagery scripts and change scenes and phrases. When we feed EEG features for generating guided imagery scripts, the LLM incorporates numerical information into the scripts, which might not be useful for users. Therefore, we adjust the prompts so that the generated guided imagery scripts exclude numerical information.
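
One way to picture the prompt adjustment is a prompt builder that adds an explicit instruction against numbers, paired with a simple check on the generated script. The prompt text, profile fields, and helper names below are hypothetical and are not the prompts used in the study.

```python
import re


def build_imagery_prompt(profile, allow_numbers=False):
    """Assemble a guided imagery prompt from a user profile dict."""
    lines = [
        "Write a short guided imagery script to help the user fall asleep.",
        "User profile:",
    ]
    lines += [f"- {field}: {value}" for field, value in profile.items()]
    if not allow_numbers:
        # Instruction added so raw sensor values do not leak into the script.
        lines.append("Do not include any numerical values in the script.")
    return "\n".join(lines)


def contains_numbers(script):
    """Return True if the generated script contains any digit."""
    return re.search(r"\d", script) is not None


# Made-up profile for illustration only.
profile = {"preferred scene": "quiet beach", "EEG alpha power": "elevated"}
prompt = build_imagery_prompt(profile)
print(prompt)

print(contains_numbers("Your alpha power is 12.5, so breathe slowly."))  # True
print(contains_numbers("Picture a quiet beach at dusk."))                # False
```

The digit check only catches numerals, not numbers written as words, so it is a coarse filter rather than a guarantee.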

Discussion

This work explores the use of LLMs with physiological and behavioral sensor data for attention and sleep detection and for sleep improvement. Our experiments highlight both LLMs’ strengths and limitations. LLM-based attention and sleep detection exhibit lower performance than traditional ML models. Fine-tuning improves the models’ ability to handle diverse contexts. However, we also found limitations. The fine-tuned GPT-3.5 model relies on a limited set of features for classification, and GPT-4 vision models fail to handle visual input. Feeding high-dimensional numerical features such as EEG features to LLMs does not yield reliable estimates of user states.

To improve human state detection, LLMs’ knowledge needs to be extended beyond simple one-to-one relationships and further refined to handle diverse human physiological and behavioral data, variabilities, and patterns effectively. This might be possible by fine-tuning with larger datasets and retrieving external sources of knowledge. Integrating textual information with numerical and visual data is essential to understand variability within and across individuals; however, the capacity of current LLM vision models and fine-tuning is still limited.

LLM-based sleep improvement suggestions and guided imagery scripts are personalized and adaptive to user profiles. Automatically generated suggestions and scripts have potential for AI-based conversational systems or intervention systems after effectiveness and safety are carefully tested.

There are several limitations in this study. It is an early exploration with limited datasets and a limited set of LLMs. Refining prompts and using large and diverse datasets might help enhance task performance. User studies with end users and clinicians are necessary to evaluate generated responses in terms of accuracy, effectiveness, and safety. Beyond LLMs, there are other advanced approaches such as transformer models and multimodal learning for detecting user states.

We also discuss the ethical considerations of using LLMs to detect and improve human altered states. Feeding personal physiological and behavioral data to LLMs could raise privacy concerns. Transparent consent processes and reliable data anonymization are important. Bias detection and mitigation strategies are necessary to ensure fair outcomes. Implementing guidelines for responsible use and monitoring LLM-generated content are required.