Authors:

Paper:

https://arxiv.org/abs/2408.07947

Introduction

Synthetic Aperture Radar (SAR) imaging can collect data regardless of weather conditions or time of day. However, SAR images are hard to interpret because of their complex backscatter patterns and speckle noise, so translating them into optical-like representations can help. Existing methods, primarily based on Generative Adversarial Networks (GANs), suffer from training instability and low fidelity, especially when dealing with low-resolution satellite imagery datasets. This paper introduces a conditional Brownian Bridge Diffusion Model (BBDM) for translating Very-High-Resolution (VHR) SAR images to optical images and reports better performance than existing methods.

Methodology

Conditional Latent Diffusion Model

Recent advances in generative modeling have been driven by diffusion-based methods. The Conditional Latent Diffusion Model (LDM) runs the diffusion process in a compressed latent space rather than in pixel space. The forward process gradually turns the original latent feature into Gaussian noise through iterative noise-addition steps. The reverse process recovers the latent by estimating the noise at each step, guided by a condition derived from the SAR image, and the recovered latent is then decoded into an image.
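
The forward noising step can be sketched in a few lines. This is a minimal illustration that assumes the standard DDPM closed form with a linear beta schedule; the schedule values, the step count, and the names `linear_alpha_bar` and `noise_latent` are assumptions for illustration, not details taken from the paper.

```python
import math
import random

def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products alpha_bar_t for a linear beta schedule (assumed values)."""
    alpha_bar, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar

def noise_latent(z0, alpha_bar_t, rng):
    """Sample z_t ~ N(sqrt(alpha_bar_t) * z0, (1 - alpha_bar_t) * I)."""
    signal = math.sqrt(alpha_bar_t)
    sigma = math.sqrt(1.0 - alpha_bar_t)
    return [signal * z + sigma * rng.gauss(0.0, 1.0) for z in z0]

rng = random.Random(0)
alpha_bar = linear_alpha_bar(1000)
z0 = [0.5, -1.0, 2.0]                           # toy flattened latent
z_early = noise_latent(z0, alpha_bar[0], rng)   # still close to z0
z_late = noise_latent(z0, alpha_bar[-1], rng)   # close to pure Gaussian noise
```

As `alpha_bar_t` shrinks toward zero, the signal term vanishes and only noise remains, which is the end state the reverse process starts from.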

Brownian Bridge Diffusion Model

The Brownian Bridge Diffusion Model (BBDM) represents the translation as a probabilistic transformation between two fixed states, inspired by the Brownian bridge process. Rather than ending in pure Gaussian noise, it maps one image domain directly to the other through a continuous-time stochastic process with fixed start and end points. The forward process moves a sample from one endpoint to the other, with a noise variance that vanishes at both ends. The reverse process uses a network to estimate the noise-dependent term of the mean at each step, giving a smooth, continuous mapping between image domains.
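
To make the fixed-endpoint behaviour concrete, here is a minimal sketch of a Brownian bridge forward sample. It assumes the schedule of the original BBDM formulation, m_t = t/T and δ_t = 2s(m_t − m_t²), with x0 standing for the target-domain (optical) sample and y for the source-domain (SAR) sample. The function names and the scale `s` are illustrative, and the paper's exact settings may differ.

```python
import math
import random

def bridge_coefficients(t, num_steps, s=1.0):
    """Return (m_t, delta_t) with m_t = t/T and delta_t = 2*s*(m_t - m_t**2)."""
    m_t = t / num_steps
    delta_t = 2.0 * s * (m_t - m_t * m_t)
    return m_t, delta_t

def bridge_sample(x0, y, t, num_steps, rng, s=1.0):
    """Sample x_t ~ N((1 - m_t) * x0 + m_t * y, delta_t * I)."""
    m_t, delta_t = bridge_coefficients(t, num_steps, s)
    sigma = math.sqrt(delta_t)
    return [(1.0 - m_t) * a + m_t * b + sigma * rng.gauss(0.0, 1.0)
            for a, b in zip(x0, y)]

rng = random.Random(0)
x0 = [0.2, 0.8, -0.5]   # toy target-domain (optical) values
y = [1.0, -1.0, 0.0]    # toy source-domain (SAR) values
x_mid = bridge_sample(x0, y, t=500, num_steps=1000, rng=rng)
```

The variance is zero at t = 0 and t = T, so the process is pinned to x0 and y at its ends, and it is largest halfway through.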

Conditional BBDM for SAR to Optical

To address the limitations of the original BBDM, the Conditional BBDM (cBBDM) incorporates additional guiding information during the translation process. This modification allows for more refined results by leveraging SAR-specific features. The condition is derived from the pixel space of given SAR images and incorporated into the reverse diffusion process through channel-wise concatenation.
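
Channel-wise concatenation can be illustrated with a toy example on channel-first nested lists. The sketch assumes the SAR condition has already been brought to the same spatial size as the noisy latent. The channel counts, the sizes, and the helper names `concat_channels` and `zeros` are hypothetical.

```python
def concat_channels(latent, condition):
    """Concatenate two channel-first arrays ([C][H][W] nested lists) along the channel axis."""
    h, w = len(latent[0]), len(latent[0][0])
    for channel in condition:
        if len(channel) != h or any(len(row) != w for row in channel):
            raise ValueError("condition must match the latent's spatial size")
    return latent + condition

def zeros(c, h, w):
    """Build a [C][H][W] nested list filled with zeros."""
    return [[[0.0] * w for _ in range(h)] for _ in range(c)]

x_t = zeros(4, 8, 8)        # hypothetical 4-channel noisy latent at step t
sar_cond = zeros(1, 8, 8)   # hypothetical 1-channel SAR condition, already resized
net_input = concat_channels(x_t, sar_cond)   # 5 channels fed to the denoising network
```

In a tensor library the same operation is a single concatenation along the channel dimension; the spatial-size check is the part that matters when the condition comes from pixel space and the latent does not.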

Experiments and Results

Data Set

Experiments were conducted on the MSAW dataset, which consists of 0.5 m VHR SAR and optical images. It contains 3,401 geo-referenced SAR-optical image pairs, tiled to 900×900 pixels. The dataset was split into training and validation sets by longitude so that validation is performed on geographically unseen data.
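
A longitude-based split can be sketched as follows. The tile records, the `lon` field, the 20% validation fraction, and the choice to hold out the easternmost tiles are all assumptions for illustration; the paper's actual split boundary is not given here.

```python
def split_by_longitude(tiles, val_fraction=0.2):
    """Hold out the easternmost tiles so train and validation cover disjoint longitudes."""
    ordered = sorted(tiles, key=lambda tile: tile["lon"])
    n_val = max(1, round(len(ordered) * val_fraction))
    return ordered[:-n_val], ordered[-n_val:]

# Hypothetical tile metadata: an id and the tile-centre longitude in degrees.
tiles = [{"id": i, "lon": 4.0 + 0.01 * i} for i in range(10)]
train_tiles, val_tiles = split_by_longitude(tiles)
```

Unlike a random split, this keeps neighbouring tiles of the same area from landing on both sides, so validation scores reflect performance on terrain the model has not seen.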

Evaluation Metrics

Several metrics were used to assess image translation performance, including Learned Perceptual Image Patch Similarity (LPIPS) scores and Fréchet Inception Distance (FID). Lower scores indicate better performance for both metrics.
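
For reference, FID is commonly defined as the Fréchet distance between two Gaussians fitted to Inception features of the real and generated images. The symbols below are introduced here for illustration: the means and covariances with subscript r come from the real optical images, and those with subscript g from the generated ones.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

The first term penalizes a shift in the feature means and the second a mismatch in the feature covariances, so FID is zero only when both statistics agree.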

Implementation Details

The proposed model was trained for 48 epochs using an NVIDIA A6000 GPU, with a minibatch size of 1 and an Adam optimizer with a learning rate of 1e-4. Data augmentation was implemented using horizontal flips during training. Comparative models were trained using their default minibatch size and learning rates.
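
For paired translation data, a horizontal flip presumably has to be applied to the SAR tile and its optical counterpart together. The sketch below assumes exactly that; the flip probability of 0.5 and the function names are illustrative, not taken from the paper.

```python
import random

def hflip(image):
    """Mirror a [H][W] image (nested lists) left to right."""
    return [row[::-1] for row in image]

def paired_random_hflip(sar, optical, rng, p=0.5):
    """Flip both images together so the pair stays pixel-aligned."""
    if rng.random() < p:
        return hflip(sar), hflip(optical)
    return sar, optical

rng = random.Random(0)
sar = [[1, 2, 3], [4, 5, 6]]               # toy SAR tile
optical = [[10, 20, 30], [40, 50, 60]]     # toy optical tile
sar_aug, optical_aug = paired_random_hflip(sar, optical, rng)
```

Drawing a single random decision per pair, rather than one per image, is what keeps the two modalities aligned after augmentation.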

Results and Analysis

Quantitative Results

The proposed model significantly outperforms the compared GAN-based models and the conditional LDM on the reported LPIPS variants and on FID, indicating higher perceptual quality and a generated distribution that is closer to that of the real optical images.

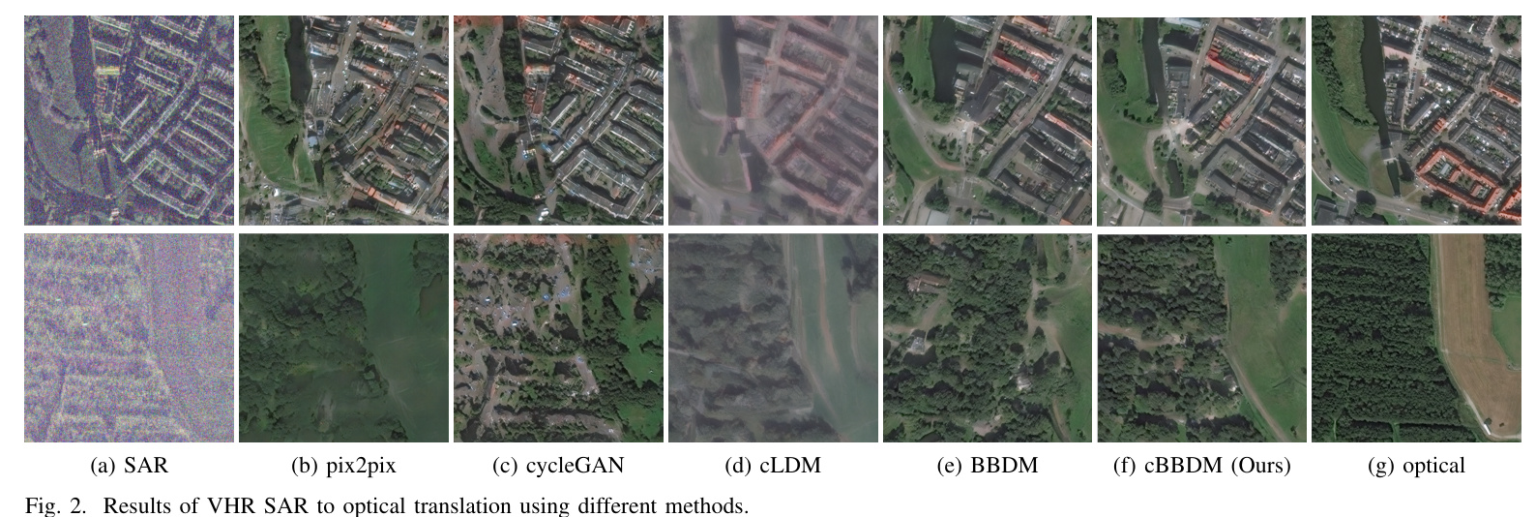

Qualitative Results

The qualitative results demonstrate that the conditional BBDM produces optical-like images that more accurately reflect the input SAR data while preserving structural details and textures. The model shows robustness against speckle noise and generates high-quality, detailed optical-like images across various scene types.

Conclusion

The Conditional BBDM framework for VHR SAR-to-optical image translation combines conditional information with Brownian bridge mapping in latent space, achieving high-quality, cost-effective translation for large VHR images. The proposed method outperforms existing GAN-based models and the conditional LDM, which helps SAR image interpretation and narrows the gap between the SAR and optical remote sensing modalities. This development opens new research avenues in SAR-to-optical image translation.