Authors:

Jialiang Wang, Shimin Di, Hanmo Liu, Zhili Wang, Jiachuan Wang, Lei Chen, Xiaofang Zhou

Paper:

https://arxiv.org/abs/2408.06717

Graph Neural Networks (GNNs) have emerged as powerful tools for graph representation learning, but designing optimal GNN architectures remains a complex and resource-intensive task. The paper “Computation-friendly Graph Neural Network Design by Accumulating Knowledge on Large Language Models” proposes a novel framework, DesiGNN, to streamline this process by leveraging Large Language Models (LLMs) to accumulate and apply specialized knowledge in GNN design. This blog post provides a detailed interpretation of the paper, explaining its methodology, contributions, and experimental results.

Introduction

Graph Neural Networks (GNNs) have proven effective in modeling complex systems represented as graphs. However, designing optimal GNN architectures is challenging because it requires extensive trial and error and deep expertise. Automated GNN design methods (AutoGNNs) have been developed to alleviate this burden, but they still incur significant computational overhead and struggle to accumulate and apply knowledge across different datasets.

DesiGNN aims to address these challenges by empowering LLMs with specialized knowledge for GNN design. The framework includes a knowledge retrieval pipeline that converts past model design experiences into structured knowledge for LLM reference, enabling quick initial model proposals. A knowledge-driven search strategy then refines these proposals efficiently.

Related Work

Graph Neural Networks

GNNs update node representations through message passing, and architectures differ in their aggregation, combination, update, and fusion functions. Automated GNNs (AutoGNNs) aim to find optimal architectures using search algorithms such as reinforcement learning, evolutionary algorithms, and differentiable search. However, these methods remain computationally expensive and do not carry design knowledge from one dataset to the next.
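To make the message-passing idea concrete, here is a minimal sketch of a single round on a toy graph. It assumes mean aggregation over neighbors and an equal-weight combination with the node's own features; these choices, the function name `message_passing_step`, and the toy data are illustrative and are not taken from the paper.

```python
from typing import Dict, List


def message_passing_step(
    adj: Dict[int, List[int]], feats: Dict[int, List[float]]
) -> Dict[int, List[float]]:
    """One round: mean-aggregate neighbor features, then average with own features."""
    updated = {}
    for node, own in feats.items():
        neighbors = adj.get(node, [])
        if neighbors:
            # Aggregation: element-wise mean over the neighbors' feature vectors.
            agg = [
                sum(feats[n][d] for n in neighbors) / len(neighbors)
                for d in range(len(own))
            ]
        else:
            # Isolated node: fall back to its own features.
            agg = list(own)
        # Combination: equal-weight mix of own and aggregated features (an assumption).
        updated[node] = [0.5 * o + 0.5 * a for o, a in zip(own, agg)]
    return updated


# Toy undirected graph: node 0 is connected to nodes 1 and 2.
adj = {0: [1, 2], 1: [0], 2: [0]}
feats = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [0.0, 3.0]}
new_feats = message_passing_step(adj, feats)
```

Swapping the mean for a sum or max, or changing how the two vectors are combined, yields a different architecture. That design space is what AutoGNN methods search over.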

LLMs and Their Applications to GNNs

LLMs have shown proficiency in natural language understanding and task optimization. Recent research explores integrating LLMs with GNNs, either by using GNNs to process graph data into structured tokens for LLMs or by having LLMs enrich raw graph data for GNNs. However, existing LLM-based approaches for GNN design often lack nuanced knowledge and rely heavily on user-provided descriptions, leading to suboptimal model suggestions.

Methodology

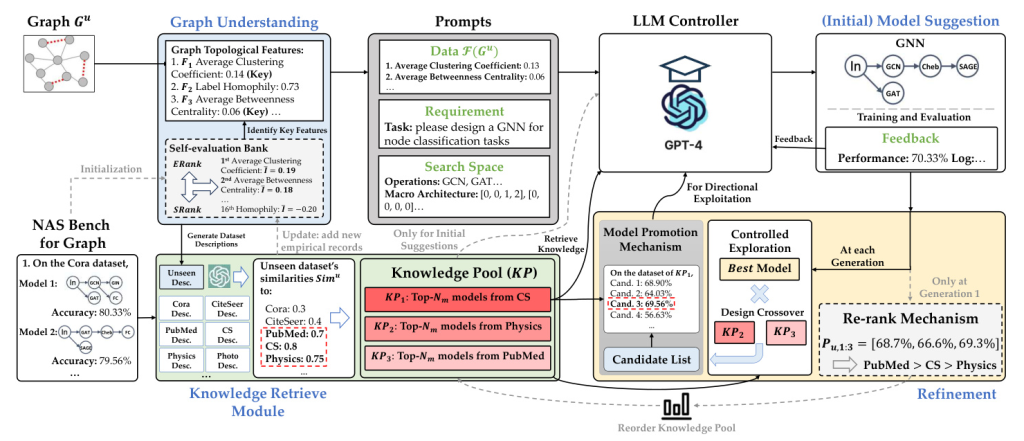

DesiGNN comprises three main components: Graph Understanding, Knowledge Retrieval, and Model Suggestion and Refinement.

Graph Understanding Module

This module analyzes graph characteristics and structures to understand similarities between different graphs. It uses 16 topological features to assess how similar two graphs are and to relate graph structure to GNN performance. An adaptive filtering mechanism identifies the most influential features, bridging the gap between graph datasets and GNN designs.
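The filtering-then-comparing idea can be sketched as follows. This is only an illustration: it assumes each feature already has a numeric confidence score and that feature values are normalized to [0, 1], and it uses an inverse Euclidean distance as the similarity. The feature names, scores, and the functions `select_features` and `feature_similarity` are hypothetical, not the paper's actual features or similarity function.

```python
import math
from typing import Dict, List


def select_features(confidence: Dict[str, float], k: int) -> List[str]:
    """Keep the k features with the highest confidence scores."""
    return sorted(confidence, key=confidence.get, reverse=True)[:k]


def feature_similarity(a: Dict[str, float], b: Dict[str, float], keys: List[str]) -> float:
    """Similarity in (0, 1]: 1 / (1 + Euclidean distance over the selected features)."""
    dist = math.sqrt(sum((a[key] - b[key]) ** 2 for key in keys))
    return 1.0 / (1.0 + dist)


# Made-up confidence scores and normalized feature values, for illustration only.
confidence = {"avg_degree": 0.8, "density": 0.3, "clustering": 0.6}
unseen = {"avg_degree": 0.40, "density": 0.10, "clustering": 0.55}
benchmarks = {
    "benchmark_A": {"avg_degree": 0.45, "density": 0.90, "clustering": 0.50},
    "benchmark_B": {"avg_degree": 0.90, "density": 0.12, "clustering": 0.10},
}

keys = select_features(confidence, k=2)
ranked = sorted(
    benchmarks,
    key=lambda name: feature_similarity(unseen, benchmarks[name], keys),
    reverse=True,
)
```

In this toy data, `benchmark_A` differs sharply from the unseen graph in `density`, but that feature is filtered out as low-confidence, so `benchmark_A` still ranks first.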

Knowledge Retrieval Module

This module leverages the detailed records of model-to-performance relationships in NAS-Bench-Graph, a comprehensive dataset of GNN architectures and their performance on various graphs. It encapsulates top-performing models for different benchmark graphs and associates them with their corresponding filtered features. LLMs analyze and compare similarities between unseen and benchmark graphs to retrieve relevant model configuration knowledge.
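One way to picture the retrieval step is as a small knowledge base of benchmark records that gets serialized into a prompt for the LLM. The sketch below is an assumption about how such a prompt could be assembled; the record layout, the `BenchmarkRecord` and `build_retrieval_prompt` names, the prompt wording, and all values are hypothetical and are not the paper's actual prompts or NAS-Bench-Graph entries.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class BenchmarkRecord:
    name: str
    features: Dict[str, float]  # filtered topological features
    top_models: List[str]       # best-performing architectures, best first


def build_retrieval_prompt(
    unseen_features: Dict[str, float], records: List[BenchmarkRecord], top_n: int = 1
) -> str:
    """Serialize the unseen graph and the benchmark knowledge into one prompt."""
    lines = ["Unseen graph features:"]
    lines += [f"  {k} = {v}" for k, v in sorted(unseen_features.items())]
    for record in records:
        lines.append(f"Benchmark {record.name}:")
        lines += [f"  {k} = {v}" for k, v in sorted(record.features.items())]
        lines.append(f"  top models: {'; '.join(record.top_models[:top_n])}")
    lines.append("Rank the benchmarks by similarity to the unseen graph.")
    return "\n".join(lines)


# Placeholder records with made-up values.
records = [
    BenchmarkRecord("benchmark_A", {"avg_degree": 0.45}, ["gcn -> gat", "sage -> gcn"]),
    BenchmarkRecord("benchmark_B", {"avg_degree": 0.90}, ["gin -> gin"]),
]
prompt = build_retrieval_prompt({"avg_degree": 0.40}, records)
```

The resulting string would be sent to an LLM, whose ranking decides which benchmark's top models are handed to the suggestion step.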

GNN Model Suggestion and Refinement

Initial Model Suggestion

LLMs suggest initial model proposals for unseen graphs based on retrieved knowledge from similar benchmark graphs. This process is designed to generate initial proposals quickly without extensive training, significantly accelerating the model suggestion phase.

Model Proposal Refinement

A structured, knowledge-driven refinement strategy further enhances the initial model proposals. This strategy emulates the exploration-exploitation process of human experts, incorporating empirically derived configuration knowledge into the refinement phase. The process includes re-ranking knowledge bases, controlled exploration, model promotion, and directional exploitation and optimization.
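The exploration-exploitation loop described above can be sketched roughly as follows. This is a simplified stand-in, not the paper's algorithm: it assumes an architecture is a fixed-length list of layer types, that exploitation copies one position from a retrieved reference model, that exploration picks a random operation, and that a candidate is promoted only if it scores strictly higher. The operation list, the `refine` function, and the toy scoring function are all hypothetical.

```python
import random
from typing import Callable, List, Tuple

OPS = ["gcn", "gat", "sage", "gin"]  # illustrative operation choices


def refine(
    initial: List[str],
    reference: List[str],
    evaluate: Callable[[List[str]], float],
    budget: int,
    explore_prob: float = 0.3,
    seed: int = 0,
) -> Tuple[List[str], float]:
    """Refine a proposal for `budget` validations, keeping the best model seen."""
    rng = random.Random(seed)
    best, best_score = list(initial), evaluate(initial)
    for _ in range(budget):
        candidate = list(best)
        pos = rng.randrange(len(candidate))
        if rng.random() < explore_prob:
            candidate[pos] = rng.choice(OPS)  # controlled exploration
        else:
            candidate[pos] = reference[pos]   # exploitation guided by retrieved knowledge
        score = evaluate(candidate)           # stands in for training and validating
        if score > best_score:                # promotion: keep only improvements
            best, best_score = candidate, score
    return best, best_score


# Toy scorer: number of positions matching a hidden target architecture.
target = ["gat", "gcn", "sage"]


def toy_score(arch: List[str]) -> float:
    return float(sum(a == t for a, t in zip(arch, target)))


initial = ["gcn", "gcn", "gcn"]
reference = ["gat", "gcn", "gin"]  # made-up top model from a similar benchmark
best, best_score = refine(initial, reference, toy_score, budget=30)
```

Because candidates are promoted only when they improve the score, the returned model is never worse than the initial proposal. In a real setting each call to `evaluate` would be a costly training run, which is why the number of validations is the budget that matters.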

Experiments

Experimental Settings

The experiments focus on node classification across 8 benchmark graphs and 3 additional graphs. Various baselines, including manually designed GNNs, classic search strategies, and LLM-based methods, are used for comparison. The evaluation measures the average accuracy of designed GNNs and assesses short-run efficiency based on the number of validated model proposals.
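Short-run efficiency of this kind is commonly read off a best-so-far curve: the best accuracy reached after validating the first n proposals. The snippet below is a minimal sketch of that bookkeeping with made-up accuracy values; it is not the paper's evaluation code.

```python
from itertools import accumulate
from typing import List


def best_so_far(accuracies: List[float]) -> List[float]:
    """Best accuracy reached after each validated proposal."""
    return list(accumulate(accuracies, max))


# Made-up validation accuracies for four consecutive proposals.
accuracies = [0.70, 0.74, 0.72, 0.78]
curve = best_so_far(accuracies)
```

A method that is efficient in the short run reaches a high value early in this curve, after only a few validated proposals.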

Main Results

Initial Model Suggestions

DesiGNN-Init, the initial model suggestion phase of DesiGNN, outperforms manually designed GNNs and other automated methods on diverse graphs. This highlights the effectiveness of the knowledge-driven approach in providing robust initial model suggestions.

Model Refinement and Short-run Efficiency

DesiGNN quickly optimizes initial proposals, surpassing all manually designed GNN competitors across 11 graphs. It achieves faster improvements in model performance with fewer proposal validations, demonstrating its efficiency in utilizing accumulated knowledge for rapid optimization.

Retrieve Knowledge with Graph Similarity

The knowledge retrieval strategy of DesiGNN effectively identifies the benchmarks that are empirically most similar to an unseen graph, enabling LLMs to suggest high-performing initial model proposals. The similarity function used in DesiGNN is competitive with posterior methods, which further validates the importance of selecting key graph topological features.

Conclusion

DesiGNN presents a computation-friendly framework for designing GNNs by accumulating knowledge on LLMs. It efficiently produces initial GNN models and refines them to align with specific dataset characteristics and actionable prior knowledge. Experimental results demonstrate its effectiveness in expediting the design process and achieving outstanding search performance. Future research could extend this knowledge-driven model design pipeline to broader machine learning contexts beyond graph data.

Illustrations

- Illustration of how AutoGNNs and LLM-based works design GNN architectures.

- The DesiGNN pipeline for designing GNNs.

- Confidence of different features across graphs.

- Initial performance comparison of GNNs designed by different works.

- Short-run performance of automated baselines after validating 1-30 proposals.

- Similarities between datasets computed by different methods.

DesiGNN represents a significant advancement in the automation of GNN design, leveraging the power of LLMs to accumulate and apply specialized knowledge efficiently. This approach not only reduces computational overhead but also enhances the proficiency and effectiveness of GNN architecture design.