Authors:

Xin Sun, Xiao Tang, Abdallah El Ali, Zhuying Li, Xiaoyu Shen, Pengjie Ren, Jan de Wit, Jiahuan Pei, Jos A. Bosch

Paper:

https://arxiv.org/abs/2408.06527

Introduction

Motivational Interviewing (MI) is a client-centered counseling technique that helps individuals change their behavior. By addressing ambivalence and boosting self-efficacy, it strengthens clients' intrinsic motivation and fosters collaboration between therapist and client. Traditional MI chatbots rely on expert-written scripts, which tend to be rigid and lack diversity. This paper explores using Large Language Models (LLMs) to generate MI dialogues that follow therapeutic strategies, with the aim of making generation in psychotherapy controllable and explainable.

Related Work

NLG in Motivational Interviewing

Natural Language Generation (NLG) in MI has evolved from pre-scripted templates to more dynamic models that can rephrase client utterances empathetically. However, ensuring that generated content adheres to MI principles remains a challenge.

Strategy-Aware Dialogue Generation

Strategy-aware dialogue generation conditions responses on explicit dialogue strategies, so that the output serves a communicative goal rather than merely being fluent. This matters most in therapeutic contexts, where responses must embody psychological and empathetic principles.

Chain-of-Thought Reasoning with LLMs

Chain-of-Thought (CoT) prompting elicits intermediate reasoning steps from an LLM before its final output, which can make generated dialogue more coherent and contextually appropriate. This makes the technique promising for sensitive domains such as psychotherapy.

Method

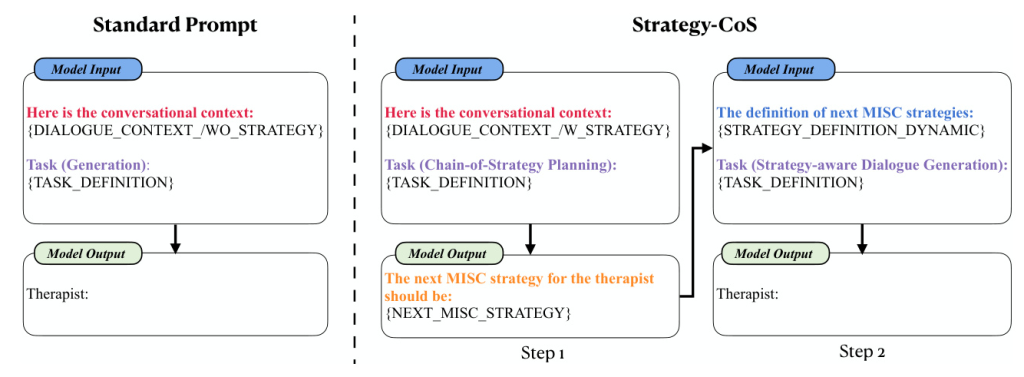

Strategy-Aware Dialogue Generation with Chain-of-Strategy Planning

Inspired by prior work, the authors propose using LLMs to generate MI dialogues guided by domain-specific strategies from the Motivational Interviewing Skill Code (MISC). The approach, called Chain-of-Strategy (CoS) planning, first predicts the next MI strategy from the dialogue context and then generates the following dialogue turns conditioned on that strategy.
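The two-step flow of planning a strategy and then generating can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. Here `llm` stands for any hypothetical callable that maps a prompt string to generated text. The prompt wording, the function names (`plan_strategy`, `generate_turn`, `chain_of_strategy`) and the stub model are all invented for illustration.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface: any function that maps a prompt to generated text.
LLM = Callable[[str], str]


def format_context(turns: List[Tuple[str, str]]) -> str:
    """Render (speaker, utterance) pairs as one line per turn."""
    return "\n".join(f"{speaker}: {text}" for speaker, text in turns)


def plan_strategy(llm: LLM, context: str) -> str:
    """Step 1 (planning): ask the model which MI strategy the therapist should use next."""
    prompt = (
        f"{context}\n"
        "Which MI strategy should the therapist use in the next turn? "
        "Answer with the strategy name only."
    )
    return llm(prompt).strip()


def generate_turn(llm: LLM, context: str, strategy: str) -> str:
    """Step 2 (generation): write the next therapist turn conditioned on the planned strategy."""
    prompt = f"{context}\nPlanned strategy: {strategy}\nTherapist:"
    return llm(prompt).strip()


def chain_of_strategy(llm: LLM, turns: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Plan first, then generate; return both so the chosen strategy stays inspectable."""
    context = format_context(turns)
    strategy = plan_strategy(llm, context)
    return strategy, generate_turn(llm, context, strategy)


# Usage with a deterministic stub in place of a real model (illustration only).
def stub_llm(prompt: str) -> str:
    if prompt.endswith("strategy name only."):
        return "Reflection"
    return "It sounds like you want to drink less but worry about losing a way to unwind."


strategy, reply = chain_of_strategy(
    stub_llm,
    [("Client", "I know I should drink less, but it's how I relax after work.")],
)
print(strategy)
print(reply)
```

Returning the planned strategy alongside the reply keeps the intermediate step visible. A reviewer can then check whether the chosen strategy fits the context before judging the wording, which is one way this kind of planning supports explainability.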

Prompt Design

Three prompting methods were designed for the experiments:

- Standard Prompt: Includes only the dialogue context and task instruction.

- Strategy-CoS Prompt: Adds the MI strategy predicted by the LLM itself as an internal reasoning step.

- Strategy-GT Prompt: Uses the ground-truth strategy annotated in the dataset.
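The three variants can be pictured as a single prompt builder in which only the strategy line changes. The variants differ in where that line comes from: none, the model's own prediction, or the dataset annotation. The instruction text, the `Reasoning:` and `Strategy:` labels, and `build_prompt` itself are hypothetical. The paper's exact templates are not reproduced here.

```python
from typing import Optional

# Hypothetical instruction wording; not the paper's actual prompt text.
INSTRUCTION = "You are a therapist using motivational interviewing. Write the therapist's next turn."


def build_prompt(context: str, variant: str, strategy: Optional[str] = None) -> str:
    """Assemble one of the three prompt variants.

    variant: "standard" (no strategy), "cos" (strategy predicted by the model
    in a planning step) or "gt" (strategy copied from the dataset annotation).
    """
    header = f"{INSTRUCTION}\n\nDialogue:\n{context}\n"
    if variant == "standard":
        return header + "Therapist:"
    if variant not in ("cos", "gt"):
        raise ValueError(f"unknown variant: {variant!r}")
    if not strategy:
        raise ValueError(f"variant {variant!r} needs a strategy")
    if variant == "cos":
        # The model's own prediction, framed as an intermediate reasoning step.
        return header + f"Reasoning: the next turn should use the strategy '{strategy}'.\nTherapist:"
    # variant == "gt": the annotated strategy is handed to the model directly.
    return header + f"Strategy: {strategy}\nTherapist:"


ctx = "Client: I know I should exercise, but I never have time."
for v, s in [("standard", None), ("cos", "Open question"), ("gt", "Reflection")]:
    print(build_prompt(ctx, v, s))
    print("---")
```

Keeping the rest of the prompt identical isolates the effect of the strategy line. Any difference in output between the variants can then be attributed to whether, and from where, a strategy was supplied.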

Experiments

Task Definition

The experiments aimed to answer two research questions:

- Can LLMs generate dialogues that align with MI strategies and are comparable to those from human therapists?

- How effective is CoS planning with LLMs for MI dialogue generation?

Datasets

Two MI datasets were used:

- AnnoMI: Contains MI conversations with a single coarse-grained MI strategy per utterance.

- BiMISC: Contains MI conversations with multiple fine-grained MI strategies per utterance.
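The difference in annotation granularity affects how a predicted strategy could be scored against the gold labels. An exact match suffices for single-label, AnnoMI-style data, while multi-label, BiMISC-style data calls for a set-based measure. The sketch below assumes micro-averaged F1 for the multi-label case. This is an illustrative choice rather than a protocol taken from the paper, and the strategy names in the example are placeholders.

```python
from typing import List, Set


def single_label_accuracy(predicted: List[str], gold: List[str]) -> float:
    """AnnoMI-style data: one strategy per utterance, so count exact matches."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


def multi_label_f1(predicted: List[Set[str]], gold: List[Set[str]]) -> float:
    """BiMISC-style data: a set of strategies per utterance, so use micro-averaged F1."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    true_pos = sum(len(p & g) for p, g in zip(predicted, gold))
    if true_pos == 0:
        return 0.0
    precision = true_pos / sum(len(p) for p in predicted)
    recall = true_pos / sum(len(g) for g in gold)
    return 2 * precision * recall / (precision + recall)


# Placeholder labels for illustration only.
print(single_label_accuracy(["reflection", "question"], ["reflection", "input"]))  # 0.5
print(multi_label_f1([{"affirm", "reflection"}], [{"reflection"}]))
```

With multiple labels per utterance, a prediction that recovers only some of the annotated strategies earns partial credit rather than none. Exact-match accuracy would hide that distinction.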

Benchmark LLMs

Several prominent LLMs were benchmarked, including Flan-T5-XXL, Vicuna-13B, Qwen-14B, Qwen2-7B, Llama-2-13B, Llama-3-8B, and GPT-4.

Automatic Evaluation Metrics

The quality of generated dialogues was evaluated using metrics such as BLEU, ROUGE, METEOR, BERTScore, Entropy, and Belief.
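Of these metrics, Entropy is commonly computed as the Shannon entropy of the n-gram distribution pooled across all generated responses. Higher values indicate more lexical diversity. A minimal sketch of that common formulation follows. Whitespace tokenization, lowercasing and the default of bigrams are assumptions, and the paper's exact variant may differ.

```python
import math
from collections import Counter
from typing import List


def ngram_entropy(responses: List[str], n: int = 2) -> float:
    """Shannon entropy (in bits) of the n-gram distribution pooled over all responses."""
    counts: Counter = Counter()
    for text in responses:
        tokens = text.lower().split()  # assumption: simple whitespace tokenization
        counts.update(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    total = sum(counts.values())
    if total == 0:
        return 0.0
    # Sum of p * log2(1/p) over every distinct n-gram.
    return sum((c / total) * math.log2(total / c) for c in counts.values())


generated = [
    "How do you feel about cutting back?",
    "What would you like to change first?",
]
print(ngram_entropy(generated, n=2))
```

A model that repeats the same stock phrases concentrates probability on a few n-grams and scores low. Varied phrasing spreads the mass and scores higher, which is why the metric complements overlap-based scores such as BLEU and ROUGE.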

Human Evaluation

Both expert and layperson evaluations were conducted to assess the alignment and quality of generated dialogues.

Outcomes

Empirical Analysis on Automatic Metrics

The results showed that strategy-aware generation effectively instructs LLMs to generate MI-adherent dialogues. GPT-4 consistently achieved the highest scores, but open-source LLMs such as Flan-T5 and Vicuna-13B also performed well.

Role of Strategy in Guiding MI Dialogue Generation (RQ1)

Expert evaluations confirmed that strategy-aware prompts were more effective in guiding the generation of MI-adherent dialogues. Layperson evaluations revealed that while strategy-aware generations align better with MI principles, they may not always resonate as well with lay evaluators in terms of empathy.

CoS Planning as Reasoning Step (RQ2)

Used as an explicit reasoning step, CoS planning significantly improved the LLMs' subsequent generation of MI-aligned dialogue. Expert evaluations showed that dialogues generated with CoS planning had better contextual alignment and closer adherence to MI principles than those produced by the other prompting methods.

Case Study

A case study highlighted the nuanced balance between strategic therapeutic alignment and client perception. It showed that while strategy-aware generations are more aligned with MI principles, they may lack the emotional nuances that laypeople prioritize.

Discussion

Strategy-Aware MI Dialogue Generation

LLMs present a promising solution for generating diverse and coherent MI dialogues, reducing dependency on pre-scripted content. However, ensuring strategic alignment with MI principles is crucial to safeguard against inappropriate outputs.

Challenges of Applying LLMs in MI

Balancing empathetic engagement with strategic alignment is essential for the success of LLM-assisted psychotherapeutic tools. Enhancing LLMs’ ability to accurately understand and plan MI strategies is key to ensuring dialogues remain on course.

Conclusion

This work addresses the challenge of utilizing LLMs in psychotherapy by proposing an approach to guide LLMs in generating MI strategy-aligned dialogues. Extensive experiments and evaluations validate the effectiveness of this approach, highlighting the need for balancing strategic alignment with empathetic engagement.

Limitations

Several limitations were acknowledged, including the need for more diverse datasets, capturing dynamic human interactions, and developing more nuanced evaluation metrics. Future work will focus on refining LLM capabilities and conducting empirical studies to validate the effectiveness of LLMs in live therapeutic settings.