Authors:

Paper:

https://arxiv.org/abs/2408.10831

Introduction

In the realm of deep learning, the scarcity of labeled data in unconventional domains, such as wildlife scenarios, poses a significant challenge. This is particularly true for tasks like 2D pose estimation of animals, where collecting real-world data is often impractical. Zebras, for instance, are not only difficult to capture in diverse poses but also include endangered species like Grévy’s zebra, making data collection even more challenging. The study titled “ZebraPose: Zebra Detection and Pose Estimation using only Synthetic Data” by Elia Bonetto and Aamir Ahmad addresses this issue by leveraging synthetic data generated through a 3D photorealistic simulator. This approach aims to bridge the gap between synthetic and real-world data without relying on sophisticated animal models or pre-trained networks.

Related Work

Synthetic Data Generation Techniques

Traditional synthetic data generation techniques often involve stitching CAD or SMAL-based models over randomized backgrounds. These methods, while useful, suffer from issues like scale differences, incorrect blending, and lighting inconsistencies. Previous works have focused primarily on 2D pose estimation, assuming reliable detection of animals, which is not always feasible in real-world scenarios.

Bridging the Syn-to-Real Gap

To address the syn-to-real gap, various methods have been proposed, including domain adaptation and semi-supervised learning techniques. These methods often rely on large quantities of unlabeled real images or style transfer techniques to enhance the realism of synthetic data. However, these approaches still face challenges in generalizing to real-world images, especially in the context of animal detection and pose estimation.

Photorealistic Simulators

Recent advancements have introduced photorealistic simulators to generate high-quality synthetic data. These simulators, combined with realistic animal models, offer a promising solution to the syn-to-real gap. However, the effectiveness of these simulators in training models for both detection and pose estimation tasks remains underexplored.

Research Methodology

Data Generation

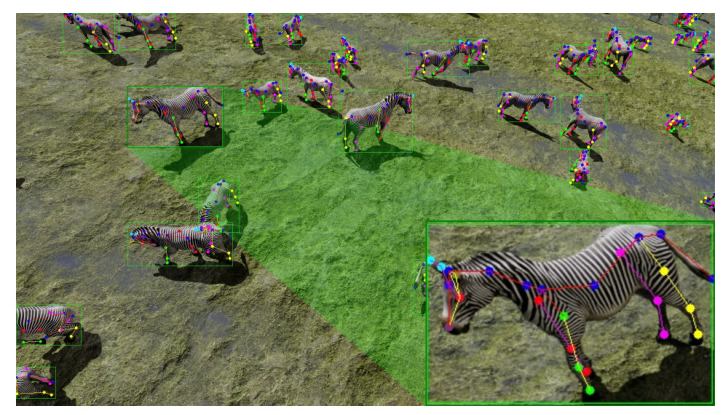

The study employs a 3D photorealistic simulator to generate synthetic data. This involves creating 250 3D zebra models, each randomly scaled and posed using a selected animation sequence. The models are placed in ten different environments from the Unreal Engine marketplace, and the scenes are rendered at 1080p resolution. The generated data includes RGB images labeled with bounding boxes, instance segmentation masks, depth, and vertices locations.

Detection and Pose Estimation Models

For detection, the study uses the YOLOv5 model, while for 2D pose estimation, the ViTPose+ model is employed. The synthetic dataset, named ‘SC’, is augmented through a cropping and scaling procedure to create the ‘SC5K’ dataset. This augmentation aims to cover a wider distribution of viewpoints and improve the generalization of the models to real-world images.

Keypoints Annotation

The synthetic data is annotated with 27 keypoints using the 3D vertices locations of the zebra models. These keypoints include various body parts like hooves, knees, thighs, tail, eyes, ears, neck, nose, and skull. The annotations are projected onto the 2D images using the ground truth camera pose, and the visibility of keypoints is marked based on their position within the instance mask of the animal.

Experimental Design

Datasets

The study utilizes multiple datasets for training and evaluation, including both synthetic and real-world data. The synthetic datasets include ‘SC’ and ‘SC5K’, while the real-world datasets include AP-10K, APT-36K, Zebra-300, Zebra-Zoo, and TigDog Horses. Additionally, aerial images from the R123 and RP datasets are used to evaluate the models’ performance in different viewpoints.

Evaluation Metrics

The detection models are evaluated using the mAP and mAP50 metrics, which measure the average precision over different IoU thresholds. The pose estimation models are evaluated using the percentage of correct keypoints (PCK) with thresholds set to 5% and 10% of the bounding box size.

Training Procedures

The YOLOv5 model is trained for 300 epochs with default learning rates and input image sizes of 1920×1920 and 640×640. The ViTPose+ model is trained for 210 epochs using the Adam optimizer with a learning rate of 5e-4. The models are trained with both randomly initialized weights and pre-trained backbones to evaluate the impact of pre-training on performance.

Results and Analysis

Detection Performance

The results indicate that models trained on the augmented synthetic dataset ‘SC5K’ outperform those trained on the original ‘SC’ dataset. The ‘SC5K’ models achieve better mAP50 scores across various real-world datasets, demonstrating the effectiveness of the cropping and scaling augmentation.

Pose Estimation Performance

The ViTPose+ models trained on synthetic data show promising results in 2D pose estimation, with performance comparable to models trained on real-world data. The inclusion of a minimal amount of real-world data further boosts the performance and generalization capabilities of the models.

Impact of Pre-training

Using pre-trained backbones generally improves the performance of the pose estimation models. However, the study finds that models trained on synthetic data alone can achieve competitive results without pre-training, highlighting the quality and realism of the generated synthetic data.

Overall Conclusion

The study demonstrates that synthetic data generated through a 3D photorealistic simulator can effectively train models for both detection and 2D pose estimation of zebras. The proposed methodology eliminates the need for style transfer techniques and extensive real-world data, making it a viable solution for wildlife monitoring and conservation research. The results suggest that high-quality synthetic datasets can bridge the syn-to-real gap, enabling the development of robust models for unconventional domains.

Future work will focus on generalizing the approach to other animals and extending it to 3D pose and shape estimation tasks. The study also emphasizes the importance of comprehensive testing and diversified training data to ensure the robustness and generalization of deep learning models.