Authors:

Julius Pesonen, Teemu Hakala, Väinö Karjalainen, Niko Koivumäki, Lauri Markelin, Anna-Maria Raita-Hakola, Juha Suomalainen, Ilkka Pölönen, Eija Honkavaara

Paper:

https://arxiv.org/abs/2408.10843

Introduction

Background

The increasing frequency and intensity of wildfires pose significant risks to the environment, ecosystems, and societies. Early detection is crucial to prevent large-scale disasters. Uncrewed aerial vehicles (UAVs) offer a promising solution for rapid deployment and surveying large areas with minimal infrastructure. However, in remote areas, UAVs are limited to on-board computing due to the lack of high-bandwidth mobile networks. This necessitates lightweight, specialized models for real-time detection and localization of wildfires.

Problem Statement

Accurate camera-based localization of wildfires requires segmentation of detected smoke. However, training data for deep learning-based wildfire smoke segmentation is limited. This study proposes a method to train small specialized segmentation models using only bounding box labels, leveraging zero-shot foundation model supervision. The method aims to achieve accurate real-time detection with limited computational resources.

Related Work

UAV-based Wildfire Detection

The concept of UAV-based wildfire monitoring dates back to the early 2000s. Early methods were constrained by the computational resources available at the time and relied on non-learning-based computer vision techniques. More recent neural network architectures, such as R-CNN, Faster R-CNN, YOLO, and FPN, have enabled efficient detection using bounding boxes. However, bounding-box detections lack the pixel-level accuracy required for precise localization.

Segmentation Models

Segmentation models like ERFNet, BiSeNet, and ENet have been proposed for real-time use. While segmentation has been applied to wildfire detection, most studies focus on flame detection rather than smoke, which is visible earlier and from a greater distance. Publicly available wildfire datasets primarily focus on flames, with limited data annotated at the pixel level for smoke detection.

Weakly Supervised Segmentation

Weakly supervised semantic segmentation uses non-pixel-level labels, such as bounding boxes, to train models. Recent methods like BoxSnake, Mask Auto-Labeler, and BoxTeacher have shown promise in using bounding-box supervision for instance segmentation. Knowledge distillation, which transfers knowledge from a larger model to a smaller one, has also been applied to semantic segmentation with positive results.

Foundation Models

Prompt-guided segmentation models like Segment Anything Model (SAM) and High-Quality SAM provide tools for generating pixel-level pseudo-labels from weak labels. These models have been successfully used in various applications, including planetary geological mapping, cell nuclei segmentation, and remote sensing.

Research Methodology

Data Collection

The study used two datasets: the Bounding Box Annotated Wildfire Smoke Dataset Versions 1.0 and 2.0 by AI For Mankind and HPWREN, and a UAV-collected dataset introduced by Raita-Hakola et al. The UAV dataset consists of 5035 bounding box annotated images of boreal forests, with 94% containing visible smoke. The AI For Mankind dataset represents early detection scenarios with smoke observed from a distance.

Pseudo-label Generation

Two approaches were used to generate pseudo-labels for training the inference model:

1. SAM Supervision: Masks were generated using SAM with bounding box prompts.

2. BoxSnake Supervision: A larger model was trained using BoxSnake, and pseudo-masks were obtained by running inference with the trained model.

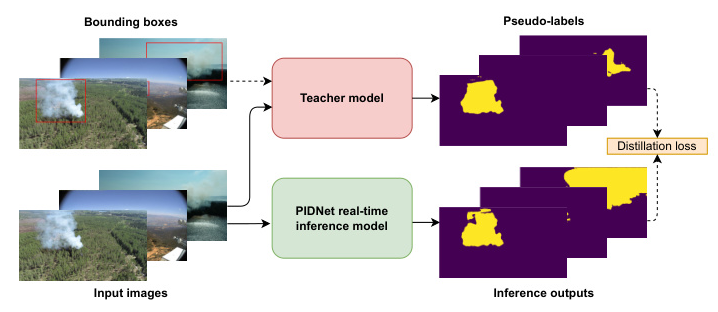
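The box-to-mask step shared by both approaches can be sketched as follows. This is a minimal illustration, not the authors' code: `boxes_to_pseudo_label` and `segment_in_box` are hypothetical names, the teacher is passed in as a plain callable (in practice it would wrap a box-prompted SAM call or the BoxSnake-trained model), and clipping each mask to its box is an assumption made here to keep stray pixels out of the label.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive
Mask = List[List[int]]           # rows of 0/1 values, same size as the image


def boxes_to_pseudo_label(
    height: int,
    width: int,
    boxes: Sequence[Box],
    segment_in_box: Callable[[Box], Mask],
) -> Mask:
    """Merge per-box teacher masks into one binary smoke pseudo-label.

    `segment_in_box` is a hypothetical stand-in for the teacher model; it is
    expected to close over the image and return a full-size 0/1 mask.
    """
    label = [[0] * width for _ in range(height)]
    for box in boxes:
        x0, y0, x1, y1 = box
        mask = segment_in_box(box)
        # Assumption: only pixels inside the prompting box may become smoke.
        for y in range(max(y0, 0), min(y1, height)):
            for x in range(max(x0, 0), min(x1, width)):
                if mask[y][x]:
                    label[y][x] = 1
    return label


# Usage with a dummy teacher that marks every pixel as smoke.
def dummy_teacher(box: Box) -> Mask:
    return [[1] * 6 for _ in range(4)]


pseudo_label = boxes_to_pseudo_label(4, 6, [(1, 1, 3, 3)], dummy_teacher)
```

An image without any bounding box yields an all-background label, which is how smoke-free frames would enter training under this sketch.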

Inference Model Training

The PIDNet model was chosen for its real-time performance with limited computational resources. It was trained in a knowledge distillation scheme in which a larger teacher model (SAM or the BoxSnake-trained model) provides pseudo-labels for the smaller PIDNet student. The training involved four loss functions to optimize segmentation and boundary prediction.
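This summary does not list the four loss terms individually. As a hedged sketch, assuming they are combined as a weighted sum (the symbols $\mathcal{L}_i$ and $\lambda_i$ are notation introduced here, not taken from the paper), the training objective would have the form:

$$
\mathcal{L}_{\text{total}} = \sum_{i=1}^{4} \lambda_i \, \mathcal{L}_i
$$

Under this assumed form, removing a term from training is the same as setting its weight $\lambda_i$ to zero, and reducing its influence means choosing a smaller positive weight.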

Experimental Design

Data Preparation

The datasets were split into training, validation, and test sets. The UAV data was split with a 60-image separation interval to ensure diversity. The AI For Mankind data was split based on location and direction. A total of 80 images were human-labeled with pixel-wise masks for testing.

Training and Validation

The models were trained using the AdamW optimizer with a starting learning rate of 1e-3 and a weight decay coefficient of 1e-2. The best-performing model was chosen based on validation set mIoU. Heavy data augmentation was applied to overcome the limitations of the small dataset and the noisy pseudo-labels.
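Since model selection relies on mIoU, a small sketch of the metric may help. It assumes two classes (0 for background, 1 for smoke) and scores a single prediction/target pair; a full evaluation would typically accumulate the counts over the whole validation set. The function name and the checkpoint scores below are illustrative only.

```python
from typing import Dict, Sequence


def mean_iou(
    pred: Sequence[Sequence[int]],
    target: Sequence[Sequence[int]],
    num_classes: int = 2,
) -> float:
    """Mean intersection-over-union over the classes present in pred or target."""
    intersection = [0] * num_classes
    union = [0] * num_classes
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p == t:
                # Agreement: the pixel is in both the intersection and the union.
                intersection[p] += 1
                union[p] += 1
            else:
                # Disagreement: the pixel enlarges the union of both classes.
                union[p] += 1
                union[t] += 1
    ious = [i / u for i, u in zip(intersection, union) if u > 0]
    return sum(ious) / len(ious) if ious else 0.0


pred = [[1, 1], [0, 0]]
target = [[1, 0], [0, 0]]
score = mean_iou(pred, target)  # background IoU 2/3, smoke IoU 1/2

# Picking the checkpoint with the highest validation mIoU (made-up scores).
validation_miou: Dict[str, float] = {"epoch_10": 0.58, "epoch_20": 0.61, "epoch_30": 0.60}
best_checkpoint = max(validation_miou, key=validation_miou.get)
```

Averaging over classes means a model that predicts only background still scores poorly, because the smoke class contributes an IoU of zero.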

UAV-based System Testing

A lightweight UAV-carried system was implemented with a global shutter RGB camera and an NVIDIA Jetson Orin NX computer. The system was tested at real-world forest-burning events to demonstrate the model’s real-time segmentation capability.

Results and Analysis

Model Performance

The PIDNet models trained using the distillation scheme consistently outperformed the BoxSnake-trained ResNet-50-RCNN-FPN model. The PIDNet-M variant showed the best test set metrics, but PIDNet-S was chosen for practical inference due to its superior speed on the NVIDIA Jetson Orin NX.

Qualitative Results

Qualitative results showed that both the teacher and student models could segment smoke from test images. The SAM-supervised PIDNet-S model was chosen for real-world application because SAM works zero-shot, without training a separate teacher model, which makes the approach easier to adopt.

Ablation Study

An ablation study on the loss functions used for distillation optimization showed that omitting the boundary-dependent loss terms reduced test error. However, because these terms have proved useful in other studies, the optimal setting may lie somewhere between the original weights and zero.

Real-world Performance

The model successfully detected smoke at distances between 1.4 and 9.7 km during real-world tests. The system produced results sufficient for reliable onboard sensing, running at approximately 6 fps while also recording video.

Overall Conclusion

The study demonstrates that real-time smoke segmentation for early wildfire detection is feasible with UAV-carried edge computing resources. The proposed method achieved 63.3% mIoU on a diverse offline dataset and showed promising results in real-world tests. SAM-supervised training of PIDNet-S offers a low-barrier-to-entry framework for training segmentation models with bounding box labels, enabling efficient and accurate wildfire detection.

Future work includes extending the method to other locations with varied data and exploring different types of weakly supervised data. The study opens avenues for further development in practical early wildfire detection and other real-time segmentation tasks with computational resource limitations.