Authors:

Ik Jun Moon, Junho Moon, Ikbeom Jang

Paper:

https://arxiv.org/abs/2408.09952

Introduction

With the increasing interest in skin diseases and aesthetics, the ability to predict and analyze facial wrinkles has become crucial. Facial wrinkles are significant indicators of aging and can be useful in assessing skin conditions, skin care, and early diagnosis of skin diseases. However, manually analyzing extensive collections of images for facial wrinkles is resource-intensive and subjective, leading to variability in research findings.

To address these challenges, this study proposes a deep learning-based approach to automatically segment facial wrinkles. By combining wrinkle data labeled by multiple annotators and leveraging transfer learning, the proposed method aims to achieve high performance in wrinkle segmentation with limited labeled data, significantly reducing the time and cost involved in manual labeling.

Related Work

Deep Learning in Medical Imaging

Deep learning has shown significant promise in medical imaging, particularly in tasks such as image segmentation and classification. Convolutional Neural Networks (CNNs) have been widely used for various medical image analysis tasks, including skin lesion detection and segmentation.

Transfer Learning

Transfer learning involves leveraging knowledge from a pretrained model on a large dataset to improve performance on a related but smaller dataset. This approach is particularly useful in scenarios with limited labeled data, as it allows the model to benefit from the knowledge gained during pretraining.

Weakly Supervised Learning

Weakly supervised learning uses incomplete, inexact, or inaccurate labels to train models. This approach can be beneficial when obtaining fully labeled data is challenging or expensive. In this study, weakly supervised learning is used during the pretraining stage to generate texture masks from face images.

Research Methodology

Dataset

The study utilized the FFHQ (Flickr-Faces-HQ) dataset, which consists of 70,000 high-quality face images captured under various conditions. The images are 1024×1024 in size and were used without any downsampling or preprocessing.

Pretraining and Finetuning

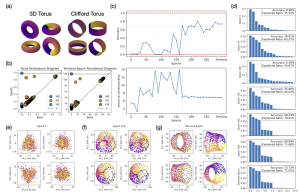

- Weakly Supervised Pretraining:

  - Data Selection: 25,000 images were randomly selected from the FFHQ dataset.

  - Ground Truth Generation: Texture maps were extracted from the face images, and non-facial areas were masked out to produce the final texture masks.

  - Model Training: The U-Net architecture was used to train the model to output texture masks from face images.

- Supervised Finetuning:

  - Data Selection: 500 face images were randomly selected for finetuning.

  - Ground Truth Generation: Wrinkle masks were manually annotated by three experienced annotators. A majority voting mechanism was implemented to mitigate inter-rater variability.

  - Model Training: The pretrained U-Net model was finetuned to classify each pixel of a face image as either a wrinkle or not.
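The majority voting step over the three annotators' masks can be illustrated with a minimal sketch. It assumes binary masks of equal size stored as plain nested lists (in practice these would likely be image arrays); the function name `majority_vote` and the toy masks are hypothetical and not taken from the paper.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = wrinkle pixel, 0 = background


def majority_vote(masks: List[Mask]) -> Mask:
    """Combine binary masks pixel-wise.

    A pixel is labeled 1 only if more than half of the annotators marked it.
    """
    if not masks:
        raise ValueError("at least one mask is required")
    height, width = len(masks[0]), len(masks[0][0])
    threshold = len(masks) / 2
    combined = []
    for y in range(height):
        row = []
        for x in range(width):
            votes = sum(mask[y][x] for mask in masks)
            row.append(1 if votes > threshold else 0)
        combined.append(row)
    return combined


# Toy 2x3 masks from three hypothetical annotators.
annotator_a = [[1, 1, 0], [0, 1, 0]]
annotator_b = [[1, 0, 0], [0, 1, 1]]
annotator_c = [[0, 1, 0], [0, 1, 0]]
consensus = majority_vote([annotator_a, annotator_b, annotator_c])
```

With three annotators, a pixel survives only when at least two of them agree, so a stroke drawn by a single annotator is dropped from the consensus mask.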

Evaluation Metric

The Jaccard Similarity Index (JSI) was used as the evaluation metric, measuring the overlap between the predicted segmentation and the actual label.
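For two binary masks A (prediction) and B (label), the JSI is the size of their intersection divided by the size of their union, |A ∩ B| / |A ∪ B|. The sketch below computes it for masks stored as nested lists. The function name `jaccard_index` is hypothetical, and returning 1.0 when both masks are empty is an assumed convention, not something the paper specifies.

```python
from typing import List

Mask = List[List[int]]  # binary mask: 1 = wrinkle pixel, 0 = background


def jaccard_index(pred: Mask, target: Mask) -> float:
    """Return |pred AND target| / |pred OR target| for two binary masks."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


pred = [[1, 1, 0], [0, 1, 0]]
label = [[1, 0, 0], [0, 1, 1]]
score = jaccard_index(pred, label)  # 2 shared pixels out of 4 in the union
```

Because wrinkles occupy a small fraction of a face image, an overlap-based score of this kind is not inflated by the large number of correctly predicted background pixels.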

Experimental Design

Data Preparation

- Training Data: 80% of the dataset was allocated for training.

- Validation Data: 10% of the dataset was used for validation.

- Testing Data: 10% of the dataset was used for testing.
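An 80/10/10 split of this kind can be sketched as follows. The helper `split_dataset`, the fixed seed, and the use of 500 integer IDs as a stand-in for image files are all assumptions for illustration; the source does not describe how the split was drawn.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(
    items: Sequence[int],
    train_frac: float = 0.8,
    val_frac: float = 0.1,
    seed: int = 0,
) -> Tuple[List[int], List[int], List[int]]:
    """Shuffle items reproducibly and cut them into train/val/test parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


image_ids = list(range(500))  # hypothetical stand-in for image identifiers
train_ids, val_ids, test_ids = split_dataset(image_ids)
```

Fixing the seed keeps the three partitions identical across runs, which matters when comparing models trained with and without pretraining on the same test images.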

Training Pipeline

- Weakly Supervised Pretraining:

  - The model was trained for 300 epochs using texture masks.

- Supervised Finetuning:

  - The model was finetuned for 150 epochs using manually annotated wrinkle masks.

  - Finetuning was performed using varying proportions of the training dataset: 100%, 50%, 25%, and 5%.
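The reduced-data finetuning runs can be set up by drawing a random subset of the training set for each proportion. The sketch below is one plausible way to do this; the helper `subsample`, the seed, and the training-set size of 400 are assumptions, and the source does not say whether the smaller subsets were nested inside the larger ones.

```python
import random
from typing import Dict, List, Sequence


def subsample(train_items: Sequence[int], fraction: float, seed: int = 0) -> List[int]:
    """Draw a random subset containing the given fraction of the training items."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, round(len(train_items) * fraction))
    return random.Random(seed).sample(list(train_items), k)


train_ids = list(range(400))  # hypothetical training-set identifiers
subsets: Dict[float, List[int]] = {
    fraction: subsample(train_ids, fraction)
    for fraction in (1.0, 0.5, 0.25, 0.05)
}
subset_sizes = {fraction: len(ids) for fraction, ids in subsets.items()}
```

Each subset would then be used to finetune one copy of the pretrained model and one copy of a model trained from scratch, so that the two can be compared at every data budget.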

Results and Analysis

Performance Comparison

The proposed method achieved a higher JSI than training exclusively on the manually annotated data (no pretraining). The JSI improvements were 1.92%, 0.35%, 1.06%, and 8.53% when finetuning on 100%, 50%, 25%, and 5% of the training data, respectively.

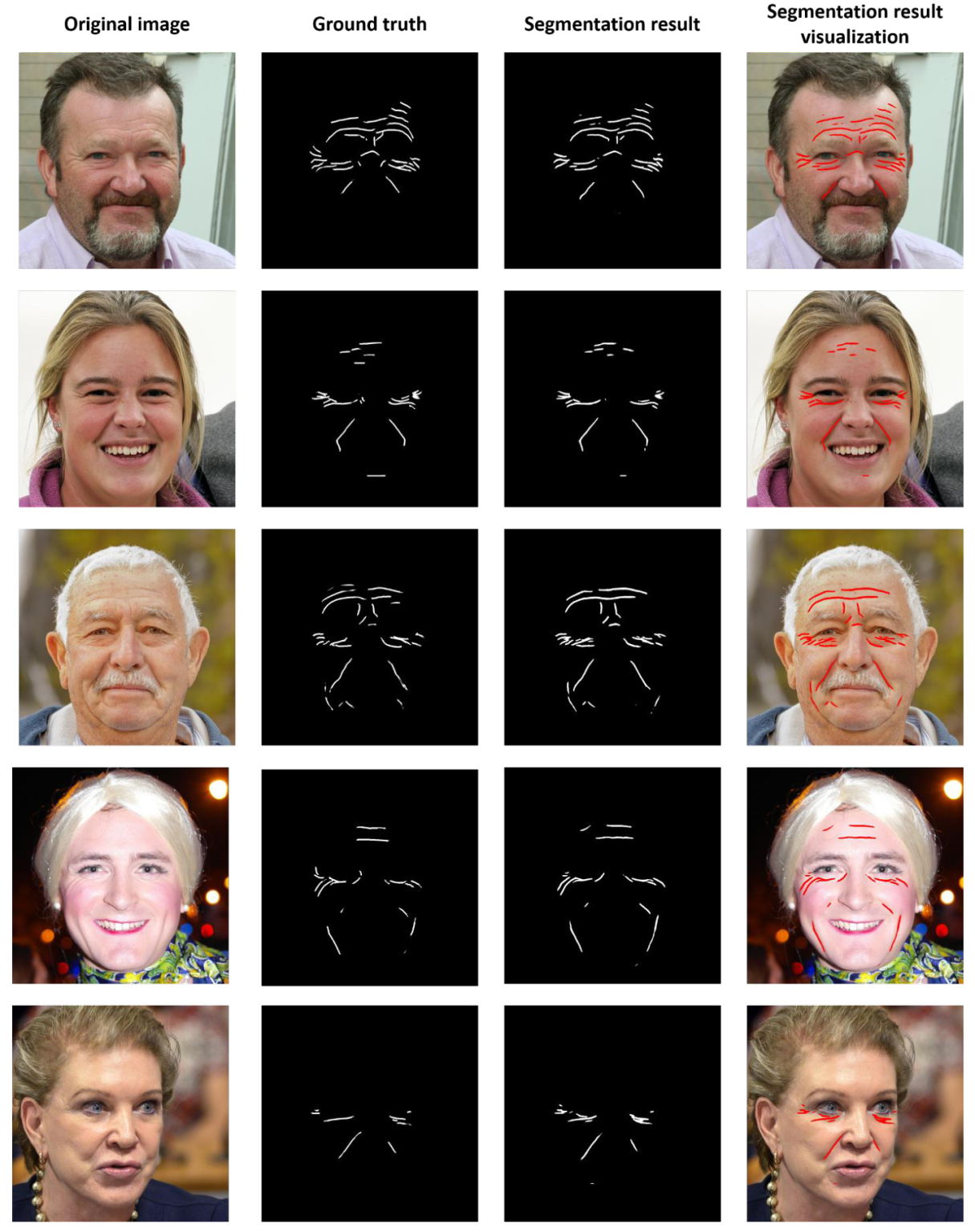

Visual Performance

Qualitatively, the proposed method produced accurate wrinkle segmentation results, supporting the efficacy of the approach.

Comparison with Other Methods

The proposed method also outperformed models pretrained with self-supervised learning techniques, demonstrating a significant performance improvement.

Overall Conclusion

This study presents a reliable ground truth generation strategy and an efficient transfer learning approach for facial wrinkle detection. By combining weakly supervised pretraining with multi-annotator supervised finetuning, the proposed method achieves high performance in wrinkle segmentation, even with limited labeled data. The approach automates intricate and time-consuming tasks of wrinkle analysis, offering substantial advantages over manual methods.

Future work will involve dermatologists in the wrinkle annotation process and investigate reliable label combination methods, such as label smoothing, to further improve the reliability of manual wrinkle masks.