Authors:

Harsh Kumar, Mohi Reza, Jeb Mitchell, Ilya Musabirov, Lisa Zhang, Michael Liut

Paper:

https://arxiv.org/abs/2408.08401

Introduction

The integration of large language models (LLMs) into programming education is changing how students approach writing SQL queries. Students have traditionally relied on web searches for coding help, but LLMs such as ChatGPT now offer an alternative. This study compares the help-seeking behavior of students who use traditional web search with that of students who use LLMs, either a publicly available LLM (ChatGPT) or an instructor-tuned LLM, when writing SQL queries.

Related Work

The rise of LLMs has prompted research comparing them with traditional web search for information retrieval and problem-solving. Previous studies report mixed results on the effectiveness of LLMs versus web search across tasks. LLMs often give more specific and understandable responses, but their accuracy can be inconsistent, especially on complex tasks. In programming education, LLMs can enhance understanding but also carry risks of overreliance and reduced critical thinking.

Methods

Context of Deployment

The study was conducted in a 12-week introductory database systems course at a large, research-intensive university in North America. The course used a flipped classroom approach, encouraging students to seek external resources for self-regulated learning. The study involved 39 volunteer students who participated in randomized interview sessions over Zoom, where they were assigned to use either web search, standard ChatGPT, or an instructor-tuned LLM to solve SQL-writing problems.
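The random assignment described above can be sketched as follows. This is a minimal illustration, not the authors' procedure: the participant IDs, the condition labels, the fixed seed, and the round-robin balancing are all assumptions, and this post does not state how many students ended up in each condition.

```python
import random
from collections import Counter

# Condition labels are illustrative names for the three sources of help.
CONDITIONS = ["web_search", "chatgpt", "instructor_tuned_llm"]

def assign_conditions(participant_ids, seed=0):
    """Shuffle participants, then deal them round-robin into the conditions."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(shuffled)}

# Hypothetical participant IDs; the study had 39 volunteers.
participants = [f"P{n:02d}" for n in range(1, 40)]
assignment = assign_conditions(participants)
print(Counter(assignment.values()))
```

Dealing shuffled participants round-robin keeps group sizes as equal as possible, which is one common design choice; simple per-participant randomization would be an equally valid alternative.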

Experimental Conditions

Three distinct sources of help were considered:

- Web Search: Students used any web search engine to find resources for writing SQL queries.

- ChatGPT: Students used the standard ChatGPT (GPT-3.5 model) without any additional configuration.

- Instructor-tuned LLM: Students used a chatbot based on GPT-3.5, configured with system prompts to provide context-specific assistance without giving direct answers.
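To make the idea of instructor tuning through a system prompt concrete, here is a minimal sketch that builds a chat-style message list with the instructor's instructions placed first. The prompt wording, the `build_messages` helper, and the example question are hypothetical; the study's actual system prompts are not reproduced in this post. The role/content layout follows the message format used by common chat-completion APIs, and no API call is made here.

```python
# Hypothetical system prompt; the study's actual wording is not given here.
TUTOR_SYSTEM_PROMPT = (
    "You are a teaching assistant for an introductory database course. "
    "Help the student reason about their SQL query with hints and guiding "
    "questions. Do not write the complete query for them."
)

def build_messages(student_question, history=None, system_prompt=TUTOR_SYSTEM_PROMPT):
    """Return a chat-style message list with the system prompt first."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history or [])  # earlier turns of the conversation, if any
    messages.append({"role": "user", "content": student_question})
    return messages

messages = build_messages("How do I count orders per customer?")
for m in messages:
    print(m["role"], ":", m["content"][:60])
```

In this sketch the only difference between the standard and the instructor-tuned condition would be the presence of the system message, which is why this kind of tuning is cheap compared with fine-tuning a model.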

Outcome Measures

The study measured the following outcomes:

- Number of Interactions with the Source of Help: Counted as the number of queries sent to the assigned source.

- Number of Edits Made to the Final SQL Query: Counted as the number of changes made to the final SQL query.

- Quality of the SQL Query: Assessed using a grading rubric specified by the course instructor.

- Self-reported Mental Demand: Measured using the Mental Demand subscale from the NASA-TLX questionnaire.
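The two count-based measures above could be computed from session logs roughly as follows. This is a sketch under stated assumptions: the event log format, the `query_sent` event type, and the idea of taking periodic snapshots of the student's answer are hypothetical, and the paper's exact counting rules may differ.

```python
def count_interactions(events):
    """Count queries the student sent to the assigned source of help."""
    return sum(1 for e in events if e["type"] == "query_sent")

def normalize(sql):
    """Collapse whitespace so purely cosmetic changes are not counted as edits."""
    return " ".join(sql.split())

def count_edits(snapshots):
    """Count consecutive snapshots of the SQL answer whose normalized text differs."""
    normalized = [normalize(s) for s in snapshots]
    return sum(1 for prev, cur in zip(normalized, normalized[1:]) if prev != cur)

# Hypothetical session log and answer snapshots, for illustration only.
events = [{"type": "query_sent"}, {"type": "page_opened"}, {"type": "query_sent"}]
snapshots = [
    "SELECT * FROM orders",
    "SELECT *  FROM orders",  # whitespace-only change, not counted
    "SELECT customer_id, COUNT(*) FROM orders",
    "SELECT customer_id, COUNT(*) FROM orders GROUP BY customer_id",
]
print(count_interactions(events), count_edits(snapshots))
```

Query quality and mental demand are not computed this way: the former comes from the instructor's rubric and the latter from a questionnaire response.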

Results

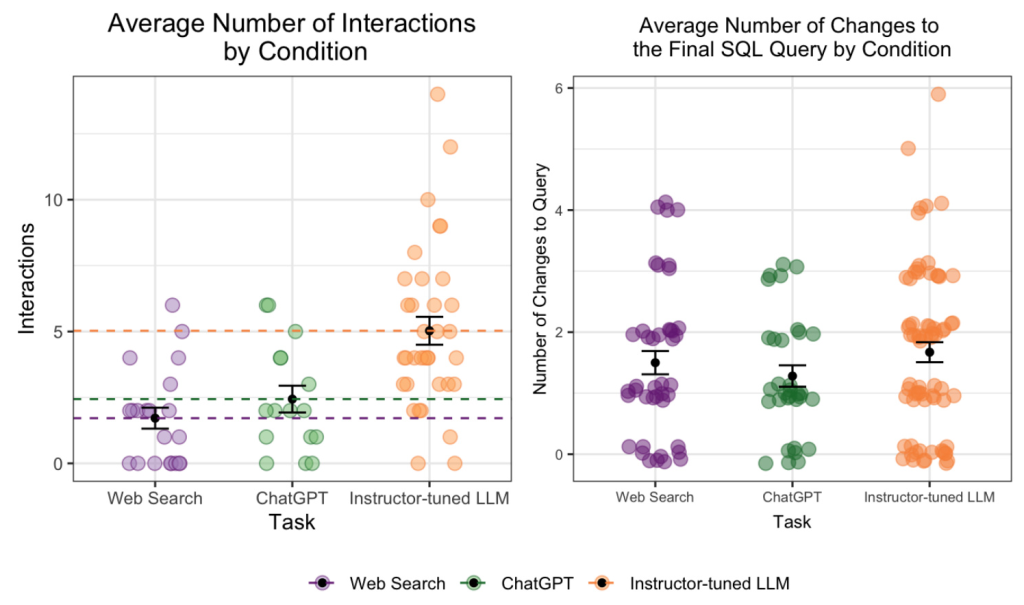

Effect on Number of Interactions

Students interacted significantly more with the instructor-tuned LLM compared to both ChatGPT and web search. This increased interaction is likely due to the system prompts designed to prevent direct answers and encourage engagement.
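For readers unfamiliar with how a claim like "significantly more" is checked, the sketch below runs a two-sided permutation test for a difference in mean interaction counts between two conditions. This post does not say which statistical test the authors used, so the choice of a permutation test is an assumption, and the numbers are made up purely for illustration; they are not data from the study.

```python
import random
from statistics import mean

def permutation_test(group_a, group_b, n_resamples=5000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)  # randomly relabel which group each value belongs to
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed:
            extreme += 1
    # Add-one correction keeps the estimated p-value strictly above zero.
    return (extreme + 1) / (n_resamples + 1)

# Made-up interaction counts for illustration only; NOT data from the study.
tuned_llm = [8, 9, 10, 9, 8, 10]
web_search = [1, 2, 3, 2, 1, 2]
p_value = permutation_test(tuned_llm, web_search)
print(f"estimated p-value: {p_value:.4f}")
```

A small p-value means that a difference as large as the observed one rarely arises when condition labels are shuffled at random. With three conditions, as in the study, pairwise comparisons like this would also need a correction for multiple testing.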

Effect on Number of SQL Query Edits/Changes

There were no significant differences in the number of changes made to the final SQL query across the different conditions. This suggests that while the instructor-tuned LLM required more interactions, it did not lead to more edits.

Effect on the Quality of the Final SQL Query

The quality of the final SQL queries did not significantly differ between conditions, although the LLM conditions showed directionally higher query quality compared to web search.

Self-Reported Mental Demand

Students reported similar levels of mental demand across all conditions, with a slight indication that the instructor-tuned LLM was less mentally demanding.

Discussion

Key Findings

Students interacted more with the instructor-tuned LLM, and this additional interaction did not reduce the quality of their final SQL queries. The increased engagement could potentially lead to longer-term learning benefits, although the study did not measure them. The instructor-tuned LLM also did not increase the mental burden on students, suggesting that it can facilitate learning without overwhelming them.

Broader Implications

The findings highlight the potential of low-cost instructor tuning through system prompts to increase student engagement. Instructor-tuned chatbots can provide personalized support and reduce over-dependency on general-purpose chat agents like ChatGPT. However, the effectiveness of these tools depends on the accuracy of the models and the ability of students to ask the right questions.

Limitations & Future Work

The study’s small sample size and specific participant demographics limit the generalizability of the findings. Future research should involve larger-scale, multi-institutional studies to enhance robustness and explore long-term learning outcomes. Additionally, investigating different types of programming tasks and expanding the study to diverse educational settings could provide further insights into the broader applicability of LLMs in education.

Conclusion

This study compared the use of LLMs (standard and instructor-tuned) with traditional web search for writing SQL queries. The findings suggest that instructor-tuned LLMs can lead to higher engagement without compromising the quality of the final output or increasing mental demand. These results can inform the design of AI-based instructional resources for programming education.

This blog post provides a detailed interpretation of the study “Understanding Help-Seeking Behavior of Students Using LLMs vs. Web Search for Writing SQL Queries,” highlighting the key findings, broader implications, and future research directions.