Authors:

Yankai Chen、Yixiang Fang、Yifei Zhang、Chenhao Ma、Yang Hong、Irwin King

Paper:

https://arxiv.org/abs/2408.09239

Introduction

Bipartite graphs are a fundamental structure in various real-world applications, such as recommendation systems, database retrieval, and document querying. These graphs consist of two disjoint sets of nodes, with edges only between nodes of different sets. The task of Top-N search in bipartite graphs involves selecting the best-matched nodes for a given query node, which is crucial for effective information filtering.

Traditional approaches rely on similarity matching in continuous Euclidean space using vectorized node embeddings. However, these methods face challenges in terms of computation latency and memory overhead, especially with the explosive growth of data. To address these issues, hashing techniques have emerged as a promising solution, converting continuous values into binarized hash codes for efficient similarity computation in Hamming space.

Despite the advantages of hashing, previous studies have encountered significant performance decay. This paper investigates the problem of hashing with Graph Convolutional Networks (GCNs) for effective Top-N search and proposes a novel approach called Bipartite Graph Contrastive Hashing (BGCH+). BGCH+ introduces a dual augmentation approach to enhance the expressivity and robustness of hash codes within a dual self-supervised learning paradigm.

Related Work

Graph Convolutional Networks (GCNs)

GCNs have gained popularity for their ability to capture high-order connection information and enrich node embeddings. Early research focused on spectral-based methods, but these were computationally expensive. Spatial-based GCNs, which aggregate embeddings of neighbors, have become more prevalent due to their scalability. However, GCNs still face challenges in inference efficiency due to the high computational cost of similarity calculations.

Learning to Hash

Hashing techniques aim to reduce computation and storage costs by converting high-dimensional vectors into low-dimensional hash codes. Locality Sensitive Hashing (LSH) and deep learning-based methods have been explored for various tasks, including image retrieval and recommendation. Recent work has integrated GCNs with hashing techniques, but these approaches often fail to capture intermediate semantics and suffer from optimization challenges.

Graph Contrastive Learning

Contrastive learning has shown promise in visual representation tasks and has been adapted for graph data. Traditional methods involve explicit graph structure manipulation, such as node and edge dropout. However, these methods can introduce biases and inefficiencies. Feature-level augmentation offers a more flexible and efficient alternative, enhancing the robustness of learned representations.

Research Methodology

Overview

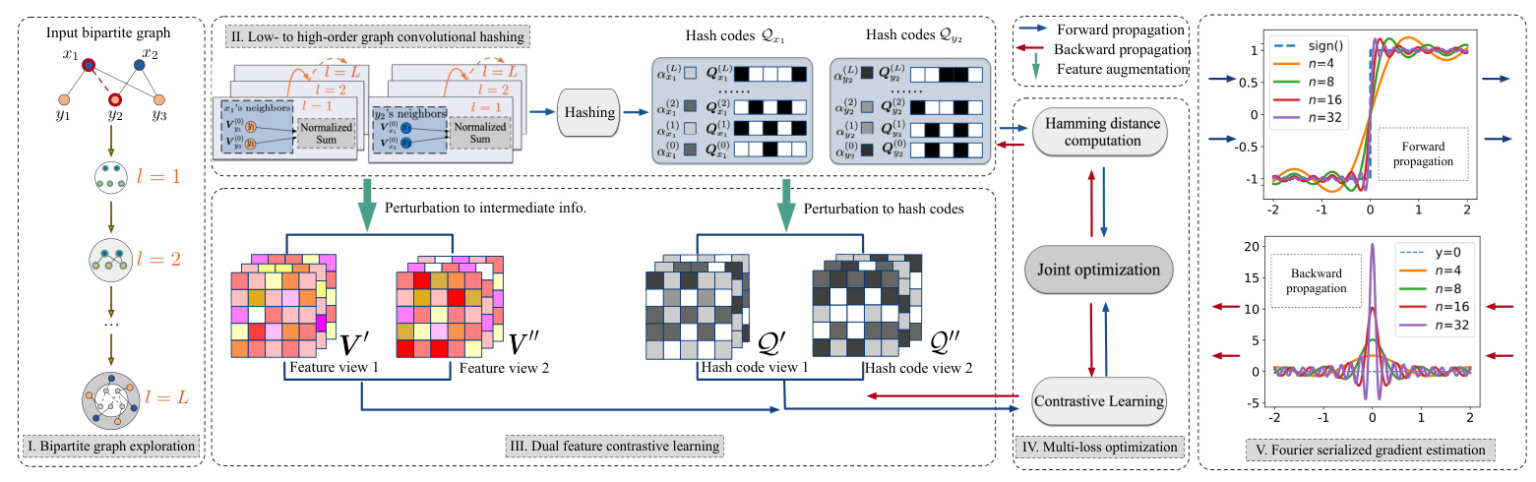

BGCH+ incorporates several key modules to enhance the expressivity and robustness of hash codes:

- Adaptive Graph Convolutional Hashing: Enhances the expressivity of hashed features while ensuring efficient matching in Hamming space.

- Dual Feature Augmentation for Contrastive Learning: Constructs effective data augmentations for both intermediate continuous information and target hash codes.

- Fourier Serialized Gradient Estimation: Introduces Fourier Series decomposition for accurate gradient approximation.

- Efficient Online Matching: Utilizes Hamming distance measurement for accelerated inference.

Adaptive Graph Convolutional Hashing

BGCH+ introduces a layer-wise positive rescaling factor for each node to increase the flexibility and smoothness of learned representations. This approach captures the unique numerical characteristics of embeddings, enhancing the expressivity of hash codes.

Dual Feature Contrastive Learning

BGCH+ employs feature-level augmentations by adding random noise to both continuous embeddings and binarized hash codes. This approach maintains the integrity of the graph structure while introducing controlled perturbations, enhancing the robustness of learned representations.

Fourier Serialized Gradient Estimation

To address the challenges of gradient estimation for the non-differentiable sign function, BGCH+ approximates the sign function using its Fourier Series decomposition. This method provides more accurate gradient approximation, preserving the discriminability of hash codes.

Model Prediction and Optimization

BGCH+ utilizes a multi-loss objective function comprising Bayesian Personalized Ranking (BPR) loss and contrastive learning loss. This design ensures high-quality binarized embeddings and maintains rich relative order information, leading to superior performance in Top-N retrieval tasks.

Experimental Design

Datasets and Evaluation Metrics

The experiments were conducted on six real-world bipartite graph datasets: MovieLens, Gowalla, Pinterest, Yelp2018, AMZ-Book, and Dianping. The evaluation metrics used were Recall@N and NDCG@N, which assess the ranking capability of the models in Hamming space retrieval.

Baselines

BGCH+ was compared with several state-of-the-art hashing-based models (e.g., LSH, HashNet, CIGAR, HashGNN) and full-precision models (e.g., NeurCF, NGCF, DGCF, LightGCN). These baselines represent a range of approaches for object retrieval and recommendation.

Hyperparameter Settings

The models were implemented using Python and PyTorch, with hyperparameters tuned through grid search. The dimension of embeddings was set to 256, and the number of graph convolution iterations was set to 2. The learning rate and other coefficients were optimized for each dataset.

Results and Analysis

Top-N Hamming Space Query

BGCH+ consistently outperformed previous hashing-based models in terms of Recall and NDCG metrics across all datasets. The dual feature contrastive learning paradigm significantly improved performance, highlighting the effectiveness of the proposed approach.

Comparison with Full-Precision Models

BGCH+ achieved competitive performance compared to full-precision models, demonstrating its capability to balance retrieval quality and computational efficiency. This makes BGCH+ a viable alternative for scenarios with limited resources.

Impact of Dual Feature Contrastive Learning

The dual feature contrastive learning approach enhanced the uniformity of learned hash codes, leading to improved retrieval performance. Feature-level augmentation proved to be more effective and efficient than traditional structure manipulation methods.

Ablation Study

The ablation study confirmed the necessity of key components in BGCH+, including adaptive graph convolutional hashing, multi-loss optimization, and Fourier serialized gradient estimation. Each component contributed to the overall performance improvement.

Resource Consumption Analysis

BGCH+ demonstrated significant advantages in terms of training time, inference time, and memory footprint compared to state-of-the-art models. The efficient design of BGCH+ ensures scalability and practicality for large-scale bipartite graphs.

Fourier Gradient Estimation

The Fourier Series decomposition provided accurate gradient estimation, leading to better optimization and retrieval accuracy. This method outperformed other gradient estimators, particularly for sparse bipartite graphs.

Overall Conclusion

BGCH+ represents a significant advancement in the field of bipartite graph hashing, offering a robust and efficient solution for Top-N Hamming space search. The dual feature contrastive learning paradigm and adaptive graph convolutional hashing enhance the expressivity and robustness of hash codes, leading to superior performance in retrieval tasks. The empirical analyses validate the effectiveness of BGCH+ across various datasets, making it a promising approach for real-world applications with limited computational resources.