Authors:

Arpan Mahara, Naphtali D. Rishe, Liangdong Deng

Paper:

https://arxiv.org/abs/2408.08216

Introduction

Generative AI is now widely used across research fields, including healthcare, remote sensing, physics, chemistry, and photography. Among generative methods, Generative Adversarial Networks (GANs) have been particularly successful at image-to-image (I2I) translation. This study investigates the Kolmogorov-Arnold Network (KAN) as a replacement for the Multi-layer Perceptron (MLP) in generative AI, specifically in I2I translation. The proposed KAN-CUT model replaces the two-layer MLP in the existing Contrastive Unpaired Image-to-Image Translation (CUT) model with a two-layer KAN, with the aim of producing more informative features and therefore higher-quality translated images.

Related Work

Unpaired Image-to-Image Translation in GANs

CycleGAN was a foundational work in unpaired I2I translation. It enforces cycle consistency: an image mapped to the other domain and back should reproduce the original. This requires training two generators and two discriminators, one pair per direction, which makes CycleGAN computationally expensive and slow to train. To address these limitations, models such as GCGAN and CUT train in a single direction. CUT, in particular, combines adversarial training with contrastive learning to generate high-quality images.

Contrastive Learning

Contrastive learning, a self-supervised methodology, has been effective in various machine learning tasks. The SimCLR framework demonstrated that contrasting differently augmented views of the same image yields high-quality feature representations. Inspired by SimCLR, the CUT model applies contrastive learning at the patch level and integrates it with GANs to achieve better performance in I2I translation.

Kolmogorov-Arnold Networks (KANs)

KANs, based on the Kolmogorov-Arnold representation theorem, have been reported to offer better accuracy and interpretability than MLPs. They have been applied to various machine learning tasks, including image classification, segmentation, and generation. This study integrates a KAN into the CUT model, creating the KAN-CUT model for unpaired I2I translation.

Proposed Approach

Kolmogorov-Arnold Networks (KAN)

The Kolmogorov-Arnold representation theorem states that a multivariate continuous function on a bounded domain can be written as a finite composition of continuous single-variable functions and addition. KANs build on this by making the single-variable functions learnable and parameterizing them with B-splines, in place of the fixed activation functions and linear weights of an MLP.
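For reference, the theorem is commonly stated in the following form for a continuous function f on the unit cube. The symbols below (n for the input dimension, \phi_{q,p} for the inner functions, \Phi_q for the outer functions) are standard textbook notation and are not taken from this summary:

```latex
f(x_1, \dots, x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \phi_{q,p}(x_p) \right),
\qquad f : [0,1]^n \to \mathbb{R},
```

where every \phi_{q,p} : [0,1] \to \mathbb{R} and every \Phi_q : \mathbb{R} \to \mathbb{R} is continuous. The only multivariate operation is the sum; all nonlinearity lives in the single-variable functions.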

Efficient Two-Layer KAN

The original KAN implementation expands the input tensor to apply activation functions. To improve efficiency, this study proposes a reformulated procedure that applies learnable activation functions directly to the input tensor, avoiding tensor expansion and additional entropy regularization. The activation function is expressed as a combination of basis and spline functions, processed with Gated Linear Units (GLUs) for non-linear transformation.
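To make the "basis plus spline" activation concrete, here is a minimal pure-Python sketch of a single learnable activation evaluated directly on a scalar input. It assumes the form used in the original KAN formulation, a SiLU base function plus a weighted B-spline term; the names `uniform_knots`, `w_base`, and `w_spline`, the grid size, and the spline degree are illustrative choices, not values from the paper. The GLU gating is omitted because this summary does not specify how it is wired in.

```python
import math


def silu(x):
    """SiLU base function: x * sigmoid(x)."""
    return x / (1.0 + math.exp(-x))


def uniform_knots(grid_size, degree, lo=-1.0, hi=1.0):
    """Uniform knot vector on [lo, hi], extended by `degree` knots on each side."""
    h = (hi - lo) / grid_size
    return [lo + (i - degree) * h for i in range(grid_size + 2 * degree + 1)]


def bspline_basis(x, knots, degree):
    """Evaluate every B-spline basis function at x via the Cox-de Boor recursion."""
    # Degree 0: indicator function of each half-open knot interval.
    basis = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
             for i in range(len(knots) - 1)]
    for k in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - k - 1):
            left_den = knots[i + k] - knots[i]
            right_den = knots[i + k + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * basis[i] if left_den else 0.0
            right = ((knots[i + k + 1] - x) / right_den * basis[i + 1]
                     if right_den else 0.0)
            nxt.append(left + right)
        basis = nxt
    return basis


def kan_activation(x, coeffs, knots, degree=3, w_base=1.0, w_spline=1.0):
    """phi(x) = w_base * silu(x) + w_spline * sum_i coeffs[i] * B_i(x).

    `coeffs`, `w_base`, and `w_spline` stand in for the learnable parameters.
    """
    spline = sum(c * b for c, b in zip(coeffs, bspline_basis(x, knots, degree)))
    return w_base * silu(x) + w_spline * spline


# Example: grid of 5 intervals with cubic splines gives 5 + 3 = 8 coefficients.
knots = uniform_knots(grid_size=5, degree=3)
coeffs = [0.1 * i for i in range(8)]
value = kan_activation(0.3, coeffs, knots)
```

In a full layer, one such activation would sit on every input-output connection and the outputs would be summed per output unit; evaluating the bases on the input tensor itself is the kind of step the reformulation above uses to avoid expanding the tensor.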

Formulation of KAN-CUT Model

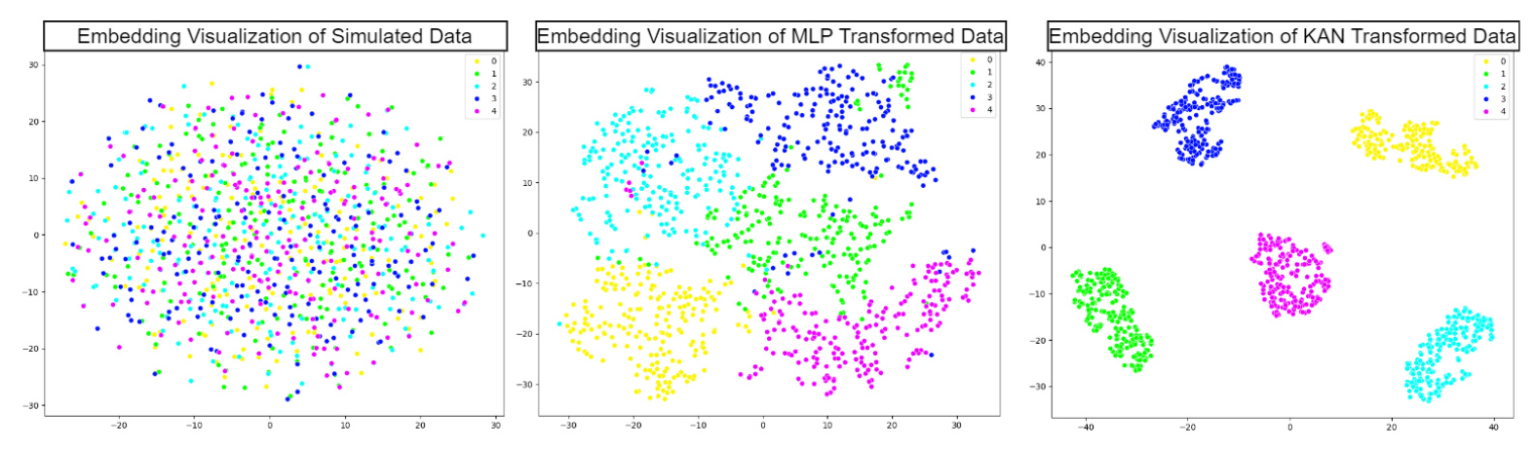

The KAN-CUT model integrates a two-layer KAN into the CUT model, replacing the two-layer MLP. The generator in the KAN-CUT model uses a ResNet-based encoder-decoder architecture. During translation, patches from the input and generated images are processed through the encoder and passed to the two-layer KAN to produce enriched feature representations. Contrastive learning is applied to maximize mutual information between corresponding patches.
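The patch-level contrastive objective can be sketched as a cross-entropy over similarity scores. This is a simplified illustration and not the authors' code: `patch_nce_loss` is a hypothetical name, the features are plain Python lists standing in for the KAN outputs, and the temperature `tau=0.07` is an assumed default. Each query (a patch of the generated image) should match the key at the same location in the input image, with all other keys acting as negatives.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 norm (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(a * a for a in v)) or 1.0
    return [a / norm for a in v]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def patch_nce_loss(queries, keys, tau=0.07):
    """Mean cross-entropy where keys[i] is the positive for queries[i].

    queries: features of patches from the generated image.
    keys:    features of patches at the same locations in the input image.
    tau:     temperature (assumed value, not taken from the paper).
    """
    q = [normalize(v) for v in queries]
    k = [normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [dot(qi, kj) / tau for kj in k]
        # Numerically stable log-sum-exp over the positive and all negatives.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[i]
    return total / len(q)


# Matching patches give a loss near zero; mismatched patches give a large loss.
aligned = patch_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = patch_nce_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

Minimizing this loss pulls corresponding patches together in feature space and pushes non-corresponding ones apart, which is how the mutual-information objective is optimized in practice.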

Adversarial Loss for Realistic Image Generation

To encourage realistic outputs, the KAN-CUT model uses a least-squares adversarial loss. This loss guides the generator to produce images that the discriminator cannot distinguish from real images. The final loss function combines the adversarial loss with the PatchNCE loss, which enforces patch-level correspondence between input and output so that the translation preserves the content of the input image.
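A least-squares adversarial loss replaces the usual log-likelihood terms with squared distances to target labels. The sketch below uses the common convention of target 1 for real images and 0 for generated ones, with a 0.5 factor on the discriminator loss; the function names and the weight `lambda_nce` are hypothetical, and the exact weighting used in KAN-CUT is not given in this summary.

```python
def lsgan_discriminator_loss(d_real, d_fake):
    """Push discriminator scores toward 1 on real images and 0 on generated ones."""
    real_term = sum((s - 1.0) ** 2 for s in d_real) / len(d_real)
    fake_term = sum(s ** 2 for s in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(d_fake):
    """Push discriminator scores on generated images toward 1."""
    return sum((s - 1.0) ** 2 for s in d_fake) / len(d_fake)


def total_generator_loss(adv_loss, nce_loss, lambda_nce=1.0):
    """Adversarial term plus weighted PatchNCE term (lambda_nce is an assumption)."""
    return adv_loss + lambda_nce * nce_loss


# Discriminator scores for a small batch (illustrative numbers only).
d_on_real = [0.9, 0.8]
d_on_fake = [0.2, 0.4]
d_loss = lsgan_discriminator_loss(d_on_real, d_on_fake)
g_loss = total_generator_loss(lsgan_generator_loss(d_on_fake), nce_loss=0.5)
```

Because the penalty grows quadratically with distance from the target, generated samples that the discriminator scores far from "real" still produce useful gradients for the generator.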

Experiments

Datasets

Two well-known datasets were used for evaluation: Horse→Zebra and Cat→Dog. The Horse→Zebra dataset comprises 1067 horse images and 1344 zebra images for training, with 120 horse images and 140 zebra images for testing. The Cat→Dog dataset includes 5000 training images and 500 test images for each category.

Evaluation Metrics

The Fréchet Inception Distance (FID) score was used to evaluate the quality of generated images. A lower FID score indicates higher similarity to real images, representing better quality.
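For reference, FID compares the statistics of Inception-network features extracted from real and generated images, treating each set as a multivariate Gaussian. In the standard definition below, \mu_r, \Sigma_r and \mu_g, \Sigma_g denote the feature mean and covariance of the real and generated sets; this notation is conventional and not taken from this summary:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

The score is zero when the two feature distributions have identical means and covariances, and it grows as they diverge.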

Experimental Environment and Baselines

Experiments were conducted in a Python environment using the PyTorch framework, with computational tasks performed on NVIDIA A100-PCI GPUs. The models were trained for 400 epochs using the Adam optimization algorithm. Baseline models for comparison included CycleGAN, MUNIT, SelfDistance, GCGAN, CUT, and DCLGAN.

Results

The KAN-CUT model outperformed all baseline models on both datasets, achieving an FID score of 40.2 on the Horse→Zebra dataset and 59.55 on the Cat→Dog dataset. A visual comparison of the generated images also favored KAN-CUT over the other models.

Conclusion, Limitation, and Future Work

The KAN-CUT model successfully demonstrated the applicability of KAN in I2I translation, achieving superior performance compared to baseline models. However, the study has limitations, including the focus on only two datasets and the primary goal of demonstrating KAN’s applicability rather than achieving state-of-the-art performance. Future work will involve validating the KAN-CUT model on additional datasets and exploring further improvements in accuracy and efficiency.

Acknowledgement

This material is based in part upon work supported by the National Science Foundation under Grant Nos. CNS-2018611 and CNS-1920182.