Authors:

Niyar R Barman, Krish Sharma, Ashhar Aziz, Shashwat Bajpai, Shwetangshu Biswas, Vasu Sharma, Vinija Jain, Aman Chadha, Amit Sheth, Amitava Das

Paper:

https://arxiv.org/abs/2408.10446

Introduction

Background

The rapid advancement of text-to-image generation systems, such as Stable Diffusion, Midjourney, Imagen, and DALL-E, has significantly increased the production of AI-generated visual content. This surge has raised concerns about the potential misuse of these images, particularly in the context of misinformation. To mitigate these risks, companies like Meta and Google have implemented watermarking techniques on AI-generated images. However, the robustness of these watermarking methods against sophisticated attacks remains questionable.

Problem Statement

This study investigates the vulnerability of current image watermarking techniques to visual paraphrasing attacks. Visual paraphrasing involves generating a caption for a given image and then using an image-to-image diffusion system to create a visually similar image that is free of any watermarks. The study aims to empirically demonstrate the effectiveness of visual paraphrasing attacks in removing watermarks and calls for the development of more robust watermarking techniques.

Related Work

State-of-the-Art Image Watermarking Techniques

Watermarking techniques can be broadly classified into two categories: static (non-learning) and learning-based methods.

Static Watermarking Methods

Static watermarking embeds a watermark into an image using a fixed, non-learned procedure. A representative technique is:

- DwtDctSVD: Combines Discrete Wavelet Transform (DWT), Discrete Cosine Transform (DCT), and Singular Value Decomposition (SVD) to embed watermarks in specific frequency bands.

Static methods of this kind are outdated and easily circumvented.

Learning-Based Watermarking Methods

Learning-based methods use neural networks to embed and detect watermarks. Key techniques include:

- HiDDeN: Embeds a secret message into a cover image using an encoder-decoder architecture.

- Stable Signature: Modifies latent image representations in latent diffusion models to embed watermarks.

- Tree Ring Watermark: Embeds watermarks in the frequency domain of the initial noise vector using Fast Fourier Transform (FFT).

- ZoDiac: Uses pre-trained diffusion models to embed watermarks while maintaining visual similarity.

- Gaussian Shading: Embeds watermarks in the latent space during the diffusion process, preserving image quality.

Traditional De-Watermarking Techniques

Traditional image alteration techniques, such as brightness adjustment, rotation, JPEG compression, and Gaussian noise, can also function as de-watermarking attacks. However, these methods often degrade the overall image quality.
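To make the quality cost of these traditional attacks concrete, here is a minimal sketch of two of them (brightness adjustment and Gaussian noise) applied to a toy grayscale image, with PSNR as a rough quality proxy. The helper names, the toy image, and the choice of PSNR are illustrative assumptions and are not taken from the paper.

```python
import math
import random

def adjust_brightness(image, factor):
    """Scale every pixel by `factor`, clipping to the 8-bit range [0, 255]."""
    return [[min(255.0, max(0.0, p * factor)) for p in row] for row in image]

def add_gaussian_noise(image, sigma, seed=0):
    """Add zero-mean Gaussian noise with standard deviation `sigma`, then clip."""
    rng = random.Random(seed)
    return [[min(255.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row]
            for row in image]

def psnr(original, attacked):
    """Peak signal-to-noise ratio in dB; higher means less visible degradation."""
    n = sum(len(row) for row in original)
    mse = sum((a - b) ** 2
              for row_a, row_b in zip(original, attacked)
              for a, b in zip(row_a, row_b)) / n
    return math.inf if mse == 0 else 10.0 * math.log10(255.0 ** 2 / mse)

# Toy 8x8 grayscale gradient standing in for a watermarked image (hypothetical data).
image = [[float(16 * r + 2 * c) for c in range(8)] for r in range(8)]
mild = add_gaussian_noise(image, sigma=2.0)
harsh = add_gaussian_noise(image, sigma=25.0)
print(psnr(image, mild), psnr(image, harsh))
```

The stronger the perturbation, the lower the PSNR, which is the trade-off noted above: an attack aggressive enough to disturb a watermark also tends to damage the image.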

Research Methodology

Visual Paraphrasing

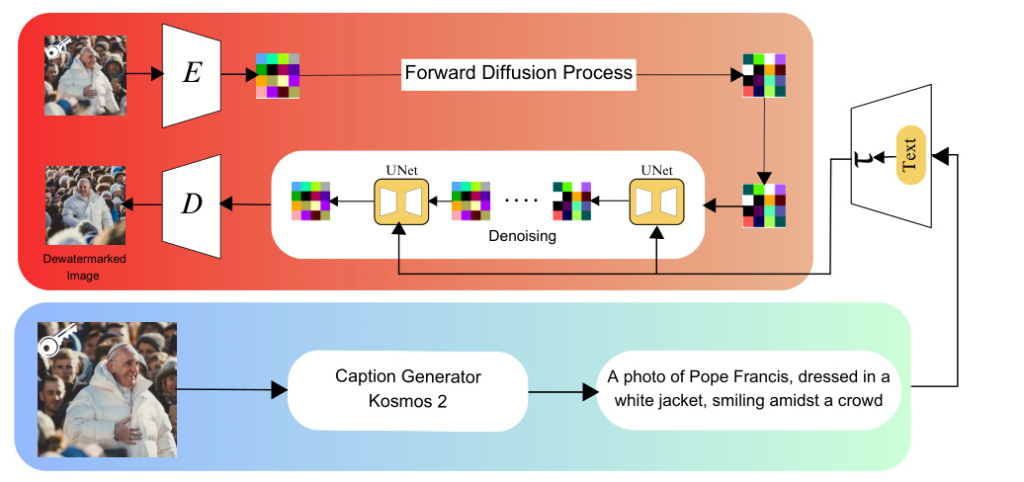

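As defined above, visual paraphrasing proceeds in two steps: a captioning model first describes the watermarked image, and an image-to-image diffusion system then regenerates a visually similar image conditioned on both the original image and that caption. The regenerated image is intended to be free of the watermark.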
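The two-step structure can be sketched as a small function that takes the captioner and the image-to-image model as injected callables. This is only an outline under stated assumptions: `visual_paraphrase`, `fake_captioner`, and `fake_img2img` are hypothetical names, the stubs stand in for real models, and the default strength and guidance scale simply echo the values reported in the human annotation results later in this article.

```python
from typing import Any, Callable

def visual_paraphrase(
    image: Any,
    captioner: Callable[[Any], str],
    img2img: Callable[..., Any],
    strength: float = 0.4,
    guidance_scale: float = 3.0,
) -> Any:
    """Caption the image, then regenerate it conditioned on image and caption."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    caption = captioner(image)  # step 1: textual description of the input
    return img2img(             # step 2: caption- and image-guided regeneration
        prompt=caption,
        image=image,
        strength=strength,
        guidance_scale=guidance_scale,
    )

# Stubs standing in for a real captioning model and a real diffusion pipeline.
def fake_captioner(image):
    return "a placeholder caption"

def fake_img2img(prompt, image, strength, guidance_scale):
    return {"prompt": prompt, "source": image, "strength": strength,
            "guidance_scale": guidance_scale}

result = visual_paraphrase("watermarked.png", fake_captioner, fake_img2img)
print(result)
```

Passing the models in as callables keeps the sketch independent of any particular library; a real setup would wrap an actual captioner and diffusion pipeline behind the same two signatures.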

Generating Caption

The KOSMOS-2 image captioning system is used to generate a textual description of the image. This caption serves as the textual conditioning input for the image-to-image diffusion models.

Image-to-Image Diffusion

The image-to-image diffusion process involves two stages: forward diffusion (adding noise to the image) and reverse diffusion (removing noise to reconstruct the image). The process is guided by the original image and the generated caption.
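The forward (noising) stage can be illustrated with the standard closed-form sample x_t = sqrt(a_bar_t) * x_0 + sqrt(1 - a_bar_t) * eps. The sketch below assumes a DDPM-style linear beta schedule and operates on a short list of numbers instead of image latents; the schedule constants and function names are illustrative assumptions, not the configuration used in the paper.

```python
import math
import random

def alpha_bar_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def forward_diffuse(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(a_bar_t) * x0 + sqrt(1 - a_bar_t) * eps, eps ~ N(0, 1)."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
            for x in x0]

rng = random.Random(0)
alpha_bars = alpha_bar_schedule(1000)
x0 = [1.0, -1.0, 0.5, 0.0]                      # toy stand-in for image latents
slightly_noised = forward_diffuse(x0, 0, alpha_bars, rng)
mostly_noise = forward_diffuse(x0, 999, alpha_bars, rng)
print(slightly_noised)
print(mostly_noise)
```

At an early timestep the sample stays close to the input, while at the last timestep almost no signal remains. Reverse diffusion then denoises from whichever timestep the forward stage stopped at, which is why the amount of added noise governs how much of the original image survives.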

Parameters

- Paraphrase Strength: Determines the extent to which the original image’s features are preserved versus the introduction of new variations.

- Guidance Scale: Controls the extent to which the generated image aligns with the details specified in the text prompt.
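A rough sketch of how these two parameters typically act inside an image-to-image diffusion run follows. It assumes two conventions common in open-source implementations: strength selects the fraction of the denoising schedule that is actually executed, and the guidance scale enters through classifier-free guidance. Whether the paper's pipeline uses exactly these formulas is an assumption, and the function names are hypothetical.

```python
def steps_to_run(num_inference_steps, strength):
    """Number of denoising steps executed for a given strength in [0, 1].

    strength = 0 leaves the input untouched; strength = 1 noises it fully
    and runs the whole schedule, largely ignoring the original image.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must lie in [0, 1]")
    return min(int(num_inference_steps * strength), num_inference_steps)

def guided_noise(noise_uncond, noise_text, guidance_scale):
    """Classifier-free guidance: extrapolate from the unconditional noise
    prediction toward the text-conditioned one."""
    return [u + guidance_scale * (c - u)
            for u, c in zip(noise_uncond, noise_text)]

print(steps_to_run(50, 0.5))                  # half of the schedule
print(guided_noise([0.5], [1.5], 1.0))        # scale 1: plain conditional prediction
print(guided_noise([0.5], [1.5], 3.0))        # scale 3: pushed further toward the text
```

Under these assumptions, a guidance scale of 1 reduces to the ordinary text-conditioned prediction, and larger values weight the caption more heavily at the cost of fidelity to the source image.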

Experimental Design

Evaluation Metrics

- Semantic Distortion: Measured using the CLIP Maximum Mean Discrepancy (CMMD) score, which quantifies the distance between the original and paraphrased images in CLIP embedding space; lower values indicate less distortion.

- Detectability Rate: Assesses the effectiveness of watermark detection methods after visual paraphrasing.
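Assuming CMMD here refers to a maximum mean discrepancy computed over CLIP image embeddings with a Gaussian kernel, the core computation can be sketched as below. The toy 2-D vectors stand in for real CLIP embeddings, the bandwidth `sigma` is arbitrary, and the simple biased estimator is chosen for brevity; none of these details are confirmed by the source.

```python
import math

def rbf_kernel(u, v, sigma):
    """Gaussian RBF kernel between two embedding vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))

def mmd_squared(xs, ys, sigma=1.0):
    """Biased estimate of squared MMD between two sets of embeddings."""
    m, n = len(xs), len(ys)
    k_xx = sum(rbf_kernel(a, b, sigma) for a in xs for b in xs) / (m * m)
    k_yy = sum(rbf_kernel(a, b, sigma) for a in ys for b in ys) / (n * n)
    k_xy = sum(rbf_kernel(a, b, sigma) for a in xs for b in ys) / (m * n)
    return k_xx + k_yy - 2.0 * k_xy

# Toy embeddings (hypothetical): originals, a close paraphrase set, a distant one.
originals = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1]]
near = [[x + 0.05, y + 0.05] for x, y in originals]
far = [[x + 3.0, y + 3.0] for x, y in originals]
print(mmd_squared(originals, near), mmd_squared(originals, far))
```

A paraphrase set whose embeddings sit close to the originals yields a small value, while a set that has drifted semantically yields a large one, which is the sense in which the score measures semantic distortion.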

Experiment Setup

For each attack, the watermark probability post-attack is reported. The success of watermark detection is determined by applying a threshold on the obtained probability. Experiments are conducted on three datasets: MS COCO, DiffusionDB, and WikiArt.
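The thresholding step described above can be sketched in a few lines. The probabilities below are made-up placeholders, and the 0.5 threshold is an assumption; the source does not state which threshold was used.

```python
def detectability_rate(probabilities, threshold=0.5):
    """Fraction of attacked images whose watermark probability still
    meets the detection threshold."""
    if not probabilities:
        raise ValueError("need at least one probability")
    detected = sum(1 for p in probabilities if p >= threshold)
    return detected / len(probabilities)

# Hypothetical post-attack watermark probabilities for four images.
post_attack = [0.9, 0.7, 0.2, 0.4]
print(detectability_rate(post_attack))
```

A lower rate after an attack means the attack removed the watermark from more images.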

Results and Analysis

Semantic Distortion

The analysis reveals a complex relationship between paraphrasing strength and semantic distortion. Low-strength paraphrasing results in minimal semantic distortion but is less effective at removing watermarks. As paraphrasing strength increases, watermark removal becomes more successful, but semantic distortion also increases.

Detectability Rate

The detectability rate decreases as the strength of visual paraphrasing increases. This trend is consistent across various watermarking techniques, though some algorithms demonstrate more resilience than others.

Human Annotation Task

Human annotators rated the acceptability of the paraphrased images. The results indicate that the optimal paraphrase strength is around 0.4 and that the optimal guidance scale values are 1 and 3.

Overall Conclusion

This study empirically demonstrates that existing image watermarking techniques are fragile and susceptible to visual paraphrase attacks. The findings underscore the urgent need for the development of more robust watermarking strategies. The release of the visual paraphrase dataset and accompanying code aims to facilitate further research in this area.

Ethical Considerations

The development of visual paraphrasing methods that can bypass watermarking techniques raises important ethical considerations. The research aims to advance image processing and improve watermarking resilience while mitigating risks of misuse. Responsible disclosure and ethical guidelines are advocated to ensure the research aligns with the highest ethical standards.

By highlighting the brittleness of current watermarking techniques, this study serves as a call to action for the scientific community to prioritize the development of more resilient watermarking methods.