Authors:

Yuchao Liao, Tosiron Adegbija, Roman Lysecky

Paper:

https://arxiv.org/abs/2408.10428

Introduction

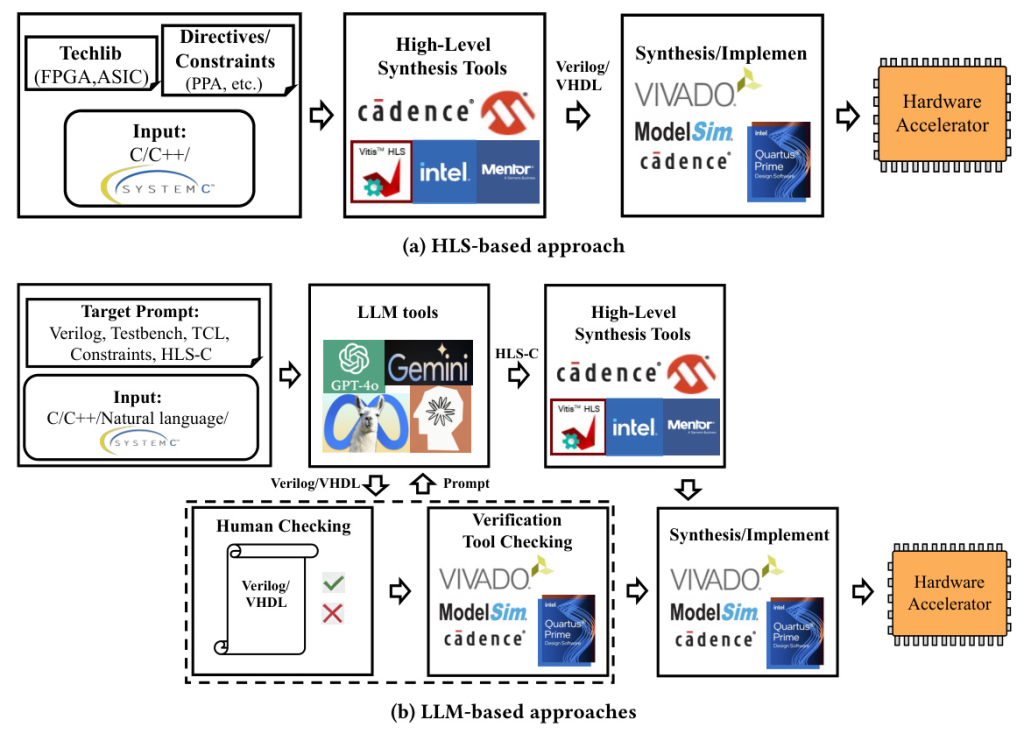

The rapid advancement in technology and the increasing demand for custom hardware accelerators, driven by applications such as artificial intelligence and high-performance computing, necessitate innovative design methodologies. High-Level Synthesis (HLS) has emerged as a valuable approach for designing, synthesizing, and optimizing hardware systems. HLS allows designers to define systems at a high abstraction level, independent of low-level circuit specifics, and utilize HLS tools to produce optimized low-level hardware descriptions.

Despite the advantages of HLS, the tools can still be time-consuming to use and demand considerable expertise. This paper explores the potential of Large Language Models (LLMs) to streamline or replace the HLS process, leveraging their ability to understand natural language specifications and refactor code. The study aims to illuminate the role of LLMs in HLS, identifying promising directions for optimized hardware design in applications such as AI acceleration, embedded systems, and high-performance computing.

Related Work

Taxonomy of LLM Applications in HLS

The application of LLMs to different stages of the HLS process has emerged as a promising research direction. The taxonomy categorizes LLMs by their primary role in HLS: specification generator, code generator, hardware verification assistant, and design space exploration assistant. This classification provides a framework for understanding how LLMs can augment HLS methodologies.

LLM as Specification Generator

LLMs hold promise as specification generators in HLS, translating natural language or higher-level code into HLS-compatible formats. This allows for intuitive and accessible expression of hardware functionality. Challenges persist in mitigating ambiguities inherent in natural language, which can lead to misinterpretations.

LLM as Code Generator

LLMs can generate synthesizable HDL directly from high-level specifications. This automation can boost productivity and reduce errors. The challenge lies in ensuring the quality of the generated code and giving designers control over its structure and style.

LLM as Hardware Verification Assistant

LLMs can assist with hardware verification in HLS by automating the generation of test cases and identifying potential design flaws. This can lead to significant time savings and improved design reliability. However, challenges persist in ensuring the accuracy of LLM-generated test cases and their integration into existing HLS workflows.

LLM as Design Space Exploration Assistant

LLMs are promising in aiding HLS design space exploration (DSE) by suggesting optimizations and exploring design alternatives. Their ability to analyze design constraints and objectives can lead to faster design cycles and innovative solutions.

Research Methodology

Survey of the State-of-the-Art in LLMs for HLS

This section surveys the diverse applications of LLMs in HLS, spanning hardware design automation, software-hardware co-design, and the design of embedded systems. Key research areas include natural-language-to-HDL translation, code generation, optimization and verification, and multimodal approaches. The survey also discusses the input modalities used in the state of the art, such as textual descriptions and pseudocode, and the output modalities, such as HDLs (VHDL, Verilog, SystemVerilog) and HLS-compatible programs (e.g., HLS-C).

LLMs Used for HLS

Recent LLMs such as ChatGPT, Gemini, Claude, and LLaMA have great potential for use in HLS. Fine-tuning models on domain-specific data often yields better outputs within the HLS workflow than using general-purpose models as they are. A consistent theme in the existing literature is the need for human-in-the-loop (HITL) approaches for successful LLM integration in HLS.

Applications

LLMs have shown success in automating the generation of analog/mixed-signal (AMS) circuit netlists from transistor-level schematics, generating RTL code from natural language descriptions, and aiding in writing and debugging HDL code through conversational interactions. They are also being integrated into tools like MATLAB and Simulink to translate high-level design specifications into synthesizable Verilog and VHDL code.

Input and Output Modalities

The versatility of LLMs in HLS stems from their ability to process and generate information across diverse modalities. Textual descriptions, including high-level design specifications, natural language explanations of functionality, and code snippets in languages like C/C++, often serve as primary input modalities. LLMs can transform these textual inputs into HDL such as Verilog or VHDL.

Benchmarking and Evaluation

The evaluation and advancement of LLMs in HLS rely on robust benchmarks and datasets. Several key initiatives have emerged to address this need, including the RTLLM benchmark, RTL-Repo benchmark, VerilogEval, and VHDL-Eval. These benchmarks and datasets are crucial in LLM-driven HLS research, facilitating the evaluation of LLM capabilities and guiding the development of more robust HLS solutions.

Experimental Design

HLS Approach

The general HLS design flow transforms a high-level language input into a synthesizable hardware description (e.g., in Verilog or VHDL). The process starts with describing the desired hardware functionality in a high-level language such as C, C++, or SystemC, followed by synthesis for a specific hardware target, e.g., an FPGA such as the Artix-7 or Zynq UltraScale+. HLS tools offer a range of directives to guide the synthesis process, allowing designers to control aspects of the design such as loop pipelining, loop unrolling, and array partitioning.

LLM-Assisted HLS Approaches

Three LLM-assisted approaches were explored:

- C→LLM→Verilog: Using the LLM to generate synthesizable hardware accelerators in Verilog directly from C benchmark code.

- NL→LLM→Verilog: Using the LLM to generate Verilog code directly from natural language descriptions.

- NL→LLM→HLS-C: Using the LLM to translate natural language descriptions into HLS-compatible input (HLS-C), which the HLS tool then processes to produce the synthesizable Verilog output.
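To make the differences between the three flows concrete, the following is a minimal sketch that models each flow by its input, the artifact the LLM is asked to produce, and whether an HLS tool still runs afterwards. The `Flow` class, the `build_prompt` helper, and the prompt wording are hypothetical illustrations, not the prompts used in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    name: str             # flow label as used in the list above
    input_kind: str       # what the designer hands to the LLM
    llm_output: str       # what the LLM is asked to produce
    needs_hls_tool: bool  # True if an HLS tool must still generate the Verilog


FLOWS = [
    Flow("C→LLM→Verilog", "C code", "Verilog", False),
    Flow("NL→LLM→Verilog", "natural language", "Verilog", False),
    Flow("NL→LLM→HLS-C", "natural language", "HLS-C", True),
]


def build_prompt(flow: Flow, spec: str) -> str:
    """Assemble an illustrative prompt (hypothetical wording) for one flow."""
    return (
        f"Translate the following {flow.input_kind} input into "
        f"{flow.llm_output} suitable for hardware synthesis.\n\n{spec}"
    )


if __name__ == "__main__":
    for flow in FLOWS:
        print(flow.name, "| HLS tool needed afterwards:", flow.needs_hls_tool)
```

Only the third flow keeps the HLS tool in the loop; the first two rely on the LLM for the final Verilog.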

Experimental Setup

To evaluate the three LLM-based approaches and compare them with the baseline HLS approach, nine benchmarks from the PolyBench suite were used. These benchmarks encompass computational kernels common in scientific and engineering applications. ChatGPT-4o served as the LLM, Vitis HLS 2023.2 as the HLS tool, and Vivado 2023.2 as the implementation tool, targeting a Xilinx xc7a200tfbg484-1 FPGA.
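As an illustration of how the baseline HLS run in such a setup might be scripted, here is a small sketch that emits a Vitis HLS Tcl script for one kernel. The Tcl commands (`open_project`, `set_top`, `add_files`, `open_solution`, `set_part`, `create_clock`, `csynth_design`) are standard Vitis HLS commands, and the part name comes from the setup above. The helper name `make_hls_tcl`, the project name, the file names, and the 10 ns clock period are assumptions made for the example, not values reported in the paper.

```python
def make_hls_tcl(top, sources, part="xc7a200tfbg484-1",
                 clock_ns=10.0, project="hls_proj"):
    """Return a Vitis HLS Tcl script that runs C synthesis for one kernel.

    clock_ns and project are illustrative defaults (assumptions).
    """
    lines = [
        f"open_project {project}",
        f"set_top {top}",
    ]
    # Register every source file of the kernel with the project.
    lines += [f"add_files {src}" for src in sources]
    lines += [
        'open_solution "solution1"',
        f"set_part {{{part}}}",
        f"create_clock -period {clock_ns} -name default",
        "csynth_design",
        "exit",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical kernel and file name, for illustration only.
    print(make_hls_tcl("gemm", ["gemm.c"]))
```

The generated script could then be passed to the tool in batch mode, for example with `vitis_hls -f run_hls.tcl`, where `run_hls.tcl` is whatever file the script was saved to.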

Results and Analysis

Number of Prompts for LLM-Based Approaches

The number of prompts required for each file type (HLS-C, Verilog, TCL, testbench, and XDC) to construct a complete hardware accelerator from C benchmarks was tracked. The complexity of the benchmark and the designer’s growing familiarity with LLM interaction were key factors in determining the number of prompts needed for successful Verilog generation.
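The bookkeeping described above can be sketched as a per-benchmark, per-file-type tally. The log format (one `(benchmark, file_type)` pair per prompt sent) and the function name `count_prompts` are assumptions for illustration; the paper does not describe how its counts were recorded.

```python
from collections import Counter

# File types named in the text above.
FILE_TYPES = ("HLS-C", "Verilog", "TCL", "testbench", "XDC")


def count_prompts(log):
    """Tally prompts per benchmark and file type.

    log: iterable of (benchmark, file_type) pairs, one per prompt sent
         (a hypothetical logging format).
    Returns a dict mapping benchmark name to a Counter keyed by file type.
    """
    counts = {}
    for benchmark, file_type in log:
        if file_type not in FILE_TYPES:
            raise ValueError(f"unknown file type: {file_type}")
        counts.setdefault(benchmark, Counter())[file_type] += 1
    return counts
```

Summing a benchmark's counter with `sum(counts[name].values())` gives the total number of prompts spent on that benchmark.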

Place and Route Results

The simulation and implementation results for the LLM-based and HLS-based approaches were compared. The evaluation metrics were execution cycles, resource utilization (FFs, LUTs, slices, DSPs, and BRAMs), total power consumption, and critical path delay. For most benchmarks, the LLM-based approaches improved resource utilization, execution cycles, and power consumption relative to the HLS baseline, while critical path delay remained a weak point.
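One simple way to compare an LLM-based result against the HLS baseline on these metrics is the percentage change per metric, sketched below. The function name, the metric keys, and the numbers in the usage example are placeholders invented for illustration; they are not results from the paper.

```python
def relative_change(baseline, candidate):
    """Percentage change of each metric relative to the baseline.

    Negative values mean the candidate uses less (fewer cycles, fewer LUTs,
    less power, shorter delay). Metrics with a zero baseline yield None,
    since a percentage change is undefined there.
    """
    result = {}
    for name, base in baseline.items():
        if base == 0:
            result[name] = None
        else:
            result[name] = (candidate[name] - base) * 100.0 / base
    return result


if __name__ == "__main__":
    # Placeholder values only, NOT measurements from the paper.
    hls = {"luts": 1000, "dsps": 0, "power_w": 0.5}
    llm = {"luts": 800, "dsps": 2, "power_w": 0.75}
    for metric, change in relative_change(hls, llm).items():
        print(metric, change)
```

Reporting `None` for a zero baseline avoids a division by zero when, for example, the baseline design uses no DSPs at all.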

Overall Conclusion

This paper has explored the application of Large Language Models (LLMs) in High-Level Synthesis (HLS), evaluating their potential to transform hardware design workflows. The findings reveal that LLM-based approaches can significantly enhance the efficiency of the HLS process, demonstrating notable improvements in resource utilization, execution cycles, and power consumption for most benchmarks compared to traditional HLS tools. However, challenges remain in ensuring the quality and optimization of LLM-generated code, particularly regarding critical path delays and the complexity of initial prompt interactions. Additionally, the substantial energy consumption associated with training and utilizing LLMs raises concerns about the overall energy efficiency of their integration into HLS workflows. Despite these challenges, the promising results suggest that with further refinement and research, LLMs could play a pivotal role in the future of hardware design automation, offering a powerful tool to streamline and optimize the HLS process.