Authors:

Saptarshi Neil Sinha, Holger Graf, Michael Weinmann

Paper:

https://arxiv.org/abs/2408.06975

Introduction

Accurate scene representation is crucial for various applications, including architecture, the automotive industry, advertising, and design. The three RGB color channels sample the visible spectrum only coarsely, which limits how faithfully a scene can be reproduced. Multi-spectral scene capture and representation overcome this limitation by recording light and reflectance spectra at higher spectral resolution. This is particularly important for applications such as virtual prototyping, predictive rendering, and spectral scene understanding.

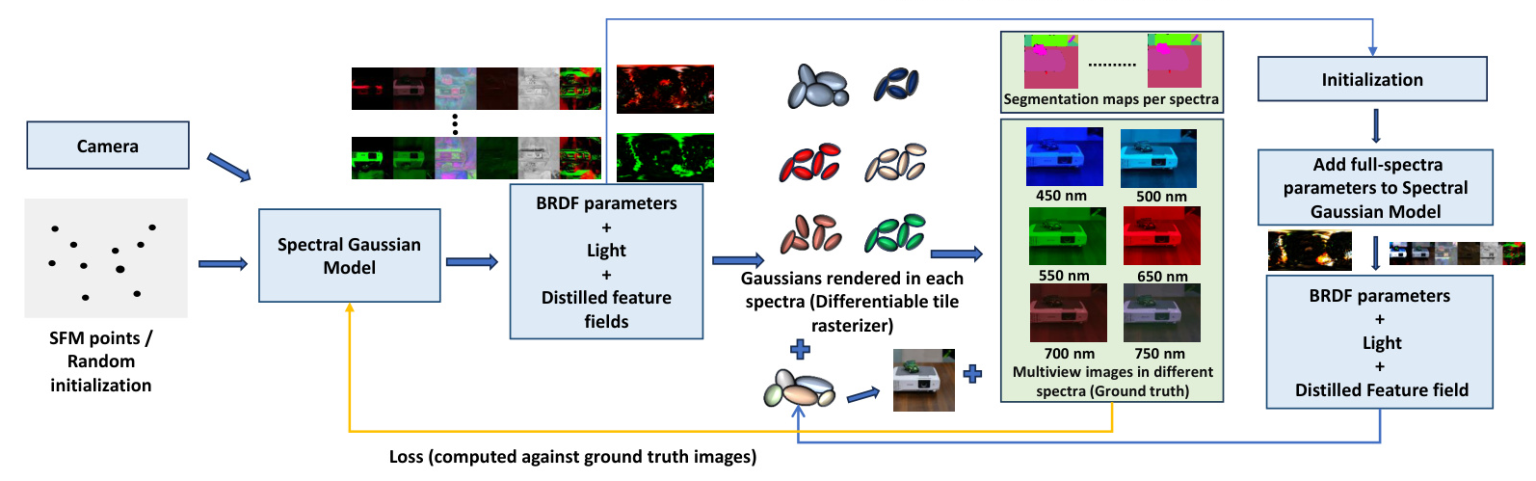

In this paper, we introduce a novel cross-spectral rendering framework based on 3D Gaussian Splatting (3DGS). Our approach generates realistic and semantically meaningful splats from registered multi-view spectrum and segmentation maps. We enhance the representation of scenes with multiple spectra, providing insights into underlying materials and segmentation. Our improved physically-based rendering approach for Gaussian splats estimates reflectance and lighting per spectrum, enhancing accuracy and realism.

Related Work

Learning-based Scene Representation

Recent advancements in learning-based scene representations combined with volume rendering techniques have enabled the generation of photo-realistic novel views. Neural Radiance Fields (NeRF) represent scenes using a neural network that predicts local density and view-dependent color for points in the scene volume. Despite their success, NeRFs lack interpretability and require dense grid evaluations for surface information extraction, limiting real-time applications.

Point-based neural rendering techniques, such as Point-NeRF, merge precise view synthesis from NeRF with fast scene reconstruction abilities. Recently, 3D Gaussian Splatting has been introduced as a state-of-the-art learning-based scene representation, surpassing existing implicit neural representation methods in quality and efficiency. However, extending these representations to the spectral domain remains an open challenge.

Radiance-based Appearance Capture

Several NeRF extensions focus on modeling reflectance by separating visual appearance into lighting and material properties. These approaches predict environmental illumination and surface reflectance properties, even under varying lighting conditions. Notable contributions include Ref-NeRF, which enhances reflectance prediction accuracy, and GaussianShader, which improves neural rendering in scenes with reflective surfaces.

Sparse Spectral Scene Understanding

Gaussian splatting-based semantic segmentation frameworks, such as Gaussian Grouping and LangSplat, use foundation models like Segment Anything to segment scenes. These methods enable precise and efficient open-vocabulary querying within 3D spaces. Our framework leverages Gaussian Grouping for accurate semantic segmentation of spectral scenes, providing valuable information about regions visible in specific spectral ranges.

Spectral Renderers

Spectral rendering engines, such as ART, PBRT, and Mitsuba, simulate real-world spectral data. GPU-based spectral renderers, like Mitsuba 2 and PBRT v4, leverage GPU acceleration for faster computations. Our approach adopts a sparse spectral rendering technique using multi-view spectrum maps, enabling faster computations while preserving necessary information for realistic spectral scene rendering.

Methodology

Spectral Gaussian Splatting

We propose an end-to-end spectral Gaussian splatting approach that enables physically-based rendering, relighting, and semantic segmentation of a scene. Our method builds upon the Gaussian splatting architecture and leverages GaussianShader for accurate BRDF parameter and illumination estimation. By employing Gaussian Grouping, we group 3D Gaussian splats that carry similar semantic information.

Our framework uses a Spectral Gaussian model to predict BRDF parameters, distilled feature fields, and light per spectrum from multi-view spectrum maps. This combination enhances BRDF estimation by incorporating spectral information, supporting applications in material recognition, spectral analysis, reflectance estimation, segmentation, illumination correction, and inpainting.

Spectral Appearance Modelling

To support material editing and relighting, we use an enhanced representation of appearance by replacing spherical harmonic coefficients with a shading function. This function incorporates diffuse color, roughness, specular tint, normal information, and a differentiable environment light map to model direct lighting. The rendered color per spectrum of a Gaussian sphere is computed by considering its diffuse color, specular tint, direct specular light, normal vector, and roughness.
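The shading function described above can be illustrated with a minimal sketch. It assumes a GaussianShader-style combination in which each band's color is the diffuse term plus the specular tint times the direct specular light, and it omits any tone mapping. The function names, the toy environment lookup `toy_env_light`, and its per-band scaling are hypothetical stand-ins, not the authors' implementation.

```python
def reflect(view, normal):
    """Mirror the unit view direction (surface -> camera) about the unit normal."""
    d = sum(v * n for v, n in zip(view, normal))
    return [2.0 * d * n - v for v, n in zip(view, normal)]


def toy_env_light(direction, roughness, band):
    """Hypothetical stand-in for a differentiable environment light map lookup.

    Light comes from above (+z); higher roughness blends the sharp lookup
    towards a constant value. The per-band scale is an arbitrary assumption.
    """
    sharp = max(direction[2], 0.0)
    blurred = 0.5
    band_scale = 1.0 / (1 + band)
    return band_scale * ((1.0 - roughness) * sharp + roughness * blurred)


def shade_band(diffuse, tint, normal, view, roughness, band):
    """Color of one Gaussian in one band: diffuse + tint * direct specular light."""
    specular = toy_env_light(reflect(view, normal), roughness, band)
    return diffuse + tint * specular


def shade_spectrum(diffuse, tint, normal, view, roughness):
    """Apply the shading function independently to every spectral band."""
    return [
        shade_band(d, t, normal, view, roughness, band)
        for band, (d, t) in enumerate(zip(diffuse, tint))
    ]


if __name__ == "__main__":
    colors = shade_spectrum(
        diffuse=[0.2, 0.1],      # one diffuse value per band
        tint=[0.5, 0.4],         # one specular tint per band
        normal=[0.0, 0.0, 1.0],
        view=[0.0, 0.0, 1.0],
        roughness=0.0,
    )
    print(colors)
```

Because every input except the geometry (normal, view direction, roughness) is stored per band, editing a material or swapping the environment light only changes the arguments of this function, which is what makes relighting possible in such a setup.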

Spectral Semantic Scene Representation

Per-spectrum segmentation maps enable sparse scene representation, allowing detailed identification of specific regions of interest and detection of attributes like material composition or texture. These maps are beneficial for tasks like inpainting and statue restoration, where spectral information is crucial for accurate results. Our framework uses Gaussian Grouping to generate a per-spectrum segmentation of the splats, ensuring consistent mask identities across different views.

Combined (Semantic and Appearance) Spectral Model

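Combined with the original 3D Gaussian Splatting loss on image rendering, the loss for a single spectral band \lambda in fully end-to-end training is given by:

\[ L_{\text{render},\lambda} = (1 - \gamma) L_{1,\lambda} + \gamma L_{\text{D-SSIM},\lambda} + \gamma_{2d,\lambda} L_{2d,\lambda} + \gamma_{3d} L_{3d,\lambda} \]

The first two terms are the 3DGS image reconstruction losses; the last two are the weighted 2D and 3D segmentation losses from Gaussian Grouping. The total loss sums over all n_\lambda spectral bands:

\[ L_{\text{render},\text{total}} = \sum_{\lambda=1}^{n_\lambda} L_{\text{render},\lambda} \]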
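As a rough illustration of how the per-band losses combine, the sketch below evaluates the weighted sum for each band and adds the results. It takes the four loss terms as already-computed scalars. The default `gamma=0.2` mirrors the D-SSIM weight commonly used in 3DGS and is an assumption here, as are the placeholder weights `gamma_2d` and `gamma_3d` and both function names.

```python
def render_loss_band(l1, d_ssim, l2d, l3d, gamma=0.2, gamma_2d=1.0, gamma_3d=1.0):
    """Weighted loss for one spectral band.

    l1, d_ssim : image reconstruction terms for this band
    l2d, l3d   : 2D and 3D segmentation terms for this band
    The weights are illustrative placeholders, not values from the paper.
    """
    return (1.0 - gamma) * l1 + gamma * d_ssim + gamma_2d * l2d + gamma_3d * l3d


def render_loss_total(per_band_terms, **weights):
    """Sum the per-band losses over all spectral bands."""
    return sum(render_loss_band(*terms, **weights) for terms in per_band_terms)


if __name__ == "__main__":
    # One (l1, d_ssim, l2d, l3d) tuple per spectral band; values are made up.
    bands = [
        (0.10, 0.20, 0.05, 0.01),
        (0.20, 0.10, 0.00, 0.00),
    ]
    print(render_loss_total(bands))
```

Since the total is a plain sum, every band contributes gradients to the shared Gaussian geometry, while the appearance parameters of each band are driven mainly by that band's own terms.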

Spectral Scene Editing

Our framework extends scene editing techniques into the spectral domain, enabling object deletion, in-painting, and style-transfer. By leveraging semantic information present in any of the spectrum maps, we can achieve precise and context-aware modifications. This capability is particularly significant in fields like cultural heritage, where retrieving color information from specific spectral bands enables accurate restoration of missing color details throughout the full spectrum.

Experiments

Baseline Techniques Used for Comparison

We compare our approach with several state-of-the-art variants of Neural Radiance Fields (NeRF) and Gaussian splatting techniques, as well as their extensions to the spectral domain.

Datasets

We use synthetic and real-world multi-spectral videos for comparison with SpectralNeRF. Additionally, we conduct a comparative analysis using the cross-spectral NeRF dataset and create a synthetic multi-spectral dataset from the Shiny Blender dataset and the synthetic NeRF dataset.

Implementation Details

Evaluations were conducted on an NVIDIA RTX 3090 graphics card. We ran 30,000 iterations for most scenes and 40,000 iterations for a few specific scenes.

Quantitative Analysis

Quantitative analysis was performed on all datasets, computing PSNR, SSIM, and LPIPS for all camera views. Our method outperforms existing spectral methods for both multi-spectral and cross-spectral data.
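Of the three metrics, PSNR is simple enough to sketch directly. The example below computes PSNR from the mean squared error for one band and averages it over bands. It assumes pixel values normalized to [0, 1] and flattened into lists; the function names and the averaging over bands are illustrative choices, not a description of the authors' evaluation code.

```python
import math


def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if not rendered or len(rendered) != len(reference):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - g) ** 2 for r, g in zip(rendered, reference)) / len(rendered)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_psnr_over_bands(rendered_bands, reference_bands):
    """Average the per-band PSNR over all spectral bands (an assumed convention)."""
    scores = [psnr(r, g) for r, g in zip(rendered_bands, reference_bands)]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    rendered = [[0.5, 0.5], [0.2, 0.8]]    # two bands, two pixels each
    reference = [[0.4, 0.6], [0.3, 0.7]]
    print(mean_psnr_over_bands(rendered, reference))
```

SSIM and LPIPS depend on windowed statistics and a learned network respectively, so in practice they are taken from an existing library rather than reimplemented.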

Qualitative Analysis

We conducted a qualitative comparison between our method and Cross-spectral NeRF using the dino and penguin datasets. Our method demonstrates superior performance in reconstructing scene appearance and accurate rendering of specular effects.

Ablation Study

We conducted ablations by eliminating the warm-up iterations introduced to enhance reflectance and light estimations. The results indicate that incorporating information from other spectra leads to improved performance metrics for the rendered output.

Limitations

Our framework requires co-registered spectrum maps, and the registration process can be complex and time-intensive. Additionally, the shading model used is fixed. Future research can explore integrating alternative deep learning algorithms for end-to-end training on non-co-registered spectrum maps and improving encoding methods to accommodate a larger number of spectra.

Conclusion

We presented 3D Spectral Gaussian Splatting, a cross-spectral rendering framework that generates realistic and semantically meaningful splats from registered multi-view spectrum and segmentation maps. Our approach enhances scene representation by incorporating multiple spectra, providing valuable insights into material properties and segmentation. Future work can focus on improving the accuracy of lighting and reflectance estimation and integrating a registration process for end-to-end training of non-co-registered spectrum maps.