Authors:

Shuqi He、Jun Zhuang、Ding Wang、Jun Song

Paper:

https://arxiv.org/abs/2408.06665

Introduction

Graph Neural Networks (GNNs) have become essential tools for node classification tasks in graph-structured networks, finding applications in various domains such as financial transactions, e-commerce, e-health systems, and real estate markets. Despite their powerful capabilities, GNNs face significant challenges due to topological vulnerabilities and weight instability in graph-structured networks. These issues can lead to decreased classification performance and model instability.

Topological Vulnerability

Topological vulnerability refers to the significant impact on model output caused by minor changes in the node connections (i.e., the topology) of graph-structured data. GNNs update node representations by aggregating information from neighboring nodes, where changes in node features may be propagated and amplified. This can lead to information loss or miscommunication due to slight topological changes.

Weight Sensitivity

Weight sensitivity indicates that GNNs are highly responsive to variations in weight initialization and optimization processes. Noise and outliers in the input data can impact weight updates, leading to unstable model performance. If the training data contains bias or noise, the model may overfit these undesirable patterns, resulting in increased weight sensitivity.

Existing methods, such as Graph Convolutional Networks (GCN), Graph Sampling and Aggregation (GraphSAGE), and Graph Attention Networks (GATv2), primarily focus on aggregating data from neighboring nodes. However, they often overlook information from non-adjacent nodes, limiting their ability to respond to topological changes and weight fluctuations.

RW-NSGCN: Proposed Method

To address the limitations of current graph structure network analysis methods, we propose a new approach called Random Walk Neural Sampling Graph Convolutional Network (RW-NSGCN). RW-NSGCN introduces a negative sampling mechanism based on the random walk algorithm to effectively utilize information from non-adjacent nodes. By integrating the Random Walk with Restart (RWR) and PageRank (PGR)-based negative sampling, this model combines global and local information, reduces noise interference, reassesses node importance, and identifies key nodes, thereby improving network stability.

Selection of Negative Samples

We propose a method to identify and protect important nodes by calculating the shortest path and combining scores.

First, by calculating the shortest path length between node pairs, we can determine the relationship chain length between two nodes. Then, we generate a set of reachable nodes for each node at different path lengths. This step helps identify the set of nodes that have specific path relationships with a node, understand path diffusion, and analyze the node’s position within the local structure and its relation to other nodes.

GCN Based on Label Propagation with DPP Sampling

By integrating Determinantal Point Processes (DPP) with Graph Convolutional Networks (GCN), we effectively capture the feature associations between nodes and communities while ensuring node diversity, thus improving defense capabilities. The model uses a DPP kernel matrix to measure the association strength between node pairs.

Experiment

This study explores the topological vulnerabilities and weight instability present in Graph Neural Networks (GNN) during classification tasks within graph-structured networks. To address these challenges, we propose a negative sampling method that combines random walk, PageRank, and Determinantal Point Processes (DPP) sampling to improve the robustness of graph neural networks.

Datasets

We tested the model’s baseline performance on the Cora and CiteSeer datasets and extracted subgraphs from PubMed to evaluate weight perturbations and critical link attacks.

Baselines

We compared RW-NSGCN with several models, which can be broadly categorized into four classes:

1. Aggregation-based Models: GCN, GraphSAGE, GATv2, PGCN.

2. Robust Representation and Sampling Techniques: MCGCN, D2GCN, SDGCN.

3. Heterogeneous and Multi-Relational Graph Models: R-GCN.

4. Over-smoothing Mitigation and Depth Enhancement: MAD, DGN.

Experiment Settings

In this experiment, we assessed the effectiveness of the model on the Cora and CiteSeer datasets and extracted subgraphs from the PubMed dataset to introduce artificial topological and weight perturbations for robustness testing. Each dataset underwent 200 training iterations, and model parameters were selected from the best-performing epoch for testing.

Metrics

Accuracy

Accuracy is used to assess the performance of a classification model, indicating the proportion of correctly classified instances within the dataset.

Mean Average Distance (MAD)

The Mean Average Distance (MAD) calculates the average absolute difference between vectors and measures the overall variability through the cosine distance, reflecting the dispersion of the data.

Experiment Results

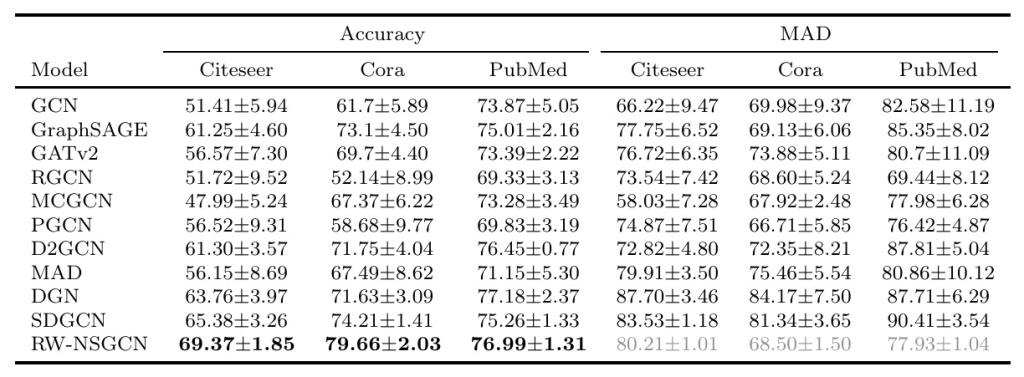

To validate the generalizability of the model, Table 2 presents the accuracy and Mean Average Distance (MAD) of various models on the Citeseer, Cora, and PubMed datasets.

Graph Structure Perturbations

We designed two types of attacks—topological perturbation and weight perturbation—to evaluate the model’s robustness and stability under such conditions.

Comparative Experiments with State-of-the-Art Models under Attack Conditions

To evaluate the robustness of the RW-NSGCN model against adversarial attacks, we conducted comparative experiments with leading state-of-the-art models.

Ablation Experiment

To better understand the RW-NSGCN, we conducted ablation experiments to evaluate its effectiveness.

Sensitivity Analysis of Maximum Non-Neighbor Distance

The comparison of model accuracy under different parameter settings (L = 5 and L = 6) on the Cora and Citeseer datasets demonstrates the superiority of the L = 5 setting.

Conclusion

This paper introduces a novel approach that combines Restart Random Walk (RWR) and PageRank algorithms for negative sampling and employs a Graph Convolutional Network (GCN) based on Determinantal Point Processes (DPP) to address the topological vulnerabilities and weight instability present in Graph Neural Network (GNN) classification tasks. Experimental evaluations demonstrate that RW-NSGCN excels in addressing topological and weight perturbations in graph structures, showing strong robustness and outperforming current state-of-the-art models.