Authors:

Ruei-Che Chang, Yuxuan Liu, Lotus Zhang, Anhong Guo

Paper:

https://arxiv.org/abs/2408.06632

Introduction

Images are a crucial part of our daily lives, serving various purposes such as work, social engagement, and entertainment. However, blind and low-vision (BLV) individuals often face significant barriers when it comes to image editing. Traditional image editing tools require visual evaluation and manipulation, making them inaccessible to BLV users. To address this issue, the researchers developed EditScribe, a prototype system that enables non-visual image editing through natural language verification loops powered by large multimodal models (LMMs).

Abstract

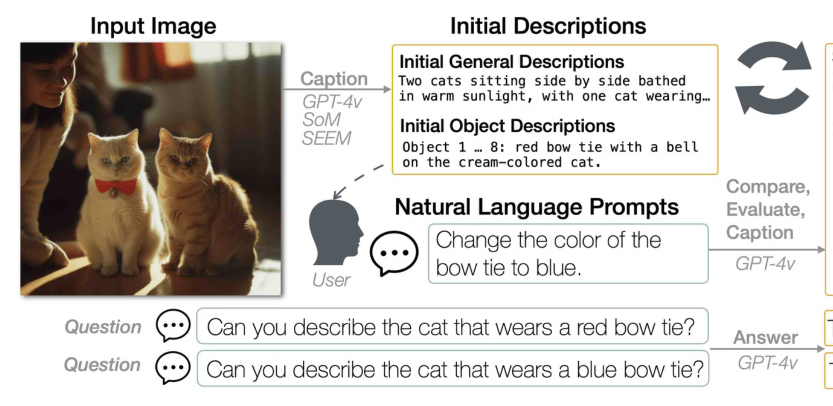

EditScribe allows BLV users to comprehend image content through initial general and object descriptions and specify edit actions using natural language prompts. The system performs the image edit and provides four types of verification feedback: a summary of visual changes, AI judgment, and updated general and object descriptions. Users can ask follow-up questions to clarify and probe into the edits or verification feedback before performing another edit. A study with ten BLV users demonstrated that EditScribe supports non-visual image editing effectively.

Related Work

Visual Content Authoring Accessibility

Research has shown substantial interest from the BLV community in digital creative activities, including photography, videos, presentation slides, and more. However, BLV individuals face immense access barriers in visual content authoring, such as limited understanding of visual editing standards and insufficient information about visuals.

BLV Individuals’ Access to Images

High-quality image descriptions are crucial for BLV users to perceive visual information. Automated image descriptions and object detection tools have become increasingly available, but state-of-the-art AI models still produce inaccurate results. Therefore, providing enough information for users to notice potential undesired outcomes is essential.

AI-assisted Image Editing

Recent advances in computer vision and multimodal models have introduced vast opportunities for easing image content authoring. However, most AI-based image editing tools target sighted users, and only a limited number of accessibility research studies have explored how these tools may support BLV individuals.

EditScribe

EditScribe leverages natural language verification loops to support BLV people in understanding and editing images non-visually. The system interprets the user’s natural language prompt into visual edits and converts the resulting visual changes back into textual feedback for the user to review and confirm.

Scenario Walkthrough

Amanda, a blind user, wants to make a public post asking for help finding her lost cat, Elsa. She uses EditScribe to remove her other cat, blur herself for privacy, increase the brightness of Elsa, change the color of Elsa’s bow tie, and add her phone number to the image. Amanda confirms each edit through consistent feedback from EditScribe.

Natural Language Verification Loops

EditScribe uses LMMs to communicate between the user and the image. The system provides four types of verification feedback:

- Summary of Visual Changes: Describes what changed in the image, simulating how a sighted person editing the image would report the result.

- AI Judgment: Assesses both visual and textual modifications to evaluate the success of the edit.

- Updated General Descriptions: Offers an independent perspective on the new image.

- Updated Object Descriptions: Provides detailed visual inspections of each object.
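
One way to picture the four feedback types is as a single record that the system returns after each edit. The sketch below is only an illustration: the class name, field names, and message layout are assumptions, not EditScribe's actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class VerificationFeedback:
    """Hypothetical container for the four feedback types listed above."""

    summary_of_changes: str
    ai_judgment: str
    general_description: str
    # Maps an object name (e.g. "cat") to its updated description.
    object_descriptions: dict[str, str] = field(default_factory=dict)

    def as_chat_message(self) -> str:
        """Flatten the feedback into one plain-text block for a screen reader."""
        lines = [
            f"Summary of visual changes: {self.summary_of_changes}",
            f"AI judgment: {self.ai_judgment}",
            f"Updated general description: {self.general_description}",
            "Updated object descriptions:",
        ]
        for name, description in self.object_descriptions.items():
            lines.append(f"  {name}: {description}")
        return "\n".join(lines)


feedback = VerificationFeedback(
    summary_of_changes="The bow tie changed from red to blue.",
    ai_judgment="The edit appears successful.",
    general_description="A white cat wearing a blue bow tie sits on a sofa.",
    object_descriptions={"cat": "white, sitting", "bow tie": "blue"},
)
print(feedback.as_chat_message())
```

Keeping the four items separate, rather than merging them into one paragraph, would let a user skip to the type of feedback they trust most for a given edit.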

Cross-modal Grounding Pipeline

EditScribe generates initial general and object descriptions for the user. The user can perform edits with natural language prompts and get verification feedback after each edit. The system classifies user prompts as either questions or edit instructions and generates the corresponding verification feedback.
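
The routing step described above can be sketched as follows. The keyword heuristic here is a deliberately simple stand-in so the example runs on its own; it is not how EditScribe classifies prompts, and `classify_prompt`, `handle_prompt`, and the two callbacks are hypothetical names.

```python
from enum import Enum


class PromptKind(Enum):
    QUESTION = "question"
    EDIT = "edit"


# Assumption: a toy keyword list standing in for a model-based classifier.
QUESTION_STARTERS = (
    "what", "where", "which", "who", "how",
    "is", "are", "does", "did", "can",
)


def classify_prompt(prompt: str) -> PromptKind:
    """Label a prompt as a question or an edit instruction."""
    text = prompt.strip().lower()
    if not text:
        raise ValueError("prompt must not be empty")
    first_word = text.split()[0]
    if text.endswith("?") or first_word in QUESTION_STARTERS:
        return PromptKind.QUESTION
    return PromptKind.EDIT


def handle_prompt(prompt, answer_question, apply_edit):
    """Send the prompt to the matching handler and return its result."""
    if classify_prompt(prompt) is PromptKind.QUESTION:
        return answer_question(prompt)
    return apply_edit(prompt)


print(classify_prompt("What color is the bow tie?"))
print(classify_prompt("Blur the person on the left"))
```

Separating classification from handling means a question never triggers an edit by accident, which matters when the user cannot visually check whether the image changed.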

Image Edit Actions

EditScribe supports five edit actions: blurring an object, removing an object, changing the color of an object, adjusting the brightness of an object, and adding text to the image. These actions cover editing tasks that BLV people commonly want to perform.
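
To make the object-level nature of these actions concrete, the sketch below lists the five actions as an enum and implements one of them, brightness adjustment, on a masked region. It assumes a toy representation (a grayscale image as nested lists and a boolean mask of the same shape); the names and the representation are illustrative, not taken from EditScribe.

```python
from enum import Enum


class EditAction(Enum):
    """The five edit actions named above; the identifiers are hypothetical."""

    BLUR = "blur"
    REMOVE = "remove"
    CHANGE_COLOR = "change_color"
    ADJUST_BRIGHTNESS = "adjust_brightness"
    ADD_TEXT = "add_text"


def adjust_brightness(pixels, mask, factor):
    """Scale grayscale values where the mask is True, clamped to 0-255.

    Returns a new grid; the input grids are left unchanged.
    """
    return [
        [
            min(255, max(0, round(value * factor))) if selected else value
            for value, selected in zip(pixel_row, mask_row)
        ]
        for pixel_row, mask_row in zip(pixels, mask)
    ]


image = [[100, 200], [50, 10]]
object_mask = [[True, True], [False, False]]  # the object occupies the top row
brighter = adjust_brightness(image, object_mask, 1.5)
print(brighter)
```

Only the masked pixels change, which mirrors the idea of editing one object (for example, brightening Elsa) while leaving the rest of the image untouched.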

EditScribe Web Interface

The EditScribe interface shows the images before and after the most recent edit, an image labeled with masks and indexes for debugging purposes, and an accessible chat with verification feedback. Users can enter their prompts and questions using natural language or undo/redo their edits.
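
Undo and redo are commonly built on two stacks of image states. The following is a minimal sketch of that general pattern, not a description of EditScribe's implementation; `EditHistory` and its methods are hypothetical, and strings stand in for image states.

```python
class EditHistory:
    """Two-stack undo/redo over successive image states."""

    def __init__(self, initial_state):
        self._undo_stack = [initial_state]  # last item is the current state
        self._redo_stack = []

    @property
    def current(self):
        return self._undo_stack[-1]

    def apply(self, new_state):
        """Record a new edit; any previously undone edits are discarded."""
        self._undo_stack.append(new_state)
        self._redo_stack.clear()

    def undo(self):
        """Step back one edit, if any, and return the current state."""
        if len(self._undo_stack) > 1:
            self._redo_stack.append(self._undo_stack.pop())
        return self.current

    def redo(self):
        """Reapply the most recently undone edit, if any."""
        if self._redo_stack:
            self._undo_stack.append(self._redo_stack.pop())
        return self.current


history = EditHistory("original")
history.apply("other cat removed")
history.apply("person blurred")
print(history.undo())
print(history.redo())
```

In a non-visual workflow, a reliable undo is what makes it safe to try an edit, read the verification feedback, and back out if the feedback suggests the result is wrong.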

User Study

A user study with ten BLV participants was conducted to understand how natural language verification loops support image editing. The study included five sessions: a tutorial, performing individual edit actions, making a flyer to find a missing cat, making a flyer for recruiting a craftsman, and editing participants’ own images.

Findings

Task Performance

Participants were able to complete most tasks, but with varying confidence. They were generally confident about changing the color of the wall and adding text, but occasionally encountered confusing verification feedback in other tasks.

Perception of EditScribe

Participants found EditScribe promising for everyday scenarios but expressed a need for more edit actions and finer control over image editing. They appreciated the ability to edit images non-visually and had ideas for supporting everyday scenarios.

Prompting Strategies

Participants found it intuitive to use natural language to specify prompts and ask follow-up questions. They developed strategies for creating prompts with varying levels of granularity and used follow-up questions to verify the results of their edits.

Verification Feedback

Participants found all four types of verification feedback useful depending on the context. They developed different strategies for consuming verification feedback and noted occasional discrepancies among the feedback.

Final Edited Images

Participants were generally willing to use the final edited images depending on the scenario but expressed the need for further validation from other sources. They employed various strategies to verify the content of images, including using different image captioning models or applications.

Discussion and Future Work

Enhancing Verification Feedback Loops

There are opportunities to make verification feedback more informative and personalized to BLV users. Supporting customization, elaborating on specific visual effects, and incorporating detailed spatial descriptions are essential. The system could also become more effective by building a model of the user and initiating follow-up questions to elicit user needs and goals.

Supporting Richer Edit Actions

Participants expressed the need for finer controls and more edit actions. EditScribe’s flexible structure can support more edit actions and allow users to specify particular values for visual effects. Future work could explore ways to describe global or partial style changes in verification feedback.

Limitations of the Study

The study's insights may not represent the full range of perspectives in the BLV community. Future studies could explore broader experiences and perspectives through field studies or deployment.

Conclusion

EditScribe demonstrates the concept of natural language verification loops in image editing. The system supports five specific edit actions and allows BLV users to input their editing instructions in natural language. A user study with ten BLV people showed that EditScribe supports non-visual image editing effectively. Future work could enhance verification feedback, support richer edit actions, and explore broader experiences and perspectives in the BLV community.

Acknowledgments

The researchers thank the anonymous reviewers and all the participants in the study for their suggestions, as well as Andi Xu for helping facilitate the user studies.