Authors:

Alessandro Berti, Mayssa Maatallah, Urszula Jessen, Michal Sroka, Sonia Ayachi Ghannouchi

Paper:

https://arxiv.org/abs/2408.07720

Introduction

Process Mining (PM) is a data science discipline that extracts process-related insights from event data recorded by information systems. Techniques in PM include process discovery, conformance checking, and predictive analytics. Large Language Models (LLMs) have shown promise as PM assistants, capable of responding to inquiries and generating executable code. However, LLMs struggle with complex tasks requiring advanced reasoning. This paper proposes the AI-Based Agents Workflow (AgWf) paradigm to enhance PM on LLMs by decomposing complex tasks into simpler workflows and integrating deterministic tools with LLMs’ domain knowledge.

Related Work

Process Mining on LLMs

Previous work has explored textual abstractions of PM artifacts that let LLMs respond to inquiries, as well as the translation of inquiries into executable SQL statements. Various PM tasks, such as semantic anomaly detection and root cause analysis, have been implemented on LLMs. Several implementation paradigms have been proposed, including direct provision of insights, code generation, and hypothesis generation.

Connection to Traditional Process Mining Workflows

Scientific workflows, such as those based on RapidMiner, Knime, or SLURM, have been used in PM to increase reproducibility and standardize experiments. AgWf(s) pursue a different goal: ensuring the feasibility of the overall pipeline by adopting a divide-et-impera (divide-and-conquer) approach and using the right tool for each task. Unlike traditional PM workflows, AgWf(s) are non-deterministic by nature.

Methodology

AI-Based Agents Workflows

An AI-Based Agents Workflow (AgWf) is defined as a tuple containing deterministic tools (F) and AI-based tasks (T). Running the workflow involves selecting tools for tasks and executing the tasks to produce the final response. Different tasks can be associated with different agents, each with specific skills. The structure of the workflow is static, but the execution of tasks and the selection of tools are non-deterministic.
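The tuple can be pictured as a small data structure. The following is a minimal sketch, not the paper's formal definition: only F (tools) and T (tasks) come from the text above, while the field names `task_tools` and `order`, the `run` method, and the stub functions are assumptions made for illustration. Real tasks would call an LLM and would pick a tool non-deterministically; here they are plain functions so the sketch runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Tool = Callable[[str], str]              # deterministic: same input, same output
Task = Callable[[str, List[Tool]], str]  # AI-based in practice; stubbed below


@dataclass
class AgWf:
    tools: Dict[str, Tool]            # F: deterministic tools
    tasks: Dict[str, Task]            # T: AI-based tasks
    task_tools: Dict[str, List[str]]  # assumed: tools each task may select from
    order: List[str]                  # assumed: static execution order of tasks

    def run(self, inquiry: str) -> str:
        text = inquiry
        for name in self.order:
            available = [self.tools[t] for t in self.task_tools.get(name, [])]
            text = self.tasks[name](text, available)
        return text


def count_words(text: str) -> str:
    """Hypothetical deterministic tool."""
    return f"words={len(text.split())}"


def describe(text: str, tools: List[Tool]) -> str:
    # A real task would let an LLM choose a tool; this stub takes the first one.
    facts = tools[0](text) if tools else "no tool"
    return f"{text}\n[describe] {facts}"


def answer(text: str, tools: List[Tool]) -> str:
    return f"{text}\n[answer] done"


wf = AgWf(
    tools={"count_words": count_words},
    tasks={"describe": describe, "answer": answer},
    task_tools={"describe": ["count_words"]},
    order=["describe", "answer"],
)
result = wf.run("Where are the bottlenecks?")
```

Keeping the tools as ordinary functions and the tasks as callables that receive the candidate tools mirrors the split between deterministic and AI-based components described above.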

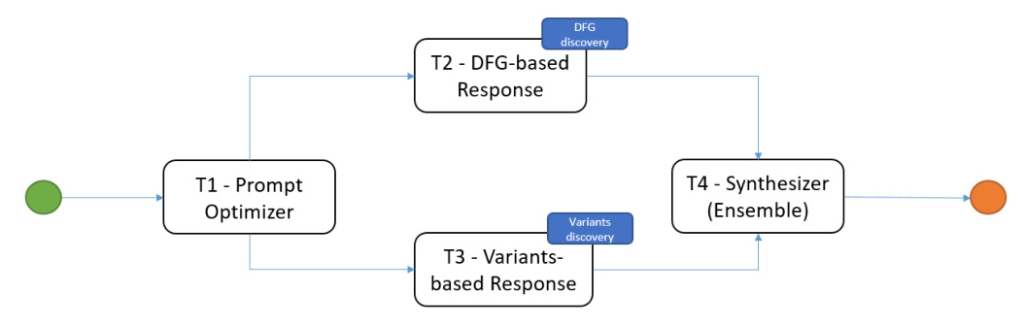

AgWf Running Example

An example AgWf exploits two different abstractions (the directly-follows graph and the process variants) to respond to a user's inquiry. The individual results are merged by an ensemble, potentially leading to better results. The workflow has clear start and end tasks, while the interleaved tasks in between can be executed in different orders. Each task appends its result to the input string, so the text handed to the final task contains the original inquiry together with all intermediate results.
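The running example can be approximated in a few lines. This is a sketch under stated assumptions: the toy event log, the function names, and `stub_llm` (a placeholder for a real LLM call) are all hypothetical, and the two abstractions are computed with the standard library rather than with a process mining toolkit.

```python
from collections import Counter
from typing import List

Log = List[List[str]]  # toy event log: one list of activities per case


def dfg_abstraction(log: Log) -> str:
    """Deterministic tool: textual directly-follows graph with frequencies."""
    pairs = Counter((a, b) for trace in log for a, b in zip(trace, trace[1:]))
    return "; ".join(f"{a}->{b} ({n})" for (a, b), n in sorted(pairs.items()))


def variants_abstraction(log: Log) -> str:
    """Deterministic tool: process variants, most frequent first."""
    variants = Counter(tuple(trace) for trace in log)
    ranked = sorted(variants.items(), key=lambda item: (-item[1], item[0]))
    return "; ".join(f"{' > '.join(v)} ({n})" for v, n in ranked)


def stub_llm(prompt: str) -> str:
    """Placeholder for a real LLM call; it only reports the prompt size."""
    return f"insight derived from a {len(prompt)}-character prompt"


def run_task(name: str, state: str, extra_context: str = "") -> str:
    """Execute one task and append its result to the running input string."""
    return f"{state}\n[{name}] {stub_llm(state + extra_context)}"


log = [
    ["register", "check", "pay"],
    ["register", "check", "pay"],
    ["register", "pay"],
]

state = "Where does the process deviate from the happy path?"
# The two abstraction tasks are interleaved: either order is a valid execution.
state = run_task("dfg", state, dfg_abstraction(log))
state = run_task("variants", state, variants_abstraction(log))
# The ensemble sees the inquiry plus both intermediate results.
state = run_task("ensemble", state)
```

Because every task appends to `state`, the ensemble step needs no extra plumbing to access the earlier results.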

Possible Implementations

Different implementations of AgWf(s) for the same tasks can vary in effectiveness. A single-task implementation may struggle with complex tasks, while a multi-task implementation can provide more comprehensive insights. Decomposing tasks into smaller units, each with a specific tool, leads to better results. For example, bias detection in process mining can be implemented with multiple tasks, each focusing on different aspects of the analysis.

Types of Tasks

Different types of tasks in AgWf workflows include:

- Prompt Optimizers: Transform the original inquiry into a language tailored to AI agents.

- Ensembles: Collate insights from different tasks into a coherent text.

- Routers: Decide which dependent nodes should be executed based on the prompt.

- Evaluators: Assess the quality of the output of previous tasks.

- Output Improvers: Enhance the quality of the output of previous tasks.
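Three of the task types listed above (router, evaluator, output improver) can be combined into a small control loop. The sketch below is illustrative only: in an actual AgWf each function would be backed by an LLM, whereas here keyword matching and a word-count score stand in for those decisions. All names, branch texts, and thresholds are assumptions.

```python
from typing import Callable, Dict


def router(inquiry: str) -> str:
    """Pick the branch to execute; keyword matching stands in for an LLM."""
    text = inquiry.lower()
    if "fair" in text or "bias" in text:
        return "fairness"
    if "why" in text or "cause" in text:
        return "root_cause"
    return "general"


BRANCHES: Dict[str, Callable[[str], str]] = {
    "fairness": lambda q: "compare protected and non-protected groups",
    "root_cause": lambda q: "inspect attributes correlated with the issue",
    "general": lambda q: "summarize the process",
}


def evaluator(output: str) -> float:
    """Hypothetical quality score in [0, 1]: longer drafts score higher."""
    return min(len(output.split()) / 8, 1.0)


def output_improver(output: str) -> str:
    """Hypothetical refinement step applied to a weak draft."""
    return output + " and report supporting statistics"


def respond(inquiry: str, threshold: float = 0.9, max_rounds: int = 3) -> str:
    draft = BRANCHES[router(inquiry)](inquiry)
    for _ in range(max_rounds):
        if evaluator(draft) >= threshold:
            break
        draft = output_improver(draft)
    return draft


result = respond("Is the process biased against a group?")
```

Bounding the loop with `max_rounds` keeps the workflow from cycling indefinitely when the evaluator is never satisfied.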

Implementation Framework

The CrewAI Python framework supports the implementation of AgWf(s) on top of LLMs. Key concepts include defining AI-based agents, tasks, and tools, and executing tasks sequentially or concurrently. The framework supports entity memory and callbacks for advanced features. Examples of AI-based workflows implemented in CrewAI include fairness assessment and root cause analysis.
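The difference between sequential and concurrent execution can be shown without any framework. The sketch below deliberately does not use the CrewAI API; it relies only on Python's standard library, and the two task functions are hypothetical stand-ins for LLM-backed tasks that do not depend on each other.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

TaskFn = Callable[[str], str]


def dfg_task(inquiry: str) -> str:
    """Stand-in for a task that reasons over the directly-follows graph."""
    return f"[dfg] notes for: {inquiry}"


def variants_task(inquiry: str) -> str:
    """Stand-in for a task that reasons over the process variants."""
    return f"[variants] notes for: {inquiry}"


def run_sequentially(tasks: List[TaskFn], inquiry: str) -> List[str]:
    return [task(inquiry) for task in tasks]


def run_concurrently(tasks: List[TaskFn], inquiry: str) -> List[str]:
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        # map() returns results in the order of `tasks`, not completion order.
        return list(pool.map(lambda task: task(inquiry), tasks))


tasks = [dfg_task, variants_task]
sequential_results = run_sequentially(tasks, "Where is the bottleneck?")
concurrent_results = run_concurrently(tasks, "Where is the bottleneck?")
```

Concurrency is only safe for tasks with no dependency on each other; a task that consumes earlier results, such as an ensemble, still has to wait for them.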

Next Steps

Automatic Definition of AgWf(s)

Future research should explore automatic decomposition of tasks into AgWf(s) by an orchestrating LLM. This involves understanding the original task and requesting clarifications.

Tasks Keeping the Human-in-the-Loop

Some tasks may benefit from user clarifications. For example, a prompt optimizer may need additional information to optimize a generic inquiry.

Evaluating AgWf(s)

Assessing the quality of outputs from AgWf(s) is challenging. The effectiveness of the overall workflow depends on the performance of individual agents. Collaboration and psychological traits of agents should be considered.

Maturity of the Tool Support

Current frameworks for implementing AgWf(s) are not yet mature. Solutions like LangGraph and AutoGen offer advanced features but still need to improve in stability and user-friendliness.

Conclusion

AgWf(s) offer a powerful tool for PM-on-LLMs by decomposing tasks into smaller units and combining AI-based tasks with deterministic tools. This approach aims to maximize the quality of outputs, differing from traditional scientific workflows focused on reproducibility. Future research should focus on automatic workflow definition, human-in-the-loop tasks, evaluation frameworks, and tool support maturity.