Authors:

Ruofan Liang, Zan Gojcic, Merlin Nimier-David, David Acuna, Nandita Vijaykumar, Sanja Fidler, Zian Wang

Paper:

https://arxiv.org/abs/2408.09702

Introduction

Virtual object insertion into real-world scenes is a crucial task in various applications, including virtual production, interactive gaming, and synthetic data generation. Achieving photorealistic insertions requires accurately modeling the interactions between virtual objects and their environments, such as shadows and reflections. Traditional pipelines for virtual object insertion involve three main steps: lighting estimation, 3D proxy geometry creation, and composited image rendering. However, lighting estimation from a single image remains a significant challenge due to the ill-posed nature of inverse rendering.

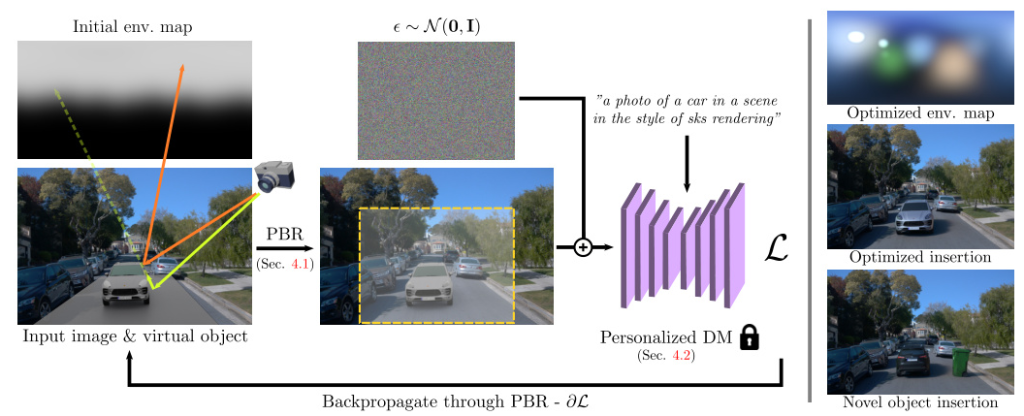

Recent advancements in large-scale diffusion models (DMs) have demonstrated strong generative and inpainting capabilities. However, these models often fail to generate consistent lighting effects while preserving the identity and details of the composited object. To address these challenges, the authors propose a novel method called Diffusion Prior for Inverse Rendering (DiPIR), which leverages the strong image generation priors learned by DMs to guide a physically based inverse rendering process.

Related Work

Inverse Rendering

Inverse rendering aims to recover intrinsic properties of a scene, including materials, shape, and lighting, from images. The main challenge lies in the ill-posed nature of the task. Early methods used hand-crafted priors to constrain the solution space, but these often fell short in real-world scenarios. With the advent of deep learning, data-driven priors have been learned from ground truth supervision. However, acquiring real-world data with accurate intrinsic decomposition is challenging, leading to the use of synthetic datasets or domain-specific algorithms.

Lighting Estimation

Lighting estimation is a core component of photorealistic virtual object insertion pipelines. Existing methods often use feed-forward neural networks to regress lighting information from a single image. Recent approaches have formulated lighting estimation as a generative task, using models like StyleGAN and diffusion models to generate environment maps. However, reproducing high dynamic range (HDR) lighting remains challenging, especially for effects like sharp shadows in outdoor settings.

Physically-Based Differentiable Rendering

Physically-based rendering (PBR) aims to produce realistic images by accurately simulating light transport. Differentiable rendering methods compute derivatives of the rendered image with respect to scene parameters so that those parameters can be optimized. DiPIR only needs derivatives with respect to lighting, material, and tone-mapping parameters, which generally do not introduce discontinuities.

Diffusion Model Priors and Personalization

Diffusion models trained on large-scale datasets have been adapted for various applications, including depth estimation and image restoration. Techniques like DreamBooth and Textual Inversion fine-tune DMs for specific tasks. Recent works have adapted DMs for lighting estimation and intrinsic image decomposition, showing promising results.

Research Methodology

Physically-Based Virtual Object Insertion

DiPIR recovers scene lighting and tone-mapping parameters from a single image to enable photorealistic insertion of virtual objects. The method involves creating a 3D proxy virtual scene with a virtual object and a proxy plane. The scene’s lighting is represented using Spherical Gaussian (SG) parameters, which are optimized through a differentiable rendering process.

Light Representation

The scene’s lighting is represented with a set of optimizable SG parameters. The overall environment map is computed using these parameters, providing a flexible and efficient representation for lighting optimization.
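As a rough illustration of how such a representation can be evaluated, the sketch below sums Spherical Gaussian lobes of the commonly used form c_k · exp(λ_k (d · μ_k − 1)) over the pixel directions of an equirectangular map. The function names, the y-up convention, and the example lobe values are assumptions made for this example and may differ from the parameterization used in the paper.

```python
import numpy as np

def sg_environment_map(directions, axes, sharpness, amplitudes):
    """Evaluate a sum of Spherical Gaussian lobes at unit directions.

    directions: (N, 3) unit vectors to query
    axes:       (K, 3) lobe axes (normalized inside this function)
    sharpness:  (K,)   non-negative lobe sharpness values
    amplitudes: (K, 3) RGB lobe amplitudes
    returns:    (N, 3) RGB radiance
    """
    axes = axes / np.linalg.norm(axes, axis=1, keepdims=True)
    cos = directions @ axes.T                            # (N, K) cosine to each lobe axis
    weights = np.exp(sharpness[None, :] * (cos - 1.0))   # equals 1 exactly on the lobe axis
    return weights @ amplitudes                          # (N, 3)

def equirect_directions(height, width):
    """Unit directions at the pixel centers of an equirectangular map (y up, assumed)."""
    theta = (np.arange(height) + 0.5) / height * np.pi       # polar angle from +y
    phi = (np.arange(width) + 0.5) / width * 2.0 * np.pi     # azimuth
    theta, phi = np.meshgrid(theta, phi, indexing="ij")
    d = np.stack(
        [np.sin(theta) * np.cos(phi), np.cos(theta), np.sin(theta) * np.sin(phi)],
        axis=-1,
    )
    return d.reshape(-1, 3)

# Hypothetical two-lobe lighting: a sharp, bright "sun" overhead plus a broad, dim fill.
axes = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0]])
sharpness = np.array([50.0, 2.0])
amplitudes = np.array([[10.0, 9.0, 8.0], [0.2, 0.3, 0.5]])
env = sg_environment_map(equirect_directions(16, 32), axes, sharpness, amplitudes)
env = env.reshape(16, 32, 3)
```

Because every operation here is smooth in the lobe axes, sharpness values, and amplitudes, the same computation written in an autodiff framework can be optimized by gradient descent.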

Differentiable Rendering

The rendering process simulates how the environment map lights the virtual object and how the object in turn affects the background scene. The foreground image of the inserted object is rendered using path tracing. The object's effect on its surroundings, mainly the shadow it casts, is captured by a shadow ratio: the proxy plane rendered with the object divided by the same plane rendered without it. An optimizable tone correction function compensates for the unknown tone-mapping of the input image.
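The compositing step can be sketched as follows. This is a minimal sketch under stated assumptions: the per-channel gain and gamma tone curve is a hypothetical stand-in for the paper's tone correction function, and the renders are represented by plain arrays rather than the output of a path tracer.

```python
import numpy as np

def shadow_ratio(plane_with_object, plane_without_object, eps=1e-6):
    """Ratio of the proxy plane rendered with and without the inserted object."""
    return plane_with_object / np.maximum(plane_without_object, eps)

def tone_correct(image, gain, gamma):
    """Hypothetical tone curve (an assumption): per-channel gain and gamma."""
    return gain * np.clip(image, 0.0, None) ** gamma

def composite(background, foreground, mask, ratio, gain, gamma):
    """Blend the tone-corrected object over the shadow-attenuated background.

    background, foreground, ratio: (H, W, 3); mask: (H, W, 1) with 1 on object pixels.
    """
    fg = tone_correct(foreground, gain, gamma)
    return mask * fg + (1.0 - mask) * background * ratio

# Toy 2x2 example: the object covers pixel (0, 0) and shadows pixel (1, 1).
background = np.full((2, 2, 3), 0.5)
foreground = np.full((2, 2, 3), 0.25)
mask = np.zeros((2, 2, 1))
mask[0, 0] = 1.0
plane_without = np.full((2, 2, 3), 0.8)
plane_with = plane_without.copy()
plane_with[1, 1] = 0.4                      # half of the light is blocked here
ratio = shadow_ratio(plane_with, plane_without)
result = composite(background, foreground, mask, ratio, gain=np.full(3, 2.0), gamma=1.0)
```

Multiplying the real background by a ratio, instead of pasting a rendered shadow, keeps the original image texture intact and only darkens it where the object blocks light.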

Diffusion Guidance

The composited image is passed through a personalized DM to compute a guidance signal. The DM is fine-tuned to preserve the identity of the objects to be inserted, using additional synthetic images of the insertable object class. The Score Distillation Sampling (SDS) loss is adapted to guide the optimization toward a perceptually realistic edited result.
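For intuition, the sketch below computes the standard SDS image gradient, w(t) · (ε̂(x_t, t) − ε), as introduced in DreamFusion; it is not the adapted variant used by DiPIR. The noise predictor is a toy stand-in (the exact predictor for a data distribution concentrated on a single target image), and all function names and the weighting w(t) = 1 − ᾱ_t are assumptions for illustration. In the actual pipeline this gradient would be backpropagated through the differentiable renderer to the lighting and tone-mapping parameters.

```python
import numpy as np

def sds_image_gradient(image, predict_noise, alpha_bar, rng):
    """Standard SDS gradient with respect to the image at one noise level.

    alpha_bar is the cumulative signal level of the diffusion schedule, in (0, 1).
    """
    noise = rng.standard_normal(image.shape)
    noisy = np.sqrt(alpha_bar) * image + np.sqrt(1.0 - alpha_bar) * noise
    weight = 1.0 - alpha_bar                      # a common choice of w(t)
    return weight * (predict_noise(noisy, alpha_bar) - noise)

def make_point_mass_predictor(target):
    """Toy denoiser: exact noise prediction when all data equals `target`."""
    def predict_noise(noisy, alpha_bar):
        return (noisy - np.sqrt(alpha_bar) * target) / np.sqrt(1.0 - alpha_bar)
    return predict_noise

# Gradient descent on the image itself pulls it toward what the "model" finds likely.
rng = np.random.default_rng(0)
target = np.full((4, 4, 3), 0.8)
image = np.full((4, 4, 3), 0.2)
predictor = make_point_mass_predictor(target)
for _ in range(50):
    image = image - 0.5 * sds_image_gradient(image, predictor, alpha_bar=0.5, rng=rng)
```

With this toy predictor the sampled noise cancels and the gradient reduces to sqrt(ᾱ(1 − ᾱ)) · (image − target), so each step moves the image a fixed fraction of the way toward the target.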

Optimization Formulation

The optimization process involves minimizing a loss function that combines diffusion guidance, consistency regularization, and environment map fusion. The personalized DM provides guidance for lighting consistency and accurate shadows, while the environment maps are progressively fused to improve optimization stability.
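The summary does not spell out how the fusion is scheduled, so the following is only one plausible reading: keep a running mean of environment map snapshots taken during optimization, which damps step-to-step fluctuations. The function name and the running-mean rule are assumptions and are not taken from the paper.

```python
import numpy as np

def fuse_environment_maps(fused, new_map, step):
    """Update a running mean with a new snapshot (assumed rule; step counts from 1)."""
    return fused + (new_map - fused) / step

# Three hypothetical snapshots of a tiny 2x4 RGB environment map.
snapshots = [np.full((2, 4, 3), value) for value in (1.0, 2.0, 3.0)]
fused = np.zeros((2, 4, 3))
for step, snapshot in enumerate(snapshots, start=1):
    fused = fuse_environment_maps(fused, snapshot, step)
```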

Experimental Design

Datasets

- Waymo Dataset: 48 scenes with diverse illumination conditions from the Waymo Open Dataset, including daytime, twilight, and night scenes. Scene setup is fully automatic: LiDAR point clouds and semantic segmentation are used to fit a ground plane and detect empty spaces for car insertion.

- PolyHaven Dataset: 11 HDR environment maps with manually placed ground planes and virtual objects. A pseudo-ground-truth rendering is created by lighting the inserted object with the environment map itself.
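The ground-plane fitting mentioned for the Waymo scenes can be approximated with a least-squares fit to ground-labeled LiDAR points. The sketch below uses an SVD-based fit and assumes a z-up coordinate frame; the function names and the synthetic points are hypothetical, and the paper's actual fitting procedure may differ (for example, by adding outlier rejection).

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through (N, 3) points; returns (unit normal, centroid)."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:          # orient the normal upward (z-up frame assumed)
        normal = -normal
    return normal, centroid

def signed_distance(points, normal, centroid):
    """Signed distance of each point to the fitted plane."""
    return (points - centroid) @ normal

# Synthetic ground points on the gently sloped plane z = 0.1 x + 0.2 y + 1.
xs, ys = np.meshgrid(np.linspace(-2.0, 2.0, 5), np.linspace(-2.0, 2.0, 5))
ground = np.stack([xs.ravel(), ys.ravel(), 0.1 * xs.ravel() + 0.2 * ys.ravel() + 1.0], axis=1)
normal, centroid = fit_plane(ground)
heights = signed_distance(ground, normal, centroid)
```

Points whose signed distance is well above zero can then be treated as obstacles when searching for empty space to place the inserted object.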

User Study

A user study was conducted to evaluate the perceptual realism of virtual object insertion. Participants compared pairs of images generated by DiPIR and baseline methods, selecting the more realistic image. The study involved 9 users, and the results were averaged across multiple trials.

Baselines

DiPIR was compared with several state-of-the-art methods for lighting estimation and virtual object insertion, including Hold-Geoffroy et al., NLFE, StyleLight, and DiffusionLight.

Results and Analysis

Quantitative Evaluation

DiPIR outperformed all baseline methods in user preference studies, achieving higher realism scores across various illumination conditions. The method was particularly effective in challenging scenarios like twilight and night scenes, where traditional methods struggled.

Qualitative Comparisons

Qualitative comparisons demonstrated that DiPIR produced high-quality object insertions with accurate lighting effects, shadows, and reflections. The method consistently blended virtual objects into diverse scenes, making it suitable for applications like synthetic data generation and augmented reality.

Ablation Study

An ablation study was conducted to evaluate the impact of different components of DiPIR. The study confirmed the importance of adaptive diffusion guidance, concept preservation, and environment map fusion in achieving high-quality results.

Overall Conclusion

DiPIR leverages the inherent scene understanding capabilities of large diffusion models to guide a physically based inverse rendering pipeline. The method recovers lighting and tone-mapping parameters from a single image, enabling photorealistic insertion of virtual objects. DiPIR outperforms existing state-of-the-art methods in both quantitative and qualitative evaluations, demonstrating its potential for various content creation applications.

Future work could explore more complex lighting representations and extend the rendering formulation to account for additional effects like reflections from the scene onto the inserted object. Recent personalization methods that do not require test-time fine-tuning could also be integrated to reduce overhead and complexity.