Authors:

Meng Luo、Hao Fei、Bobo Li、Shengqiong Wu、Qian Liu、Soujanya Poria、Erik Cambria、Mong-Li Lee、Wynne Hsu

Paper:

https://arxiv.org/abs/2408.09481

Introduction

Background and Motivation

The quest for human-level artificial intelligence encompasses not only possessing intelligence but also understanding human emotions, thus propelling sentiment analysis and opinion mining to become key areas of research focus. Through decades of research, sentiment analysis has seen significant developments across various dimensions and aspects. The field has evolved from traditional coarse-grained analysis, such as document and sentence-level analysis, to fine-grained analysis (e.g., Aspect-based Sentiment Analysis, ABSA), incorporating a wide array of emotional elements and evolving to extract different sentiment tuples, including the extraction of targets, aspects, opinions, and sentiments.

Problem Statement

Despite significant progress, current research definitions of sentiment analysis are still not comprehensive enough to offer a complete and detailed emotional picture, primarily due to several issues:

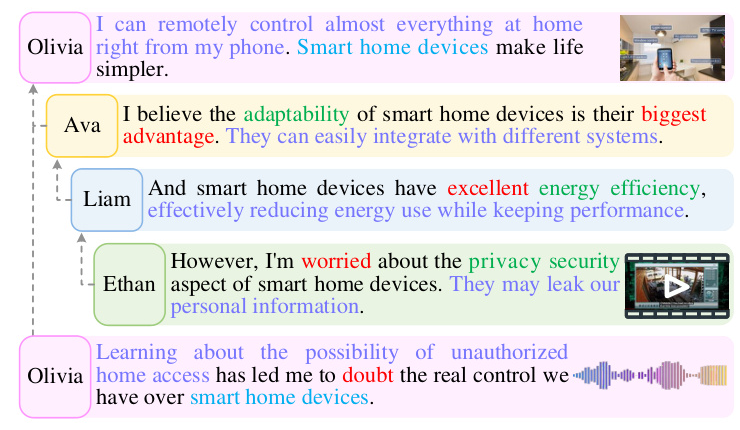

1. Lack of an integrated definition that combines fine-grained analysis, multimodality, and conversational scenarios.

2. Neglect of the dynamic nature of emotions that change over time or due to various factors.

3. Insufficient analysis or identification of the causal reasons and intentions behind sentiments.

To address these gaps, this paper proposes Multimodal Conversational Aspect-based Sentiment Analysis, aiming to provide a more comprehensive and holistic ABSA definition that includes both Panoptic Sentiment Sextuple Extraction and Sentiment Flipping Analysis.

Related Work

Evolution of ABSA

ABSA has evolved from its initial objective of identifying sentiment polarity to more complex tasks such as recognizing targets, aspects, and opinions. The complexity of ABSA tasks has increased with the introduction of combinations of these elements, ranging from paired extraction to triplet and quadruple extractions. Concurrently, multimodal sentiment analysis has garnered increasing attention, incorporating modalities beyond text, such as images, audios, and videos.

Multimodal Sentiment Analysis

The trend in multimodal sentiment analysis has shifted from coarse-grained to fine-grained. The proposed methods mainly focus on exploring feature extraction and fusion from diverse modal inputs, relying on additional structured knowledge. Furthermore, in terms of application scenarios, there has been a shift from analyzing single pieces of text to engaging in multi-turn, multi-party dialogues, aiming to recognize emotions within dialogues to better align with real-world applications.

Gaps in Existing Research

Current ABSA benchmarks still lack a combined perspective and comprehensive definition across granularity, multimodality, and dialogue contexts. For instance, there is an absence of benchmarks for fine-grained sentiment analysis in multimodal dialogue scenarios. Moreover, previous research has not fully leveraged the role of multimodality in ABSA, often considering multimodal information merely as supplementary clues to assist in determining opinions or sentiments.

Research Methodology

Task Definition

Subtask-I: Panoptic Sentiment Sextuple Extraction

Given a dialogue with a replying structure, the task is to extract all sextuples (holder, target, aspect, opinion, sentiment, rationale). The elements can be either continuous text spans explicitly mentioned in utterances or implicitly inferred from contexts or non-text modalities.

Subtask-II: Sentiment Flipping Analysis

Given the same input as in subtask-I, the task detects all sextuples (holder, target, aspect, initial sentiment, flipped sentiment, trigger). The trigger refers to a predefined label among four categories: introduction of new information, logical argumentation, participant feedback and interaction, and personal experience and self-reflection.

New Benchmark: PanoSent

Dataset Construction

The PanoSent dataset is constructed via both human annotation and auto-synthesis. The corpus of dialogues is collected from various social media or forum platforms in different languages. The dialogues are then annotated according to customized guidelines, ensuring high quality through rigorous screening and cross-validation.

Data Insights

PanoSent covers more than 100 common domains and scenarios, featuring multimodality, multilingualism, and multi-scenarios. The dataset includes three mainstream languages: English, Chinese, and Spanish, and supports implicit ABSA, thereby elevating the challenges.

Experimental Design

Multimodal LLM Backbone

A novel Multimodal Large Language Model (MLLM), Sentica, is developed, leveraging the capabilities of existing MLLMs to understand multimodal data. Sentica uses Flan-T5 (XXL) as the core LLM for semantics understanding and decision-making, with ImageBind as the unified encoder for all three non-text modalities.

CoS Reasoning Framework

Inspired by the Chain-of-Thought (CoT) reasoning paradigm, the Chain-of-Sentiment (CoS) reasoning framework is proposed. The framework deconstructs the two subtasks into four progressive, chained reasoning steps:

1. Target-Aspect Identification

2. Holder-Opinion Detection

3. Sentiment-Rationale Mining

4. Sentiment Flipping Trigger Classification

Paraphrase-based Verification

To enhance the robustness of the CoS reasoning process, a Paraphrase-based Verification (PpV) mechanism is introduced. This mechanism converts structured k-tuples into natural language expressions and checks whether the claim is in an entailment or contradiction relationship with the given dialogue context.

Instruction Tuning

Sentica is empowered with the reasoning capabilities of the CoS framework through a three-phase training process, including understanding multimodal representations, executing the sextuple extraction process, and mastering the PpV pattern.

Results and Analysis

Main Results

Panoptic Sentiment Sextuple Extraction

The performance of different methods on Subtask-I is compared, showing that Sentica, when equipped with the CoS framework and PpV mechanism, exhibits the strongest global performance across different task evaluation granularities and languages.

Sentiment Flipping Analysis

Similar trends are observed in Subtask-II, with Sentica demonstrating significant superiority over other methods. The complete system (Sentica+CoS+PpV) achieves the best performance.

Analysis and Discussion

Necessity of Synthetic Data

Training on real-life data yields better results compared to synthetic datasets. However, using synthetic data as an additional supplement significantly enhances the final performance, proving the necessity of constructing synthetic data.

Role of Multimodal Information

Multimodal information is comprehensive and all-encompassing, assisting in determining sentiment polarity and serving as a direct source of information for judging the sextuple elements. Removing any type of modal signal results in a downgrade in performance.

Performance for Explicit and Implicit Elements

The performance of implicit elements is consistently lower than that of explicit elements, indicating that recognizing implicit elements is more challenging.

Rationality of PpV Mechanism

The PpV mechanism outperforms both direct verification and no verification, ensuring that each reasoning step is built on verified information and enhancing the overall robustness of sentiment analysis.

Overall Conclusion

This paper introduces a novel multimodal conversational ABSA, providing a comprehensive and panoptic definition of sentiment analysis that aligns with the complexity of human-level emotional expression and cognition. The PanoSent dataset and the proposed Chain-of-Sentiment reasoning framework, together with the Sentica MLLM and paraphrase-based verification mechanism, serve as a strong baseline for subsequent research, opening up a new era for the ABSA community.