Authors:

Meghdad Dehghan, Jie JW Wu, Fatemeh H. Fard, Ali Ouni

Paper:

https://arxiv.org/abs/2408.09568

Introduction

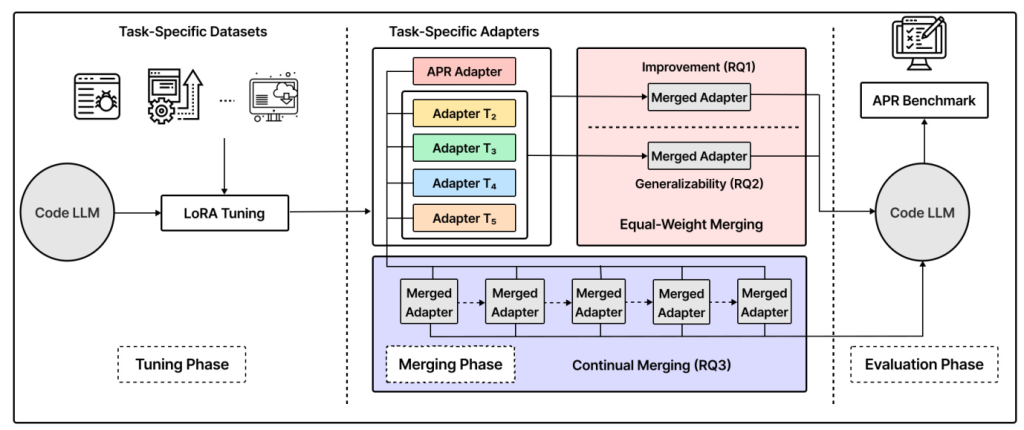

In Software Engineering (SE), automating code-related tasks such as bug prediction, bug fixing, and code generation has been a focal point of research. Large Language Models (LLMs) have demonstrated significant potential in these areas. However, training and fine-tuning these models separately for each task is resource-intensive and time-consuming. This study introduces MergeRepair, a framework designed to merge multiple task-specific adapters in Code LLMs, specifically for the Automated Program Repair (APR) task. The primary objective is to explore whether merging these adapters can improve APR performance without extensive retraining.

Related Work

Automated Program Repair

Automated Program Repair (APR) has a rich history in SE, with early approaches focusing on training deep neural networks to map buggy code to fixed code snippets. The advent of pre-trained language models has shifted the focus towards leveraging these models for APR through instruction-tuning. However, the potential of merging task-specific adapters for APR remains underexplored.

Adapters

Adapters are small, specialized modules used in Parameter-Efficient Fine-Tuning (PEFT) of LLMs. They have shown promising results in optimizing memory usage and adapting large models to specific tasks. Various adapter architectures, such as LoRA and IA3, have been proposed, each targeting specific layers and parameters of transformer-based models.
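To make the adapter idea concrete, here is a minimal sketch of how a LoRA adapter modifies a frozen weight matrix. It assumes the standard LoRA formulation, in which the effective weight is W + (alpha / r) * B A with rank r; the function names and the toy matrices are illustrative and do not come from the paper.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product, kept dependency-free for illustration."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * B @ A, where r is the adapter rank."""
    r = len(a)  # A has shape (r, d_in); B has shape (d_out, r)
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


# Toy example: a frozen 2x3 base weight with a rank-1 adapter (hypothetical values).
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
B = [[1.0], [2.0]]        # shape (2, 1)
A = [[0.5, 0.0, -0.5]]    # shape (1, 3)
W_eff = lora_effective_weight(W, B, A, alpha=2.0)
```

Only B and A are trained, so an adapter stores far fewer parameters than the base matrix W, which is why merging adapters is cheap compared with merging full models.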

Merging Models

Merging models involves combining the parameters of multiple models trained on distinct tasks to create a unified model. This approach has shown success in various domains, including natural language processing. Techniques like weight-space averaging, TIES-Merging, and DARE have been proposed to efficiently merge models. However, the application of these techniques to code-related tasks, particularly APR, is still an open research area.

Research Methodology

Research Questions

The study aims to answer the following research questions:

- RQ1: Does merging the APR adapter with other task-specific adapters improve the performance of APR?

- RQ2: Can merged adapters of other tasks generalize to APR?

- RQ3: How does continual merging of other task-specific adapters with the APR adapter influence the performance of APR?

Merging Techniques

The study explores three merging techniques:

- Weight-Space Averaging: A straightforward approach that averages the weight parameters of the specialized models.

- TIES-Merging: A three-step approach that trims low-magnitude (redundant) parameters, resolves sign conflicts by electing one sign per parameter, and averages only the remaining parameters that agree with the elected sign.

- DARE: A two-step method that randomly drops a given ratio of the parameters and rescales the remaining ones so that the resulting embeddings stay close to those produced by the original parameters.
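The three techniques above can be sketched on flat parameter vectors as follows. This is a simplified illustration, not the paper's implementation: it assumes each adapter has been flattened into a list of floats, that the keep and drop ratios (`keep_ratio`, `drop_rate`) are free hyperparameters, and that DARE rescales survivors by 1 / (1 - drop_rate), as in the commonly cited formulation. All function names are hypothetical.

```python
import random
from typing import List, Sequence

Vector = List[float]


def weight_average(vectors: Sequence[Vector]) -> Vector:
    """Weight-space averaging: element-wise mean of equally shaped vectors."""
    n = len(vectors)
    return [sum(vals) / n for vals in zip(*vectors)]


def ties_merge(vectors: Sequence[Vector], keep_ratio: float = 0.2) -> Vector:
    """TIES-style merge: trim, elect a sign per entry, average agreeing entries."""
    trimmed = []
    for vec in vectors:
        k = max(1, round(keep_ratio * len(vec)))
        # Trim: keep the k largest-magnitude entries (ties may keep a few more).
        threshold = sorted((abs(v) for v in vec), reverse=True)[k - 1]
        trimmed.append([v if abs(v) >= threshold else 0.0 for v in vec])

    merged = []
    for vals in zip(*trimmed):
        # Elect: the sign of the summed values wins (a zero sum defaults to +).
        sign = 1.0 if sum(vals) >= 0 else -1.0
        # Disjoint merge: average only nonzero entries that match the elected sign.
        agree = [v for v in vals if v * sign > 0]
        merged.append(sum(agree) / len(agree) if agree else 0.0)
    return merged


def dare(vector: Vector, drop_rate: float, rng: random.Random) -> Vector:
    """DARE-style sparsification: random drop, then rescale the survivors."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    scale = 1.0 / (1.0 - drop_rate)
    return [0.0 if rng.random() < drop_rate else v * scale for v in vector]


# Toy adapters (hypothetical values), e.g. an APR adapter and a Test & QA adapter.
apr = [0.9, -0.1, 0.4, 0.0]
test_qa = [0.8, 0.2, -0.6, 0.05]

averaged = weight_average([apr, test_qa])
ties = ties_merge([apr, test_qa], keep_ratio=0.5)

# DARE only sparsifies; the sparsified vectors still need a merge step afterwards.
rng = random.Random(0)
dare_merged = weight_average([dare(apr, 0.5, rng), dare(test_qa, 0.5, rng)])
```

Note that DARE by itself is a preprocessing step: in this sketch the sparsified vectors are combined with plain averaging, but they could equally be passed to a TIES-style merge.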

Experimental Design

Datasets

The study utilizes the CommitPackFT dataset, which contains code samples from GitHub repositories, including commit messages, old contents, and new contents. The dataset is classified into five tasks: Development, Misc, Test & QA, Improvement, and Bug Fixes (APR).

Models

Two state-of-the-art Code LLMs, StarCoder2 and Granite, are used for the experiments. These models are chosen for their performance on APR tasks and their compatibility with the LoRA adapter.

Steps for Each Research Question

- RQ1: Merge the APR adapter with each of the other task-specific adapters, one at a time, and evaluate the performance on the APR benchmark.

- RQ2: Merge non-APR adapters and evaluate their generalizability to APR.

- RQ3: Implement continual merging by sequentially adding task-specific adapters to the merged adapter and evaluate the performance on APR.
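The three setups above differ only in which adapters are merged and in what order. The sketch below illustrates this with a pluggable merge function; plain averaging stands in for any of the merging techniques. The adapter values, the function names, and the reading of continual merging as "merge the running result with the next adapter" are assumptions made for illustration, and the paper's exact procedure may differ.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
MergeFn = Callable[[Sequence[Vector]], Vector]


def average(vectors: Sequence[Vector]) -> Vector:
    """Stand-in merge function: element-wise mean."""
    return [sum(vals) / len(vectors) for vals in zip(*vectors)]


def rq1_pairwise(adapters: Dict[str, Vector], merge: MergeFn,
                 target: str = "apr") -> Dict[str, Vector]:
    """RQ1: merge the target adapter with each other adapter, one at a time."""
    return {
        name: merge([adapters[target], vec])
        for name, vec in adapters.items()
        if name != target
    }


def rq2_without_target(adapters: Dict[str, Vector], merge: MergeFn,
                       target: str = "apr") -> Vector:
    """RQ2: merge all non-target adapters; the result is then evaluated on APR."""
    return merge([vec for name, vec in adapters.items() if name != target])


def rq3_continual(adapters: Dict[str, Vector], merge: MergeFn, order: Sequence[str],
                  target: str = "apr") -> List[Tuple[str, Vector]]:
    """RQ3: start from the target adapter and fold in one adapter per step."""
    merged = adapters[target]
    history = []
    for name in order:
        merged = merge([merged, adapters[name]])
        history.append((name, merged))  # each intermediate result can be evaluated
    return history


# Hypothetical two-parameter adapters for the five task categories.
adapters = {
    "apr": [1.0, 0.0],
    "development": [0.0, 1.0],
    "misc": [1.0, 1.0],
    "test_qa": [0.0, 0.0],
    "improvement": [2.0, 2.0],
}
order = ["development", "misc", "test_qa", "improvement"]

pairs = rq1_pairwise(adapters, average)
non_apr = rq2_without_target(adapters, average)
steps = rq3_continual(adapters, average, order)
```

With plain averaging, this continual scheme gives later adapters more weight than earlier ones, so the order in which adapters are added can change the final merged adapter.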

Evaluation Metrics

The models are evaluated using pass@k and RobustPass@k scores on the HumanEvalFix benchmark. These metrics assess the functional correctness and robustness of the generated code samples.
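For reference, pass@k is commonly computed with the unbiased estimator introduced alongside the original HumanEval benchmark: generate n samples per problem, count the c samples that pass all tests, and estimate the probability that at least one of k randomly drawn samples is correct. The sketch below assumes that estimator; the post does not state which variant the study uses, and the per-problem counts are made up for illustration.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: 1 - C(n - c, k) / C(n, k)."""
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples are incorrect, giving 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, each given as (n_samples, n_correct)."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical per-problem results: (samples generated, samples passing all tests).
results = [(20, 5), (20, 0), (20, 20)]
score_at_1 = mean_pass_at_k(results, k=1)
score_at_10 = mean_pass_at_k(results, k=10)
```

For k = 1 the estimator reduces to the fraction of correct samples, c / n, averaged over problems.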

Results and Analysis

The results of the study will be reported at a later stage. The planned analysis includes examining the internal adapter parameters and the correct-to-incorrect ratio of the generated samples when task-specific adapters are merged.

Overall Conclusion

This study introduces MergeRepair, a framework for merging task-specific adapters in Code LLMs to enhance the performance of Automated Program Repair. By exploring various merging techniques and evaluating their impact on APR, the study aims to provide insights into the potential of merging adapters for code-related tasks. The findings could inspire further research and practical applications in reducing the effort of training new adapters and leveraging existing ones for new tasks.

This blog post provides a detailed overview of the study, highlighting the motivation, methodology, and potential implications of merging task-specific adapters in Code LLMs for APR. The results and further analysis will be shared in subsequent updates.