Authors:

Henrik Abgaryan、Ararat Harutyunyan、Tristan Cazenave

Paper:

https://arxiv.org/abs/2408.06993

Exploring the Potential of Large Language Models in Job Shop Scheduling

Introduction

The Job Shop Scheduling Problem (JSSP) is a critical challenge in optimizing production processes. It involves allocating a set of jobs to a limited number of machines while minimizing factors like total processing time or job delays. Traditional approaches, such as mathematical programming and heuristic algorithms, often struggle with scalability and complexity. Recent advancements in artificial intelligence (AI) have introduced promising solutions like reinforcement learning and graph neural networks. This paper explores the potential of Large Language Models (LLMs) for JSSP, introducing a novel supervised dataset and demonstrating the effectiveness of LLM-based scheduling.

Related Work

JSSP is known to be NP-hard, making exact solutions generally infeasible for large instances. Traditional methods, including constraint programming and heuristic approaches like Priority Dispatching Rules (PDRs), have been widely used. Recent research has focused on deep learning and neural networks, particularly deep reinforcement learning (DRL), to address JSSP. While LLMs have shown potential in mathematical reasoning and optimization tasks, their application to JSSP remains unexplored until now.

Preliminary

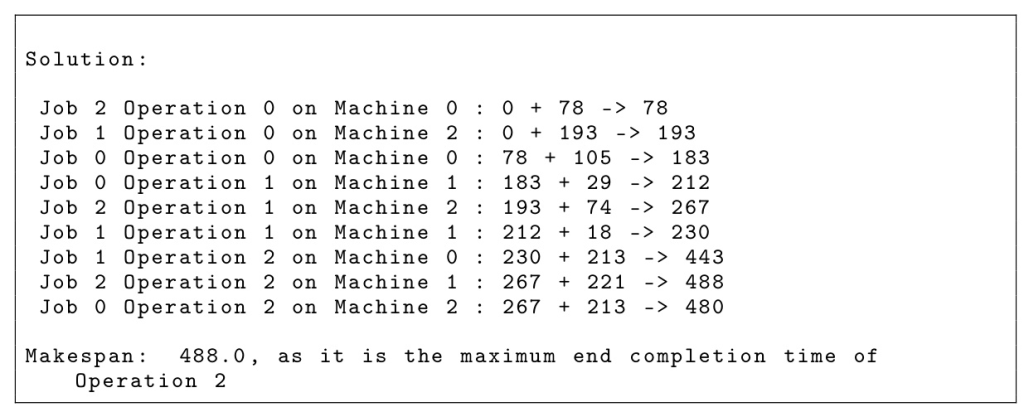

JSSP involves a set of jobs and machines, where each job must be processed through a sequence of operations on specific machines. The objective is to determine a schedule that minimizes the makespan, the maximum completion time of all jobs, while meeting all constraints. The complexity of a JSSP instance is determined by the number of jobs and machines.

Dataset Generation

To train LLMs for JSSP, the problem must be represented in natural language. The authors converted the traditional matrix-based representation of JSSP into a human-readable format using two approaches: Job-Centric and Machine-Centric.

Job-Centric Approach

This approach organizes tasks by jobs, detailing the sequence of operations, corresponding machines, and durations for each job.

Machine-Centric Approach

This approach organizes tasks by machines, detailing the sequence of operations, corresponding jobs, and durations for each machine.

Zero-shot Inference and Label Generation

The authors initially attempted zero-shot inference with the Phi-3-Mini-128K-Instruct model but found it inadequate for generating feasible solutions. They then created a supervised dataset with approximately 120,000 random JSSP problems and their solutions using Google’s OR-Tools. This dataset includes problems of various sizes and durations, enhancing the model’s generalization capability.

Training

The dataset was used to fine-tune the Phi-3 model using the LoRA (Low-Rank Adaptation) method, which improves the efficiency of fine-tuning large pre-trained language models. The training involved specific configurations to optimize memory and computational efficiency. The training and validation curves indicate the model’s learning progress.

Evaluation

The model’s performance was evaluated on a separate dataset of various problem sizes. The evaluation involved parsing and validating the LLM-generated solutions to ensure feasibility.

Hyperparameter Tuning

The authors conducted a grid search to determine the best sampling hyperparameters, which significantly improved the model’s performance.

Comparative Analysis with Other Neural Approaches

The fine-tuned Phi-3 model was compared with other neural approaches, including deep reinforcement learning (L2D) and self-supervised learning (SLJ). The results show that the Phi-3 model performs competitively, achieving a relatively low median gap from the optimal solution.

Conclusion

This paper demonstrates the potential of LLMs in addressing JSSP. The fine-tuned Phi-3 model, enhanced with appropriate sampling methods, offers a promising new avenue for tackling JSSP, sometimes matching or surpassing traditional neural network approaches.

Limitations and Future Work

The study highlights several limitations, including the computational overhead of fine-tuning LLMs and the need for further research on larger problem sizes. Future work should explore integrating LLMs with other AI techniques, such as reinforcement learning and graph neural networks, to combine their strengths.

In summary, this paper introduces a novel supervised dataset for LLM training for JSSP, offering valuable insights and a robust framework for further exploration in this area.