Authors:

Bohao Wang, Feng Liu, Jiawei Chen, Yudi Wu, Xingyu Lou, Jun Wang, Yan Feng, Chun Chen, Can Wang

Paper:

https://arxiv.org/abs/2408.08208

Leveraging Large Language Models for Denoising Sequential Recommendations: An In-Depth Look at LLM4DSR

Introduction

Large Language Models (LLMs) have revolutionized artificial intelligence with their remarkable capabilities in content comprehension, generation, and semantic reasoning. This paper explores the innovative application of LLMs in denoising sequential recommendations, a task that relies heavily on the accuracy of users’ historical interaction sequences. These sequences often contain noise due to various factors, such as clickbait or accidental interactions, which can significantly degrade the performance of recommendation models. The proposed method, LLM4DSR, aims to leverage LLMs to identify and replace noisy interactions, thereby enhancing the accuracy and effectiveness of sequential recommendations.

Preliminary

Sequential Recommendation

Sequential recommendation systems predict the next item a user is likely to interact with based on their historical interaction sequence. Formally, let \( V = \{v_1, v_2, \ldots, v_{|V|}\} \) be the set of items, and let \( S_i = \{s_{i1}, s_{i2}, \ldots, s_{i|S_i|}\} \) denote the \( i \)-th historical interaction sequence. The goal is to predict the next item \( s_{i(t+1)} \) given the prefix \( S_{i1:t} = \{s_{i1}, \ldots, s_{it}\} \).

Denoise Task Formulation

The objective of the denoising task is to identify and remove noise within a sequence \( S \), generating a noiseless sequence \( S' = \{s'_1, s'_2, \ldots, s'_{|S'|}\} \). Unlike previous methods that simply delete noisy items, LLM4DSR replaces identified noise with more appropriate items, maintaining the sequence length and potentially introducing useful information.
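To make the difference between deletion and replacement concrete, here is a minimal sketch. It assumes the noisy positions (and, for replacement, the suggested items) have already been determined; the function names and item titles are hypothetical and only illustrate how each strategy changes the sequence.

```python
from typing import Dict, List, Set


def denoise_by_deletion(seq: List[str], noisy_positions: Set[int]) -> List[str]:
    """Deletion-style denoising: drop every item flagged as noise."""
    return [item for pos, item in enumerate(seq) if pos not in noisy_positions]


def denoise_by_replacement(seq: List[str], replacements: Dict[int, str]) -> List[str]:
    """Replacement-style denoising: swap each flagged item for a suggested one."""
    return [replacements.get(pos, item) for pos, item in enumerate(seq)]


history = ["Drama A", "Romance B", "Sci-Fi X", "Comedy C"]
deleted = denoise_by_deletion(history, {2})
replaced = denoise_by_replacement(history, {2: "Romance D"})
print(deleted)   # ['Drama A', 'Romance B', 'Comedy C']
print(replaced)  # ['Drama A', 'Romance B', 'Romance D', 'Comedy C']
```

Deletion shortens the history by one item, while replacement keeps \( |S'| = |S| \) and fills the slot with an item that may carry useful preference signal.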

Method

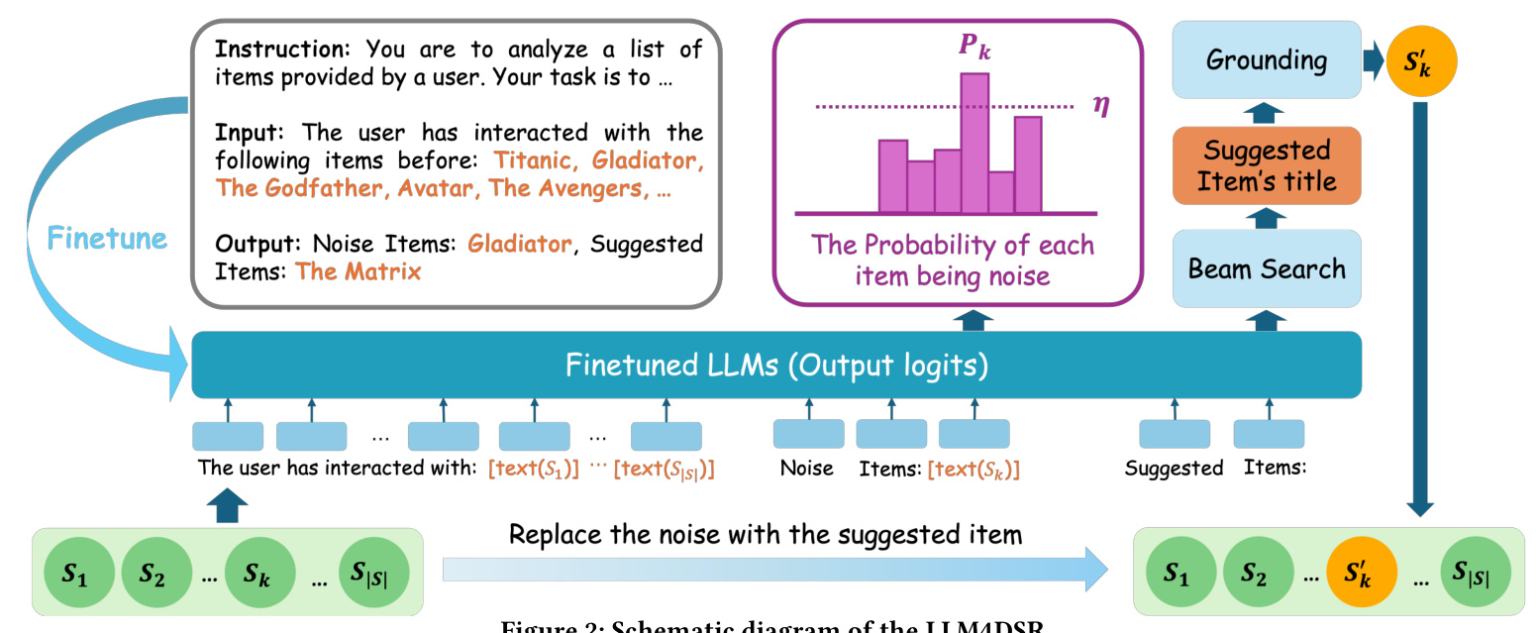

Training LLM as a Denoiser

To adapt pre-trained LLMs for denoising tasks, a self-supervised fine-tuning process is employed. An instruction dataset is constructed by replacing a proportion of items in sequences with randomly selected alternatives. The LLM is then fine-tuned to identify noisy items and suggest appropriate replacements.
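The self-supervised labels come for free because the corruption is done programmatically. A minimal sketch of that corruption step follows; the function name `inject_noise`, the default ratio, and the rule of forcing at least one noisy item per sequence are assumptions for illustration, not details taken from the paper.

```python
import random
from typing import List, Optional, Sequence, Tuple


def inject_noise(
    seq: Sequence[str],
    item_pool: Sequence[str],
    ratio: float = 0.1,
    rng: Optional[random.Random] = None,
) -> Tuple[List[str], List[int]]:
    """Replace about `ratio` of the items in `seq` with random items from `item_pool`.

    Returns the corrupted sequence and the replaced positions. Because the
    corruption is done here, these positions (and the original items at them)
    are free training labels. Assumes 0 < ratio <= 1 and that `item_pool`
    contains items outside `seq`.
    """
    if rng is None:
        rng = random.Random()
    corrupted = list(seq)
    # At least one noisy position per sequence (an assumption for this sketch).
    n_noise = min(len(seq), max(1, round(ratio * len(seq))))
    positions = sorted(rng.sample(range(len(seq)), n_noise))
    # Only draw replacements the user has not interacted with, so each swap is real noise.
    candidates = [item for item in item_pool if item not in seq]
    for pos in positions:
        corrupted[pos] = rng.choice(candidates)
    return corrupted, positions


rng = random.Random(0)
history = [f"item_{i}" for i in range(10)]
pool = [f"item_{i}" for i in range(100)]
noisy, noise_positions = inject_noise(history, pool, ratio=0.2, rng=rng)
# Original items at the corrupted positions, usable as replacement targets.
targets = {pos: history[pos] for pos in noise_positions}
```

Passing a seeded `random.Random` keeps the generated dataset reproducible across runs.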

Prompt for Self-Supervised Task:

- Instruction: Identify items that do not align with the main interests reflected by the majority of the items and suggest alternatives.

- Input: A list of items the user has interacted with.

- Output: Noise items and suggested replacements.
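The prompt above can be assembled into an instruction-tuning record roughly as follows. This is a sketch under stated assumptions: the numbered-list input format, the exact output wording, and the choice of the displaced original items as the "suggested replacements" are hypothetical, and `build_instruction_example` is an illustrative name.

```python
from typing import Dict, Sequence

INSTRUCTION = (
    "Identify items that do not align with the main interests reflected by "
    "the majority of the items and suggest alternatives."
)


def build_instruction_example(
    corrupted: Sequence[str],
    original: Sequence[str],
    noise_positions: Sequence[int],
) -> Dict[str, str]:
    """Turn one corrupted sequence into an instruction/input/output record."""
    # Input: the (noisy) interaction history as a numbered list of item titles.
    listed = "\n".join(f"{i + 1}. {title}" for i, title in enumerate(corrupted))
    # Output: the injected items, plus the items they displaced as suggested replacements.
    noise_items = [corrupted[p] for p in noise_positions]
    replacements = [original[p] for p in noise_positions]
    output = (
        f"Noise items: {'; '.join(noise_items)}\n"
        f"Suggested replacements: {'; '.join(replacements)}"
    )
    return {"instruction": INSTRUCTION, "input": listed, "output": output}


example = build_instruction_example(
    corrupted=["Drama A", "Sci-Fi X", "Comedy C"],
    original=["Drama A", "Romance B", "Comedy C"],
    noise_positions=[1],
)
print(example["output"])
# Noise items: Sci-Fi X
# Suggested replacements: Romance B
```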

Uncertainty Estimation

An uncertainty estimation module is introduced to assess the reliability of the identified noisy items. The LLM is used to compute the probability of each item being noise, and only items whose probability exceeds a threshold \( \eta \) are classified as noise, so that low-confidence predictions do not alter the sequence.
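A minimal sketch of the thresholding step, assuming each flagged item's noise probability is derived from the per-token log-probabilities the LLM assigns when emitting that item's title in its noise list. The aggregation rule (a product of token probabilities), the function names, and the numbers below are all assumptions for illustration.

```python
import math
from typing import Dict, List


def item_noise_probability(token_logprobs: List[float]) -> float:
    """Probability that the LLM emits this item (all of its tokens) as noise.

    Assumption: the item-level score is the product of its token probabilities,
    computed by summing log-probabilities rather than multiplying raw values.
    """
    return math.exp(sum(token_logprobs))


def select_noise(item_token_logprobs: Dict[str, List[float]], eta: float) -> List[str]:
    """Keep only the flagged items whose noise probability exceeds eta."""
    return [
        item
        for item, logprobs in item_token_logprobs.items()
        if item_noise_probability(logprobs) > eta
    ]


# Hypothetical per-token log-probabilities for two items the LLM flagged.
scores = {
    "Sci-Fi X": [math.log(0.9), math.log(0.95)],  # confident: about 0.855
    "Comedy C": [math.log(0.3), math.log(0.5)],   # uncertain: about 0.15
}
print(select_noise(scores, eta=0.5))  # ['Sci-Fi X']
```

A plain product tends to penalize items with long titles; a length-normalized score (the geometric mean of token probabilities) is one alternative if that bias matters.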

Replace Noise with Suggested Items

Instead of simply removing noise, LLM4DSR replaces it with items that better match the user’s preferences. The LLM generates a suggested item as free text, which may not exactly match any item in the catalog; a grounding step therefore maps the suggestion to an existing item in the item set, ensuring it is a valid replacement.
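The summary does not specify the grounding technique. One common approach, assumed here, is to embed the generated text and choose the catalog item whose embedding is nearest in L2 distance. The embeddings below are hypothetical two-dimensional vectors, and the optional `exclude` argument (for example, skipping items already in the user's history) is an illustrative assumption.

```python
import math
from typing import Dict, Iterable, Sequence


def ground(
    generated_embedding: Sequence[float],
    item_embeddings: Dict[str, Sequence[float]],
    exclude: Iterable[str] = (),
) -> str:
    """Map a free-text suggestion (via its embedding) to the nearest catalog item.

    Items in `exclude` are skipped. Raises ValueError if no candidates remain.
    """
    skip = set(exclude)
    candidates = [item for item in item_embeddings if item not in skip]
    # Euclidean (L2) distance in embedding space; math.dist requires Python 3.8+.
    return min(candidates, key=lambda item: math.dist(generated_embedding, item_embeddings[item]))


# Hypothetical 2-d embeddings; real ones would come from an embedding model.
catalog = {
    "Romance D": [0.90, 0.10],
    "Romance B": [0.85, 0.15],
    "Sci-Fi Y": [0.10, 0.90],
}
suggestion = [0.88, 0.12]  # embedding of the LLM's generated title
print(ground(suggestion, catalog))                         # Romance D
print(ground(suggestion, catalog, exclude=["Romance D"]))  # Romance B
```

For a large catalog, the linear scan would typically be swapped for an approximate nearest-neighbor index, but the mapping logic stays the same.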

Experiments

Experimental Settings

Three real-world datasets (Amazon Video Games, Amazon Toys and Games, and Amazon Movies) were used, with sequences segmented using a sliding window of length 11. Two noise settings were tested: natural noise in real-world datasets and artificial noise introduced by randomly replacing items in sequences.
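A sketch of sliding-window segmentation with window length 11. It assumes a stride of 1, treats the first 10 items of each window as the history and the 11th as the next-item target, and keeps a sequence shorter than the window as a single sample; these choices are assumptions rather than details confirmed by the summary.

```python
from typing import List, Sequence, Tuple


def sliding_windows(seq: Sequence[str], window: int = 11) -> List[Tuple[List[str], str]]:
    """Split one user's chronological history into (history, target) pairs."""
    if len(seq) < 2:
        return []  # nothing to predict
    if len(seq) <= window:
        # Short sequence: one sample using everything but the last item as history.
        return [(list(seq[:-1]), seq[-1])]
    # Stride 1: each window yields window-1 history items plus one target.
    return [
        (list(seq[start:start + window - 1]), seq[start + window - 1])
        for start in range(len(seq) - window + 1)
    ]


user_history = [f"i{k}" for k in range(12)]
samples = sliding_windows(user_history)
print(len(samples))  # 2
```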

Performance Comparison (RQ1)

LLM4DSR consistently outperformed existing state-of-the-art denoising methods across various datasets and backbones. Explicit denoising methods like STEAM and HSD showed diminished effectiveness when retrained on different backbones, while implicit methods like FMLP and CL4SRec exhibited unstable performance.

Ablation Study (RQ2)

An ablation study was conducted to verify the contributions of various components of LLM4DSR. The results highlighted the effectiveness of each component, particularly the replacement operation, which significantly improved performance by providing items that better align with user interests.

Case Study (RQ3)

A case study demonstrated how LLM4DSR leverages open knowledge to identify and replace noise. For example, in a user’s viewing history predominantly consisting of drama, romance, and comedy films, the LLM identified a science fiction film as noise and suggested a more appropriate replacement.

Related Work

Sequential Recommendation

Sequential recommendation systems predict the next item based on historical interactions, considering the temporal order to capture dynamic changes in user interests. Various deep learning models, such as RNNs, CNNs, and self-attention mechanisms, have been employed to enhance these systems.

Denoising Recommendation

Denoising methods aim to mitigate the impact of noisy data on recommendation systems. Implicit methods reduce noise influence without explicitly removing it, while explicit methods directly modify sequence content. LLM4DSR leverages the extensive open knowledge of LLMs to enhance denoising effectiveness.

LLMs for Recommendation

LLMs have been utilized in various recommendation tasks, including collaborative filtering, sequential recommendation, and click-through rate (CTR) prediction. They have also been employed for explaining item embeddings, explaining recommendation results, and conversational recommendation. LLM4DSR is the first to explore LLMs for sequential recommendation denoising.

Conclusion

LLM4DSR is a novel method for sequential recommendation denoising using LLMs. It leverages the extensive open knowledge embedded in LLMs to identify and replace noisy interactions, significantly enhancing recommendation performance. Future research could focus on transferring the denoising capabilities of LLMs to smaller models to reduce computational overhead.

Ethical Considerations

Denoising methods transfer the model’s prior knowledge to the data, potentially amplifying biases. It is therefore important to verify that the outputs of the pre-trained LLM are fair and to conduct bias testing when selecting the backbone model.