1. Abstract

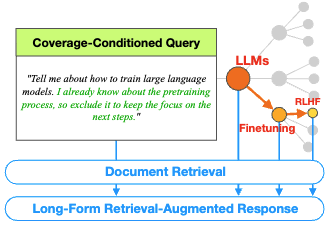

This paper addresses the challenge of generating tailored long-form responses from large language models (LLMs) in coverage-conditioned (C2) scenarios, where users specify which range of information a response should include or leave out. The authors introduce QTREE, a dataset of 10K sets of information-seeking queries, each decomposed hierarchically into diverse perspectives on a topic, and QPLANNER, a 7B language model trained on QTREE to generate customized query outlines for C2 queries. Using QTREE and QPLANNER, the paper shows that query outlining is effective in C2 scenarios and that preference alignment training yields better outlines and, in turn, better long-form responses.

2. Quick Read

a. Research Methodology

The paper handles C2 queries through query outlining: it first plans a set of queries that fits the requested coverage and then builds the long-form response from that outline. The key components of the methodology are:

- QTREE Construction: Decompose each user query into a hierarchy of diverse subtopics (the QTREE) that lays out the range of information an LLM can cover on the topic.

- Coverage Query Generation: Combine a background query randomly selected from QTREE with an intent operation (inclusion or exclusion) to create a coverage query (qcov) that constrains which information the response should cover.

- Outline Generation and Evaluation: Use an LLM to extract candidate outlines from QTREE for each C2 query, then have another LLM judge their quality. The best-scoring outline is selected for further use.

- QPLANNER Training: Train a 7B language model (QPLANNER) to generate customized outlines for C2 queries by leveraging the extracted outlines and preference alignment training.
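The data-construction steps above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration: the `QNode` class, the helper names, the `Focus on` / `Do not cover` phrasing, and the toy judge are assumptions made here, not the paper's code or prompts. In the paper, both outline extraction and judging are done by LLMs; the `score` callable merely stands in for the judge.

```python
from __future__ import annotations

import random
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class QNode:
    """A node in a hypothetical QTREE: a query plus finer-grained subqueries."""
    query: str
    children: list[QNode] = field(default_factory=list)

    def all_queries(self) -> list[str]:
        # Depth-first (pre-order) walk that includes this node's own query.
        out = [self.query]
        for child in self.children:
            out.extend(child.all_queries())
        return out


def make_coverage_query(tree: QNode, rng: random.Random) -> tuple[str, str, str]:
    """Pair a randomly chosen background query with an inclusion/exclusion intent."""
    background = rng.choice(tree.all_queries()[1:])  # skip the root query itself
    intent = rng.choice(["inclusion", "exclusion"])
    prefix = "Focus on" if intent == "inclusion" else "Do not cover"
    return background, intent, f"{prefix}: {background}"


def select_best_outline(
    candidates: list[list[str]], score: Callable[[list[str]], float]
) -> list[str]:
    """Keep the candidate outline that the judge (any callable here) scores highest."""
    return max(candidates, key=score)


# Toy example: the topic, queries, and scoring rule are made up for illustration.
tree = QNode("How does solar power work?", [
    QNode("What are photovoltaic cells?", [QNode("How efficient are silicon panels?")]),
    QNode("What does solar power cost?"),
])
background, intent, q_cov = make_coverage_query(tree, random.Random(0))

# Pretend a C2 query asked to exclude this subtopic.
excluded = "What does solar power cost?"
candidates = [
    ["What are photovoltaic cells?", "What does solar power cost?"],
    ["What are photovoltaic cells?", "How efficient are silicon panels?"],
]


def toy_judge(outline: list[str]) -> float:
    # Stand-in for the LLM evaluator: reward coverage, punish the excluded subtopic.
    return len(outline) - 10 * outline.count(excluded)


best = select_best_outline(candidates, toy_judge)
```

Passing the judge in as a callable keeps the selection step independent of whichever LLM does the scoring.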

Innovation and Improvements:

- Focus on C2 Queries: The paper specifically targets tailored responses for C2 queries, a setting the authors argue existing research has largely overlooked.

- Query Outlining: The paper proposes query outlining as an effective strategy to handle complex C2 queries by exploring diverse perspectives and generating structured outlines.

- Preference Alignment Training: The paper demonstrates the benefits of preference alignment training for improving the quality of generated outlines and long-form responses.
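The summary does not name the exact preference alignment objective. Assuming a Direct Preference Optimization (DPO) style loss, one common choice, the sketch below shows how judged candidate outlines could be turned into (chosen, rejected) pairs and how one pair would be scored. The pairing rule (best versus worst outline), the function names, and `beta=0.1` are hypothetical; the log-probabilities would come from the policy model and a frozen reference model.

```python
from __future__ import annotations

import math


def preference_pair(
    scored: list[tuple[list[str], float]],
) -> tuple[list[str], list[str]]:
    """Return (chosen, rejected): the highest- and lowest-scoring outlines."""
    if len(scored) < 2:
        raise ValueError("need at least two scored outlines to form a pair")
    ranked = sorted(scored, key=lambda item: item[1])
    return ranked[-1][0], ranked[0][0]


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    if x > 0:
        return x + math.log1p(math.exp(-x))
    return math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen: float,
    policy_rejected: float,
    ref_chosen: float,
    ref_rejected: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one pair, given summed log-probabilities of each outline.

    loss = -log sigmoid(beta * [(pi_c - ref_c) - (pi_r - ref_r)])
    """
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)
```

When the policy matches the reference model the loss is log 2; raising the policy's likelihood of the chosen outline relative to the rejected one pushes it toward zero.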

b. Experimentation

The paper evaluates the approach with both automatic and human evaluations:

- Automatic Evaluation: LLMs score the generated outlines by how well they align with the C2 queries. QPLANNER trained with preference alignment outperforms the baseline models.

- Human Evaluation: Human evaluators rate the quality of generated outlines and long-form responses. The results confirm the findings from automatic evaluation and demonstrate the benefits of preference alignment training.
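As a hedged sketch of how per-query judge scores might be aggregated into system-level comparisons, the helpers below compute a mean score per system and a pairwise win rate with ties counted as half. Both the aggregation scheme and the names are assumptions; the summary only says that LLMs (and human raters) score the outlines.

```python
from __future__ import annotations

from statistics import mean


def mean_scores(scores: dict[str, list[float]]) -> dict[str, float]:
    """Average judge score per system (e.g. one score per C2 query)."""
    return {system: mean(values) for system, values in scores.items()}


def win_rate(a: list[float], b: list[float]) -> float:
    """Share of queries where system A's outline scores above system B's.

    Both lists must be aligned by query; ties count as half a win.
    """
    if len(a) != len(b) or not a:
        raise ValueError("score lists must be non-empty and aligned by query")
    wins = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x, y in zip(a, b))
    return wins / len(a)
```

A win rate is less sensitive than a raw mean to how a particular judge calibrates its score scale.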

c. Strengths and Potential Impact

- Effective C2 Query Handling: By generating outlines tailored to the requested coverage, the approach produces long-form responses that better match what users actually asked for.

- Flexible and Scalable: The methodology is not tied to particular topics or information needs, and in principle it could be scaled to larger datasets and LLMs.

- Potential Applications: The proposed approach has potential applications in various domains, such as information retrieval, question answering, and content generation.

3. Summary

a. Contributions

- Exploration of Query Outlining in C2 Scenarios: The paper explores the effectiveness of query outlining in C2 scenarios by constructing QTREE and demonstrating its benefits.

- QPLANNER Model: The paper introduces QPLANNER, a 7B language model trained to generate customized outlines for C2 queries, improving the quality of long-form responses.

- Evaluation and Analysis: The paper conducts automatic and human evaluations to verify the effectiveness of the proposed approach and analyzes the impact of preference alignment training.

b. Key Innovations

- Focus on C2 Queries: The paper specifically addresses the challenge of generating tailored responses for C2 queries, contributing to the development of more effective LLM-based systems.

- Preference Alignment Training: The paper demonstrates the benefits of preference alignment training for improving the quality of generated outlines and long-form responses, providing valuable insights for future research.

c. Future Research Directions

- Flexible Outline Generation: Explore more flexible ways to generate outlines with varying numbers of queries based on the complexity of the topic.

- Fact Verification: Incorporate fact verification techniques to ensure the accuracy of retrieved documents and generated responses.

- Benchmarking: Develop comprehensive benchmarks for evaluating long-form responses in C2 scenarios to facilitate further research and development.

- Application Exploration: Investigate the application of the proposed approach in various domains, such as education, healthcare, and customer service.

View PDF: https://arxiv.org/pdf/2407.01158