Authors:

Omar Ghazal, Tian Lan, Shalman Ojukwu, Komal Krishnamurthy, Alex Yakovlev, Rishad Shafik

Paper:

https://arxiv.org/abs/2408.09456

Introduction

In modern computing, the von Neumann bottleneck is a significant challenge. It arises from the frequent transfer of data between memory and processing units, which limits data throughput and incurs substantial energy costs. Traditional architectures that rely on this constant data movement struggle to keep up with the demands of big data and machine learning (ML) applications.

In-memory computing (IMC) offers a promising solution by processing data directly within the memory array, which greatly reduces the need for data movement. This approach leverages the inherent characteristics of memory cells, such as low access time, high cycling endurance, and non-volatility. Emerging non-volatile memory (NVM) devices, such as resistive random-access memory (ReRAM), phase-change memory (PCM), and ferroelectric random-access memory (FeRAM), have shown potential for use in IMC systems.

This paper introduces a novel approach that utilizes floating-gate Y-Flash memristive devices, manufactured with a standard 180 nm CMOS process, for in-memory computing. These devices offer analog tunability and moderate device-to-device variation, essential for reliable decision-making in ML applications. The study focuses on integrating the Tsetlin Machine (TM), a state-of-the-art ML algorithm, with Y-Flash memristive devices to enhance scalability and on-edge learning capabilities.

Related Work

In-Memory Computing and Non-Volatile Memory Devices

In-memory computing (IMC) has gained attention as a solution to the von Neumann bottleneck. By processing data directly within memory arrays, IMC reduces data movement and improves computational efficiency. Various NVM devices, such as ReRAM, PCM, and FeRAM, have been explored for IMC applications due to their low access time, high endurance, and non-volatility.

Tsetlin Machines and Learning Automata

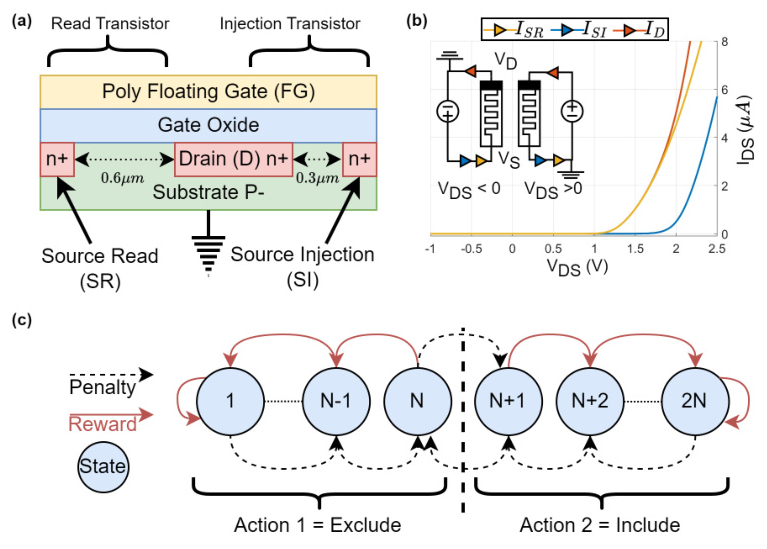

The Tsetlin Machine (TM) is an ML algorithm that employs a learning automaton called the Tsetlin Automaton (TA) as its primary learning component. The TA constructs logical propositions linking input-output pairs in classification tasks, behaving similarly to traditional Finite State Machines (FSMs). The TA incorporates reinforcement learning principles, allowing it to undertake actions based on reward and penalty feedback during training.
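
The reward and penalty behavior described above can be illustrated with a minimal sketch of a two-action Tsetlin Automaton. The class name, the number of states per action, and the starting state are illustrative assumptions, not values taken from the paper.

```python
class TsetlinAutomaton:
    """Two-action automaton with 2 * n states, numbered 1..2n (illustrative)."""

    def __init__(self, n_states_per_action=3):
        self.n = n_states_per_action
        # Assumption: start at the decision boundary, on the "exclude" side.
        self.state = self.n

    def action(self):
        # States 1..n select action 0 (exclude); n+1..2n select action 1 (include).
        return 1 if self.state > self.n else 0

    def reward(self):
        # Reinforce the current action: move away from the decision boundary.
        if self.action() == 1:
            self.state = min(self.state + 1, 2 * self.n)
        else:
            self.state = max(self.state - 1, 1)

    def penalize(self):
        # Weaken the current action: move toward, and possibly across, the boundary.
        if self.action() == 1:
            self.state -= 1
        else:
            self.state += 1


ta = TsetlinAutomaton(n_states_per_action=3)
ta.penalize()  # crosses the boundary: the action flips from exclude to include
```

Deeper states represent higher confidence in the current action, so several penalties are needed to flip a well-reinforced decision.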

Challenges in Integrating Tsetlin Machines with Memristors

Integrating Tsetlin Machines with memristors poses challenges, particularly in mapping the multi-state dynamics of TAs onto the analog tunable resistance levels of memristors. Existing memristor technologies often require specialized materials or additional process steps to integrate with industry-standard CMOS processes. The Y-Flash memristor, developed using a standard CMOS process, offers several advantages, including full CMOS process compatibility, low cycle-to-cycle variations, high yield, low power consumption, analog conductance tunability, self-selection, and high retention time.

Research Methodology

Y-Flash Cell Characteristics

The Y-Flash device consists of two transistors connected in parallel: the read transistor (SR) and the injection transistor (SI). These transistors share a common drain and a polysilicon floating gate. The SR is optimized for low-voltage read operation, while the SI is designed for high-voltage program/erase operations. The Y-Flash device can function as a two-terminal memristor by shorting the two sources together, allowing for simpler addressing and more flexibility in different operation modes.

Mapping Tsetlin Automaton States to Y-Flash Cell

The methodology involves encoding the states of the TA as conductances utilizing a Y-Flash memristive device. Each distinct state of the TA is translated into a unique conductance state within the Y-Flash cell. The training procedure employs an accumulating divergence counter (±DC) to adjust the conductance based on the accumulated TA state differences over multiple training data points. When the accumulated DC value surpasses a specified threshold, a programming or erasing pulse is issued to update the conductance.
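
The divergence-counter update described above might be sketched as follows. This is a toy model under stated assumptions: `ToyYFlashCell`, the integer conductance levels, the pulse directions (erase raises conductance, program lowers it), the threshold value, and the reset-to-zero behavior after a pulse are all hypothetical choices made for illustration, not details confirmed by the paper.

```python
class ToyYFlashCell:
    """Hypothetical cell model: conductance is an integer level index."""

    def __init__(self, n_levels=40, level=20):
        self.n_levels = n_levels
        self.level = level

    def erase_pulse(self):
        # Assumption: one erase pulse raises conductance by one level.
        self.level = min(self.level + 1, self.n_levels - 1)

    def program_pulse(self):
        # Assumption: one program pulse lowers conductance by one level.
        self.level = max(self.level - 1, 0)


class DivergenceCounter:
    """Accumulates TA state changes and pulses the cell past a threshold."""

    def __init__(self, cell, threshold=3):
        self.cell = cell
        self.threshold = threshold
        self.dc = 0

    def accumulate(self, ta_state_delta):
        self.dc += ta_state_delta
        if self.dc > self.threshold:
            self.cell.erase_pulse()
            self.dc = 0  # assumption: the counter resets after a pulse
        elif self.dc < -self.threshold:
            self.cell.program_pulse()
            self.dc = 0


cell = ToyYFlashCell()
counter = DivergenceCounter(cell, threshold=3)
for delta in [+1, +1, -1, +1, +1, +1]:
    counter.accumulate(delta)
```

Batching state changes this way means the cell receives one pulse only after the net drift exceeds the threshold, so opposing updates cancel in the counter instead of costing write pulses.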

Experimental Design

Device-to-Device and Cycle-to-Cycle Variations

The experimental design includes thorough validations via device-to-device (D2D) and cycle-to-cycle (C2C) analyses. The Y-Flash device’s performance, reliability, and scalability are evaluated through these analyses. The experiments involve programming and erasing the device to achieve multiple conductance states and measuring the read currents to assess the device’s behavior.

Training the Tsetlin Machine

The TM is trained using the XOR problem dataset to demonstrate the methodology’s effectiveness. The decision boundary for the TA is set, and the state transitions are recorded during the training process. The mapping procedure aims to reduce the complexity of state transitions while maintaining consistency with the TA’s learning dynamics.
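
For orientation, XOR can be expressed as an OR of two conjunctive clauses, where each literal is either included or excluded according to its automaton's action. The sketch below hand-writes those include/exclude decisions to show the target form; it is not the paper's training procedure or trained output, and the literal ordering is an assumption.

```python
from itertools import product


def clause(x, include):
    """AND over the included literals; literal order is [x1, x2, NOT x1, NOT x2]."""
    literals = [x[0], x[1], 1 - x[0], 1 - x[1]]
    return int(all(lit for lit, inc in zip(literals, include) if inc))


# Each tuple holds one include (1) / exclude (0) decision per literal,
# i.e. the action of one automaton per literal.
XOR_CLAUSES = [
    (1, 0, 0, 1),  # x1 AND NOT x2
    (0, 1, 1, 0),  # NOT x1 AND x2
]


def predict(x):
    # The output is 1 if any clause fires.
    return int(any(clause(x, inc) for inc in XOR_CLAUSES))


xor_table = {x: predict(x) for x in product((0, 1), repeat=2)}
```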

Results and Analysis

Conductance States and Device Performance

The Y-Flash device demonstrates the ability to achieve up to 40 distinct conductance states, extendable to 1000 through careful tuning. The device’s current-voltage (I_DS-V_DS) behavior under positive and negative bias conditions is analyzed, showing nonlinearity and low reverse current, which minimizes sneak-path currents in crossbar arrays.

Cycle-to-Cycle and Device-to-Device Variations

The C2C and D2D variations are evaluated through cycling performance tests and measurements across multiple devices. The results indicate minimal degradation in device performance over repeated cycling, with reliable conductance switching throughout the testing period. The D2D variations show high yield and uniformity, attributed to the CMOS fabrication flow and the high uniformity of the analog switching involved.

Power Consumption and Energy Efficiency

The power consumption and energy efficiency of the Y-Flash device are calculated based on the conductance states achieved during programming and erasing modes. The results highlight the device’s low power consumption and energy efficiency, making it suitable for in-memory computing applications.
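
As a rough illustration of how power and energy relate to a conductance state, the sketch below uses a simple ohmic approximation (P = G·V², E = P·t). The function names and all numeric values are hypothetical, and the real device is nonlinear, so this is only a first-order estimate rather than the calculation used in the paper.

```python
def power_w(conductance_s, voltage_v):
    """Ohmic approximation of power drawn at a given conductance state."""
    return conductance_s * voltage_v ** 2


def pulse_energy_j(conductance_s, voltage_v, pulse_width_s):
    """Energy of a single pulse of the given width under the same approximation."""
    return power_w(conductance_s, voltage_v) * pulse_width_s


# Hypothetical values for illustration only (not measured data):
# a 1 uS conductance state, a 2 V bias, and a 1 us pulse.
p = power_w(1e-6, 2.0)
e = pulse_energy_j(1e-6, 2.0, 1e-6)
```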

Overall Conclusion

This paper presents a novel in-memory computing approach utilizing Y-Flash memristive devices to address data movement and computational efficiency challenges in machine learning architectures. By representing the learning automaton of the Tsetlin Machine within a single Y-Flash cell, the study demonstrates a scalable and energy-efficient hardware implementation that enhances on-edge learning capabilities. The findings highlight the potential of the Y-Flash memristor to advance the development of reliable in-memory computing for machine learning applications. Future research will focus on addressing reliability challenges in memristor devices and optimizing the integration of Tsetlin Machines with Y-Flash technology for broader machine-learning applications.