Authors:

Hongyu Li, Snehal Dikhale, Jinda Cui, Soshi Iba, Nawid Jamali

Paper:

https://arxiv.org/abs/2408.08312

Introduction

Tactile sensing is essential for robots to interact with objects in a manner similar to humans. Tactile sensors can be broadly categorized into vision-based and taxel-based sensors. Vision-based sensors have gained popularity due to their pixel-based representation, which is conducive to deep learning. However, their size limits their application in multi-fingered hands. Taxel-based sensors, despite their challenges such as low spatial resolution and non-standardized representations, offer unique advantages in robotic manipulation. This paper introduces HyperTaxel, a novel framework for enhancing the spatial resolution of taxel-based tactile signals using contrastive learning. The framework aims to map low-resolution taxel signals to high-resolution contact surfaces, leveraging joint probability distributions across multiple contacts to improve accuracy.

Related Work

Representation learning involves encoding informative features from raw data for machine learning tasks. While most studies focus on vision and language domains, tactile representation learning remains underexplored. Previous works have utilized supervised and self-supervised learning methods for tactile sensors, but the transfer from image-based to taxel-based data is challenging. Contact localization has been used to achieve tactile super-resolution, but recent advancements have shifted towards learning-based approaches. This paper proposes a geometrically-informed hyper-resolution algorithm that is invariant to sensor arrangements.

Methodology

Representation Learning

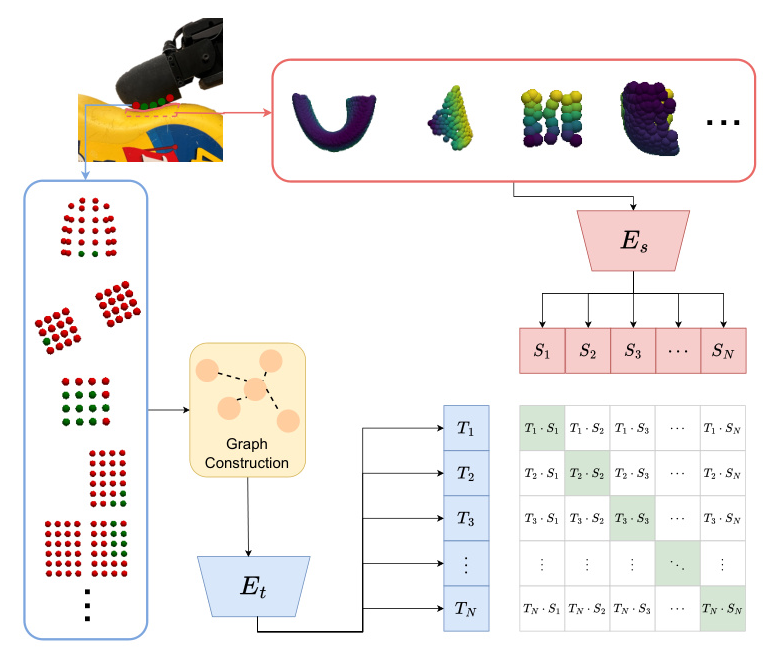

The first stage of the proposed solution involves learning a geometrically-informed representation of tactile signals using a graph neural network and contrastive learning.

Taxel Representation

Tactile data can be represented as point clouds, images, or graphs. The graph representation is chosen for its ability to encode both the spatial arrangement of taxel signals and the absence of contact. An undirected spatial graph is constructed, where vertices represent taxels and edges represent spatial proximity. The vertices have features such as 3D coordinates and corresponding signals. A radius graph is used to construct edges, ensuring stronger interactions between closer sensors.
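The graph construction described above can be sketched in a few lines of plain Python. The pad layout, the 4 mm pitch, the 5 mm radius, and the function name `radius_graph` are illustrative assumptions, not values taken from the paper.

```python
import math
from itertools import combinations

def radius_graph(positions, radius):
    """Return undirected edges (i, j), i < j, between taxels at most `radius` apart."""
    edges = []
    for i, j in combinations(range(len(positions)), 2):
        if math.dist(positions[i], positions[j]) <= radius:
            edges.append((i, j))
    return edges

# Hypothetical 2x2 taxel pad with 4 mm pitch (coordinates in metres).
taxel_xyz = [(0.0, 0.0, 0.0), (0.004, 0.0, 0.0), (0.0, 0.004, 0.0), (0.004, 0.004, 0.0)]
taxel_signal = [0.0, 0.8, 0.0, 0.3]  # a zero reading marks a taxel that is not in contact

# Vertex feature = 3D coordinate concatenated with the taxel reading.
node_features = [list(p) + [s] for p, s in zip(taxel_xyz, taxel_signal)]

# With a 5 mm radius only direct neighbours are linked; the diagonals (about 5.7 mm) are not.
edges = radius_graph(taxel_xyz, radius=0.005)
```

Because taxels without contact stay in the graph with a zero signal, the absence of contact is encoded alongside the spatial layout.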

Tactile Encoder

The constructed taxel graph is processed using a graph neural network (GNN) with message passing layers, a pooling layer, and a non-linear output head. The EdgeConv operator is used to leverage relative features between vertices, capturing the geometric features of the contact surface.
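As a rough illustration of one message passing layer, the sketch below applies the EdgeConv rule, in which each vertex takes a channel-wise maximum over a learned function of its own feature and the relative feature to each neighbour. The single dense layer, the random weights, the feature sizes, and the global max pooling are assumptions made for this example and do not reflect the paper's actual architecture.

```python
import random

def linear_relu(weights, bias, vec):
    """One dense layer followed by ReLU, standing in for the edge MLP h."""
    return [max(0.0, sum(w * v for w, v in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]

def edge_conv(features, edges, weights, bias):
    """x_i' = max over neighbours j of h([x_i, x_j - x_i])."""
    neighbours = {i: [] for i in range(len(features))}
    for i, j in edges:  # undirected graph: messages flow both ways
        neighbours[i].append(j)
        neighbours[j].append(i)
    out_dim = len(bias)
    out = []
    for i, x_i in enumerate(features):
        messages = [
            linear_relu(weights, bias, x_i + [b - a for a, b in zip(x_i, features[j])])
            for j in neighbours[i]
        ]
        # Channel-wise max aggregation; isolated vertices get a zero vector.
        out.append([max(m[c] for m in messages) for c in range(out_dim)]
                   if messages else [0.0] * out_dim)
    return out

rng = random.Random(0)
features = [[0.0, 0.0, 0.0, 0.0], [0.004, 0.0, 0.0, 0.8], [0.0, 0.004, 0.0, 0.3]]
edges = [(0, 1), (0, 2)]
in_dim, out_dim = 4, 8
weights = [[rng.uniform(-1, 1) for _ in range(2 * in_dim)] for _ in range(out_dim)]
bias = [0.0] * out_dim

updated = edge_conv(features, edges, weights, bias)
# Global max pooling collapses the per-vertex features into one graph-level vector.
graph_embedding = [max(v[c] for v in updated) for c in range(out_dim)]
```

Feeding the difference `x_j - x_i` into the edge function is what lets the layer respond to relative geometry between taxels rather than to absolute positions alone.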

Sensor-Object Contact Surface Representation

The contact surface between the sensor and the object is represented as a cube encapsulating the object’s surface in contact with the sensor. The cube’s dimensions match the sensor’s, and its depth represents penetration into the object’s surface. This contact surface patch is used to learn the correspondence between low-resolution tactile signals and high-resolution object surfaces.
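One simple way to extract such a patch is to keep the object surface points that fall inside a sensor-sized box. The sketch below assumes the points are already expressed in the sensor frame, with the origin at the pad centre and +z pointing into the object; the frame convention, the dimensions, and the name `crop_contact_patch` are hypothetical.

```python
def crop_contact_patch(points_in_sensor_frame, width, height, depth):
    """Keep object surface points inside a sensor-sized box.

    Assumed frame: origin at the pad centre, x/y spanning the pad,
    +z pointing into the object (penetration direction).
    """
    return [
        (x, y, z)
        for x, y, z in points_in_sensor_frame
        if abs(x) <= width / 2 and abs(y) <= height / 2 and 0.0 <= z <= depth
    ]

# Four sampled surface points (metres); two lie outside the box.
points = [
    (0.001, 0.002, 0.0005),  # inside
    (0.02, 0.0, 0.001),      # too far along x
    (0.0, 0.0, 0.01),        # deeper than the allowed penetration
    (-0.004, 0.003, 0.0),    # inside, on the pad plane
]
patch = crop_contact_patch(points, width=0.01, height=0.01, depth=0.002)
```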

Learning the Tactile Representation

A contrastive learning framework is proposed to learn the tactile representation by exploiting the correspondence between the contact surface and tactile signals. The tactile graph is encoded into tactile embeddings, and the contact surface data is encoded into surface embeddings using PointNet. The dot product of the L2-normalized tactile and surface embeddings gives their cosine similarity, and a symmetric cross-entropy loss optimizes both encoders, pulling matching pairs together and pushing non-matching pairs apart in the embedding space.
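The loss can be sketched as follows, in the style of CLIP-like contrastive objectives. The temperature of 0.07 and the toy two-dimensional embeddings are assumptions for illustration; the paper's actual hyperparameters are not given here.

```python
import math

def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def cross_entropy_rows(logits):
    """Mean cross-entropy where the correct class of row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def symmetric_contrastive_loss(tactile, surface, temperature=0.07):
    t = [l2_normalize(v) for v in tactile]
    s = [l2_normalize(v) for v in surface]
    # logits[i][j] = cosine similarity of tactile i and surface j, scaled by temperature
    logits = [[sum(a * b for a, b in zip(ti, sj)) / temperature for sj in s] for ti in t]
    logits_t = [list(col) for col in zip(*logits)]  # surface-to-tactile direction
    return 0.5 * (cross_entropy_rows(logits) + cross_entropy_rows(logits_t))

tactile = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]   # surface i matches tactile i
swapped = [[0.1, 0.9], [0.9, 0.1]]   # surface i matches the other tactile sample

loss_aligned = symmetric_contrastive_loss(tactile, aligned)
loss_swapped = symmetric_contrastive_loss(tactile, swapped)
```

The loss is low when each tactile embedding is most similar to its own surface patch and high when the pairing is swapped, which is the signal that drives both encoders during training.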

Multi-Contact Localization for Hyper-Resolution

The second stage uses multi-contact localization to transform sparse touch data into detailed object surface geometry, achieving hyper-resolution. A contact database is collected offline, consisting of contact surface patches, corresponding contact signals, and sensor poses. During deployment, multiple sensors in contact with the object provide tactile readings, which are encoded into tactile embeddings. The similarity between each tactile embedding and the surface embeddings in the database is measured, and candidate poses are ranked. Distance-filtered sets are obtained for each sensor, and an optimal solution is found using a multipartite graph, maximizing the similarities between tactile and surface embeddings.
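The joint selection step can be illustrated with a brute-force search that picks one candidate per sensor, which corresponds to choosing one vertex from each part of a multipartite graph. This is a minimal sketch, not the paper's solver: it assumes that inter-sensor distances are known from hand kinematics and that candidates are filtered by how well their pairwise distances match those values. The names, the tolerance, and the toy numbers are hypothetical.

```python
import math
from itertools import combinations, product

def localize_contacts(candidates, sensor_distances, tolerance):
    """Pick one candidate per sensor, maximising the total similarity.

    candidates[k]    : list of (position, similarity) for sensor k,
                       e.g. its top-ranked database entries
    sensor_distances : {(a, b): distance between sensors a and b},
                       assumed known from hand kinematics
    """
    best_score, best_choice = -math.inf, None
    for choice in product(*[range(len(c)) for c in candidates]):
        # Reject combinations whose pairwise distances contradict the hand geometry.
        consistent = all(
            abs(math.dist(candidates[a][choice[a]][0], candidates[b][choice[b]][0])
                - sensor_distances[(a, b)]) <= tolerance
            for a, b in combinations(range(len(candidates)), 2)
        )
        if not consistent:
            continue
        score = sum(candidates[k][i][1] for k, i in enumerate(choice))
        if score > best_score:
            best_score, best_choice = score, choice
    return best_choice, best_score

candidates = [
    [((0.0, 0.0, 0.0), 0.9), ((0.05, 0.0, 0.0), 0.6)],   # sensor 0
    [((0.2, 0.0, 0.0), 0.95), ((0.03, 0.0, 0.0), 0.7)],  # sensor 1
]
sensor_distances = {(0, 1): 0.03}  # the two sensors are 3 cm apart

choice, score = localize_contacts(candidates, sensor_distances, tolerance=0.005)
```

In this toy case the most similar candidate for sensor 1 is rejected because it lies 20 cm from any candidate of sensor 0, so the geometrically consistent but lower-ranked candidate wins. Exhaustive enumeration grows exponentially with the number of sensors, so it only suits small candidate sets.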

Datasets

Two datasets were collected using NVIDIA Isaac Sim for a subset of YCB objects. The first dataset is a comprehensive database of tactile sensors interacting with objects, used to evaluate tactile representation learning. The second dataset consists of the Allegro Hand holding an object and executing random trajectories, used to evaluate in-hand 6D pose estimation.

Contact Database

A dataset capturing tactile experiences across the entire surface of an object was constructed. Tactile sensors were simulated using the Contact Sensor provided by Isaac Sim. Points on the object mesh were sampled, and tactile observations and corresponding poses were collected for each type of taxel sensor on the Allegro Hand.

In-Hand Object Dataset

A simulated dataset was collected to evaluate the framework on downstream tasks such as 6D in-hand pose estimation. The Allegro Hand held an object and executed random trajectories, collecting samples for training and validation.

Experiments

The experiments utilized the AdamW optimizer with a learning rate of 0.001. The tactile encoder was pre-trained for 100 epochs, and the pose estimation model was optimized for 500 epochs.

Qualitative Analysis of Learned Tactile Representation

A qualitative analysis using visualizations of tactile embeddings was performed. The tactile embeddings effectively captured geometric features of the contact surface, such as flatness, curvature, and edges, demonstrating consistency across different objects and sensor types.

Hyper-Resolution Performance Evaluation

The performance of the hyper-resolution algorithm was evaluated by comparing it with image-based and point-cloud-based approaches. The proposed method outperformed the baselines in terms of Chamfer distance and rank, demonstrating its effectiveness.
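For reference, a common form of the Chamfer distance between a predicted and a ground-truth point cloud is sketched below. Conventions differ (squared versus unsquared distances, sums versus means), and the variant the paper uses is not stated here, so this particular definition is an assumption.

```python
import math

def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean nearest-neighbour distance in both directions."""
    a_to_b = sum(min(math.dist(p, q) for q in b) for p in a) / len(a)
    b_to_a = sum(min(math.dist(q, p) for p in a) for q in b) / len(b)
    return a_to_b + b_to_a

predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
ground_truth = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]

same = chamfer_distance(predicted, predicted)
different = chamfer_distance(predicted, ground_truth)
```

Identical clouds score zero, and the score grows as the retrieved contact surface deviates from the true one, so lower values indicate better hyper-resolution.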

Comparison of Graph Operators and Constructors

An ablation study examined how different graph operators and constructors affect the learned tactile representation. The EdgeConv operator combined with the radius graph constructor achieved the best performance, effectively capturing relative features between taxels.

Effect of Multi-Contact Localization on Hyper-Resolution

The effect of the number of contacts on the hyper-resolution algorithm was evaluated. The quality of hyper-resolution improved with more contacts, since each additional contact provides more information and constraints about the object surface.

Effect of HyperTaxel on In-Hand 6D Pose Estimation

The effectiveness of the approach was evaluated by integrating it with ViTa, an existing visuotactile model for 6D pose estimation. The hyper-resolution method improved the performance of ViTa, reducing angular and position errors.
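Angular and position errors for a 6D pose are commonly computed as the geodesic angle between the predicted and true rotations and the Euclidean distance between the translations. The sketch below follows that common convention; the exact metric definitions used in the paper are not given here, so treat these formulas as an assumption.

```python
import math

def position_error(t_pred, t_true):
    """Euclidean distance between predicted and true translations."""
    return math.dist(t_pred, t_true)

def angular_error_deg(r_pred, r_true):
    """Geodesic angle between two 3x3 rotation matrices (nested lists), in degrees."""
    # trace(R_pred^T R_true) equals the sum of element-wise products.
    trace = sum(r_pred[i][j] * r_true[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # clamp against rounding
    return math.degrees(math.acos(cos_angle))

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # 90 degrees about z

angle = angular_error_deg(identity, rot_z_90)
offset = position_error((0.0, 0.0, 0.0), (0.003, 0.004, 0.0))
```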

Real Robot Results

The model trained on synthetic data was deployed on a multi-fingered gripper affixed to a Sawyer robot. The tactile sensors captured surface contact points on real YCB objects. The representation effectively distinguished different surface types, outperforming baselines in surface classification tasks.

Conclusions

HyperTaxel presents a novel framework for learning a geometrically-informed representation of taxel-based tactile signals to achieve hyper-resolution of contact surfaces. The framework leverages a graph-based representation and contrastive learning to map low-resolution taxel signals to high-resolution contact surfaces. Extensive experiments demonstrated the framework’s effectiveness in capturing geometric features, improving pose estimation performance, and enabling robust sim-to-real transfer. Future directions include incorporating temporal information, expanding the contact database, and applying the framework to other modalities.