Authors:

Kaushik Rangadurai, Siyang Yuan, Minhui Huang, Yiqun Liu, Golnaz Ghasemiesfeh, Yunchen Pu, Xinfeng Xie, Xingfeng He, Fangzhou Xu, Andrew Cui, Vidhoon Viswanathan, Yan Dong, Liang Xiong, Lin Yang, Liang Wang, Jiyan Yang, Chonglin Sun

Paper:

https://arxiv.org/abs/2408.06653

Introduction

In ads recommendation, the retrieval stage narrows millions of candidate ads down to a few thousand relevant ones. This paper introduces the Hierarchical Structured Neural Network (HSNN), an approach designed to address the limitations of traditional Embedding Based Retrieval (EBR) systems. HSNN uses richer user-ad interactions and more expressive model architectures in the retrieval stage while keeping inference cost sub-linear.

Related Work

Clustering Methods

Clustering algorithms are broadly categorized into hierarchical and partitional methods. Hierarchical clustering, such as agglomerative clustering, starts with small clusters and merges them. Partitional methods like K-means aim to minimize the sum of squared distances between data points and their closest cluster centers. Other techniques include expectation maximization (EM), spectral clustering, and non-negative matrix factorization (NMF).
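To make the K-means objective concrete, here is a minimal sketch in plain Python. It computes the sum of squared distances to the closest center and runs one assign-then-update step of Lloyd's algorithm. The function names and the toy data are illustrative assumptions, not taken from the paper.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans_objective(points, centers):
    """Sum of squared distances from each point to its closest center."""
    return sum(min(squared_distance(p, c) for c in centers) for p in points)

def lloyd_step(points, centers):
    """One assign-then-update iteration of Lloyd's algorithm."""
    groups = [[] for _ in centers]
    for p in points:
        nearest = min(range(len(centers)),
                      key=lambda j: squared_distance(p, centers[j]))
        groups[nearest].append(p)
    new_centers = []
    for center, group in zip(centers, groups):
        if not group:
            new_centers.append(center)  # keep an empty cluster's center unchanged
        else:
            dim = len(center)
            new_centers.append(
                [sum(p[d] for p in group) / len(group) for d in range(dim)]
            )
    return new_centers

# Toy data (assumed): two well-separated groups of 2-D points.
points = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
centers = [[0.0, 0.0], [1.0, 1.0]]
before = kmeans_objective(points, centers)  # 344.0
centers = lloyd_step(points, centers)       # [[0.0, 0.5], [10.0, 10.5]]
after = kmeans_objective(points, centers)   # 1.0
```

A single step already drops the objective sharply here because the initial centers were both placed near the first group.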

Embedding Based Retrieval

EBR systems have been widely adopted in search and recommendation systems. These systems use approximate nearest neighbor (ANN) algorithms to retrieve top relevant candidates efficiently. Techniques like tree-based algorithms, locality-sensitive hashing (LSH), and hierarchical navigable small world graphs (HNSW) are commonly used.
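As a point of reference, the sketch below shows the exact top-k search that ANN algorithms approximate: score every ad against the user embedding with a dot product and keep the k best. The embeddings, ad ids, and function names are made up for illustration. A production system would replace this linear scan with an ANN index.

```python
import heapq

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def top_k_exact(user_emb, ad_embs, k):
    """Return the ids of the k ads with the highest dot-product score."""
    scored = ((dot(user_emb, emb), ad_id) for ad_id, emb in ad_embs.items())
    return [ad_id for _, ad_id in heapq.nlargest(k, scored)]

# Hypothetical 2-D embeddings for three ads.
ad_embs = {"ad_a": [1.0, 0.0], "ad_b": [0.0, 1.0], "ad_c": [0.7, 0.7]}
result = top_k_exact([0.9, 0.1], ad_embs, k=2)  # ['ad_a', 'ad_c']
```

The scan costs one dot product per ad, which is the linear cost that tree-based indexes, LSH, and HNSW are designed to avoid.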

Generative Retrieval

Generative retrieval has emerged as a new paradigm for document retrieval, drawing parallels to ad retrieval where ads are treated as documents and users as queries. This approach uses a learnt codebook similar to the hierarchical cluster IDs proposed in HSNN.

Joint Optimization

Joint optimization systems, in which the item hierarchy and the large-scale retrieval model are optimized together, are closely related to HSNN. Methods like Deep Retrieval and tree-based models optimize both the tree structure and the model parameters jointly.

Motivation

The motivation for HSNN arises from the limitations of the current production baseline:

- Limited Interaction: The Two Tower architecture restricts user-ad interactions.

- Post-Training Clustering: Clustering runs after training, so it is unaware of the retrieval model's optimization objective.

- Embedding Updates: Frequent updates to embeddings necessitate new cluster indices, leading to delays.

Model Design

Features and Model Architecture

The Two Tower model, widely used in the industry, outputs fixed-size representations for users and ads, relying on dot-product interactions. HSNN enhances this by introducing interaction features and sophisticated MergeNet architectures.

Disjoint Optimization of Clustering and Retrieval Model

The current system architecture involves separate components for retrieval and clustering, leading to inefficiencies. HSNN proposes a jointly-optimized clustering and retrieval model to improve overall performance.

System Architecture

The system architecture of HSNN involves data loading, clustering, indexing, and serving stages. The process ensures that cluster information is up-to-date and available at serving time.

Proposed Method

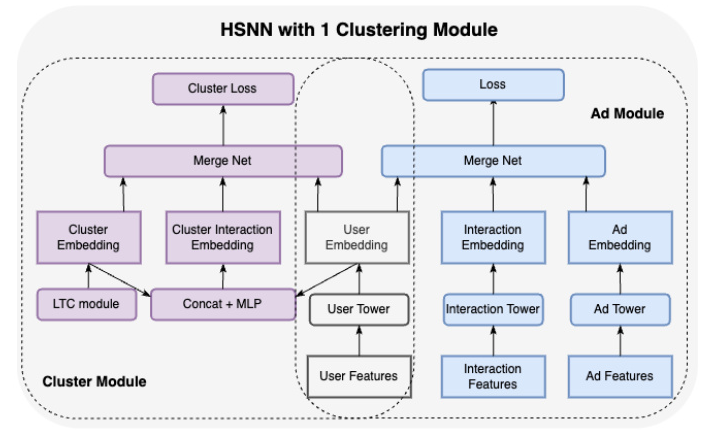

Hierarchical Structured Neural Network (HSNN)

Modeling

HSNN introduces three decoupled towers (user, ad, and interaction) and a MergeNet to capture higher-order interactions. The clustering modules use the Learning To Cluster (LTC) algorithm to generate cluster embeddings.

Indexing

The retrieval model is split into five parts for serving: user tower, ad tower, interaction tower, cluster model, and over-arch model. Cluster IDs and embeddings are indexed for efficient retrieval.

Serving

The serving process involves fetching user embeddings, scoring clusters, and ranking ads based on cluster scores. This approach reduces the number of inferences, improving efficiency.
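The serving flow can be sketched roughly as follows. This is a simplified illustration under stated assumptions: a plain dot product stands in for the learned cluster scoring model, and `retrieve_by_cluster`, `cluster_to_ads`, and the toy embeddings are hypothetical names and data, not HSNN's actual interfaces.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def retrieve_by_cluster(user_emb, cluster_embs, cluster_to_ads,
                        num_clusters, budget):
    """Score each cluster once, then emit ads from the best clusters."""
    # One scoring call per cluster, not per ad (dot product is a stand-in).
    scores = {cid: dot(user_emb, emb) for cid, emb in cluster_embs.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)[:num_clusters]
    candidates = []
    for cid in ranked:
        for ad_id in cluster_to_ads.get(cid, []):
            candidates.append((ad_id, scores[cid]))  # ad inherits cluster score
    return candidates[:budget]

# Hypothetical index: 3 clusters covering 5 ads.
cluster_embs = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [-1.0, 0.0]}
cluster_to_ads = {0: ["ad_1", "ad_2"], 1: ["ad_3"], 2: ["ad_4", "ad_5"]}
candidates = retrieve_by_cluster([0.8, 0.3], cluster_embs, cluster_to_ads,
                                 num_clusters=2, budget=3)
retrieved_ids = [ad_id for ad_id, _ in candidates]  # ['ad_1', 'ad_2', 'ad_3']
```

In this toy case the request needs three scoring calls (one per cluster) instead of five (one per ad). The saving grows with the ratio of ads to clusters.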

Learning To Cluster (LTC)

LTC is a gradient-descent based clustering algorithm that co-trains cluster assignments and centroid embeddings. It incorporates curriculum learning, cluster collapse prevention, and hierarchical clustering.

Curriculum Learning

Curriculum learning trains the model from easier to harder tasks, using a scheduling strategy to adjust the softmax temperature over iterations.
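The effect of a temperature schedule on soft cluster assignments can be illustrated with a small sketch. The exponential decay, its start and end values, and the direction (high temperature early, low temperature late, so assignments begin soft and end nearly one-hot) are assumptions chosen for illustration. The source does not specify the schedule.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Numerically stable softmax over logits divided by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def temperature_at(step, total_steps, start=1.0, end=0.1):
    """Exponential decay from start to end (hypothetical schedule)."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start * (end / start) ** frac

# Hypothetical similarity logits between one ad and three cluster centroids.
logits = [2.0, 1.0, 0.0]
early = softmax_with_temperature(logits, temperature_at(0, 1000))
late = softmax_with_temperature(logits, temperature_at(1000, 1000))
```

With these values, `early` spreads probability across all three clusters, while `late` places almost all of it on the first cluster.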

Cluster Distribution

To prevent cluster collapse, techniques like FLOPs regularizer and random replacement are employed, ensuring a balanced cluster distribution.
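The FLOPs regularizer is commonly defined as the sum, over output dimensions, of the squared mean absolute activation across a batch. The sketch below applies that definition to a batch of cluster assignment probabilities. Applying it to assignments in exactly this way is an assumption about how HSNN uses it, and the toy batches are made up.

```python
def flops_regularizer(assignments):
    """Sum over clusters of the squared mean absolute assignment value."""
    batch = len(assignments)
    num_clusters = len(assignments[0])
    means = [
        sum(abs(row[j]) for row in assignments) / batch
        for j in range(num_clusters)
    ]
    return sum(m * m for m in means)

# Four items spread evenly over four clusters.
balanced = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
# Four items all assigned to the same cluster.
collapsed = [[1.0, 0.0, 0.0, 0.0] for _ in range(4)]

balanced_penalty = flops_regularizer(balanced)    # 0.25
collapsed_penalty = flops_regularizer(collapsed)  # 1.0
```

For probability rows that sum to one, the penalty is smallest (1 divided by the number of clusters) when the batch is spread evenly, so adding it to the loss pushes training away from collapsed solutions.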

Introducing Hierarchy

HSNN introduces hierarchy through Residual Quantization, using multiple Ad Cluster Towers to handle large numbers of items.
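Residual quantization can be sketched as follows: each level picks the nearest entry in its codebook, subtracts it, and passes the remainder to the next level. The codebooks here are fixed toy values chosen for illustration. In HSNN the per-level centroids would be learned, and the function names are hypothetical.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def nearest(vector, codebook):
    """Index of the codebook entry closest to the vector."""
    return min(range(len(codebook)),
               key=lambda j: squared_distance(vector, codebook[j]))

def residual_quantize(vector, codebooks):
    """Return one code per level; each level quantizes the previous residual."""
    codes = []
    residual = list(vector)
    for codebook in codebooks:
        idx = nearest(residual, codebook)
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes, residual

# Two levels with two entries each (assumed values).
codebooks = [
    [[0.0, 0.0], [10.0, 10.0]],   # coarse level
    [[-1.0, 0.0], [1.0, 0.0]],    # fine level, applied to the residual
]
codes, residual = residual_quantize([11.0, 10.0], codebooks)
# codes == [1, 1], residual == [0.0, 0.0]
```

The resulting code tuple acts as a hierarchical cluster ID. With L levels of K entries each, it can address up to K to the power L leaf clusters while storing only L times K centroids.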

Ablation Studies

Interaction Arch and Features

Ablation studies show the importance of interaction features and sophisticated interaction towers in improving model performance.

Co-Training

Co-training the clustering with the retrieval model significantly improves clustering performance, as shown by comparisons across various clustering techniques.

LTC Algorithm Ablation

The LTC algorithm’s components, such as curriculum learning and cluster collapse prevention, contribute to its effectiveness.

Experiments

Online Results

HSNN demonstrates substantial improvements in relevance and efficiency metrics over traditional EBR systems. The co-trained approach and use of interaction features contribute to these gains.

Deployment Lessons

Cluster Collapse

Techniques like FLOPs regularizer and random replacement ensure even cluster distribution, preventing cluster collapse.

Staleness of Cluster Centroids

Frequent updates to centroid embeddings prevent staleness, maintaining model accuracy over time.


Conclusion and Next Steps

HSNN introduces a learnable hierarchical clustering module that enhances the retrieval stage in recommendation systems. Future work will explore more complex interactions and personalization through user clustering.

Acknowledgements

The authors thank their colleagues for their contributions and support in developing and deploying HSNN.

This blog post provides a detailed interpretation of the paper “Hierarchical Structured Neural Network for Retrieval,” highlighting the motivation, design, methodology, and experimental results of the proposed HSNN system.