Authors:

Kang Du, Zhihao Liang, Zeyu Wang

Paper:

https://arxiv.org/abs/2408.08524

Introduction

Reconstructing physical attributes from multiple observations has long been a challenge in computer vision and computer graphics. Illumination is a highly diverse and complicated factor that significantly influences observations. Illumination Decomposition (ID) aims to enable controllable lighting editing and produce various visual effects. However, ID is an extremely ill-posed problem, because different combinations of lighting distributions and materials can produce identical observations. The problem is compounded by the complexity of illumination itself (e.g., self-emission, direct illumination, and indirect illumination). Without priors on geometry and materials, the task becomes exceedingly difficult. The need to accurately decompose materials and geometry, solve the complex rendering equation, and account for multiple light sources and multiple ray bounces makes it harder still.

Many recent works focus on appearance reconstruction. Neural Radiance Fields (NeRF) employ an MLP to represent a static scene as a 5D implicit light field, achieving photorealistic novel view synthesis. 3D Gaussian Splatting (3DGS) combines an explicit representation with efficient rasterization, enabling real-time rendering. However, both mainly reconstruct view-dependent appearance without decomposing it any further.

To acquire a controllable light field, a well-posed geometry is needed to support the subsequent decomposition. Recently, 2D Gaussian Splatting (2DGS) has emerged as a promising method for geometry reconstruction, using a surfel-based representation to ensure multi-view geometric consistency. However, it focuses primarily on geometry and does not model physical materials or light transport on the surface, which limits its effectiveness in more complex tasks such as relighting and light editing.

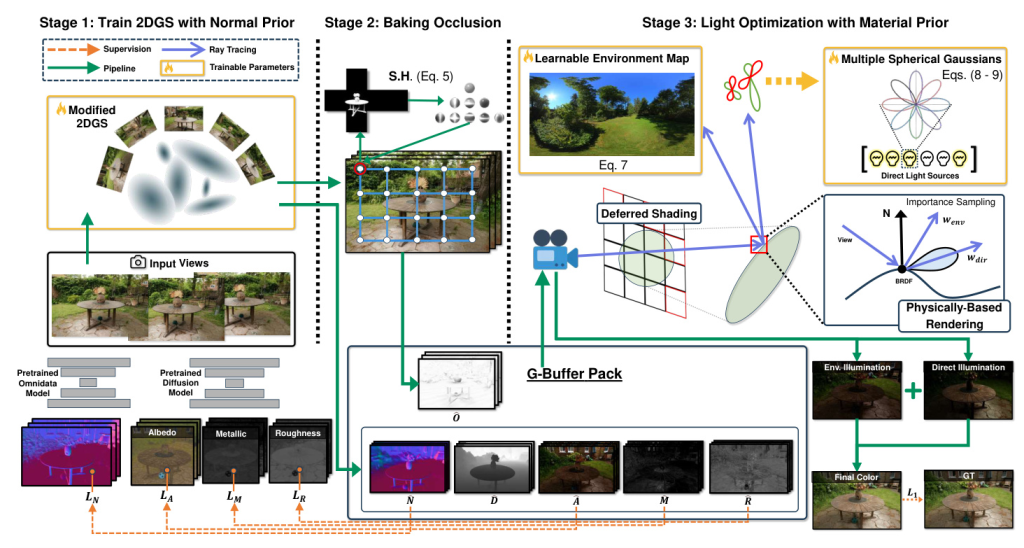

In this paper, we propose a novel framework called GS-ID, designed to decompose illumination by leveraging suitable geometry and materials recovered from multiple observations. Our approach uses 2DGS to reconstruct geometry and materials. However, we observe that 2DGS struggles to accurately represent reflective and distant regions. To address this limitation, we incorporate a normal prior to obtain a well-posed geometry for the subsequent decomposition. For illumination decomposition, we employ a learnable panoramic map that encapsulates the results of multiple ray bounces as environmental illumination. Additionally, we use a set of Spherical Gaussians (SGs) to parametrically model direct light sources, efficiently depicting direct illumination in highlight regions. Finally, we adopt deferred rendering, which decouples the number of light sources from the number of Gaussian points and thus facilitates efficient light editing and compositing.
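Why deferred rendering decouples lights from Gaussians can be illustrated with a minimal deferred-shading sketch. It assumes the Gaussians have already been rasterized into per-pixel albedo and normal buffers (a G-buffer), and it uses simple Lambertian directional lights as a hypothetical stand-in for GS-ID's actual light model; the function name `deferred_shade` is illustrative only.

```python
import numpy as np


def deferred_shade(albedo, normal, lights):
    """Shade a screen-space G-buffer with an arbitrary list of lights.

    albedo: (H, W, 3) per-pixel albedo produced by rasterizing the Gaussians
    normal: (H, W, 3) per-pixel unit normals from the same rasterization pass
    lights: iterable of (direction, rgb) pairs; each is a hypothetical
            directional light, with direction pointing from surface to light
    """
    radiance = np.zeros_like(albedo, dtype=float)
    for direction, rgb in lights:
        d = np.asarray(direction, dtype=float)
        d = d / np.linalg.norm(d)
        # Lambertian cosine term, clamped so back-facing pixels receive no light.
        cos = np.clip(normal @ d, 0.0, None)[..., None]
        radiance += albedo / np.pi * np.asarray(rgb, dtype=float) * cos
    return radiance
```

Rasterization runs once to fill the G-buffer; each extra light then costs one pass over the H×W pixels rather than a pass over every Gaussian, which is what keeps light editing cheap.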

Our GS-ID framework facilitates explicit and user-friendly light editing, enabling the composition of scenes with varying lighting effects. Experimental results demonstrate that our framework surpasses contemporary methods, offering superior control over varying illumination effects.

Related Work

Geometry Reconstruction

For surface reconstruction, some methods use an MLP to model an implicit field representing the target surface; after training, the surface is extracted with an isosurface extraction algorithm. Recently, several methods based on 3DGS have achieved fast, high-quality geometry reconstruction of complex scenes. However, these methods often treat appearance as a function of view direction, neglecting the modeling of physical materials and light transport on the surface. This limitation hinders their ability to handle complex tasks such as light editing.

Intrinsic Decomposition

To decompose intrinsics from observations, some monocular methods learn from labeled datasets and estimate intrinsics directly from single images. However, these methods lack multi-view consistency and struggle with out-of-distribution cases. In contrast, other methods construct a 3D-consistent intrinsic field from multiple observations. Notably, GaussianShader and GS-IR build upon 3DGS and enable fast intrinsic decomposition of glossy areas and complex scenes. While these methods obtain impressive results, almost all of them consider only environmental illumination, which makes precise light editing challenging.

Preliminaries

Gaussian Splatting

3D Gaussian Splatting (3DGS) represents a 3D scene as a collection of primitives. Each primitive models a 3D Gaussian with a mean vector, a covariance matrix, an opacity, and a view-dependent color encoded by Spherical Harmonic (SH) coefficients. Given a viewing transformation (extrinsic and intrinsic matrices), 3DGS efficiently projects each 3D Gaussian to a 2D Gaussian in screen space and performs α-blending volume rendering to obtain the color of each pixel.
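The per-pixel α-blending step, $C = \sum_i c_i \alpha_i \prod_{j<i} (1 - \alpha_j)$, can be written out directly. The following is a minimal NumPy reference for a single pixel, assuming the Gaussians are already projected and sorted front to back; the official 3DGS rasterizer performs the same computation in CUDA per screen tile with extra details such as early termination, and the helper names here are illustrative.

```python
import numpy as np


def splat_alpha(opacity, mean2d, cov2d, pixel):
    """Alpha of one projected 2D Gaussian at a pixel: o * exp(-0.5 * d^T S^-1 d)."""
    d = np.asarray(pixel, dtype=float) - np.asarray(mean2d, dtype=float)
    power = -0.5 * d @ np.linalg.inv(np.asarray(cov2d, dtype=float)) @ d
    return opacity * np.exp(power)


def alpha_blend(colors, alphas):
    """Front-to-back compositing of the Gaussians covering one pixel.

    colors: (N, 3) colors of the overlapping Gaussians, sorted near to far
    alphas: (N,) per-Gaussian alpha at this pixel (e.g. from splat_alpha)
    Returns the pixel color and the remaining transmittance.
    """
    pixel = np.zeros(3)
    transmittance = 1.0
    for c, a in zip(colors, alphas):
        pixel += transmittance * a * np.asarray(c, dtype=float)
        transmittance *= 1.0 - a
    return pixel, transmittance
```

With two half-transparent splats, red in front of green, the pixel becomes `[0.5, 0.25, 0.0]` and a quarter of the light still passes through.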

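Physically Based Rendering

We use Physically Based Rendering (PBR) to model view-dependent appearance, enabling effective illumination decomposition. Following the rendering equation, the outgoing radiance $L_o(\mathbf{x}, \omega_o)$ of a surface point $\mathbf{x}$ in direction $\omega_o$ is

$$L_o(\mathbf{x}, \omega_o) = \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, d\omega_i,$$

where $L_i(\mathbf{x}, \omega_i)$ is the incident radiance from direction $\omega_i$, $\mathbf{n}$ is the surface normal, $\Omega$ is the hemisphere around $\mathbf{n}$, and $f_r$ is the Bidirectional Reflectance Distribution Function (BRDF). In particular, we use the Cook-Torrance model to formulate the BRDF.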
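For reference, one common Cook-Torrance variant (GGX normal distribution, Schlick Fresnel, and a Schlick-GGX geometry term with k = (r+1)²/8, as popularized by Unreal Engine 4) can be evaluated as below. The exact terms GS-ID uses are not given in this summary, so treat this as an assumption; the Lambertian-plus-specular split with a dielectric F0 of 0.04 follows common PBR practice, and `cook_torrance` is an illustrative name.

```python
import numpy as np


def _unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


def cook_torrance(n, v, l, albedo, roughness, metallic):
    """Evaluate f_r(l, v): Lambertian diffuse + GGX/Schlick/Smith specular.

    n, v, l: surface normal, view direction, light direction (all pointing away from the surface)
    albedo: RGB base color; roughness, metallic: scalars in [0, 1]
    """
    n, v, l = _unit(n), _unit(v), _unit(l)
    n_l, n_v = np.dot(n, l), np.dot(n, v)
    if n_l <= 0.0 or n_v <= 0.0:
        return np.zeros(3)  # light or viewer below the surface
    h = _unit(v + l)  # half vector
    n_h, v_h = max(np.dot(n, h), 0.0), max(np.dot(v, h), 0.0)
    albedo = np.asarray(albedo, dtype=float)
    roughness = max(roughness, 1e-3)  # avoid 0/0 for a perfectly smooth surface

    a2 = (roughness ** 2) ** 2  # GGX uses alpha = roughness^2
    d = a2 / (np.pi * (n_h ** 2 * (a2 - 1.0) + 1.0) ** 2)
    f0 = 0.04 * (1.0 - metallic) + albedo * metallic  # dielectric F0 = 0.04
    f = f0 + (1.0 - f0) * (1.0 - v_h) ** 5
    k = (roughness + 1.0) ** 2 / 8.0
    g = (n_l / (n_l * (1.0 - k) + k)) * (n_v / (n_v * (1.0 - k) + k))

    specular = d * f * g / (4.0 * n_l * n_v)
    diffuse = (1.0 - metallic) * albedo / np.pi
    return diffuse + specular
```

At normal incidence with roughness 1 and a non-metallic gray albedo of 0.5, the specular lobe adds only 0.01/π on top of the diffuse 0.5/π, while a fully metallic surface has no diffuse term and tints its specular reflection with the albedo.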

Methodology

We introduce a novel three-stage framework for illumination decomposition called GS-ID. Unlike previous methods focusing solely on environmental illumination, GS-ID decomposes the light field using environmental illumination (represented by a learnable panoramic map) and parametric direct light sources (modeled by Spherical Gaussians).

Stage 1: Reconstruction Using Normal Prior

In this stage, we adopt 2DGS to reconstruct geometry. However, we observe that 2DGS mistakenly interprets glossy regions as holes and represents distant areas poorly. To address these issues, we incorporate priors from a monocular geometry estimator to improve the reconstructed geometric structure. Specifically, we use a pre-trained Omnidata model to provide normal supervision. Additionally, we employ Intrinsic Image Diffusion to generate material estimates that supervise the subsequent decomposition stage.
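The exact normal-supervision loss is not specified in this summary. A common choice in prior-guided reconstruction combines an L1 term and an angular (1 − cosine) term between rendered normals and the monocular prediction; the sketch below assumes that form, with both normal maps expressed in the same (camera) coordinate frame, and `normal_prior_loss` is a hypothetical name.

```python
import numpy as np


def normal_prior_loss(rendered, prior, mask=None):
    """L1 + (1 - cos) loss between rendered normals and a monocular normal prior.

    rendered, prior: (H, W, 3) normal maps in the same coordinate frame
    mask: optional (H, W) boolean array selecting the pixels to supervise
    """
    eps = 1e-8
    r = rendered / np.maximum(np.linalg.norm(rendered, axis=-1, keepdims=True), eps)
    p = prior / np.maximum(np.linalg.norm(prior, axis=-1, keepdims=True), eps)
    l1 = np.abs(r - p).sum(axis=-1)        # per-pixel L1 distance
    angular = 1.0 - (r * p).sum(axis=-1)   # per-pixel 1 - cos(angle)
    per_pixel = l1 + angular
    if mask is not None:
        per_pixel = per_pixel[mask]
    return float(per_pixel.mean())
```

The mask lets supervision be restricted to pixels where the monocular prior is trusted, for example excluding sky.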

Stage 2: Baking Ambient Occlusion

To model environmental light transport more accurately, we bake probes that precompute and store occlusion. By estimating how exposed each point near an occluding surface is, this improves albedo reconstruction and illumination decomposition. The probes store scene occlusion as spherical harmonic (SH) coefficients. After Stage 1, we fix the geometry and place occlusion probes on a regular grid within the bounded 3D space. Each probe caches a depth cube map of its current surroundings. Depths below a certain threshold are labeled as occluded, producing an occlusion cube map, which is finally cached in the form of SH coefficients.
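The final baking step, projecting a binary occlusion signal onto spherical harmonics, can be sketched as follows. As simplifying assumptions, the sketch uses real SH up to degree 2 (9 coefficients), Monte Carlo samples over uniformly distributed directions instead of cube-map texels, and a user-supplied `depth_fn` standing in for the probe's depth cube map; the SH degree and threshold GS-ID actually uses are not stated here, and all function names are hypothetical.

```python
import numpy as np


def sh_basis_deg2(dirs):
    """Real spherical harmonics Y_0..Y_8 (degrees 0-2) for unit directions of shape (N, 3)."""
    x, y, z = dirs[:, 0], dirs[:, 1], dirs[:, 2]
    return np.stack([
        0.282095 * np.ones_like(x),
        0.488603 * y,
        0.488603 * z,
        0.488603 * x,
        1.092548 * x * y,
        1.092548 * y * z,
        0.315392 * (3.0 * z ** 2 - 1.0),
        1.092548 * x * z,
        0.546274 * (x ** 2 - y ** 2),
    ], axis=-1)


def bake_occlusion_sh(depth_fn, threshold, n_samples=20000, seed=0):
    """Project a probe's binary occlusion (depth < threshold) onto 9 SH coefficients."""
    rng = np.random.default_rng(seed)
    dirs = rng.normal(size=(n_samples, 3))
    dirs /= np.linalg.norm(dirs, axis=1, keepdims=True)  # uniform on the sphere
    occluded = (depth_fn(dirs) < threshold).astype(float)
    # Monte Carlo estimate of the integral of occlusion(w) * Y_k(w) over the sphere.
    return 4.0 * np.pi / n_samples * sh_basis_deg2(dirs).T @ occluded


def eval_occlusion(coeffs, dirs):
    """Reconstruct the (low-pass filtered) occlusion in the given directions."""
    return sh_basis_deg2(dirs) @ coeffs
```

Storing 9 coefficients per probe instead of a full cube map keeps memory small, at the cost of smoothing out sharp occlusion boundaries.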

Stage 3: Light Optimization with Material Prior

Directly solving the rendering equation is challenging because of the nearly infinite number of ray bounces in the real world. In Stage 3, we therefore divide light into environmental and direct illumination. Direct illumination refers to light that hits the surface directly, with a single bounce, whereas environmental illumination comprises light that has been reflected by other surfaces or media. Accordingly, we split the incident radiance in the rendering equation into these two components.
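Written with the symbols of the rendering equation ($\mathbf{x}$ a surface point, $\omega_o$ and $\omega_i$ the outgoing and incident directions, $\mathbf{n}$ the normal, $f_r$ the BRDF), the split amounts to the following. The labels $L_{\text{env}}$ and $L_{\text{dir}}$ are our notation; because the rendering equation is linear in the incident radiance, the outgoing radiance separates into two terms that can be computed independently.

```latex
L_i(\mathbf{x}, \omega_i) = L_{\text{env}}(\mathbf{x}, \omega_i) + L_{\text{dir}}(\mathbf{x}, \omega_i)

L_o(\mathbf{x}, \omega_o)
  = \underbrace{\int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_{\text{env}}(\mathbf{x}, \omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i}_{\text{environmental illumination}}
  + \underbrace{\int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_{\text{dir}}(\mathbf{x}, \omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i}_{\text{direct illumination}}
```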

Environment Illumination

According to the rendering equation, environment illumination can be decomposed into diffuse and specular components. We adopt the image-based lighting (IBL) model and the split-sum approximation to handle the intractable integral.
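As a hedged illustration, the standard split-sum formulation (introduced by Karis for Unreal Engine 4) is shown below. It assumes the Cook-Torrance BRDF splits into a Lambertian diffuse lobe with albedo $\mathbf{a}$ and metallic $m$ and a specular lobe $f_s$ with GGX distribution $D$ and roughness $\rho$; whether GS-ID additionally weights these terms by the baked occlusion is not stated in this summary.

```latex
L_{\text{diffuse}}(\mathbf{x}) = (1 - m)\,\frac{\mathbf{a}}{\pi} \int_{\Omega} L_{\text{env}}(\omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i

L_{\text{specular}}(\mathbf{x}, \omega_o)
  \approx \underbrace{\frac{\int_{\Omega} L_{\text{env}}(\omega_i)\, D(\omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i}
                           {\int_{\Omega} D(\omega_i)\,(\omega_i \cdot \mathbf{n})\, d\omega_i}}_{\text{pre-filtered environment map, indexed by } \rho}
  \cdot
  \underbrace{\int_{\Omega} f_s(\omega_i, \omega_o)\,(\omega_i \cdot \mathbf{n})\, d\omega_i}_{= F_0 \cdot A(\omega_o \cdot \mathbf{n},\, \rho) + B(\omega_o \cdot \mathbf{n},\, \rho)}
```

The irradiance integral and the pre-filtered map depend only on the panoramic environment map, while $A$ and $B$ come from a 2D lookup table over $\omega_o \cdot \mathbf{n}$ and $\rho$, so the integral reduces to a few texture lookups per pixel.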

Direct Illumination

Although environmental light encompasses illumination from all directions, it lacks explicit light source positions, which complicates lighting edits and weakens the representation of highlights. To address this issue, we adopt the approach proposed by Wang et al., using a mixture of scattered Spherical Gaussians (SG mixture) with 3-channel weights representing RGB colors to simulate direct illumination. Each SG mixture represents one direct light source. This improves the expressiveness of highlights and the flexibility of lighting edits.
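A Spherical Gaussian lobe is commonly written $G(\omega; \boldsymbol{\xi}, \lambda, \boldsymbol{\mu}) = \boldsymbol{\mu}\, e^{\lambda(\omega \cdot \boldsymbol{\xi} - 1)}$, with lobe axis $\boldsymbol{\xi}$, sharpness $\lambda$, and RGB amplitude $\boldsymbol{\mu}$. The sketch below evaluates an SG mixture as a direct light and gives the closed-form integral of one lobe over the sphere; the parameter layout is an assumption for illustration, not GS-ID's code.

```python
import numpy as np


def sg_mixture_radiance(omega, axes, sharpness, amplitudes):
    """Radiance of an SG mixture in direction omega.

    omega: (3,) unit query direction
    axes: (K, 3) unit lobe axes xi_k
    sharpness: (K,) lobe sharpness lambda_k > 0
    amplitudes: (K, 3) RGB amplitudes mu_k (the 3-channel weights)
    """
    cos = np.asarray(axes, dtype=float) @ np.asarray(omega, dtype=float)  # (K,)
    weights = np.exp(np.asarray(sharpness, dtype=float) * (cos - 1.0))    # (K,)
    return weights @ np.asarray(amplitudes, dtype=float)                  # (3,)


def sg_integral(sharpness, amplitude):
    """Integral of one SG lobe over the sphere: 2*pi*mu/lambda * (1 - exp(-2*lambda))."""
    amplitude = np.asarray(amplitude, dtype=float)
    return 2.0 * np.pi * amplitude / sharpness * (1.0 - np.exp(-2.0 * sharpness))
```

The closed-form integral gives a light's total emitted power directly, which is convenient when rescaling an edited light's intensity.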

Experiments

Dataset and Metrics

We conduct experiments on real-world datasets such as Mip-NeRF 360 to evaluate our illumination decomposition approach, using PSNR, SSIM, and LPIPS as evaluation metrics. Additionally, we use the TensoIR synthetic dataset for comparative analysis of illumination decomposition. This dataset contains scenes rendered in Blender under various lighting conditions, along with ground-truth normals and albedo.
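PSNR is simple enough to compute directly; a minimal version for images scaled to [0, 1] is shown below. SSIM and LPIPS are usually taken from existing implementations (for example `skimage.metrics.structural_similarity` in scikit-image and the `lpips` Python package); this summary does not state which implementations the authors used.

```python
import numpy as np


def psnr(pred, gt, max_val=1.0):
    """Peak signal-to-noise ratio in dB between two images with values in [0, max_val]."""
    mse = np.mean((np.asarray(pred, dtype=float) - np.asarray(gt, dtype=float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

A uniform error of 0.1 on a [0, 1] image gives an MSE of 0.01 and therefore a PSNR of 20 dB.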

Comparisons

Our work aims to improve normal accuracy in Stage 1 and to emphasize illumination decomposition in Stage 3. To evaluate both, we conduct comparisons on public datasets. First, we use the Mip-NeRF 360 dataset to compare novel view synthesis and normal estimation after Stage 1 against 2DGS and other contemporary methods. Then, we use the Mip-NeRF 360 and TensoIR Synthetic datasets to compare novel view synthesis and illumination decomposition results after Stage 3.

Ablation Studies

We introduce 2DGS to illumination decomposition (ID) and propose supervising training with priors from a diffusion model. Evaluated on the TensoIR Synthetic and Mip-NeRF 360 datasets, our method proves effective for ID tasks. The ablation study examines in detail the geometry and material priors, direct illumination, environment illumination, and deferred rendering.

Applications

We demonstrate light editing applications using the geometry, materials, and illumination recovered by GS-ID. The results show that GS-ID handles precise light editing effectively and generates photorealistic renderings. Furthermore, our parametric light sources allow illumination to be extracted from one decomposed scene and integrated into another scene without additional training.
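Because the direct lights are explicit parameters rather than being baked into the Gaussians, edits reduce to changing those parameters. A minimal sketch, assuming each light is stored as SG lobes in a hypothetical `SGLight` container, of recoloring a light, rotating it, and transplanting it into another scene's light list:

```python
from dataclasses import dataclass, replace

import numpy as np


@dataclass(frozen=True)
class SGLight:
    """Hypothetical container for one direct light made of K SG lobes."""
    axes: np.ndarray        # (K, 3) unit lobe axes
    sharpness: np.ndarray   # (K,) lobe sharpness
    amplitudes: np.ndarray  # (K, 3) RGB weights


def tint(light, rgb_scale):
    """Change the light's color/intensity by scaling its RGB amplitudes."""
    return replace(light, amplitudes=light.amplitudes * np.asarray(rgb_scale, dtype=float))


def rotate(light, rotation):
    """Rotate every lobe axis by a 3x3 rotation matrix, moving the light's direction."""
    return replace(light, axes=light.axes @ np.asarray(rotation, dtype=float).T)


def transplant(light, target_scene_lights):
    """Add a light extracted from one scene to another scene's light list."""
    return list(target_scene_lights) + [light]
```

Each edit returns a new light and leaves the original untouched, so several variants of a scene's lighting can be kept side by side and shaded with the same G-buffer.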

Conclusion

We introduce GS-ID, a novel three-stage framework for illumination decomposition that enables photorealistic novel view synthesis, intuitive light editing, and scene composition. GS-ID integrates 2D Gaussian Splatting with intrinsic diffusion priors to regularize normal and material estimation. We decompose the light field into environmental and direct illumination, employing parametric modeling and optimization schemes for enhanced illumination decomposition. Additionally, GS-ID utilizes deferred rendering in screen space to achieve high rendering performance.

Our work has a few limitations. For example, GS-ID relies on priors from intrinsic diffusion methods, which fail to generalize to out-of-distribution cases and then lead to degraded decompositions. Future work includes improving the applicability of GS-ID. We plan to explore further applications, such as simulating a wider range of parametric light sources (e.g., area lights) to increase the diversity of lighting effects. We will also consider the visibility between direct lights and surface points to add shadow effects to novel view synthesis, producing more realistic and harmonious results, especially for scene composition.

Code:

https://github.com/dukang/gs-id