Authors:

Udo Schlegel, Daniel A. Keim, Tobias Sutter

Paper:

https://arxiv.org/abs/2408.10628

Introduction

Understanding how models process and interpret time series data remains a significant challenge in deep learning, especially in safety-critical areas such as healthcare. In this paper, the authors introduce Sequence Dreaming, a technique that adapts Activation Maximization to sequential data in order to make neural networks operating on univariate time series more interpretable. With this method, they visualize the temporal dynamics and patterns that most influence a model's decisions. The approach is evaluated on a time series classification dataset from the predictive maintenance domain.

Related Work

In explainable artificial intelligence (XAI), explaining how machine learning algorithms operate, particularly on time series data, is essential for advancing both the theory and the practice of the field. Activation Maximization and DeepDream are two techniques that make the inner workings of deep neural networks (DNNs) more transparent.

- Activation Maximization focuses on identifying the input patterns that maximize the response of specific neurons or layers, revealing the features and patterns a model perceives as most salient.

- DeepDream starts from an existing input and modifies it by gradient ascent so that the activations of a chosen layer are amplified, producing intricate, dream-like images that make the learned features visible.

These methods have been instrumental in improving model interpretability and in diagnosing model behavior. However, applying Activation Maximization to time series data is challenging: without constraints, the optimization tends to produce noisy, unrealistic sequences instead of plausible temporal patterns.

Research Methodology

Activation Maximization

Activation Maximization reveals the features most salient to individual neurons of a trained neural network. Starting from an initial input, it iteratively refines that input, typically by gradient ascent, so that the activation of a chosen neuron becomes as large as possible. This study adapts the technique to time series data.
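The iterative refinement can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it treats the neuron as a black-box `activation` function (the toy `neuron` below is hypothetical), estimates gradients with central finite differences instead of automatic differentiation, and adds an L2 penalty weighted by a hypothetical `l2` parameter so the optimum stays bounded. In other words, it runs gradient ascent on J(x) = a(x) - (l2/2)·||x||², where a(x) is the neuron's activation.

```python
from typing import Callable, List

Series = List[float]


def numerical_gradient(f: Callable[[Series], float], x: Series, eps: float = 1e-4) -> Series:
    """Central finite-difference gradient of f at x (a stand-in for autograd)."""
    grad = []
    for t in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[t] += eps
        x_minus[t] -= eps
        grad.append((f(x_plus) - f(x_minus)) / (2 * eps))
    return grad


def activation_maximization(
    activation: Callable[[Series], float],
    length: int,
    steps: int = 200,
    lr: float = 0.1,
    l2: float = 0.5,
) -> Series:
    """Gradient ascent on the input to maximize activation(x) - (l2 / 2) * ||x||^2."""

    def objective(s: Series) -> float:
        return activation(s) - 0.5 * l2 * sum(v * v for v in s)

    x = [0.0] * length  # start from a flat (all-zero) sequence
    for _ in range(steps):
        g = numerical_gradient(objective, x)
        x = [xi + lr * gi for xi, gi in zip(x, g)]  # ascent step along the gradient
    return x


WEIGHTS = [0.0, 1.0, 0.0, -1.0]


def neuron(s: Series) -> float:
    """Hypothetical toy neuron: a fixed linear filter over a 4-step sequence."""
    return sum(w * v for w, v in zip(WEIGHTS, s))


x_star = activation_maximization(neuron, length=4)
print([round(v, 3) for v in x_star])  # [0.0, 2.0, 0.0, -2.0], i.e. WEIGHTS / l2
```

For a linear neuron the maximizer is simply the filter scaled by 1/l2, which makes the sketch easy to check; with a real network, the same loop would call the model's forward pass and use its autograd gradients instead.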

Regularization Techniques

To counteract the generation of unrealistic or excessively noisy sequences, the authors enhance Sequence Dreaming with a range of regularization techniques, including:

- Exponential Smoothing

- Gaussian Blur

- Clamping to Borders

- L2 Decay

- Random Scaling

- Moving Average Smoothing

- Random Reinitiation

These modifications are designed to mitigate overfitting to noise and emphasize the underlying patterns critical for model decisions.
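Several of the listed regularizers can be read as simple transformations applied to the sequence between optimization steps. The sketch below shows plausible plain-Python versions of six of them; all parameter values (`alpha`, `window`, `sigma`, `decay`, the scaling range) and the clamping bounds (assumed here to be the minimum and maximum of the training data) are illustrative assumptions, not values from the paper. Random Reinitiation is left out because the summary does not say how or when it is triggered.

```python
import math
import random
from typing import List

Series = List[float]


def exponential_smoothing(x: Series, alpha: float = 0.3) -> Series:
    """s_t = alpha * x_t + (1 - alpha) * s_{t-1}, seeded with the first value."""
    out = [x[0]]
    for v in x[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def moving_average(x: Series, window: int = 5) -> Series:
    """Centered moving average; the window shrinks at the borders."""
    half = window // 2
    out = []
    for t in range(len(x)):
        segment = x[max(0, t - half): t + half + 1]
        out.append(sum(segment) / len(segment))
    return out


def gaussian_blur(x: Series, sigma: float = 1.0) -> Series:
    """Convolve with a truncated Gaussian kernel, renormalized at the borders."""
    radius = max(1, int(3 * sigma))
    offsets = range(-radius, radius + 1)
    kernel = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    out = []
    for t in range(len(x)):
        acc = norm = 0.0
        for k, w in zip(offsets, kernel):
            if 0 <= t + k < len(x):
                acc += w * x[t + k]
                norm += w
        out.append(acc / norm)
    return out


def clamp_to_borders(x: Series, low: float, high: float) -> Series:
    """Keep every value inside [low, high], e.g. the training-data range (assumption)."""
    return [min(max(v, low), high) for v in x]


def l2_decay(x: Series, decay: float = 0.01) -> Series:
    """Shrink all values toward zero, the per-step form of an L2 penalty."""
    return [(1 - decay) * v for v in x]


def random_scaling(x: Series, rng: random.Random, low: float = 0.9, high: float = 1.1) -> Series:
    """Multiply the whole sequence by one random factor drawn from [low, high]."""
    factor = rng.uniform(low, high)
    return [factor * v for v in x]


# Hypothetical usage: regularize a noisy candidate sequence after an optimization step.
rng = random.Random(0)
noisy = [math.sin(t / 5) + rng.gauss(0, 0.3) for t in range(50)]
smoothed = gaussian_blur(moving_average(exponential_smoothing(noisy)))
regularized = clamp_to_borders(random_scaling(l2_decay(smoothed), rng), -1.0, 1.0)
```

The smoothing operators suppress high-frequency noise, while clamping and decay keep values in a realistic range; the order in which they are chained here is one arbitrary choice.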

Experimental Design

Dataset and Model

The evaluation is conducted on the FordA dataset, a well-established univariate time series classification benchmark for anomaly detection in car engines. This dataset consists of time series samples with 500 time points each, comprising 3,601 samples for training and 1,320 for testing.

The model employed is a conventional ResNet architecture featuring three ResNet blocks, each containing three Conv1D layers, followed by a final linear classifier layer. The model is trained using the Adam optimizer over 500 epochs, achieving an accuracy of 0.99 on the training set and 0.95 on the test set.
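One way to reason about which temporal patterns such a network can respond to is its receptive field. The summary does not state kernel sizes, so this sketch assumes the 8, 5, 3 kernel sizes of the widely used ResNet baseline for time series classification (Wang et al., 2017), with stride 1 and "same" padding. Under those assumptions, each output of the last convolution depends on a window of 40 consecutive time points out of 500, so longer-range structure can only enter through a pooling step before the linear classifier (global average pooling in that baseline; assumed here).

```python
from typing import List, Optional


def receptive_field(kernel_sizes: List[int], strides: Optional[List[int]] = None) -> int:
    """Number of input time steps that influence one output of a stack of Conv1D layers."""
    strides = strides or [1] * len(kernel_sizes)
    rf, jump = 1, 1  # jump = spacing (in input steps) between adjacent outputs
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump
        jump *= s
    return rf


# Assumed layout: 3 residual blocks x 3 Conv1D layers with kernel sizes 8, 5, 3 (stride 1).
# 1x1 shortcut convolutions do not widen the receptive field, so only the main path counts.
kernels = [8, 5, 3] * 3
print(receptive_field(kernels))  # 40
```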

Hyperparameter Search

A grid search is used to tune the Sequence Dreaming hyperparameters; the paper lists the minimum and maximum value searched for each hyperparameter in a table.
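The mechanics of such a grid search can be sketched as follows: every combination of candidate values between a minimum and a maximum is evaluated, and the best-scoring one is kept. The hyperparameter names, ranges, and the `toy_score` function are hypothetical placeholders, not the paper's values; in a real run the score would come from executing Sequence Dreaming with each configuration.

```python
import itertools
from typing import Callable, Dict, List, Optional, Tuple

Params = Dict[str, float]


def linspace(low: float, high: float, num: int) -> List[float]:
    """num evenly spaced values from low to high, inclusive (num >= 2)."""
    return [low + (high - low) * i / (num - 1) for i in range(num)]


def grid_search(
    grid: Dict[str, List[float]], score: Callable[[Params], float]
) -> Tuple[Optional[Params], float]:
    """Evaluate score on every combination in the grid and return the best one."""
    names = sorted(grid)
    best_params: Optional[Params] = None
    best_score = float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        current = score(params)
        if current > best_score:
            best_params, best_score = params, current
    return best_params, best_score


# Hypothetical search space: a few values between a minimum and a maximum per hyperparameter.
grid = {
    "learning_rate": linspace(0.01, 0.1, 4),
    "l2_decay": linspace(0.0, 0.1, 3),
}


def toy_score(p: Params) -> float:
    # Placeholder objective; a real run would execute Sequence Dreaming with p and score it.
    return -((p["learning_rate"] - 0.04) ** 2) - (p["l2_decay"] - 0.05) ** 2


best, value = grid_search(grid, toy_score)
print(best)
```

The cost grows multiplicatively with every added hyperparameter (here 4 × 3 = 12 runs), which is why the ranges are typically kept coarse.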

Results and Analysis

Visual Evaluation

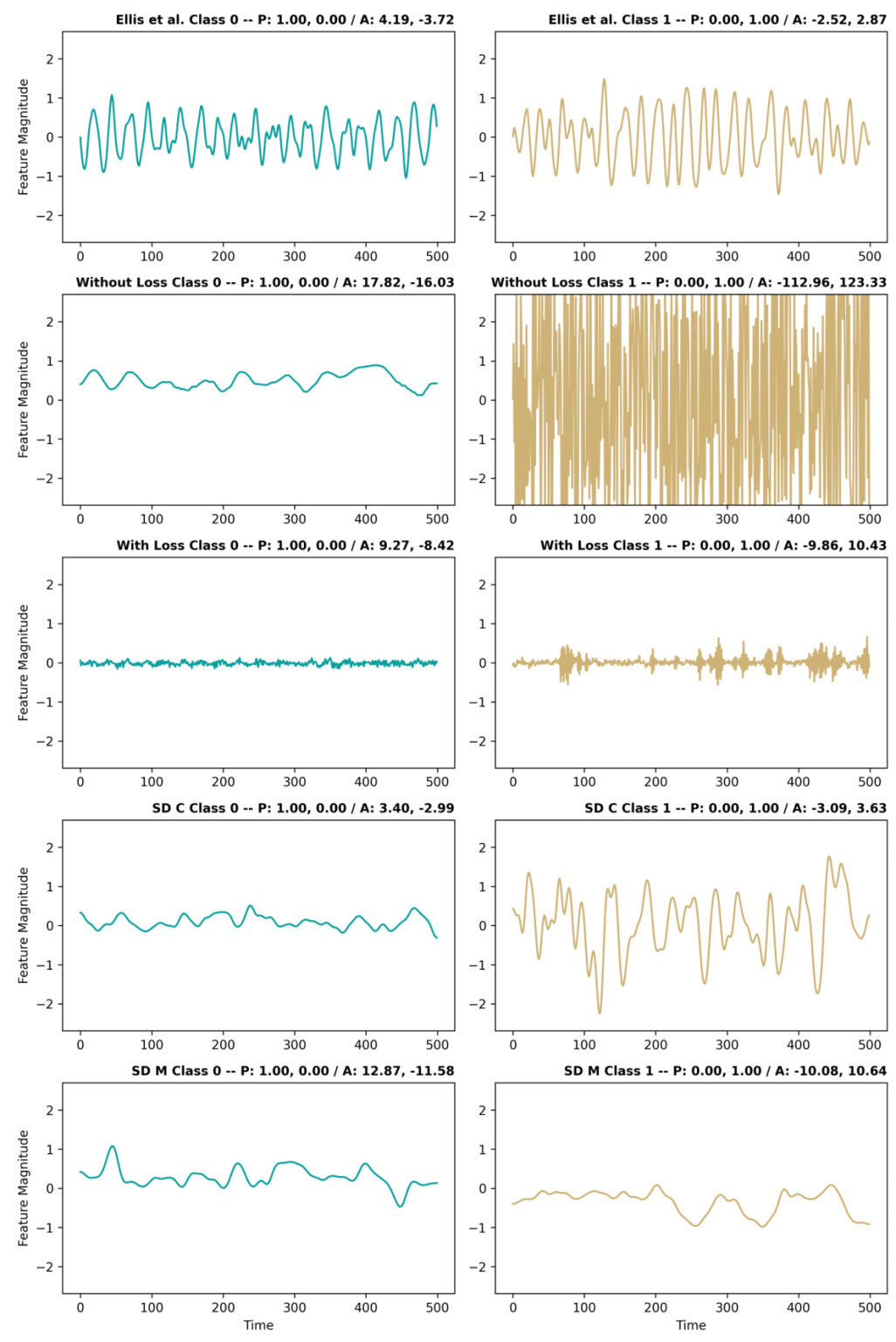

The visual evaluation shows the generated time series as line plots for direct comparison. The figure compares the method of Ellis et al., the approach without the loss change, the approach with the loss change, Sequence Dreaming on the class center, and the maximization using Sequence Dreaming.

Out-of-Distribution Evaluation

The authors use outlier analysis based on the Mahalanobis distance, computed on both the time series and the model's activations, to assess how plausible the generated activation-maximization inputs are with respect to the data and to the model.
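The Mahalanobis distance measures how far a point lies from the mean of a reference set, scaled by that set's covariance, so a large value flags a likely outlier. Below is a minimal pure-Python sketch, assuming the reference set is a collection of feature vectors (raw time series or one layer's activations) from the training data. The small `ridge` term added to the covariance diagonal is an assumption to keep the inversion stable, and no outlier threshold is implied. For 500-step series the covariance is 500-by-500, so a practical version would rely on a linear-algebra library and enough samples to estimate it reliably.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def invert(matrix: Matrix) -> Matrix:
    """Invert a square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_reference(samples: List[Vector], ridge: float = 1e-6) -> Tuple[Vector, Matrix]:
    """Mean and inverse sample covariance of the reference set."""
    n, d = len(samples), len(samples[0])
    mean = [sum(s[j] for s in samples) / n for j in range(d)]
    cov = [
        [
            sum((s[i] - mean[i]) * (s[j] - mean[j]) for s in samples) / (n - 1)
            + (ridge if i == j else 0.0)
            for j in range(d)
        ]
        for i in range(d)
    ]
    return mean, invert(cov)


def mahalanobis(x: Vector, mean: Vector, inv_cov: Matrix) -> float:
    """sqrt((x - mean)^T * inv_cov * (x - mean))."""
    diff = [a - b for a, b in zip(x, mean)]
    d = len(diff)
    return math.sqrt(sum(diff[i] * inv_cov[i][j] * diff[j] for i in range(d) for j in range(d)))


# Hypothetical 2-D reference set and two candidate inputs.
reference = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
mean, inv_cov = fit_reference(reference)
print(round(mahalanobis([1.0, 0.0], mean, inv_cov), 4))  # 1.2247
print(round(mahalanobis([3.0, 3.0], mean, inv_cov), 4))
```

Applying the same score once to raw sequences and once to activations separates two questions: whether a generated input looks like the data, and whether the model treats it like the data.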

Visual Out-of-Distribution Evaluation

The results are further illustrated with violin plots that show the activations and the results for the generated time series, as well as with PCA projections of the activations.
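A PCA projection reduces high-dimensional activation vectors to a few coordinates for plotting. As a rough, dependency-free sketch (not the authors' code), the leading principal components can be found by power iteration on the covariance matrix with deflation; a practical implementation would usually call an eigendecomposition or SVD routine instead. The 2-D example data are hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def mean_vector(samples: List[Vector]) -> Vector:
    n = len(samples)
    return [sum(col) / n for col in zip(*samples)]


def covariance(samples: List[Vector]) -> Matrix:
    """Sample covariance matrix of the rows in samples."""
    mean = mean_vector(samples)
    centered = [[v - m for v, m in zip(s, mean)] for s in samples]
    d, n = len(mean), len(samples)
    return [[sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)] for i in range(d)]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def top_components(cov: Matrix, k: int, iters: int = 500, seed: int = 0) -> List[Vector]:
    """Leading eigenvectors of a symmetric PSD matrix via power iteration with deflation."""
    rng = random.Random(seed)
    a = [row[:] for row in cov]
    components = []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(len(a))]
        norm = sum(x * x for x in v) ** 0.5
        v = [x / norm for x in v]
        for _ in range(iters):
            w = matvec(a, v)
            norm = sum(x * x for x in w) ** 0.5
            if norm < 1e-12:  # no variance left in this subspace
                break
            v = [x / norm for x in w]
        eigval = sum(x * y for x, y in zip(v, matvec(a, v)))  # Rayleigh quotient
        components.append(v)
        # Deflation: remove the found direction so the next pass finds the next component.
        a = [[a[i][j] - eigval * v[i] * v[j] for j in range(len(v))] for i in range(len(v))]
    return components


def project(samples: List[Vector], components: List[Vector]) -> List[Vector]:
    """Coordinates of each mean-centered sample along the given components."""
    mean = mean_vector(samples)
    return [
        [sum((x - m) * c for x, m, c in zip(s, mean, comp)) for comp in components]
        for s in samples
    ]


# Hypothetical 2-D "activations" lying close to the line y = 2x.
activations = [[float(t), 2.0 * t + (0.1 if t % 2 else -0.1)] for t in range(-5, 6)]
components = top_components(covariance(activations), k=2)
coords = project(activations, components)  # one (PC1, PC2) pair per sample, ready to plot
```

Fitting the components on training activations and then projecting the activations of generated sequences with the same components would place both in one plot, which is how such projections are typically used to spot out-of-distribution inputs.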

Overall Conclusion

The study introduces Sequence Dreaming, an adaptation of Activation Maximization, in the spirit of DeepDream, tailored explicitly to time series data. With this method, the authors aim to make deep learning models that process time series more interpretable by revealing the temporal dynamics and patterns these models capture. The results show that adding regularization both to the data transformations and to the loss function is effective, producing convincing results comparable to those of frequency-domain approaches.

Future Work

The authors identify several promising directions for refining the Sequence Dreaming process for time series data, including:

- Exploring advanced regularization techniques.

- Adopting genetic or evolutionary algorithms.

- Incorporating wavelet transforms for a more comprehensive analysis of time series.

- Incorporating attributions more heavily into the process to obtain more plausible activation-maximization time series.

The study advances the field of model interpretability for time series analysis and offers a framework for improving the transparency and trustworthiness of models deployed in critical decision-making domains.

This blog post provides a detailed interpretive summary of the paper “Finding the DeepDream for Time Series: Activation Maximization for Univariate Time Series,” highlighting the methodology, experimental design, results, and future directions for research.