Authors:

Xiao Wang、Shiao Wang、Pengpeng Shao、Bo Jiang、Lin Zhu、Yonghong Tian

Paper:

https://arxiv.org/abs/2408.09764

Introduction

Human Action Recognition (HAR) is a crucial research area in computer vision and artificial intelligence, primarily driven by the advancements in deep learning techniques. Traditionally, HAR has relied heavily on RGB cameras to capture and analyze human activities. However, RGB cameras face significant challenges in real-world applications, such as varying light conditions, fast motion, and privacy concerns. These limitations necessitate the exploration of alternative technologies.

Event cameras, also known as Dynamic Vision Sensors (DVS), have emerged as a promising alternative due to their unique advantages, including low energy consumption, high dynamic range, and dense temporal resolution. Unlike RGB cameras, event cameras capture changes in the scene asynchronously, making them highly efficient in low-light and high-speed scenarios. Despite these advantages, existing event-based HAR datasets are often low resolution, limiting their effectiveness.

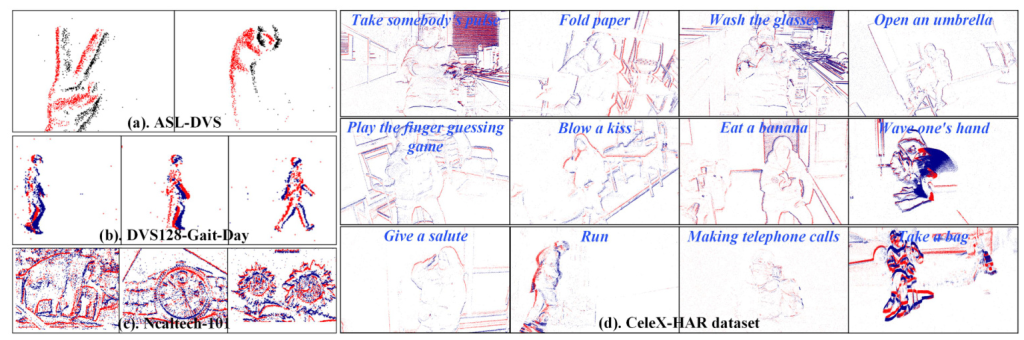

To address this gap, this study introduces CeleX-HAR, a large-scale, high-definition (1280 × 800) human action recognition dataset captured using the CeleX-V event camera. The dataset includes 150 common action categories and 124,625 video sequences, considering various factors such as multi-view, illumination, action speed, and occlusion. Additionally, the study proposes a novel Mamba vision backbone network, EVMamba, designed to enhance event stream-based HAR by leveraging spatial and temporal scanning mechanisms.

Related Work

Event-based Recognition

Event-based recognition has seen significant advancements, with research focusing on three primary approaches: Convolutional Neural Networks (CNN), Spiking Neural Networks (SNN), and Graph Neural Networks (GNN). Each approach leverages the unique representation of event streams to achieve efficient recognition.

- CNN-based Models: Wang et al. proposed a CNN model for recognizing human gaits using event cameras. These models effectively encode spatiotemporal information for recognition tasks.

- SNN-based Models: SNNs, as the third generation of neural networks, offer energy-efficient recognition by encoding event streams. Notable works include Peter et al.’s weight and threshold balancing method and Nicolas et al.’s sparse backpropagation method.

- GNN-based Models: GNNs have also been explored for event-based recognition. For instance, Gao et al. proposed a multi-view event camera action recognition framework, HyperMV, which captures cross-view and temporal features using a multi-view hypergraph neural network.

Human Action Recognition

HAR has been extensively studied, with CNNs being widely used for encoding spatiotemporal information. Recent advancements include Transformer-based models, which excel at capturing long-range dependencies but are computationally expensive. Notable works include Wang et al.’s dynamic visual sensor-based framework and Li et al.’s multimodal Transformer network for integrating RGB/Event features.

State Space Model

State Space Models (SSM) have gained popularity for their ability to model long-range dependencies in sequences. Gu et al. introduced structured state space sequence models (S4) for deep learning, which efficiently model long sequences. Subsequent works have explored the potential of SSM in various domains, including video modeling and enhancing model performance through multiple scanning orders.

Research Methodology

Overview

The proposed framework leverages the Mamba network for event-based human action recognition. The framework integrates event data representations (event images and event voxels) to fully utilize the rich temporal information inherent in event data. A novel voxel temporal scanning mechanism is introduced to process voxel grids chronologically, effectively integrating dense temporal information.

Input Representation

Event streams are represented as (E \in \mathbb{R}^{W \times H \times T}), where each event point (e_i = [x, y, t, p]) includes spatial coordinates ((x, y)), timestamp (t), and polarity (p). Event frames (EF \in \mathbb{R}^{T’ \times C \times H \times W}) are generated by segmenting the event stream into clips. Additionally, event voxels (EV \in \mathbb{R}^{a \times b \times c}) are used to preserve temporal information.

Network Architecture

The framework employs the Mamba network for feature extraction from event frames and voxels. The network includes:

- Mamba-based Visual Backbone: Event frames are partitioned into patches and transformed into token representations. The VMamba backbone network, constructed with four-stage VSS blocks, extracts robust spatial and temporal features.

- Voxel Temporal Scanning Mechanism: This mechanism enhances temporal information by scanning event voxels chronologically and transforming them into event tokens.

- Classification Head: The extracted features are fused and fed into a classification head, which maps feature dimensions to predicted scores for each action category.

Experimental Design

CeleX-HAR Benchmark Dataset

The CeleX-HAR dataset was collected following specific protocols to ensure diversity and comprehensiveness:

- Multi-view: Actions were recorded from different views (front, left, right, down, and up).

- Various Illumination: Videos were captured under different illumination conditions (low, middle, and full).

- Camera Motions: Both moving and stationary cameras were used to capture actions.

- Video Length: Actions typically lasted 2-3 seconds, with some extending beyond 5 seconds.

- Speed of Action: Videos included actions at low, medium, and high speeds.

- Occlusion: Partial occlusion was considered in some videos.

- Glitter: Videos with conspicuous flash were included.

- Capture Distance: Videos were recorded at varying distances (1-2 meters, 2-3 meters, and beyond 3 meters).

- Large-scale & High-definition: The dataset includes 124,625 high-definition event video sequences covering 150 human activities.

Statistical Analysis and Baselines

The dataset comprises 150 categories of common human actions, with 124,625 videos split into training (99,642) and testing (24,983) subsets. Baseline models for comparison include CNN-based models (e.g., ResNet50, ConvLSTM), Transformer-based models (e.g., Video-SwinTrans, TimeSformer), RWKV-based models, and Mamba-based models.

Results and Analysis

Dataset and Evaluation Metric

Experiments were conducted on multiple datasets, including ASL-DVS, N-Caltech101, DVS128-Gait-Day, Bully10K, Dailydvs-200, and CeleX-HAR. The top-1 accuracy metric was used for evaluation.

Implementation Details

The framework was optimized end-to-end with a learning rate of 0.001 and weight decay of 0.0001. The SGD optimizer was used, and the model was trained for 30 epochs. The VMamba backbone network included 33 S6 blocks, and 8 event frames were used as input.

Comparison with Other SOTA Algorithms

The proposed method achieved state-of-the-art performance across multiple datasets:

- ASL-DVS: Achieved 99.9% top-1 accuracy, outperforming methods like M-LSTM and graph-based models.

- N-Caltech101: Achieved 93.9% top-1 accuracy, significantly better than ResNet50 and EFV++.

- DVS128-Gait-Day: Achieved 99.0% top-1 accuracy, surpassing EVGait-3DGraph.

- Bully10K: Achieved state-of-the-art performance, outperforming HRNet and EFV++.

- Dailydvs-200: Achieved superior performance, surpassing TSM and Swin-T.

- CeleX-HAR: Achieved 72.3% top-1 accuracy, outperforming TSM and Transformer-based methods.

Component Analysis

The effectiveness of the proposed method was validated through component analysis. The voxel temporal scanning mechanism significantly improved accuracy, demonstrating the importance of dense temporal information.

Ablation Study

Ablation studies investigated different event representations, fusion methods, trajectory length thresholds, number of input frames, and input resolutions. The results highlighted the importance of integrating event frames and voxels, using simple addition for feature fusion, and optimizing input frames and resolution.

Visualization

Confusion matrices and feature distributions were visualized to illustrate the model’s performance. The feature maps generated by the proposed model showed a heightened focus on the target, and top-5 prediction results demonstrated accurate recognition of various actions.

Overall Conclusion

This study introduces CeleX-HAR, a large-scale, high-definition event-based HAR dataset, and proposes EVMamba, a novel event stream-based HAR model. The dataset addresses the limitations of existing low-resolution datasets and provides a comprehensive benchmark for future research. EVMamba leverages innovative spatio-temporal scanning mechanisms to achieve state-of-the-art performance across multiple datasets. The release of the dataset and source code is anticipated to stimulate further research and development in event-based HAR and computer vision.

Code:

https://github.com/event-ahu/celex-har