Authors:

Khadija Iddrisu, Waseem Shariff, Noel E. O'Connor, Joseph Lemley, Suzanne Little

Paper:

https://arxiv.org/abs/2408.10395

Introduction

Face and eye tracking are pivotal tasks in computer vision, with significant applications in healthcare, in-cabin monitoring, attention estimation, and human-computer interaction. Traditional frame-based cameras often struggle with under-sampling of fast-moving objects, scale variations, and motion-induced shape deformations. Event cameras (ECs), also known as neuromorphic sensors, offer a promising alternative: each pixel reports changes in local light intensity independently, producing an asynchronous stream of data termed “events.” This study evaluates how well conventional algorithms work on event data that has been transformed into a frame format, while preserving the unique benefits of event cameras.

Related Work

Face Detection and Tracking

Face tracking has applications in neuroscience, automotive systems, and more. ECs, with their low latency and high temporal resolution, are well suited to face analysis tasks such as gaze estimation, face pose alignment, yawn detection, and emotion recognition. However, the lack of event-based datasets for face tracking has kept this domain from receiving widespread attention. Previous works have used various representations and algorithms, including patch-based sparse dictionaries, purely event-based approaches, and kernelized correlation filters.

Eye Tracking

Traditional eye tracking relied on conventional cameras, but recent attention has shifted towards ECs due to their asynchronous data presentation and low latency. Foundational works used traditional image processing and statistical methods, while recent studies have leveraged neural networks to address the unique challenges posed by sparse event data. Techniques such as Change-Based Convolutional Long Short-Term Memory (CB-ConvLSTM) models, Spiking Neural Networks (SNN), and U-Net based networks have been proposed for precise pupil tracking and eye region segmentation.

Research Methodology

Dataset

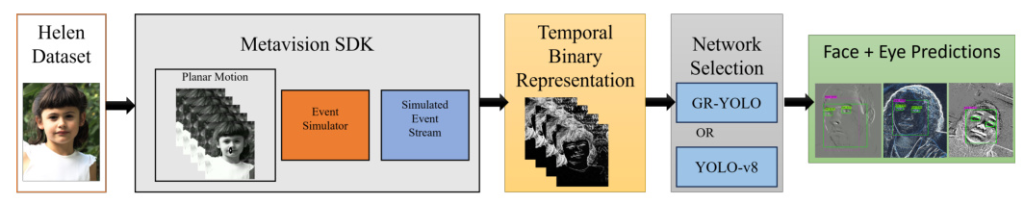

The availability of datasets in event-based vision, particularly for face and eye tracking, remains limited. This study uses the Helen Facial Landmarks Dataset, comprising 2,330 images with landmark annotations, converted into an event format using an event simulator. The PlanarMotionStream object of the Metavision software suite was used to simulate camera motion across the images, creating a realistic simulation of 6 degrees of freedom (6-DOF) motion. This process generates a continuous stream of events, addressing the problem of under-sampling.
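To make the idea of turning a still image into events more concrete, here is a minimal sketch of the generic contrast-threshold event model: a pixel fires an event when its log intensity changes by more than a threshold. This is not the Metavision API and not the authors' pipeline. The function name `simulate_events`, the `threshold` value, and the use of integer pixel shifts as a stand-in for camera motion are all assumptions made for illustration.

```python
import numpy as np

def simulate_events(image, shifts, threshold=0.2, eps=1e-3):
    """Return an array of (t, y, x, polarity) rows for a translated image.

    image:  2-D float array with values in [0, 1].
    shifts: list of (dy, dx) integer steps, one per simulated time step.
    Hypothetical helper: a toy stand-in for a real event simulator.
    """
    reference = np.log(image + eps)      # log intensity at each pixel's last event
    current = image
    events = []
    for t, (dy, dx) in enumerate(shifts):
        # Planar motion approximated by a circular pixel shift.
        current = np.roll(current, shift=(dy, dx), axis=(0, 1))
        log_now = np.log(current + eps)
        diff = log_now - reference
        fired = np.abs(diff) >= threshold
        ys, xs = np.nonzero(fired)
        polarity = np.where(diff[ys, xs] > 0, 1, -1)
        events.append(np.stack([np.full(ys.shape, t), ys, xs, polarity], axis=1))
        # Only pixels that fired update their reference level.
        reference[fired] = log_now[fired]
    if not events:
        return np.empty((0, 4), dtype=int)
    return np.concatenate(events, axis=0)
```

Shifting a bright square one pixel to the right produces negative events along its trailing edge and positive events along its leading edge, which is the kind of edge-driven output an event camera gives under motion.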

Event Representation

Events are transformed into a representation accepted by Convolutional Neural Networks (CNNs) using the Temporal Binary Representation (TBR) method. TBR employs a binary method for processing events in pixels over a fixed time window, creating a binary representation for each pixel indicating the presence or absence of an event. These binary values are stacked into a tensor, converted into a decimal number, and normalized, allowing the representation of multiple accumulation times in a single frame without information loss.
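The encoding described above can be sketched in a few lines of NumPy. This is an illustrative reading of the description, not the authors' code. The function name, the `(t, y, x)` event layout, the choice of the first time bin as the most significant bit, and the normalization by `2**num_bins - 1` are assumptions.

```python
import numpy as np

def temporal_binary_representation(events, height, width, num_bins, bin_duration):
    """Encode events as one frame with values in [0, 1].

    events: array of rows (t, y, x), with t measured from the window start.
    Each pixel gets one bit per time bin: 1 if any event occurred, else 0.
    """
    bits = np.zeros((num_bins, height, width), dtype=np.uint8)
    t = events[:, 0]
    ys = events[:, 1].astype(int)
    xs = events[:, 2].astype(int)
    bin_idx = (t // bin_duration).astype(int)
    valid = (bin_idx >= 0) & (bin_idx < num_bins)   # drop events outside the window
    bits[bin_idx[valid], ys[valid], xs[valid]] = 1

    # Read the stack of bits at each pixel as a binary number
    # (assumption: the first bin is the most significant bit).
    weights = 2 ** np.arange(num_bins - 1, -1, -1)
    decimal = np.tensordot(weights, bits, axes=1)

    # Assumed normalization: divide by the largest representable value.
    return decimal / (2 ** num_bins - 1)
```

With three bins, a pixel that fires in the first and third bins has the bit pattern 101, which is 5 in decimal and 5/7 after normalization. Because each bit pattern maps to a distinct value, the per-bin presence information can be recovered from the single frame.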

Experimental Design

Network Architecture

GR-YOLOv3

The GR-YOLOv3 architecture integrates the YOLOv3 Tiny model with a Gated Recurrent Unit (GRU), enabling the model to capture motion and changes over time. This design keeps the model compatible with existing architectures and enhances its adaptability in real-world scenarios.
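The general pattern of pairing a convolutional feature extractor with a GRU can be sketched as follows. This is not the authors' network: the tiny backbone is only a placeholder for YOLOv3 Tiny, and the class name, layer sizes, and single box-regression head are assumptions chosen to keep the example short.

```python
import torch
from torch import nn

class RecurrentDetectorSketch(nn.Module):
    """Per-frame CNN features passed through a GRU (hypothetical illustration)."""

    def __init__(self, hidden_size=64, num_outputs=4):
        super().__init__()
        # Placeholder backbone; the real model uses YOLOv3 Tiny here.
        self.backbone = nn.Sequential(
            nn.Conv2d(1, 16, kernel_size=3, stride=2, padding=1),
            nn.LeakyReLU(0.1),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
            nn.LeakyReLU(0.1),
            nn.AdaptiveAvgPool2d(1),
        )
        # The GRU carries information across consecutive frames.
        self.gru = nn.GRU(input_size=32, hidden_size=hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, num_outputs)

    def forward(self, frames):
        # frames: (batch, time, channels, height, width)
        b, t, c, h, w = frames.shape
        feats = self.backbone(frames.reshape(b * t, c, h, w)).reshape(b, t, -1)
        out, _ = self.gru(feats)
        return self.head(out)  # one prediction per frame: (batch, time, num_outputs)
```

Feeding a batch of two five-frame clips, for example `torch.zeros(2, 5, 1, 32, 32)`, yields an output of shape `(2, 5, 4)`, one set of four values per frame. The recurrent state is what lets a prediction at one frame depend on earlier frames.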

YOLOv8

YOLOv8 offers enhanced accuracy and speed, making it suitable for real-time applications. It features a fully convolutional architecture, an enhanced backbone network, and the elimination of anchor boxes, improving its accuracy and robustness in detecting objects of various sizes.

Training

The dataset, comprising 2,330 accumulated frames, was split into training and validation sets with a ratio of 80:20. The models were implemented in PyTorch and trained on an NVIDIA GeForce RTX 2080 Ti GPU using the AdamW optimizer with a learning rate of 1 × 10⁻³ and a weight decay of 1 × 10⁻³. Mean Squared Error (MSE) was used as the loss function.
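A minimal PyTorch sketch of this setup is shown below. Only the split ratio, optimizer, learning rate, weight decay, and loss come from the description above. The random stand-in data, the linear placeholder model, the batch size, the seed, and the four-value target are assumptions for illustration.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset, random_split

torch.manual_seed(0)

# Stand-in data: 2,330 tiny single-channel frames, each with 4 target values.
frames = torch.rand(2330, 1, 16, 16)
targets = torch.rand(2330, 4)
dataset = TensorDataset(frames, targets)

# 80:20 split using integer arithmetic: 1,864 training and 466 validation samples.
n_train = len(dataset) * 8 // 10
n_val = len(dataset) - n_train
train_set, val_set = random_split(
    dataset, [n_train, n_val], generator=torch.Generator().manual_seed(0)
)

# Placeholder model; the study trains YOLO-based detectors instead.
model = nn.Sequential(nn.Flatten(), nn.Linear(16 * 16, 4))
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3, weight_decay=1e-3)
criterion = nn.MSELoss()

def train_one_epoch(loader):
    """Run one pass over the loader and return the mean per-sample loss."""
    model.train()
    total = 0.0
    for x, y in loader:
        optimizer.zero_grad()
        loss = criterion(model(x), y)
        loss.backward()
        optimizer.step()
        total += loss.item() * x.size(0)
    return total / len(loader.dataset)

train_loader = DataLoader(train_set, batch_size=64, shuffle=True)
epoch_loss = train_one_epoch(train_loader)
```

On a machine with a GPU, the model and each batch would be moved to the device with `.to("cuda")`. That step is left out here so the sketch runs anywhere.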

Results and Analysis

Quantitative Results: Synthetic Data Evaluation

The effectiveness of the dataset for face and eye tracking was assessed by comparing the outcomes of testing three iterations of YOLO models on the test set of the synthetic data. The frame-based methodology demonstrated excellent performance, with YOLOv8 leading in terms of error minimization and localization accuracy.
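This summary does not name the exact metrics used. As an illustration only, Intersection over Union (IoU) is one common way to quantify localization accuracy for bounding boxes. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)`, and the function name is hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Clamp at zero so disjoint boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

Two 2 × 2 boxes offset by one unit in each direction overlap in a 1 × 1 square, giving an IoU of 1/7. Identical boxes score 1 and disjoint boxes score 0.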

Quantitative Results: Evaluation on Real Event Camera Data

The models trained on the synthetic dataset were tested against real event camera datasets, including the Faces in Event Stream (FES) dataset and naturalistic driving data. The results indicated that the frame-based approach effectively generalizes to real event camera datasets, with YOLOv8 showing noticeable improvement in performance across all metrics and datasets compared to GR-YOLOv3.

Qualitative Results

Qualitative analysis highlighted both models’ capability of predicting faces and eyes from videos, demonstrating robust performance in the presence of rapid motion, different head positions, and occlusions.

Overall Conclusion

This study successfully demonstrated the viability of integrating conventional algorithms with event-based data transformed into a frame format for face and eye tracking. The frame-based approach offers computational efficiency and accessibility, addressing the issues of under-sampling present in traditional RGB cameras. The findings validate the dataset’s utility and highlight the distinct advantages of frame-based approaches, making them a preferable choice for applications requiring high reliability and low computational demand. Future research can explore the potential of event-based vision technology in various applications, including sports analytics, blink and saccade detection, emotion recognition, and gaze tracking.

Acknowledgement

This research was conducted with the financial support of Science Foundation Ireland (SFI) under grant no. 12/RC/2289_P2 at the Insight SFI Research Centre for Data Analytics, Dublin City University, in collaboration with FotoNation Ireland (Tobii).