Authors:

Richard H. Moulton, Gary A. McCully, John D. Hastings

Paper:

https://arxiv.org/abs/2405.18753

Introduction

In the rapidly evolving field of cybersecurity, the reliability and integrity of AI-driven research are paramount. As AI systems are increasingly deployed to protect critical infrastructure, analyze network traffic, and detect advanced persistent threats (APTs), a significant challenge has emerged: the reproducibility crisis. This crisis, where many studies’ results cannot be reliably reproduced or replicated, threatens the foundation of scientific progress and the practical deployment of robust models in real-world applications.

Adversarial robustness, the study of ensuring that deep neural networks (DNNs) maintain functionality in the face of intentional or unintentional input perturbations, is crucial for developing resilient cyber defenses. However, the rapid evolution of AI technologies and the growing complexity of cybersecurity landscapes have made reproducing and validating research results increasingly difficult. This paper presents a case study that highlights the challenges of reproducing state-of-the-art research in adversarial robustness, aiming to spark a crucial dialogue within the cybersecurity community about the need for robust, verifiable research.

Related Work

Adversarial Robustness in Cybersecurity

Adversarial robustness refers to the ability of machine learning models to maintain their performance and integrity when faced with maliciously crafted inputs. In cybersecurity, this concept is critical for several applications:

- Network Intrusion Detection: AI-based intrusion detection systems (IDS) must be robust against adversarial examples that could mask malicious network traffic as benign.

- Malware Analysis: Machine learning models used for malware classification need to resist adversarial perturbations that could cause malicious software to be misclassified as harmless.

- Phishing Detection: AI systems designed to identify phishing attempts must be resilient against subtle manipulations that could cause fraudulent emails to bypass detection.

- Anomaly Detection in IoT Networks: AI models monitoring IoT networks must withstand adversarial attacks that could mask abnormal behavior indicative of a security breach.

The Reproducibility Crisis in Cybersecurity

The reproducibility crisis poses several risks to the cybersecurity community:

- Unreliable Defense Mechanisms: If adversarial robustness techniques cannot be reliably reproduced, verifying their effectiveness becomes challenging, potentially leading to the deployment of vulnerable AI systems.

- Hindered Progress: The inability to reproduce results impedes the cumulative advancement of knowledge, slowing down the development of more robust AI-driven security solutions.

- Eroded Trust: Persistent reproducibility issues may erode trust in AI-based cybersecurity solutions, leading to reluctance in adopting these technologies.

- Increased Vulnerability: The inability to quickly validate and build upon existing research could leave organizations vulnerable to new attack vectors.

Research Methodology

Research Design

This study aimed to explore the challenges in the reproducibility of AI-driven cybersecurity research in the context of adversarial robustness. Using a case study methodology, it examined specific issues in the reproduction process, providing insights for broader discussions on reproducibility in cybersecurity. The paper “SoK: Certified Robustness for Deep Neural Networks” was chosen for its relevance to AI-driven cybersecurity and the availability of tools and datasets. The goal was to reproduce the results using the authors’ VeriGauge toolkit.

Attempting to Reproduce the Results

The study followed the original paper’s procedures, including software setup and dependency installation. To support VeriGauge’s GPU needs, the NVIDIA GeForce RTX 4090 driver on Windows 11 was updated, and Ubuntu 22.04 with the latest NVIDIA CUDA toolkit was installed on WSL2. The first challenge was that the non-Python dependencies listed in Verigauge/eran/install.sh were outdated or incompatible with current software repositories, necessitating manual sourcing of these packages.

Navigating Software and Hardware Compatibility Issues

Configuring the experimental environment revealed numerous software and hardware compatibility issues, complicating the reproduction of research across different computational environments. Conflicts between deep learning libraries, CUDA toolkits, and GPU drivers caused cryptic errors and unexpected terminations of the VeriGauge toolkit. Overcoming these issues required exploring alternative hardware, installing specific software versions, and conducting extensive research.

Experimental Design

Software and Hardware Setup

The experiments ran on a Windows 11 host with an NVIDIA GeForce RTX 4090, using an updated GPU driver and Ubuntu 22.04 with the latest NVIDIA CUDA toolkit under WSL2. Because the non-Python dependencies listed in Verigauge/eran/install.sh were outdated or incompatible with current software repositories, they had to be sourced manually.
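One way to make a setup like this easier to compare across machines is to record it in a machine-readable form alongside the results. The following is a minimal sketch, not part of VeriGauge or the original study: the function name `snapshot_environment` is hypothetical, and the GPU query assumes `nvidia-smi` is on the PATH (if it is not, the GPU field is simply left empty).

```python
import json
import platform
import shutil
import subprocess
import sys
from importlib import metadata


def snapshot_environment():
    """Collect interpreter, OS, package, and GPU driver details as a dict.

    Hypothetical helper for illustration; not part of VeriGauge.
    """
    # Installed Python packages and their versions.
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:
            packages[name] = dist.version

    # GPU name and driver version, only if nvidia-smi is available.
    gpu_info = None
    if shutil.which("nvidia-smi"):
        try:
            result = subprocess.run(
                ["nvidia-smi", "--query-gpu=name,driver_version",
                 "--format=csv,noheader"],
                capture_output=True, text=True, timeout=10, check=False,
            )
            if result.returncode == 0:
                gpu_info = result.stdout.strip()
        except (OSError, subprocess.TimeoutExpired):
            gpu_info = None

    return {
        "python": sys.version,
        "platform": platform.platform(),
        "packages": dict(sorted(packages.items())),
        "gpu": gpu_info,
    }


if __name__ == "__main__":
    # Print the snapshot as JSON so it can be archived with the logs.
    print(json.dumps(snapshot_environment(), indent=2))
```

Saving this output next to each run's logs gives later readers a concrete record of which driver, interpreter, and package versions produced a given result.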

Challenges Encountered

- Software Version Conflicts: Not all of the software versions specified in the Python ‘requirements.txt’ were still available, necessitating manual adjustments.

- TensorFlow Compatibility: TensorFlow 2.0 and later no longer include the contrib module, so the toolkit requires TensorFlow 1.x, which is not available for Python 3.8 or newer.

- GPU Access Issues: VeriGauge couldn’t access the GPU due to compatibility issues between the installed NVIDIA driver and CUDA toolkit.

- Memory Constraints: The process was terminated by the OOM (out-of-memory) killer after exhausting RAM, even after WSL was reconfigured to access the full 16 GB of RAM and 64 GB of swap space was allocated.
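Two of the challenges above, the Python version limit for TensorFlow 1.x and the memory available inside WSL, can be checked before starting a long run. The sketch below is illustrative only: the function names are hypothetical, the version rule simply encodes the constraint stated above, and the memory check assumes a Linux environment that exposes `/proc/meminfo`.

```python
import os
import sys


def tf1_installable(python_version=None):
    """Return True if the interpreter is older than Python 3.8.

    Encodes the constraint described above: TensorFlow 1.x is not
    available for Python 3.8 or newer.
    """
    major, minor = (python_version or sys.version_info)[:2]
    return (major, minor) < (3, 8)


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: KiB} dict."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        parts = rest.split()
        if sep and parts and parts[0].isdigit():
            values[key.strip()] = int(parts[0])
    return values


def memory_headroom_gib(meminfo):
    """Available RAM plus free swap, in GiB."""
    kib = meminfo.get("MemAvailable", 0) + meminfo.get("SwapFree", 0)
    return kib / (1024 ** 2)


if __name__ == "__main__":
    print("TensorFlow 1.x installable on this Python:", tf1_installable())
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as handle:
            info = parse_meminfo(handle.read())
        print("RAM + swap headroom: {:.1f} GiB".format(memory_headroom_gib(info)))
```

A check like this does not prevent the OOM killer from terminating a run, but it does make it clear up front whether the WSL memory and swap settings have actually taken effect.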

Successful Execution

Despite these challenges, a subset of the original tests was successfully run. The kReLU method on the MNIST dataset, using a fully connected neural network, was investigated further as it was the first successful test. A successful run produced five log files, providing valuable insights into the reproducibility of the original study.

Results and Analysis

Successfully Running a Subset of Results

As noted above, only a subset of the original tests ran to completion. The first to succeed was the kReLU method on the MNIST dataset with a fully connected neural network, and the five log files from that run were the material used to compare against the original study.

Inability to Run All Key Tests

Despite being a relatively recent creation, the VeriGauge toolkit faced challenges due to the ever-evolving software ecosystem. Outdated or incompatible dependencies made installation and execution difficult. The intricate interplay between the toolkit, deep learning libraries, and hardware dependencies added further challenges.

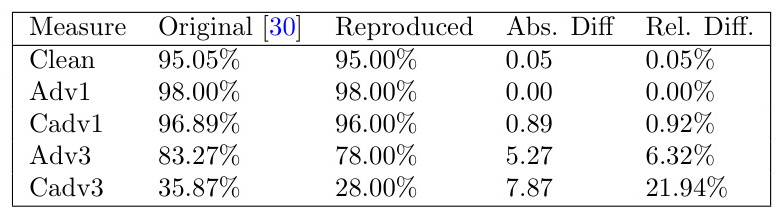

Discrepancies between Original and Reproduced Results

Even when reproduction appeared successful, discrepancies between the original and reproduced results emerged. These discrepancies raise concerns about the long-term reproducibility of research due to software evolution. Comparing the original results with the reproduced results required reviewing test log files.
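Once the values of interest have been extracted from the logs, the comparison itself can be automated. The following is a minimal sketch under stated assumptions: the log format is not described here, so the function works on already-parsed dictionaries; the name `compare_results`, the keys, and the numbers are all placeholders for illustration, not results from either study.

```python
import math


def compare_results(original, reproduced, rel_tol=0.0, abs_tol=0.0):
    """Compare two {test_name: value} dicts and describe each entry.

    Hypothetical helper: test names and tolerances are chosen by the user.
    """
    report = {}
    for key in sorted(set(original) | set(reproduced)):
        if key not in reproduced:
            report[key] = "missing in reproduction"
        elif key not in original:
            report[key] = "not in original"
        elif math.isclose(original[key], reproduced[key],
                          rel_tol=rel_tol, abs_tol=abs_tol):
            report[key] = "match"
        else:
            delta = reproduced[key] - original[key]
            report[key] = "differs by {:+.4g}".format(delta)
    return report


if __name__ == "__main__":
    # Placeholder values only; these are not figures from any paper.
    original = {"case-1": 0.50, "case-2": 0.25, "case-3": 0.10}
    reproduced = {"case-1": 0.50, "case-2": 0.30}
    for name, status in compare_results(original, reproduced, abs_tol=0.01).items():
        print(name, "->", status)
```

Reporting missing tests separately from numeric differences keeps the two failure modes described in this section, tests that could not be run and tests whose results differ, clearly distinguished.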

Overall Conclusion

The reproducibility crisis in adversarial robustness research presents a critical challenge that reverberates throughout the cybersecurity landscape. This crisis undermines the scientific integrity of AI research and poses significant risks to the practical implementation of AI-driven security solutions. The challenges encountered in this study underscore the urgent need for the cybersecurity research community to adopt best practices that prioritize reproducibility. These practices include containerization to standardize computational environments, comprehensive documentation, and collaborative initiatives to foster transparency and open science. By addressing these issues, researchers can ensure that the AI models designed to protect critical infrastructure and secure networks against threats are more reliably robust.

Acknowledgments

ChatGPT assisted with the grammar of this original work.