Authors:

Jinze Sun, Yongpan Sheng, Lirong He

Paper:

https://arxiv.org/abs/2408.07911

Introduction

Knowledge graphs (KGs) have become increasingly important in natural language processing and knowledge engineering because they model real-world facts as multi-relational graphs. Real-world knowledge changes over time, however, which motivated temporal knowledge graphs (TKGs) that attach timestamps to relationships and events. Because TKGs are inherently incomplete, temporal knowledge graph reasoning (TKGR) models aim to extrapolate new facts from historical data.

Despite advancements in TKGR models, existing approaches often learn biased data representations and spurious correlations, failing to discern causal relationships between events. This paper introduces a novel framework, CEGRL-TKGR, which incorporates causal structures into graph-based representation learning to enhance the understanding of causal relationships and improve prediction accuracy.

Related Work

Temporal Knowledge Graph Reasoning

TKG reasoning focuses on predicting future facts based on historical events. Various models have been developed, including:

- CyGNet: Uses a copy-generation mechanism to capture how often facts recur across the whole history.

- GHNN and GHT: Construct temporal point processes to capture temporal dynamics.

- RE-NET and RE-GCN: Introduce graph neural networks (GNNs) to model structural and temporal dependencies.

- TLogic and TITer: Use logical rules and reinforcement learning for interpretable models.

These models, however, often overlook confounding factors, leading to incorrect predictions based on non-causal correlations.

Causal Representation Learning

Causal representation learning in graphs aims to improve the explanatory power and generalization performance of GNNs by applying causal reasoning principles. Various methods have been proposed, such as DIR, GOOD, CAL, and CFLP, which focus on distinguishing causal features from confounding features. However, none have combined causal learning with TKGR.

Preliminary

Notations and Task Formulation

A TKG \(G\) is formalized as a sequence of graph slices \(\{G_1, G_2, \ldots, G_T\}\), where each slice \(G_t\) consists of the facts occurring at timestamp \(t\). The task is to predict the missing entity in a query \((e_s, r, ?, t)\).
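To make the formulation concrete, here is a minimal sketch of how a TKG could be stored as timestamped slices and how the history for a query could be selected. The `Fact` type, the helper names, and the toy facts are assumptions made for illustration; they are not taken from the paper.

```python
from collections import defaultdict
from typing import NamedTuple


class Fact(NamedTuple):
    subject: str
    relation: str
    obj: str
    timestamp: int


def build_slices(facts):
    """Group facts by timestamp so that slices[t] plays the role of G_t."""
    slices = defaultdict(list)
    for fact in facts:
        slices[fact.timestamp].append(fact)
    # Return the slices in chronological order.
    return dict(sorted(slices.items()))


def history_for_query(slices, query_time):
    """Keep only slices strictly before query_time (extrapolation setting)."""
    return {t: g for t, g in slices.items() if t < query_time}


# Toy facts, invented for illustration only.
facts = [
    Fact("A", "consults", "B", 1),
    Fact("B", "visits", "C", 2),
    Fact("A", "consults", "C", 2),
    Fact("A", "visits", "B", 3),
]
slices = build_slices(facts)

# A query (e_s, r, ?, t) = ("A", "visits", ?, 3) may only see slices 1 and 2.
history = history_for_query(slices, query_time=3)
```

A model would then rank every candidate entity for the missing slot using only `history`.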

A Causal Perspective on the TKGR Task

The GNN-based inference process can be abstracted through a causal graph, encompassing variables such as graph data \(G_t\), causal features \(C\), confounding features \(N\), representations \(R\), and predictions \(Y\). The causal embedding captures the causal features, while confounding features introduce spurious correlations.

Causal Intervention

Causal intervention helps disentangle the effects of confounding features by modeling \(P(Y \mid do(C))\) instead of \(P(Y \mid C)\). This involves severing the backdoor pathway from \(N\) to \(Y\) to mitigate the impact of confounding features.
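For reference, the standard backdoor adjustment from the causal inference literature makes the difference between the two quantities explicit. The form below assumes that the confounder N takes discrete values n and that conditioning on N blocks every backdoor path between C and Y; this summary does not state whether the paper uses exactly this estimator.

```latex
P(Y \mid do(C)) = \sum_{n} P(Y \mid C, N = n)\, P(N = n)
\qquad \text{whereas} \qquad
P(Y \mid C) = \sum_{n} P(Y \mid C, N = n)\, P(N = n \mid C)
```

The only difference is the weighting term: under intervention the confounder is averaged with its marginal distribution, so any dependence between C and N no longer leaks into the prediction.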

The Proposed Approach

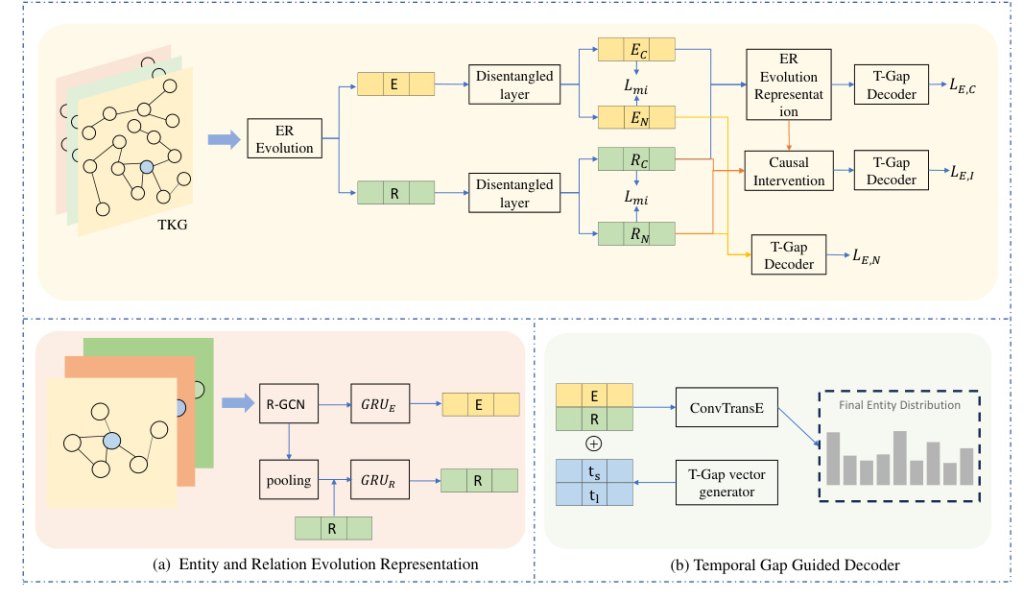

CEGRL-TKGR consists of three parts: representation learning, disentangled learning, and a temporal gap-guided decoder.

Entity and Relation Evolution Representation

Representation learning involves aggregating information from multiple relationships and neighbors within each \(G_t\). The ω-layer RGCN model is used to update entity and relation representations over time.
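The aggregation step can be sketched as a single relational message-passing layer in plain Python. This is a simplified illustration of the general RGCN idea (one weight matrix per relation, a self-loop weight, mean normalisation per relation), not the paper's exact layer. The function names, the ReLU activation, and the toy weights are assumptions, and the sketch covers only entity updates within one slice.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, x) for x in vector]


def rgcn_layer(h, edges, w_rel, w_self):
    """One relational message-passing step over the edges of a single slice.

    h:      dict mapping entity -> embedding (list of floats)
    edges:  list of (subject, relation, object) triples
    w_rel:  dict mapping relation -> weight matrix
    w_self: weight matrix applied to the entity's own embedding
    """
    # Index incoming neighbours by object entity and relation.
    incoming = {}
    for s, r, o in edges:
        incoming.setdefault(o, {}).setdefault(r, []).append(s)

    updated = {}
    for entity, vec in h.items():
        total = matvec(w_self, vec)  # self-loop term
        for r, subjects in incoming.get(entity, {}).items():
            norm = 1.0 / len(subjects)  # mean over neighbours under relation r
            for s in subjects:
                message = matvec(w_rel[r], h[s])
                total = [t + norm * m for t, m in zip(total, message)]
        updated[entity] = relu(total)
    return updated


# Toy example with identity weights, invented for illustration.
identity = [[1.0, 0.0], [0.0, 1.0]]
h = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
edges = [("A", "consults", "B"), ("C", "consults", "B")]
updated = rgcn_layer(h, edges, {"consults": identity}, identity)
```

Stacking ω layers amounts to feeding `updated` back into `rgcn_layer` ω times, typically with separate weights per layer.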

Disentangled Causal and Confounding Features

Entity and relational representations are separated into causal and confounding features using a decoupling module. Mutual information minimization is employed to ensure independence between these features.
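The sketch below illustrates the general shape of such a decoupling step: two separate projections split one embedding into a causal part and a confounding part, and a penalty discourages statistical dependence between them. The penalty used here is a squared cross-covariance, which is a much simpler stand-in for a mutual information estimator and is not the paper's objective: zero cross-covariance is necessary for independence but not sufficient. All names and weights are hypothetical.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def split_features(embedding, w_causal, w_confound):
    """Project one embedding into a causal and a confounding feature vector."""
    return matvec(w_causal, embedding), matvec(w_confound, embedding)


def cross_covariance_penalty(causal_batch, confound_batch):
    """Sum of squared cross-covariances between the two feature sets.

    A simple decorrelation proxy, NOT a mutual information estimator.
    """
    n = len(causal_batch)
    mean_c = [sum(col) / n for col in zip(*causal_batch)]
    mean_n = [sum(col) / n for col in zip(*confound_batch)]
    penalty = 0.0
    for i in range(len(mean_c)):
        for j in range(len(mean_n)):
            cov = sum(
                (c[i] - mean_c[i]) * (m[j] - mean_n[j])
                for c, m in zip(causal_batch, confound_batch)
            ) / n
            penalty += cov ** 2
    return penalty


# Toy batch where the second coordinate is twice the first (fully correlated).
batch = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
w_causal = [[1.0, 0.0]]    # hypothetical projection picking coordinate 0
w_confound = [[0.0, 1.0]]  # hypothetical projection picking coordinate 1
pairs = [split_features(e, w_causal, w_confound) for e in batch]
correlated = cross_covariance_penalty([c for c, _ in pairs], [m for _, m in pairs])

# Toy batch where the two feature sets are uncorrelated.
uncorrelated = cross_covariance_penalty(
    [[1.0], [-1.0], [1.0], [-1.0]],
    [[1.0], [1.0], [-1.0], [-1.0]],
)
```

In training, a term of this kind would be added to the loss so that gradient updates push the two projections toward carrying different information.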

Temporal Gap Guided Decoder

The decoder scores candidate entities and relations while accounting for the time intervals between events. A ConvTransE decoder processes the embeddings together with time interval vectors to produce the final entity distribution.

Causal Intervention and Training Objective

Causal embeddings learn the intrinsic causes of events, while confounding features capture the biases that give rise to spurious correlations. The intervention features combine causal and confounding features at the representation level. The loss function for the link prediction task incorporates a supervised classification loss, a KL divergence term, and a mutual information loss.
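One plausible way to write the overall objective, assuming the three terms are combined as a weighted sum, is shown below. The weighting coefficients \lambda_1 and \lambda_2 and the subscript names are hypothetical notation introduced here; the summary does not give the exact form or weights.

```latex
\mathcal{L} = \mathcal{L}_{\mathrm{sup}}
            + \lambda_1 \, \mathcal{L}_{\mathrm{KL}}
            + \lambda_2 \, \mathcal{L}_{\mathrm{MI}}
```

Under this reading, \mathcal{L}_{\mathrm{sup}} drives prediction accuracy, while the other two terms act as regularizers on the confounding and disentangled features.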

Experiments and Analysis

Experimental Settings

The model is evaluated on six datasets: ICEWS14, ICEWS18, ICEWS05-15, YAGO, WIKI, and GDELT. The mean reciprocal rank (MRR) and Hits@k are used as evaluation metrics.
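Both metrics are computed from the rank that the model assigns to the correct entity for each test query. A minimal sketch follows; the helper names are illustrative, and ties are resolved optimistically here (only strictly higher scores push the target down), which is one of several conventions in use.

```python
def rank_of_target(scores, target):
    """1-based rank of the target entity; higher scores rank first."""
    target_score = scores[target]
    return 1 + sum(1 for s in scores.values() if s > target_score)


def mean_reciprocal_rank(ranks):
    """Average of 1/rank over all test queries."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks, k):
    """Fraction of test queries whose correct entity is ranked in the top k."""
    return sum(1 for r in ranks if r <= k) / len(ranks)


# Toy candidate scores for one query, invented for illustration.
example_rank = rank_of_target({"B": 0.7, "C": 0.2, "D": 0.1}, target="C")

# Toy ranks for three queries.
ranks = [1, 2, 4]
mrr = mean_reciprocal_rank(ranks)  # (1 + 1/2 + 1/4) / 3
hits1 = hits_at_k(ranks, 1)        # 1 of 3 queries
hits3 = hits_at_k(ranks, 3)        # 2 of 3 queries
```

MRR rewards placing the correct entity near the top even when it is not first, whereas Hits@k only counts whether it falls inside the cutoff.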

Experimental Results and Discussion

CEGRL-TKGR outperforms state-of-the-art baselines in link prediction tasks, demonstrating its ability to mitigate the impact of confounding features and uncover causal relationships.

Performance on Noisy Temporal Knowledge Graphs

CEGRL-TKGR shows stable performance on noisy datasets, effectively reducing the impact of confounding features compared to models without causal enhancement.

Ablation Study and Analysis on Parameter Sensitivity

Ablation studies confirm the effectiveness of the causal enhancement module and temporal gap-guided decoder. The model is relatively stable across different parameter settings, with optimal ranges identified for hyperparameters.

Case Study

A case study on the ICEWS14 dataset illustrates CEGRL-TKGR’s ability to learn causal relationships, leading to accurate predictions.

Conclusion

CEGRL-TKGR integrates causal structures with graph representation learning to address the limitations of existing TKGR models. Comprehensive experiments demonstrate its effectiveness in improving prediction accuracy and robustness. Future work will explore further applications of causal learning in TKGR tasks.