Authors:

Chun Jie Chong, Chenxi Hou, Zhihao Yao, Seyed Mohammadjavad Seyed Talebi

Paper:

https://arxiv.org/abs/2408.07004

Introduction

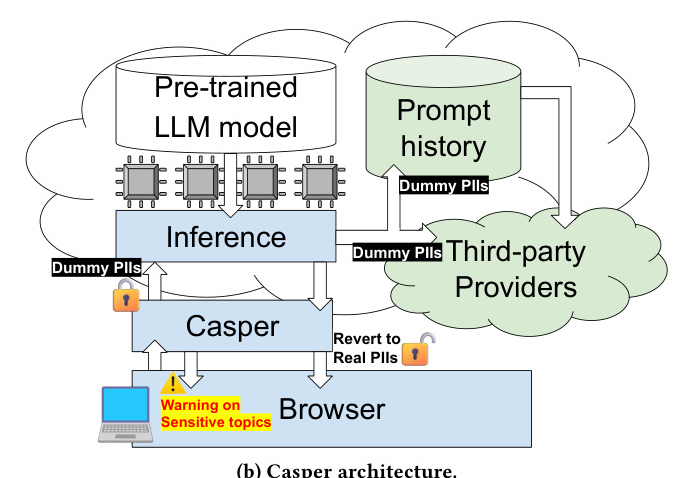

Large Language Models (LLMs) have become integral to various online applications, including chatbots, search engines, and translation tools. These models, trained on vast datasets, offer powerful capabilities but also raise significant privacy concerns. Users’ prompts, which may contain sensitive information, are processed and stored by cloud-based LLM providers and shared with third-party plugins. This paper introduces Casper, a browser extension designed to sanitize prompts by detecting and removing sensitive information before they are sent to LLM services.

Background

Online LLM Services

Cloud-based LLM services like OpenAI’s ChatGPT and Microsoft’s Copilot have made powerful language models accessible to regular users. These services collect user prompts for improving model responses and may share data with third-party providers. Privacy policies of these services indicate that user data is collected and used for various purposes, including service improvement and research.

Benefits and Risks of Prompt Collection

While collecting prompts helps improve LLM responses, it also poses privacy risks. Sensitive information in prompts can be leaked through data breaches or adversarial machine learning attacks. Users are increasingly concerned about their privacy, and there is a need for solutions that protect sensitive information without compromising the functionality of LLM services.

Third-Party Extensions to AI Services

Third-party plugins enhance the capabilities of LLM services but also introduce additional privacy risks. These plugins may require users to share their inputs with third-party providers, which can lead to the exposure of sensitive information. Privacy policies of these plugins often mention data sharing with business affiliates and partners, further complicating the privacy landscape.

Threat Model

Casper assumes that users are honest and use uncompromised systems and browsers. LLM service providers are trusted to fulfill user requests correctly but are not trusted to withstand malicious attacks. Third-party providers are considered to be at a higher risk due to varying privacy and security policies.

Motivation and Design Goals

Straw-Man Solutions

- Local LLM Inference: Performing all LLM inference locally on the user’s device is not feasible due to the high computational resources required.

- Cloud-based PII Redaction: Performing PII redaction on the LLM service providers’ side creates trust issues, as users may not trust providers to protect their privacy adequately.

Design Goals

- Comprehensive Privacy Protection: Filter out sensitive personal information with or without fixed patterns.

- Client-Side Filtering: Run entirely on the user’s device without using cloud-based services.

- Lightweight and Efficient: Minimal performance overhead and easy deployment as a browser extension.

- Compatibility: Compatible with existing online LLM services without requiring modifications.

- User Awareness and Control: Inform users of potential privacy risks and allow customization of filtering rules.

Design

System Overview

Casper examines user prompts and identifies two types of private information: Personally Identifiable Information (PII) and sensitive topics. It redacts PIIs by replacing them with unique placeholders and alerts users about sensitive topics, allowing them to review and confirm the prompt before it is sent to the LLM service.

Three-Layered Prompt Sanitization

- Rule-Based Filtering: Identifies and redacts sensitive information based on predefined keywords and patterns.

- Named-Entity Detection: Uses a machine learning model to identify named entities such as names, locations, and organizations.

- Sensitive Topic Identification: Uses a local LLM model to identify privacy-sensitive topics in the prompts.
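The three layers can be chained as in the following minimal TypeScript sketch. It assumes each layer is a plain function. A single e-mail regex stands in for layer 1, a fixed name list stands in for the BERT-based NER model of layer 2, and a keyword lookup stands in for the local LLM of layer 3. All names here (`Span`, `sanitize`, the stub layers) are hypothetical and are not Casper's actual code.

```typescript
// Hypothetical shapes: a PII span found by layers 1 and 2, and the final report.
interface Span {
  start: number;
  end: number;
  label: string;
}
interface Report {
  piiSpans: Span[];
  topics: string[];
}
type SpanDetector = (prompt: string) => Span[];
type TopicDetector = (prompt: string) => string[];

// Layer 1 stand-in: one e-mail regex (Casper's real rule set is larger and user-editable).
const ruleLayer: SpanDetector = (prompt) => {
  const spans: Span[] = [];
  const re = /[\w.+-]+@[\w-]+\.[\w.]+/g;
  let m: RegExpExecArray | null;
  while ((m = re.exec(prompt)) !== null) {
    spans.push({ start: m.index, end: m.index + m[0].length, label: "EMAIL" });
  }
  return spans;
};

// Layer 2 stand-in: Casper uses a BERT NER model; here, exact matches of known names.
function makeNameLayer(names: string[]): SpanDetector {
  return (prompt) => {
    const spans: Span[] = [];
    for (const name of names) {
      if (name.length === 0) continue; // an empty name would match everywhere
      let i = prompt.indexOf(name);
      while (i !== -1) {
        spans.push({ start: i, end: i + name.length, label: "PERSON" });
        i = prompt.indexOf(name, i + name.length);
      }
    }
    return spans;
  };
}

// Layer 3 stand-in: Casper asks a local LLM; here, a keyword-to-topic lookup.
const topicLayer: TopicDetector = (prompt) => {
  const cues: [string, string][] = [["diagnosis", "medical"], ["lawsuit", "legal"]];
  const lower = prompt.toLowerCase();
  return cues.filter((c) => lower.indexOf(c[0]) !== -1).map((c) => c[1]);
};

// Run the span layers, keep one span per overlapping region, then detect topics.
function sanitize(prompt: string, spanLayers: SpanDetector[], topics: TopicDetector): Report {
  let all: Span[] = [];
  for (const layer of spanLayers) all = all.concat(layer(prompt));
  // Earliest start first; for equal starts, the longer span first.
  all.sort((a, b) => a.start - b.start || b.end - a.end);
  const kept: Span[] = [];
  for (const s of all) {
    const last = kept.length > 0 ? kept[kept.length - 1] : undefined;
    if (last !== undefined && s.start < last.end) continue; // overlaps a kept span
    kept.push(s);
  }
  return { piiSpans: kept, topics: topics(prompt) };
}
```

The layers run in the order listed above, cheapest first. The overlap rule (keep the earliest, then longest, span) is an assumption made for this sketch.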

Data Preparation for Local LLM Topic Identification

Casper extracts nouns from user prompts to reduce the token count and shorten processing time. Prompts that exceed the maximum token limit of the local LLM are split into chunks that each fit within that limit, and the chunks are processed in turn.
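Casper's noun extractor and tokenizer are not described here, so this sketch takes a caller-supplied `isNoun` predicate and a per-word token estimate (one token per word by default); both are hypothetical stand-ins. It shows the data-preparation step: filter to nouns, then pack them greedily into chunks that stay within `maxTokens`.

```typescript
// Keep only words the (hypothetical) isNoun predicate accepts, then pack them
// into chunks whose estimated token count stays within maxTokens.
function prepareForTopicLLM(
  prompt: string,
  isNoun: (word: string) => boolean,
  maxTokens: number,
  estimateTokens: (word: string) => number = () => 1, // crude proxy for a real tokenizer
): string[] {
  if (maxTokens < 1) throw new Error("maxTokens must be positive");
  const nouns = prompt
    .split(/\s+/)
    .map((w) => w.replace(/[^\w'-]/g, "")) // strip punctuation such as "." or ","
    .filter((w) => w.length > 0 && isNoun(w));
  const chunks: string[] = [];
  let current: string[] = [];
  let used = 0;
  for (const noun of nouns) {
    const cost = estimateTokens(noun);
    // Start a new chunk when this noun would overflow the current one.
    // A single noun costing more than maxTokens still becomes its own chunk.
    if (used + cost > maxTokens && current.length > 0) {
      chunks.push(current.join(" "));
      current = [];
      used = 0;
    }
    current.push(noun);
    used += cost;
  }
  if (current.length > 0) chunks.push(current.join(" "));
  return chunks;
}
```

Each returned chunk would then be sent to the local LLM separately, and the topics found in any chunk would be reported for the whole prompt.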

Awareness-Raising User Interface

Casper warns users about privacy risks when their prompts contain PIIs or sensitive topics. The warning message is clear and informative, helping users make informed decisions about sharing their prompts with LLM services.

Feature-Preserving Redaction

Casper replaces detected PIIs with unique placeholders and reverts them to their original forms when responses are received from LLM services. This ensures that LLM services can generate relevant responses without knowing the actual PIIs.
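A minimal sketch of reversible placeholder redaction follows. The placeholder format `[LABEL_n]` is an assumption, since the exact format Casper uses is not given here; the function names `redact` and `restore` are hypothetical.

```typescript
interface PiiItem {
  text: string;
  label: string;
}
interface Redaction {
  redacted: string;
  mapping: Record<string, string>; // placeholder -> original text
}

// Replace each distinct PII string with a numbered placeholder such as [PERSON_1].
function redact(prompt: string, piis: PiiItem[]): Redaction {
  const mapping: Record<string, string> = {};
  const byText: Record<string, string> = {}; // original text -> placeholder
  const counters: Record<string, number> = {}; // per-label numbering
  // Longest first, so "Alice Smith" is replaced before a bare "Alice" could split it.
  const sorted = piis.slice().sort((a, b) => b.text.length - a.text.length);
  let redacted = prompt;
  for (const p of sorted) {
    if (p.text.length === 0) continue;
    if (!Object.prototype.hasOwnProperty.call(byText, p.text)) {
      counters[p.label] = (counters[p.label] || 0) + 1;
      const placeholder = `[${p.label}_${counters[p.label]}]`;
      byText[p.text] = placeholder;
      mapping[placeholder] = p.text;
    }
    // split/join replaces every occurrence without needing regex escaping.
    redacted = redacted.split(p.text).join(byText[p.text]);
  }
  return { redacted, mapping };
}

// Swap placeholders in the LLM's response back to the original values, locally.
function restore(response: string, mapping: Record<string, string>): string {
  let out = response;
  for (const placeholder of Object.keys(mapping)) {
    out = out.split(placeholder).join(mapping[placeholder]);
  }
  return out;
}
```

The same PII always maps to the same placeholder, so the LLM can still reason about "the same person" across a prompt. The closing bracket keeps `[PERSON_1]` from matching inside `[PERSON_10]` during restoration.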

Implementation and Prototype

Casper Browser Extension

Casper is implemented as a browser extension for Google Chrome. It features a configuration panel for setting up personal information, customizing regular expressions, configuring sensitive topics, and selecting models for local LLM and named entity detection.
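The panel's four areas could be captured in a settings object like the one below. This is a hypothetical shape, not Casper's actual storage format, and the model ID fields are free-form strings rather than specific models. The sketch also shows one check such a panel needs: compiling user-supplied regular expressions before saving them.

```typescript
// Hypothetical configuration shape mirroring the panel's four areas.
interface CasperConfig {
  personalInfo: string[]; // e.g. the user's name, address, employer
  customPatterns: { label: string; source: string; flags?: string }[];
  sensitiveTopics: string[];
  llmModelId: string; // a model offered by the local LLM runtime
  nerModelId: string; // a token-classification model
  nerThreshold: number; // confidence cut-off in [0, 1]
}

// Return human-readable problems; an empty array means the config can be saved.
function validateConfig(cfg: CasperConfig): string[] {
  const errors: string[] = [];
  for (const p of cfg.customPatterns) {
    try {
      new RegExp(p.source, p.flags); // throws SyntaxError on an invalid pattern
    } catch {
      errors.push(`pattern "${p.label}" is not a valid regular expression`);
    }
  }
  if (cfg.personalInfo.some((s) => s.trim() === "")) errors.push("personalInfo contains an empty entry");
  // Written this way so NaN also fails the range check.
  if (!(cfg.nerThreshold >= 0 && cfg.nerThreshold <= 1)) errors.push("nerThreshold must be between 0 and 1");
  if (!cfg.llmModelId) errors.push("llmModelId is empty");
  if (!cfg.nerModelId) errors.push("nerModelId is empty");
  return errors;
}
```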

Rule-Based Filtering

Implemented in TypeScript as keyword search and regular-expression matching, the rule-based filter identifies common PII patterns and lets users customize its rules.
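A minimal sketch of keyword plus regex filtering in TypeScript follows. The default patterns (e-mail, a North American phone format, a US SSN format) are illustrative assumptions, not Casper's shipped rule set, and `ruleBasedFilter` is a hypothetical name.

```typescript
interface Match {
  start: number;
  end: number;
  label: string;
  text: string;
}
interface Rule {
  label: string;
  re: RegExp;
}

// Illustrative defaults only; Casper's actual patterns are not reproduced here.
const DEFAULT_RULES: Rule[] = [
  { label: "EMAIL", re: /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/ },
  { label: "PHONE", re: /\b\d{3}[-. ]\d{3}[-. ]\d{4}\b/ }, // North American format only
  { label: "SSN", re: /\b\d{3}-\d{2}-\d{4}\b/ },
];

// Escape a user keyword so characters like "." or "+" are matched literally.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

function ruleBasedFilter(prompt: string, keywords: string[], rules: Rule[] = DEFAULT_RULES): Match[] {
  // User keywords become case-insensitive literal rules next to the regex rules.
  const keywordRules: Rule[] = keywords
    .filter((k) => k.length > 0)
    .map((k) => ({ label: "KEYWORD", re: new RegExp(escapeRegExp(k), "i") }));
  const matches: Match[] = [];
  for (const rule of rules.concat(keywordRules)) {
    // Fresh global copy per call (only the i flag is carried over),
    // so no lastIndex state leaks between prompts.
    const re = new RegExp(rule.re.source, rule.re.ignoreCase ? "gi" : "g");
    let m: RegExpExecArray | null;
    while ((m = re.exec(prompt)) !== null) {
      if (m[0].length === 0) {
        re.lastIndex++; // skip empty matches from user patterns to avoid looping forever
        continue;
      }
      matches.push({ start: m.index, end: m.index + m[0].length, label: rule.label, text: m[0] });
    }
  }
  return matches.sort((a, b) => a.start - b.start);
}
```

Keywords are escaped rather than treated as regexes so that a user entering a literal string such as a project name cannot accidentally write a broken or overly broad pattern.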

Named-Entity Detection

Casper uses a fine-tuned BERT model for named-entity detection, loaded through the Transformers.js library. Users can adjust the confidence threshold and replace the model with other compatible models.
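In Transformers.js, a model like this is typically loaded with `pipeline("token-classification", modelId)`, which yields per-token labels. The sketch below shows one way to turn such output into whole entities and apply the user's confidence threshold. The `TokenTag` shape (BIO labels such as `B-PER`/`I-PER`, a score, a token index, WordPiece text) is an assumption modeled on typical BERT NER output and should be checked against the library documentation; averaging token scores is likewise a design choice made for this sketch.

```typescript
// Assumed per-token output shape; verify against the real pipeline's fields.
interface TokenTag {
  entity: string; // e.g. "B-PER", "I-LOC", or "O"
  score: number;
  index: number; // token position in the input
  word: string; // WordPiece text, continuations start with "##"
}
interface EntitySpan {
  type: string;
  text: string;
  score: number;
}

function groupEntities(tokens: TokenTag[], threshold: number): EntitySpan[] {
  const groups: { type: string; text: string; scores: number[]; lastIndex: number }[] = [];
  for (const t of tokens) {
    if (t.entity === "O") continue; // "outside" tokens never start or extend an entity
    const dash = t.entity.indexOf("-");
    const prefix = dash === -1 ? "B" : t.entity.slice(0, dash); // "B" (begin) or "I" (inside)
    const type = dash === -1 ? t.entity : t.entity.slice(dash + 1); // "PER", "LOC", "ORG", ...
    const isPiece = t.word.indexOf("##") === 0; // continuation of the previous word
    const piece = isPiece ? t.word.slice(2) : t.word;
    const last = groups.length > 0 ? groups[groups.length - 1] : undefined;
    // Extend only a same-type entity that ends on the immediately preceding token.
    if (last !== undefined && last.type === type && last.lastIndex + 1 === t.index && (prefix === "I" || isPiece)) {
      last.text += isPiece ? piece : " " + piece;
      last.scores.push(t.score);
      last.lastIndex = t.index;
    } else {
      groups.push({ type, text: piece, scores: [t.score], lastIndex: t.index });
    }
  }
  // Keep entities whose mean token score reaches the user-set threshold.
  const out: EntitySpan[] = [];
  for (const g of groups) {
    const mean = g.scores.reduce((a, b) => a + b, 0) / g.scores.length;
    if (mean >= threshold) out.push({ type: g.type, text: g.text, score: mean });
  }
  return out;
}
```

Raising the threshold trades recall for fewer false alarms, which is the knob the configuration panel exposes to users.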

Local LLM-based Topic Identification

Casper uses the WebLLM framework to perform local LLM inference for topic identification. Users can select from various LLM models based on their needs and hardware capabilities.
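WebLLM exposes an OpenAI-style chat-completions interface (`engine.chat.completions.create`), so the model call itself is omitted here. This sketch covers only the two pure steps around it: building the classification request and mapping the free-text reply back onto the user's configured topics. The prompt wording, the `NONE` convention, and both function names are assumptions, not Casper's actual prompt.

```typescript
// Escape a topic name for use inside a regular expression.
function escapeRegExp(s: string): string {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical instruction asking the local LLM which configured topics the nouns touch.
function buildTopicPrompt(nouns: string, topics: string[]): string {
  return [
    "You are a privacy classifier.",
    `Topics: ${topics.join(", ")}.`,
    "List, comma-separated, every topic above that the following words relate to. Reply NONE if none apply.",
    `Words: ${nouns}`,
  ].join("\n");
}

// Map the model's free-text reply onto the configured topics; anything else is ignored.
function parseTopicReply(reply: string, topics: string[]): string[] {
  const lower = reply.toLowerCase();
  if (/^\s*none\b/.test(lower)) return [];
  // Word boundaries stop "legal" from matching inside "illegal".
  return topics.filter((t) => new RegExp("\\b" + escapeRegExp(t.toLowerCase()) + "\\b").test(lower));
}
```

Restricting the parsed result to the configured list guards against a small local model inventing topic names the warning UI does not know how to present.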

User Interface Integration

Casper integrates with the ChatGPT web interface, analyzing user prompts before submission. It uses a keystroke combination (Control-Enter) to trigger prompt analysis and displays a warning window if privacy risks are detected.
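The Control-Enter flow can be sketched as a keydown listener. To stay independent of DOM typings, the sketch declares minimal event and target interfaces (`KeyEventLike`, `KeyTarget`); in a real content script the target would presumably be the page's document or prompt box, registered in the capture phase so the extension sees the key before the page does. `readPrompt`, `analyze`, `showWarning`, and `submit` are hypothetical callbacks.

```typescript
// Minimal event shapes so the sketch does not depend on DOM typings.
interface KeyEventLike {
  key: string;
  ctrlKey: boolean;
  preventDefault(): void;
  stopPropagation(): void;
}
interface KeyTarget {
  addEventListener(type: "keydown", listener: (e: KeyEventLike) => void, capture: boolean): void;
}
interface Analysis {
  risky: boolean;
}

function installCasperShortcut(
  target: KeyTarget,
  readPrompt: () => string,
  analyze: (prompt: string) => Analysis,
  showWarning: (prompt: string, a: Analysis) => void,
  submit: (prompt: string) => void,
): void {
  target.addEventListener(
    "keydown",
    (e) => {
      if (e.key !== "Enter" || !e.ctrlKey) return; // plain Enter keeps the site's default behavior
      e.preventDefault(); // stop the page from sending the raw prompt
      e.stopPropagation();
      const prompt = readPrompt();
      const result = analyze(prompt);
      if (result.risky) showWarning(prompt, result); // user reviews before anything is sent
      else submit(prompt);
    },
    true, // capture phase: run before the page's own handlers
  );
}
```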

Evaluation

Evaluation Setup

Casper is evaluated on an Apple MacBook Air and an Apple MacBook Pro with different hardware configurations. The evaluation involves processing synthesized prompts and measuring the effectiveness and performance overhead of Casper’s three-layered filtering mechanism.

Named-Entity Detection

Casper is evaluated on 1000 synthesized prompts with named entities and 1000 prompts without named entities. The results show high true positive and true negative rates, demonstrating Casper’s effectiveness in detecting named entities.
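The true positive rate and true negative rate follow the standard definitions: TPR = TP / (TP + FN), measured over prompts that contain named entities, and TNR = TN / (TN + FP), measured over prompts that do not. The sketch below computes both from per-prompt decisions. It assumes a single yes/no decision per prompt, is not Casper's evaluation harness, and does not reproduce any reported results.

```typescript
// Confusion-matrix counts for a binary detector (entity present vs. absent).
interface Counts {
  tp: number;
  fn: number;
  tn: number;
  fp: number;
}

// Tally outcomes from per-prompt ground truth and detector decisions.
function tally(truth: boolean[], predicted: boolean[]): Counts {
  if (truth.length !== predicted.length) throw new Error("length mismatch");
  const c: Counts = { tp: 0, fn: 0, tn: 0, fp: 0 };
  for (let i = 0; i < truth.length; i++) {
    if (truth[i]) {
      if (predicted[i]) c.tp++;
      else c.fn++;
    } else {
      if (predicted[i]) c.fp++;
      else c.tn++;
    }
  }
  return c;
}

// TPR over positive prompts, TNR over negative prompts; NaN when a class is empty.
function rates(c: Counts): { tpr: number; tnr: number } {
  const pos = c.tp + c.fn;
  const neg = c.tn + c.fp;
  return { tpr: pos > 0 ? c.tp / pos : NaN, tnr: neg > 0 ? c.tn / neg : NaN };
}
```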

Sensitive Topic Identification

Casper’s LLM-based sensitive topic identification is evaluated using synthesized prompts with medical and legal topics. The results show high detection rates for both medical and legal topics, with Llama 3 achieving higher accuracy than Llama 2.

Performance Overhead

Casper introduces minimal performance overhead, although processing time grows with prompt length. CPU and memory usage remain relatively low, while the GPU is fully utilized during local LLM inference.

Related Work

Trusted and Confidential GPU Computing

Trusted computing technologies protect security-sensitive applications from privileged adversaries. Casper can benefit from these technologies to further protect user privacy and security.

Browser GPU Stack Security

WebGPU and WebGL introduce security risks by allowing web apps to access privileged GPU code paths. Casper relies on browser security features to protect the confidentiality and integrity of local LLM inference.

Client-Side Filtering

Client-side filtering is a common practice in client-server applications to remove sensitive information before sending it to a server. Casper addresses the unique challenges of filtering PIIs and privacy-sensitive topics in LLM prompts.

Privacy-Preserving Machine Learning

Privacy-preserving machine learning techniques like federated learning and homomorphic encryption aim to protect data privacy. Casper focuses on providing a lightweight solution in a client-server LLM inference setting.

Discussion

Users of Other Languages

Casper’s design can be extended to support global users by incorporating keywords, patterns, and named-entity recognition models for other languages. Pre-trained local LLM models for various languages are also available.

Performance Optimization

Several opportunities exist to further optimize Casper’s performance, including more efficient pattern matching algorithms and named-entity recognition models. As WebGPU matures and more efficient local LLM models are developed, Casper’s performance is expected to improve.

Conclusions

Casper addresses the privacy implications of using online LLM services by detecting and removing private and sensitive information from user prompts. It is a lightweight and efficient browser extension that integrates seamlessly with existing LLM services. Casper demonstrates high accuracy in detecting private and sensitive information with minimal performance overhead, making it suitable for real-world applications.