Authors:

Jin Wang, Arturo Laurenzi, Nikos Tsagarakis

Paper:

https://arxiv.org/abs/2408.08282

Introduction

Autonomous behavior planning for humanoid robots in unstructured environments is a significant challenge in robotics. This involves enabling robots to plan and execute long-horizon tasks while perceiving and correcting deviations between task execution and high-level planning. Recent advancements in large language models (LLMs) have shown promising capabilities in planning and reasoning for robot control tasks. This paper proposes a novel framework leveraging LLMs to enable humanoid robots to autonomously plan behaviors and execute tasks based on textual instructions, while also observing and correcting failures during task execution.

Related Works

Achieving autonomy in locomotion and manipulation tasks remains a major challenge in robotics. Previous research has focused on motion planning, trajectory optimization, and data-driven approaches to generate appropriate multimodal reference trajectories for dexterous manipulation. Model-free reinforcement learning has also demonstrated impressive performance in specific tasks and unstructured environments. However, these methods generally lack the ability to understand instructions and reason about tasks, which makes autonomy and adaptability in task-driven mobile manipulation difficult to achieve.

With the emergence of LLMs, transformer-based planners have played a pivotal role in predicting and generating robot actions guided by natural language instructions. However, these end-to-end strategies often require substantial training data and expert demonstrations. In contrast, some approaches use the semantic comprehension capabilities of LLMs for higher-level task planning, transforming instructions into executable lower-level actions in a zero- or few-shot manner. Nonetheless, these methods tend to assume that execution succeeds and overlook potential discrepancies between planned expectations and real-world execution.

Autonomous Robot Behavior Planning

Problem Statement

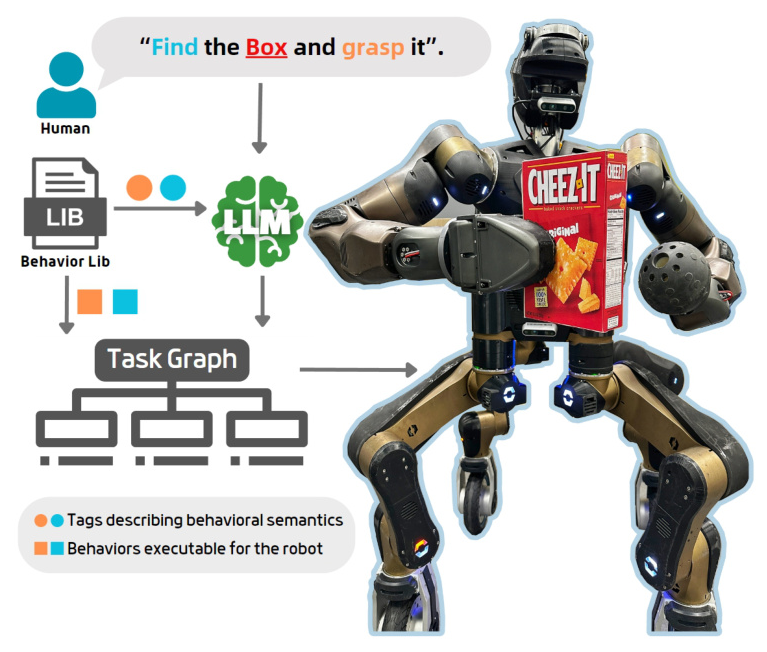

The problem of performing autonomous loco-manipulation tasks from high-level instructions amounts to encoding human instructions into a hierarchical sequence of robot behaviors. The proposed framework assumes that the robot is provided with a behavior library (lib), consisting of a set of action and perception behaviors that the robot can execute directly. From the instruction, the LLM generates a task graph: a sequential arrangement of behaviors that specifies what the robot should execute in each state in order to accomplish the designated task.
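The problem statement can be written compactly as follows. The notation is introduced here for illustration only and is not the paper's own: L is the behavior library, A and P are its action and perception behaviors, I is the human instruction, Π is the predefined prompt, and G is the generated task graph.

```latex
\mathcal{L} = \mathcal{A} \cup \mathcal{P}, \qquad
\mathcal{G} = \mathrm{LLM}\bigl(I,\; \Pi,\; \operatorname{tags}(\mathcal{L})\bigr), \qquad
\operatorname{leaves}(\mathcal{G}) \subseteq \mathcal{L}
```

The last condition expresses that the LLM only sees the behavior tags, yet every behavior the resulting graph invokes must exist in the library so that the robot can execute it directly.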

Overview of the Framework

The proposed robot behavior planning system leverages language models to perform autonomous loco-manipulation tasks. The system first creates a library of behaviors for the robot, divided into action behaviors and perceptual behaviors, each containing a corresponding behavior tag and behavior code. Prompts are defined in advance, including conditional information for input to the LLM, a description of the robot’s features, the skills the robot possesses, and a description of the expected outputs.

When a robot is called upon to perform a task, the human provides task instructions as input, which are fed to the LLM along with the prompts and behavior tags from the behavior library. The LLM generates a sequence of the robot’s behaviors based on the given task and stores it in an XML-formatted file, which is used to generate a Behavior Tree (BT) that controls the robot’s task execution. The behavior code in the behavior library and the BT generated by the LLM together form a task graph, which instructs the robot to perform different behaviors according to the current robot state and conditions, ultimately completing the task.
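The data flow described above (prompt, behavior tags, and instruction in; an XML Behavior Tree file out) can be sketched as follows. This is a minimal sketch under stated assumptions: `plan_task` and `stub_llm` are hypothetical names, the LLM is modeled as an arbitrary text-to-text function rather than any specific model or API, and the XML layout only loosely follows the BehaviorTree.CPP convention.

```python
import tempfile
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Callable, List


def plan_task(instruction: str,
              prompt: str,
              behavior_tags: List[str],
              llm: Callable[[str], str],
              out_path: Path) -> ET.Element:
    """Query an LLM with prompt, behavior tags and instruction, save the returned
    Behavior Tree XML to `out_path`, and return the parsed root element."""
    query = "\n\n".join([
        prompt,
        "Available behaviors:\n" + "\n".join(behavior_tags),
        "Task: " + instruction,
    ])
    xml_text = llm(query)                             # any text-to-text model (assumption)
    out_path.write_text(xml_text, encoding="utf-8")   # the XML-formatted plan file
    return ET.parse(out_path).getroot()               # raises ET.ParseError on malformed XML


def stub_llm(query: str) -> str:
    """Hypothetical stand-in for a real model call: always returns the same tree."""
    return ('<root><BehaviorTree ID="MainTree"><Sequence>'
            '<MoveTo target="desk"/>'
            '</Sequence></BehaviorTree></root>')


with tempfile.TemporaryDirectory() as tmp:
    tree = plan_task("Go to the desk.",
                     "You control a mobile manipulator. Answer with XML only.",
                     ["- MoveTo: drive the base to a named location"],
                     stub_llm,
                     Path(tmp) / "plan.xml")
```

Keeping the LLM behind a plain callable makes the planner easy to test offline and to switch between models without touching the rest of the pipeline.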

Language Model for Behavior Planning and Modification

Behavior Library

Controlling a robot to accomplish complex actions in a long-horizon task is challenging. To link semantic behaviors with the actual execution of actions by the robot, a behavior library is designed for the robot, such that each skill can directly control the robot to complete basic actions. This approach improves the interpretability of each step of the task process and reduces the deviation between high-level tasks and low-level execution by partitioning the complex task into a sequence of actions consisting of several behavior skills.

The behavior library is divided into action behaviors and perceptual behaviors. Action behaviors control the robot to perform whole-body motions such as moving and manipulating. Perceptual behaviors rely on the robot’s onboard sensors to detect the position of objects, evaluate the robot’s state, and reason about whether the current task is complete or has failed.
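A behavior library of this kind can be represented as a registry in which each entry pairs a tag (what the LLM sees) with executable code (what the robot runs), split by kind. The sketch below assumes that structure; the class names, the `register` decorator, and the example behaviors `MoveTo` and `ObjectVisible` are hypothetical, and their bodies are placeholders rather than real robot code.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class Kind(Enum):
    ACTION = "action"          # whole-body motions: moving, manipulating
    PERCEPTION = "perception"  # sensing, state evaluation, success/failure checks


@dataclass
class Behavior:
    tag: str                   # short description exposed to the LLM
    kind: Kind
    code: Callable[..., bool]  # executable body; returns True on success


@dataclass
class BehaviorLibrary:
    behaviors: Dict[str, Behavior] = field(default_factory=dict)

    def register(self, name: str, kind: Kind, tag: str):
        """Decorator that adds a function to the library under `name`."""
        def wrap(fn: Callable[..., bool]) -> Callable[..., bool]:
            self.behaviors[name] = Behavior(tag=tag, kind=kind, code=fn)
            return fn
        return wrap

    def names(self, kind: Kind) -> List[str]:
        return [n for n, b in self.behaviors.items() if b.kind is kind]

    def tags_text(self) -> str:
        """Only names and tags go into the prompt; the code stays on the robot side."""
        return "\n".join(f"- {n} ({b.kind.value}): {b.tag}" for n, b in self.behaviors.items())


lib = BehaviorLibrary()


@lib.register("MoveTo", Kind.ACTION, "drive the base to a named location")
def move_to(target: str = "") -> bool:
    return True  # placeholder for a real whole-body motion


@lib.register("ObjectVisible", Kind.PERCEPTION, "check whether an object is detected")
def object_visible(obj: str = "") -> bool:
    return True  # placeholder for a real detector
```

Separating tags from code is what lets the LLM reason over short textual descriptions while the robot executes well-tested implementations.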

LLM Generated Task Planner

LLMs draw on their broad semantic knowledge and their text comprehension and reasoning capabilities to respond to human instructions. To obtain output in the desired form, the input is constrained through prompts. The prompts are fed to the LLM together with the human instruction and the behavior tags; they contain information about the current state of the robot, its hardware configuration, the concept of a behavior library and its components, example applications, and the expected output format.
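The prompt components listed above can be assembled into a single LLM input along these lines. `build_prompt`, the section titles, their order, and all example values are assumptions made for illustration; the paper's actual prompt wording is not reproduced here.

```python
from typing import Dict, List


def build_prompt(robot_state: str,
                 hardware: str,
                 behavior_tags: List[str],
                 examples: List[str],
                 output_format: str,
                 instruction: str) -> str:
    """Assemble the fixed prompt sections and the human instruction into one LLM input.

    Section names and ordering are illustrative assumptions.
    """
    sections: Dict[str, str] = {
        "Robot state": robot_state,
        "Hardware": hardware,
        "Behavior library": (
            "Use only the behaviors listed below. Action behaviors move the robot; "
            "perception behaviors report success or failure.\n" + "\n".join(behavior_tags)
        ),
        "Examples": "\n\n".join(examples),
        "Output format": output_format,
        "Instruction": instruction,   # placed last so the task is the final thing the model reads
    }
    return "\n\n".join(f"### {title}\n{body}" for title, body in sections.items())


# Hypothetical placeholder values, not taken from the paper.
prompt = build_prompt(
    robot_state="idle in front of a desk",
    hardware="mobile base, two arms, grippers, camera",
    behavior_tags=["- MoveTo: drive the base to a location",
                   "- Grasp: close the gripper on an object"],
    examples=['<Sequence><MoveTo target="desk"/></Sequence>'],
    output_format="Return only a Behavior Tree in XML.",
    instruction="Bring me the mug on the desk.",
)
```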

Behavior Trees serve both as the output format of the LLM and as an intermediate bridge between planning and execution. They provide a hierarchical, tree-structured framework for controlling the robot’s actions and decision-making. The LLM generates the behavior tree structure from the available behavior skills and the task instruction and stores it in an XML file. The task graph then loads this behavior tree and invokes behaviors from the behavior library as directed by its nodes.
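How a task graph can walk such a tree is illustrated by the small synchronous interpreter below. The sketch assumes only two control nodes (`Sequence`, `Fallback`), treats every other XML tag as a behavior name looked up in the library, and ignores the RUNNING state and asynchronous ticking that production Behavior Tree engines such as BehaviorTree.CPP support; the behaviors `MoveTo`, `Grasp`, and `PlaceOn` are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List, Tuple

Leaf = Callable[..., bool]


def tick(node: ET.Element, behaviors: Dict[str, Leaf]) -> bool:
    """Execute a Behavior Tree node synchronously; True means SUCCESS, False FAILURE."""
    if node.tag in ("root", "BehaviorTree"):
        return tick(node[0], behaviors)                        # descend to the first child
    if node.tag == "Sequence":
        return all(tick(child, behaviors) for child in node)   # stop at first failure
    if node.tag == "Fallback":
        return any(tick(child, behaviors) for child in node)   # stop at first success
    # Any other tag is a leaf: look it up in the behavior library and pass the
    # XML attributes as keyword arguments.
    return behaviors[node.tag](**node.attrib)


log: List[Tuple[str, Dict[str, str]]] = []


def make_behavior(name: str, succeed: bool = True) -> Leaf:
    """Hypothetical placeholder behavior that records its call and returns a fixed result."""
    def run(**kwargs: str) -> bool:
        log.append((name, kwargs))
        return succeed
    return run


behaviors = {n: make_behavior(n) for n in ("MoveTo", "Grasp", "PlaceOn")}

plan_xml = """
<root>
  <BehaviorTree ID="MainTree">
    <Sequence>
      <MoveTo target="desk"/>
      <Grasp object="mug"/>
      <PlaceOn target="tray"/>
    </Sequence>
  </BehaviorTree>
</root>
"""
result = tick(ET.fromstring(plan_xml.strip()), behaviors)
```

Short-circuiting `all` and `any` gives the usual semantics: a `Sequence` stops at its first failing child, and a `Fallback` stops at its first succeeding child.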

Failure Detection and Recovery

To determine whether a task has been completed successfully or has deviated during execution, a failure detection and recovery mechanism is incorporated into the task graph. Visual question answering (VQA) is used as a perceptual behavior to determine the current state of the robot performing the task. Proprioceptive measurements such as torque and distance are also implemented as behaviors to detect potential failures in specific tasks. Together, the perceptual behaviors in the behavior library offer multiple alternatives and combinations for the failure detection nodes, allowing the LLM to select suitable checks when it designs the behavior tree for each task.
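A failure-detection check followed by a recovery attempt can be sketched as below. The torque-based grasp check, its 0.5 threshold, the retry limit, and the `reopen_and_realign` recovery step are hypothetical choices for illustration; in a Behavior Tree the same pattern corresponds to retrying a Sequence of the action and its check, with a recovery behavior between attempts. A VQA-based check could be plugged in as another `check` function.

```python
from typing import Callable, List


def grasp_succeeded(finger_torques: List[float], min_torque: float = 0.5) -> bool:
    """Hypothetical proprioceptive check: a gripper holding an object should feel
    resistance on every finger. The threshold is an illustrative value."""
    return all(t >= min_torque for t in finger_torques)


def run_with_recovery(action: Callable[[], None],
                      check: Callable[[], bool],
                      recover: Callable[[], None],
                      max_attempts: int = 3) -> bool:
    """Run an action, verify it with a perception behavior, and run a recovery
    behavior before retrying until the check passes or attempts run out."""
    for attempt in range(max_attempts):
        action()
        if check():
            return True
        if attempt < max_attempts - 1:   # no recovery after the final failed attempt
            recover()
    return False


# Simulated torque readings: the first grasp slips, the second one holds.
readings = [[0.1, 0.05], [0.9, 0.8]]
events: List[str] = []


def grasp() -> None:
    events.append("grasp")


def check() -> bool:
    # Use the reading that belongs to the most recent grasp attempt.
    return grasp_succeeded(readings[events.count("grasp") - 1])


def recover() -> None:
    events.append("reopen_and_realign")


ok = run_with_recovery(grasp, check, recover)
```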

Experiment and Evaluation

Experiment Setup

The experiment used objects from the YCB dataset that are commonly found in an office kitchen. The test environment was an open area inside the lab, with objects placed randomly on a desk. The CENTAURO robot, a hybrid wheeled-legged quadruped with a humanoid upper body, has 37 degrees of freedom and a two-fingered claw gripper, enabling it to perform a wide range of loco-manipulation tasks. The action behaviors in the behavior library were built on Cartesian I/O and require no extra training. Xbot serves as middleware, providing real-time communication between the robot’s underlying actuators and the task commands through an API. The Robot Operating System (ROS) was used for message transmission between the LLM, the behavior library, the Behavior Tree, and the robot, and simulation experiments were conducted in the Gazebo simulator.

Autonomous Humanoid Loco-manipulation Task

Behavior Planning with LLM

The LLM’s behavior planning capabilities were tested on robot tasks of varying complexity. The experiment covered eight different tasks, including tasks with failure detection and recovery (FR). Standard instructions were written for the given objects and for the behaviors available in the behavior library. The XML files generated by the LLM were loaded as Behavior Trees to verify their feasibility, and the appropriateness of each behavior plan and the successful completion of the task were verified manually. Each task was planned 50 times, always with the same behavior library and prompt. For each task, the task graph generation time was recorded, together with the executability rate and success rate of the behavior plans.
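The executability part of this evaluation lends itself to automation. The sketch below assumes a simple criterion, namely that a plan is executable if its XML parses and every non-control node names a behavior in the library; it cannot replace the manual check of whether a plan is appropriate and actually completes the task. The function names, node vocabulary, and toy plans are hypothetical and are not the paper's data.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Set

CONTROL_NODES = {"root", "BehaviorTree", "Sequence", "Fallback"}   # assumed node vocabulary


def is_executable(xml_text: str, library: Set[str]) -> bool:
    """Assumed criterion: the XML parses and every non-control node is a library behavior."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        return False
    return all(node.tag in CONTROL_NODES or node.tag in library for node in root.iter())


def executable_rate(plans: Iterable[str], library: Set[str]) -> float:
    """Fraction of generated plans that pass `is_executable` (0.0 for an empty input)."""
    plan_list = list(plans)
    if not plan_list:
        return 0.0
    return sum(is_executable(p, library) for p in plan_list) / len(plan_list)


library = {"MoveTo", "Grasp", "PlaceOn"}
toy_plans = [
    "<root><Sequence><MoveTo/><Grasp/></Sequence></root>",     # passes
    "<root><Sequence><MoveTo/><Fly/></Sequence></root>",       # unknown behavior "Fly"
    "<root><Sequence><MoveTo/>",                               # truncated, not valid XML
    "<root><Fallback><Grasp/><PlaceOn/></Fallback></root>",    # passes
]
rate = executable_rate(toy_plans, library)
```

The generation time per task graph could be recorded in the same loop by wrapping each LLM call with `time.perf_counter()`.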

Long-horizon Task Execution

After verifying that the behavior plans generated by the LLM can be converted into executable Behavior Trees, experiments were conducted with the CENTAURO robot in both simulation and real-world environments. The last six tasks from the behavior planning experiments were selected to test how the robot actually performs when executing LLM-planned behaviors. Instructions were phrased in different ways and referred to different target objects to verify the LLM’s ability to reason about the task and plan the robot’s behavior. For tasks that require in-process failure detection and recovery, the LLM incorporates perceptual behaviors during the planning phase, so that the robot attempts to recover if it detects that a planned action has failed to complete the task.

Results Analysis

The experiments evaluated the behavioral planning capabilities of the LLM for tasks with varying complexity levels, applying it to the CENTAURO robot. With a defined behavior library and appropriate prompts, the LLM can generate corresponding behavior plans based on different task instructions, achieving a high planning success rate and task execution rate. These rates vary with the task’s complexity and the number of behaviors needed to complete it. Incorporating failure detection and recovery into the task process increases the difficulty of behavior planning, affecting the success rate of the generated task graphs. However, it significantly boosts the robot’s execution success rate in both simulation and real-world settings.
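The dependence on the number of behaviors, and the benefit of recovery, can be illustrated with a deliberately simplified model that is not from the paper: assume each of the n behaviors in a plan succeeds independently with probability p, that every failure is detected, and that a recovery node allows up to k extra attempts per step.

```latex
P_{\text{plain}}(n) = p^{\,n}, \qquad
P_{\text{FR}}(n) = \bigl(1 - (1-p)^{k+1}\bigr)^{n}
```

For example, with p = 0.9 and n = 6, P_plain ≈ 0.53, whereas a single retry (k = 1) raises the per-step success to 0.99 and gives P_FR ≈ 0.94. These numbers are purely illustrative and are not results from the paper. The model also ignores the planning side of the trade-off noted above: plans with failure detection and recovery are harder for the LLM to generate correctly.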

Conclusion

This work introduces an autonomous online behavior planning framework that uses a large language model (LLM) to perform robot loco-manipulation tasks based on human language instructions. The framework proposes the concept of a behavior library and designs action and perception behaviors that are both interpretable and pragmatically efficient. The LLM organizes these behaviors into a task graph with a hierarchical structure, derived from its understanding of the given instructions. The robot follows the nodes in this task graph to complete the task step by step, detecting and attempting to correct possible failures throughout the task process by combining a vision-language model with proprioceptive perception. Experiments with the CENTAURO robot validate the performance and practicality of this framework in robotic task planning.

Future work will focus on enriching the robot’s behavior library and improving the prompt system, enabling the LLM to better plan and optimize behavior sequences automatically based on the robot’s intrinsic mobility, manipulation, and perceptual strengths. Additionally, the dynamic planning and multi-conditional reasoning capabilities of the framework will be improved, including behavior replanning in response to external perturbations or the introduction of artificial subtasks during a task.