Authors:

Weimin Yin, Bin Chen, Chunzhao Xie, and Zhenhao Tan

Paper:

https://arxiv.org/abs/2408.08084

Introduction

Catastrophic forgetting, where a model loses previously learned information when it learns new tasks, remains a major obstacle in continual learning. This paper introduces Weight Balancing Replay (WBR), a method that addresses the problem in class-incremental learning with pre-trained models. WBR aims to keep the weights associated with new and old tasks in balance, thereby mitigating catastrophic forgetting.

Related Work

The paper categorizes existing approaches to tackle catastrophic forgetting into four main types:

- Parameter Regularization-Based Methods: These methods protect acquired knowledge by preserving the invariance of critical parameters. However, they often fall short under challenging conditions.

- Sample Buffer-Based Methods: These methods store samples from old tasks to retrain the model alongside new tasks. While effective, they face limitations related to buffer size and privacy concerns.

- Architecture-Based Methods: These methods introduce additional components to store knowledge, often requiring significant additional parameters.

- Pretrained Models-Based Methods: These leverage the representation capabilities of pre-trained models for continual learning, often using techniques like prompt tuning and model mixing.

Prerequisites

Continual Learning Protocols

Continual learning involves training a model on non-stationary data from a sequence of tasks. The goal is to train a single model to predict labels for any sample from previously learned tasks, even when the task identity is unknown during testing. This paper focuses on the more challenging class-incremental learning setting.
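To make the class-incremental setting concrete, here is a minimal sketch of how a label space could be split into a sequence of tasks. The choice of 100 classes in 10 equal tasks is an assumption for illustration (the summary does not state the split), and `split_classes` and `seen_classes` are hypothetical helper names.

```python
import random

def split_classes(num_classes, num_tasks, seed=0):
    """Partition class ids into disjoint, equally sized tasks."""
    if num_classes % num_tasks != 0:
        raise ValueError("num_classes must be divisible by num_tasks")
    rng = random.Random(seed)
    class_ids = list(range(num_classes))
    rng.shuffle(class_ids)  # fixed seed keeps the class order reproducible
    per_task = num_classes // num_tasks
    return [class_ids[i * per_task:(i + 1) * per_task] for i in range(num_tasks)]

def seen_classes(tasks, b):
    """Classes the model must tell apart after training on tasks 0..b."""
    return sorted(c for task in tasks[: b + 1] for c in task)

# Assumed configuration: 100 classes split into 10 tasks of 10 classes.
tasks = split_classes(num_classes=100, num_tasks=10)
```

At test time the task identity is not given, so after task b the classifier has to choose among everything in `seen_classes(tasks, b)` rather than only the classes of one task.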

Benchmark for Continual Learning with Pretrained Models

Recent research has shown that pre-trained models (PTMs) can significantly enhance continual learning performance. The paper uses the SimpleCIL benchmark to evaluate the fundamental continual learning ability provided by a PTM within an algorithm.
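As a rough illustration of what such a baseline measures, the sketch below follows the commonly described SimpleCIL recipe: keep the pre-trained backbone frozen, use the mean embedding of each class as its classifier weight, and predict by cosine similarity. The 2-D vectors stand in for backbone features and are made up for the example; all function names are hypothetical.

```python
import math

def class_prototypes(features, labels):
    """Average the (frozen) backbone features of each class."""
    sums, counts = {}, {}
    for feat, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feat)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], feat)]
        counts[label] += 1
    return {c: [s / counts[c] for s in sums[c]] for c in sums}

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def predict(prototypes, feat):
    """Return the class whose prototype is most similar to the feature."""
    return max(prototypes, key=lambda c: cosine(prototypes[c], feat))

# Toy embeddings: each task only adds prototypes, nothing is retrained.
prototypes = {}
prototypes.update(class_prototypes([[1.0, 0.1], [0.9, 0.0]], [0, 0]))  # task 1
prototypes.update(class_prototypes([[0.0, 1.0], [0.1, 0.8]], [1, 1]))  # task 2
```

Because no weights are updated, whatever accuracy this yields comes from the pre-trained representation alone, which is why it is useful as a reference point.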

Weight Balancing Replay

From Classifier Bias to Network Bias

The paper proposes identifying and correcting the bias between the forgetting model and the normal model. The continual learning process is defined as \( f^{b-1}_{D_b}(x; \theta) \), and the goal is to align it with the normal training process on all data seen so far, \( f_{D_1 \cup \cdots \cup D_b}(x; \theta) \).

Approximate Bias and Balance

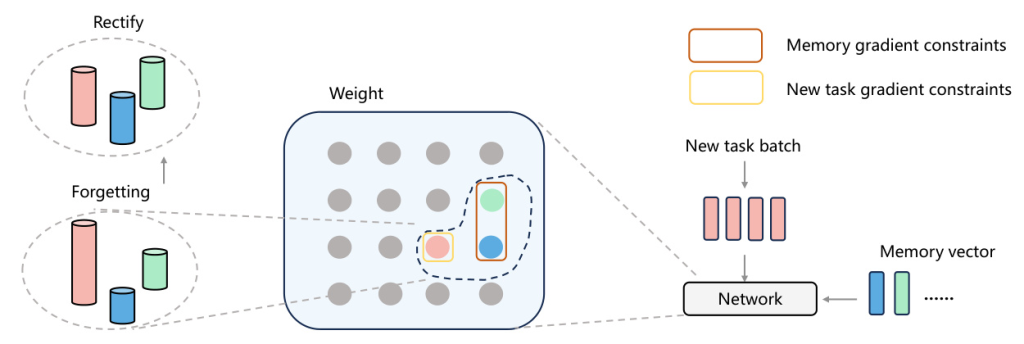

The WBR method uses a replay-based approximate correction strategy to dynamically adjust parameters during new task training. It introduces an importance function to evaluate the significance of each parameter and applies different gradient constraints to the training of the current task and the calculation of bias.
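The general shape of such an update can be sketched as follows. This is not the paper's algorithm: the element-wise clipping, the two bounds, and the `importance` function (replay-gradient magnitude scaled to [0, 1]) are all placeholder assumptions chosen only to show how a current-task gradient and a replay-derived correction might be constrained separately and then combined.

```python
def clip(values, bound):
    """Element-wise clipping of a gradient to [-bound, bound]."""
    return [max(-bound, min(bound, v)) for v in values]

def importance(replay_grad):
    """Hypothetical importance score: replay-gradient magnitude scaled to [0, 1]."""
    peak = max(abs(g) for g in replay_grad)
    if peak == 0:
        return [0.0] * len(replay_grad)
    return [abs(g) / peak for g in replay_grad]

def balanced_step(params, task_grad, replay_grad,
                  lr=0.1, task_bound=1.0, replay_bound=0.5):
    """One SGD-style step with separate constraints on the two gradients."""
    g_task = clip(task_grad, task_bound)        # constraint on the new-task gradient
    g_replay = clip(replay_grad, replay_bound)  # tighter constraint on the correction
    weights = importance(replay_grad)           # per-parameter weighting (assumed form)
    return [p - lr * (gt + w * gr)
            for p, gt, gr, w in zip(params, g_task, g_replay, weights)]

# Made-up gradients for two parameters.
new_params = balanced_step([0.0, 0.0], task_grad=[2.0, -0.2], replay_grad=[1.0, 0.0])
```

In this toy version, a parameter that the replayed samples do not care about (zero replay gradient) is updated by the current task alone, while the others receive an additional bounded correction.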

Optimization Objective for WBR

The optimization objective for WBR is to minimize the end-to-end training loss function while controlling the gradient magnitude. The loss function is computed using softmax cross-entropy and is controlled by gradient clipping functions.
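The two ingredients named here, softmax cross-entropy and a bound on gradient magnitude, can be sketched in a few lines. The summary does not say which form of clipping the paper uses, so clipping by L2 norm is an assumption, as are the function names and the example values.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Softmax cross-entropy for a single sample with an integer label."""
    return -math.log(softmax(logits)[target])

def grad_wrt_logits(logits, target):
    """Gradient of the loss w.r.t. the logits: softmax(logits) - one_hot(target)."""
    probs = softmax(logits)
    return [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]

def clip_by_norm(grad, max_norm):
    """Rescale the gradient so its L2 norm does not exceed max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm:
        return list(grad)
    return [g * max_norm / norm for g in grad]

# Example values (made up): three-class logits, true class 0.
logits, target = [0.5, 2.0, -1.0], 0
loss = cross_entropy(logits, target)
clipped = clip_by_norm(grad_wrt_logits(logits, target), max_norm=0.5)
```

Clipping changes only the length of the gradient, not its direction, so the loss is still being minimized while the size of each step stays bounded.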

Experiments

Datasets and Experimental Details

The experiments were conducted on the Split CIFAR-100 and MNIST datasets, in both non-pretrained and pretrained environments. The evaluation metrics were final accuracy \( A_B \) and average accuracy \( \bar{A} \).
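Assuming the usual class-incremental definitions, where \( A_b \) is the accuracy on all classes seen so far after stage \( b \), the final accuracy is the last of these values and the average accuracy is their mean over the \( B \) stages. The sketch below computes both; the per-stage accuracies are made-up numbers, not results from the paper.

```python
def final_accuracy(stage_accuracies):
    """A_B: accuracy on all seen classes after the last incremental stage."""
    return stage_accuracies[-1]

def average_accuracy(stage_accuracies):
    """A-bar: mean of A_b over all B incremental stages."""
    return sum(stage_accuracies) / len(stage_accuracies)

# Hypothetical accuracies after each of three stages.
stage_accuracies = [0.9, 0.8, 0.7]
a_final = final_accuracy(stage_accuracies)
a_mean = average_accuracy(stage_accuracies)
```

The average rewards methods that stay accurate throughout the sequence, while the final value reflects only the state after the last task.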

Main Results

Non-Pretrained Environment

The results indicate that significant forgetting can be mitigated by maintaining a balance between the weights of old and new tasks. The impact of network depth, learning rate, and balance constraints on forgetting was analyzed.

Pretrained Environment

The WBR method outperformed all comparison methods in terms of training speed and achieved competitive performance. The results suggest that the balance of weights is closely related to catastrophic forgetting.

Limitations

The influence of the memory vector from the first layer on subsequent layers weakens as network depth increases, which runs counter to the advantages of deep learning. Additionally, experiments that use only MLPs are incomplete; more complex architectures, such as convolutional or attention modules, still need to be explored.

Conclusion

The paper proposes a novel method, Weight Balancing Replay (WBR), to overcome catastrophic forgetting by maintaining a balance between the weights of new and old tasks. The method significantly outperforms previous state-of-the-art approaches in training speed for class-incremental learning without sacrificing performance.