Authors:

Zijian Zhang, Sara Aronowitz, Alán Aspuru-Guzik

Paper:

https://arxiv.org/abs/2408.08463

A Theory of Understanding for Artificial Intelligence: Composability, Catalysts, and Learning

Introduction

Understanding is a multifaceted concept that has intrigued philosophers, scientists, and AI researchers alike. This paper proposes a framework for analyzing understanding in artificial intelligence (AI) based on the notion of composability. The authors suggest characterizing understanding by a subject’s ability to process relevant inputs into satisfactory outputs from the perspective of a verifier. This framework is versatile and can be applied to non-human subjects, such as AIs, non-human animals, and institutions.

The paper also introduces the concept of catalysts—inputs that enhance output quality in compositions—and discusses how analyzing these catalysts can reveal the structure of a subject. The authors argue that a subject’s learning ability can be viewed as its ability to compose inputs into its inner catalysts. The paper concludes by examining the importance of learning ability for AIs to attain general intelligence and how language models might overcome existing limitations in AI understanding.

What is Understanding?

Understanding is a complex concept that applies to various objects and subjects. For instance, one might say Alice understands Newtonian mechanics (a theory), an LLM understands black holes (an object), or Charlie understands that the sky is blue (a proposition). This diversity implies that a general theory of understanding must apply to all of these kinds of objects.

The paper distinguishes between “knowing” and “understanding,” noting that understanding implies additional abilities related to the object. For example, understanding that the sky is blue involves knowing why it is blue, not just the fact itself. This complexity makes it challenging to define a universal rule for understanding.

Most contemporary philosophers focus on propositional understanding, limiting the object of understanding to propositions. However, this approach is insufficient for AI, where understanding certain domains or subject areas is of direct interest.

The paper proposes a practical, minimal account that links understanding to behavior. The account covers responses of every kind, including beliefs and other mental states, and imposes few constraints on the subject’s inner structure.

Understanding as Composability

To design a practical, minimal theory of understanding, the authors introduce the concept of composition:

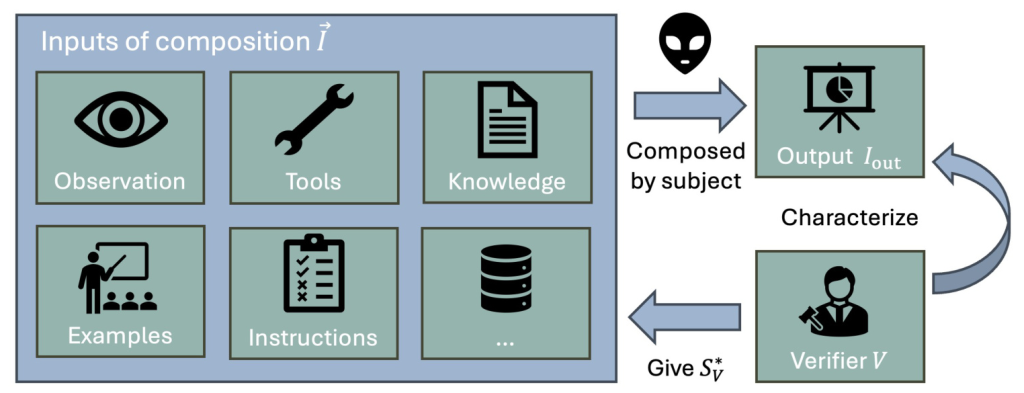

Definition 1 (Composition): Composition is a process in which a subject creates the output $I_{out}$ given a list of inputs $\vec{I} = [I_0, I_1, \ldots]$.

This definition is flexible enough to describe broad behaviors related to understanding. For example, a person using syllogistic reasoning can be seen as composing statements into a conclusion. The inputs and outputs can be anything deemed reasonable by the verifier.
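To make Definition 1 concrete, the sketch below treats a subject as nothing more than a function from a list of inputs to one output, using the syllogism example. The triple encoding of statements and the names `Statement`, `Subject`, and `syllogism_subject` are hypothetical illustrations, not notation from the paper.

```python
from typing import Callable, Sequence, Tuple

# A statement is encoded (hypothetically) as a triple, e.g.
# ("all", "human", "mortal") for "All humans are mortal" and
# ("is", "socrates", "human") for "Socrates is a human".
Statement = Tuple[str, str, str]

# Definition 1: a subject composes a list of inputs into one output.
Subject = Callable[[Sequence[Statement]], Statement]


def syllogism_subject(inputs: Sequence[Statement]) -> Statement:
    """Toy subject that composes two premises into a conclusion."""
    (q1, a, b), (q2, x, a2) = inputs
    # "All A are B" + "X is A"  ->  "X is B"
    if q1 == "all" and q2 == "is" and a == a2:
        return ("is", x, b)
    # Premises that do not chain yield no conclusion.
    return ("unknown", x, "?")


compose: Subject = syllogism_subject
print(compose([("all", "human", "mortal"), ("is", "socrates", "human")]))
# → ('is', 'socrates', 'mortal')
```

Nothing in the definition constrains how the output is produced; only the input/output behavior matters, which is what keeps the framework minimal.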

Definition 2 (Understanding as Composability): A subject’s understanding of an object $O$, in the view of a verifier $V$, can be fully characterized by the set $S$, which contains all the tuples $(\vec{I}, I_{out})$, where $\vec{I}$ is from the set $S^*_V$ of all the lists of inputs that $V$ deems to be related to $O$ and $I_{out}$ is the output of the subject composing $\vec{I}$.

This framework allows understanding to be analyzed through the set $S$, which includes all of the subject’s behaviors concerning the understood object $O$. Because the verifier’s own understanding can shape their assessment, different verifiers may make different judgments about the same subject’s understanding of an object.
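A minimal sketch of Definition 2, assuming a toy object $O$ = "addition of integers": the set $S$ is built by letting the subject compose every input list in a verifier's $S^*_V$, and each verifier then scores $S$. The scoring rule (`accuracy`) and all function names are hypothetical; the paper does not prescribe how a verifier judges $S$.

```python
from typing import Callable, List, Sequence, Tuple

# S as in Definition 2: pairs (input list, output the subject produced).
UnderstandingSet = List[Tuple[Tuple[int, ...], int]]


def understanding_set(
    subject: Callable[[Sequence[int]], int],
    related_inputs: Sequence[Sequence[int]],
) -> UnderstandingSet:
    """Build S by letting the subject compose every input list in S*_V."""
    return [(tuple(inputs), subject(inputs)) for inputs in related_inputs]


def accuracy(S: UnderstandingSet, expected: Callable[[Tuple[int, ...]], int]) -> float:
    """Hypothetical verifier criterion: fraction of outputs V accepts."""
    return sum(out == expected(inputs) for inputs, out in S) / len(S)


def two_term_adder(inputs: Sequence[int]) -> int:
    # Toy subject whose "understanding of addition" ignores terms past the second.
    return inputs[0] + inputs[1]


# Two verifiers who deem different input lists related to O = "addition".
s_star_a = [[1, 2], [3, 4]]
s_star_b = [[1, 2], [1, 2, 3]]

print(accuracy(understanding_set(two_term_adder, s_star_a), sum))  # → 1.0
print(accuracy(understanding_set(two_term_adder, s_star_b), sum))  # → 0.5
```

The same subject looks flawless to the first verifier and half-competent to the second, purely because the two verifiers consider different inputs relevant to $O$.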

Characterizing Understanding

Characterizing understanding involves analyzing the set $S$ to see what it reveals about the subject’s understanding. Two useful heuristics for this analysis are universality and scale.

Universality

Humans can process multiple types of information, such as verbal, auditory, visual, and tactile inputs. This generality allows humans to compose multi-type inputs related to an object and produce multi-type outputs. For example, humans can read a novel and draw the scenarios in it.

Current AI systems, as of 2024, have not reached the same level of universality as humans. This implies that their understanding differs from that of humans and falls short in composing many inputs that human verifiers might find critical. However, the increased universality of large language models (LLMs) is an important advancement, enabling them to process various types of inputs and produce human-friendly outputs.

Scale

The scale of inputs and outputs a subject can handle is another important aspect of understanding. For example, a subject that can handle a large body of information is generally expected to handle smaller bodies of similar complexity. Most LLMs are constrained by a limited input size, whereas humans can process long inputs, such as articles or videos, albeit more slowly.

Structure of Understanding

The framework’s minimal nature allows for flexible definitions of subjects, enabling the analysis of composite subjects. For example, when a driver, Bob, uses an assisted safe-driving system, the subject could be Bob, the safety system, or the car, depending on the context.

Catalyst

Catalysts are inputs that enhance the subject’s understanding. For example, a proof can be a catalyst for solving a problem, as it simplifies the verification process. Catalysts can be internal or external to the subject and can include tools, instructions, and explanations.

Definition 3 (Catalyst): Suppose a subject understands $O$ with the set $S$, in the view of verifier $V$ (with $S^*_V$). In the view of $V$, $C$ is a catalyst of understanding if, for each element $\vec{I} = [I_1, I_2, \ldots]$ of $S^*_V$, composing $\vec{I}' = [C, I_1, I_2, \ldots]$ and using the output $I^*_{out}$ to replace the original $I_{out}$ in each $(\vec{I}, I_{out}) \in S$ yields a new set $S'$ that is more favorable in the view of $V$.
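The catalyst test in Definition 3 can be sketched as follows: prepend $C$ to every input list in $S^*_V$, keep the original input list as the key, replace each output, and ask whether $V$ prefers the new set $S'$. Reducing "more favorable" to a strictly higher scalar score is an assumption made here for illustration, as are the toy subject (a km-to-metres converter) and all function names.

```python
from typing import Any, List, Optional, Sequence, Tuple

Pair = Tuple[Tuple[Any, ...], Any]


def km_subject(inputs: Sequence[Any]) -> float:
    """Toy subject: converts a distance in km to metres.

    If a conversion table (a dict) appears among its inputs it uses it;
    otherwise it falls back to a factor of 1, which is wrong.
    """
    factor = 1
    values = []
    for item in inputs:
        if isinstance(item, dict):
            factor = item.get("km_to_m", factor)
        else:
            values.append(item)
    return values[0] * factor


def build_set(subject, related_inputs, catalyst: Optional[Any] = None) -> List[Pair]:
    """Build S (no catalyst) or S' (catalyst C prepended, as in Definition 3).

    The original input list is kept as the key; only the output is replaced.
    """
    pairs = []
    for inputs in related_inputs:
        composed = list(inputs) if catalyst is None else [catalyst, *inputs]
        pairs.append((tuple(inputs), subject(composed)))
    return pairs


def favorability(S: List[Pair]) -> float:
    # Hypothetical verifier V: share of outputs equal to 1000 times the input.
    return sum(out == 1000 * inputs[0] for inputs, out in S) / len(S)


def is_catalyst(subject, related_inputs, candidate, score) -> bool:
    """C counts as a catalyst if S' scores strictly higher than S under V."""
    base = score(build_set(subject, related_inputs))
    boosted = score(build_set(subject, related_inputs, candidate))
    return boosted > base


s_star_v = [[1], [2.5], [7]]
print(is_catalyst(km_subject, s_star_v, {"km_to_m": 1000}, favorability))  # → True
print(is_catalyst(km_subject, s_star_v, {"colour": "blue"}, favorability))  # → False
```

The conversion table plays the role of an external catalyst: it is not part of the subject, yet composing it alongside every related input makes the whole set $S'$ better in $V$’s view, while an irrelevant input does not.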

Techniques such as chain-of-thought prompting and retrieval-augmented generation (RAG) can be viewed as catalysts designed to enhance the performance of LLMs. From a philosophical perspective, explanations can likewise be viewed as catalysts that boost understanding.

Subject Decomposition

Subject decomposition involves breaking down a subject into its components to analyze its understanding. For example, a neural network can be decomposed into its architecture and parameters. This decomposition helps identify the roles of different components in forming understanding.

Definition 4 (Subject Decomposition): Regarding a subject’s understanding of the object $O$, $C$ is an inner catalyst if (1) $C$ is a part of the subject; and (2) $C$ can be regarded as a catalyst that is composed by a primitive subject that is itself a part of the subject.
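The neural-network example can be sketched under Definition 4 by splitting a toy model into a primitive subject (the "architecture", here a fixed linear rule) and an inner catalyst (the "parameters", here a weight vector). The whole subject composes inputs $\vec{I}$ by having the primitive compose $[C, \ldots]$ with the inner catalyst in front. The class and function names are hypothetical, and a linear rule stands in for a real network.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


def linear_primitive(inputs: Sequence) -> float:
    """Primitive subject (playing the role of the 'architecture').

    It reads its first input as a weight vector and the rest as features.
    """
    weights, *features = inputs
    return sum(w * x for w, x in zip(weights, features))


@dataclass
class DecomposedSubject:
    primitive: Callable[[Sequence], float]
    inner_catalyst: List[float]  # plays the role of the 'parameters'

    def compose(self, inputs: Sequence[float]) -> float:
        # The whole subject composes I by letting its primitive part
        # compose [C, *I], where C is the inner catalyst.
        return self.primitive([self.inner_catalyst, *inputs])


net = DecomposedSubject(linear_primitive, [2.0, -1.0])
print(net.compose([3.0, 4.0]))  # → 2.0
```

Swapping only the inner catalyst (for example to `[1.0, 1.0]`, giving `7.0` for the same inputs) changes the subject’s behavior while the primitive stays fixed, which is why the decomposition helps locate where understanding resides.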

Acquisition of Understanding

The acquisition of understanding is related to the addition or improvement of catalysts. For example, a subject can improve its understanding by using better catalysts or updating its inner catalysts.

Claim 1 (Acquisition of Understanding): According to verifier $V$, for a subject’s understanding to become more favorable to $V$, the subject must obtain and employ a new catalyst (inner or external).

Claim 2 (Learning Ability): A subject’s learning ability is determined by its ability to compose related inputs into an update of the subject’s inner catalysts.
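Claim 2 can be illustrated with a toy learner whose inner catalyst is a single weight. Ordinary composition applies that weight to an input; learning composes related inputs (example pairs) into an update of the weight. The least-squares update rule and the `Learner` class are hypothetical choices for illustration only; the paper does not specify any particular update mechanism.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Learner:
    # Hypothetical inner catalyst: a single weight w.
    w: float = 0.0

    def compose(self, inputs: Sequence[float]) -> float:
        # Ordinary composition: apply the inner catalyst to the input x.
        return self.w * inputs[0]

    def learn(self, examples: List[Tuple[float, float]]) -> None:
        # Learning as composition: related inputs, here (x, y) examples, are
        # composed into an update of the inner catalyst. The update rule is
        # least squares through the origin, chosen only for illustration.
        sxx = sum(x * x for x, _ in examples)
        sxy = sum(x * y for x, y in examples)
        if sxx > 0:
            self.w = sxy / sxx


learner = Learner()
print(learner.compose([4.0]))  # → 0.0
learner.learn([(1.0, 3.0), (2.0, 6.0)])
print(learner.compose([4.0]))  # → 12.0
```

In these terms, a stronger learner is one that can turn a wider variety of related inputs into useful updates of its inner catalysts.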

General Intelligence and Learning

The paper argues that learning ability is critical for AI systems to reach general intelligence. Humans have strong learning abilities, enabling them to acquire understanding over time. Current AI systems, including LLMs, have demonstrated some learning ability, but there are still significant gaps compared to humans.

Gaps in Learning Ability

Humans can handle universal types of inputs for learning, whereas neural networks generally accept a narrow format of inputs. Additionally, humans can process long inputs and build coherent knowledge networks, whereas LLMs are constrained by input size and struggle with knowledge coherence.

Autocatalysis and Learning

LLMs exhibit a notable property, autocatalysis: their outputs can serve as catalysts for their own further processing. This property is closely related to learning ability, enabling LLMs to use tools and follow instructions to build knowledge.

Observation 1: LLMs can use their outputs as catalysts.
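Observation 1 can be sketched with a toy subject that performs only one step of a task per composition. Feeding each output back in front of the inputs, as a catalyst for the next composition, lets the subject finish a task that no single call could. The subject below is a hypothetical stand-in for an LLM writing to a scratchpad, not a real model API, and the loop is an illustrative assumption rather than the authors’ method.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical scratchpad: (running_total, index_of_next_number).
Notes = Tuple[int, int]


def one_step_subject(inputs: Sequence) -> Notes:
    """Toy subject that can perform only one addition per composition.

    inputs = [notes, numbers]; notes is its own earlier output (or None).
    """
    notes, numbers = inputs
    total, i = notes if notes is not None else (0, 0)
    if i < len(numbers):
        total, i = total + numbers[i], i + 1
    return (total, i)


def autocatalytic_run(subject, numbers: List[int], max_steps: int = 100) -> Optional[Notes]:
    notes: Optional[Notes] = None
    for _ in range(max_steps):
        # The previous output is placed in front of the inputs, acting as a
        # catalyst for the next composition.
        new_notes = subject([notes, numbers])
        if new_notes == notes:  # no further progress
            break
        notes = new_notes
    return notes


print(one_step_subject([None, [1, 2, 3]]))  # → (1, 1): one call alone is not enough
print(autocatalytic_run(one_step_subject, [1, 2, 3]))  # → (6, 3)
```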

The authors argue that this autocatalytic property paves the way for implementing human-level artificial learners that can process various types of inputs and build coherent knowledge networks.

Conclusion

The paper proposes a practical and minimal framework to characterize understanding by the subject’s behavior in composing inputs into outputs. The framework introduces the concept of catalysts to analyze the structure of understanding and emphasizes the importance of learning ability for AI systems to reach general intelligence. The authors argue that LLMs’ autocatalytic property brings us closer to the goal of general intelligence.

For future work, the authors suggest exploring more characteristics of understanding, such as creativity, and implementing practical software systems that employ the concepts laid out in the paper.