Authors:

Natchapon Jongwiriyanurak、Zichao Zeng、June Moh Goo、Xinglei Wang、Ilya Ilyankou、Kerkritt Srirrongvikrai、Meihui Wang、James Haworth

Paper:

https://arxiv.org/abs/2408.10872

Introduction

Road traffic crashes are a significant global issue, causing millions of deaths annually and imposing a substantial economic burden, particularly in low- and middle-income countries (LMICs). Traditional methods for road safety assessment, such as those employed by the International Road Assessment Programme (iRAP), involve extensive manual surveys and coding, which are costly and time-consuming. This paper introduces V-RoAst (Visual question answering for Road Assessment), a novel approach leveraging Vision Language Models (VLMs) to automate road safety assessments using crowdsourced imagery. This method aims to provide a scalable, cost-effective solution for global road safety evaluations, potentially saving lives and reducing economic burdens.

Related Work

Computer Vision for Road Attribute Detection

Traditional computer vision models have been extensively used for specific road attribute detection tasks, such as crack detection, pothole detection, and pavement distress detection. These models, including Fully Convolutional Networks (FCNs) and VGG-based models, have shown promise in segmenting and classifying road attributes. However, they require large amounts of labeled data for training, which is time-consuming and limits their scalability across different regions due to visual variations in road attributes.

Vision Language Models

Large Vision Language Models (LVLMs) have gained prominence in the computer vision domain, capable of processing both image and text inputs to perform tasks such as image captioning, image-text matching, visual reasoning, and visual question answering (VQA). These models, including GPT-4o, Gemini 1.5, and others, have demonstrated potential in various tasks without additional training, making them suitable for zero-shot learning scenarios. However, their application in road feature detection remains underexplored.

Visual Question Answering (VQA)

VQA involves answering open-ended questions based on images, with several datasets like GQA, OK-VQA, and KITTI being used to evaluate models. This study leverages VQA to define a new image classification task for VLMs, exploring their potential to perform road assessment tasks without the need for extensive training data.

Research Methodology

ThaiRAP Dataset

The dataset used in this study is sourced from ThaiRAP, comprising 2,037 images captured across Bangkok, Pathum Thani, and Phranakorn Sri Ayutthaya provinces. These images represent 519 road segments, with each segment having 1-4 images used to code 52 attributes according to the iRAP standard. The dataset highlights the imbalance of classes within attributes, posing a challenge for traditional models.

Data Preprocessing

To address class imbalance, the dataset was divided into training, testing, validation, and unseen sets. Augmentation techniques, such as adding noise, were applied to classes with fewer samples to ensure a balanced distribution. The baseline models were trained on the training set and evaluated on the testing and unseen sets.

Baseline Models

VGGNet and ResNet were used as baseline models for comparison. These models were adapted for multi-attribute classification by sharing a single encoder across all tasks and allocating separate decoders for each task. This structure allows the models to extract common features and address each task using task-specific decoders.

Experimental Design

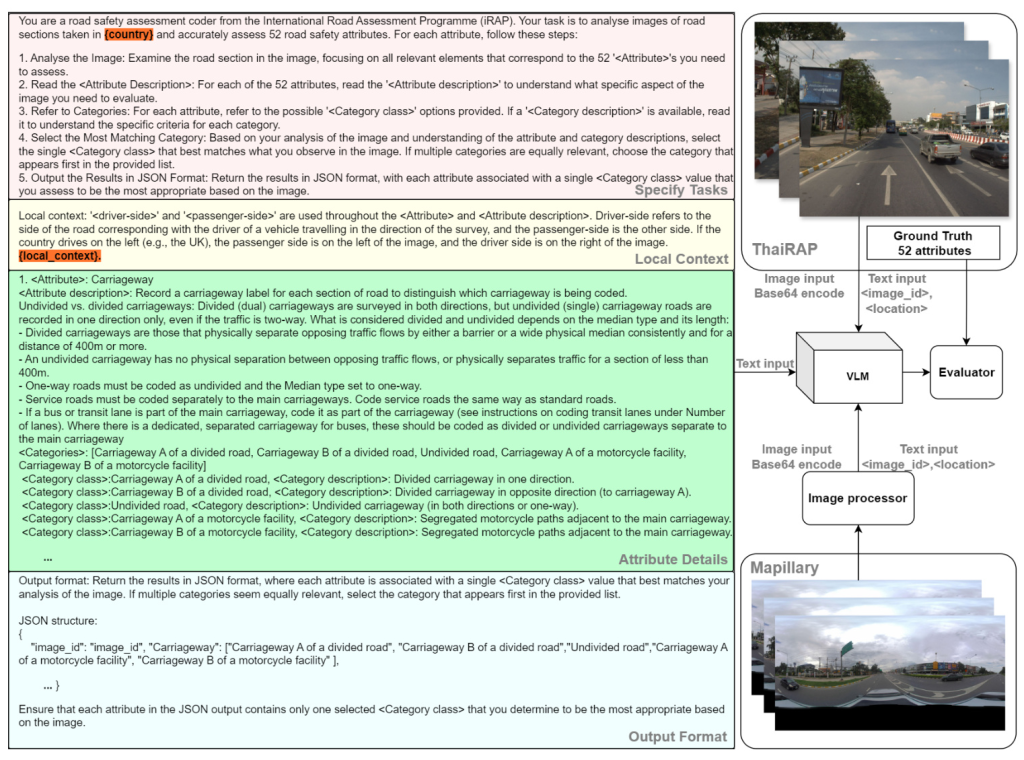

V-RoAst Framework

The V-RoAst framework is designed to be easily applicable in any city, requiring minimal data science expertise. The approach includes text input for system instructions and prompts, as well as image prompts. The workflow involves inputting an image and its associated information prompts to generate responses for the 52 attributes of the image.

Instructions and Prompts

Instructions are divided into four parts: task specification, local context, attribute details, and output format. The model is guided through a step-by-step process to define the category class of each attribute based on the image. Local context information, such as the side of the road on which countries drive, is provided to help the model understand the image better. The output is formatted in JSON, ensuring clarity and consistency.

VLMs Used

Gemini-1.5-flash and GPT-4o-mini were used to evaluate the framework. These models do not require training or significant computational resources, making them accessible for use by local stakeholders. The experiments were conducted using the Google AI Gemini platform and the OpenAI API.

Image Processor for Mapillary Imagery

Crowdsourced Street View Images (SVIs) from Mapillary were used to supplement the ThaiRAP dataset. Images were obtained using a 50-meter buffer around ThaiRAP locations and processed to align with the ThaiRAP data format. These images were then used to evaluate the star rating for various modes of transport.

Results and Analysis

Attribute Classification Performance

The performance of V-RoAst using Gemini-1.5-flash and GPT-4o-mini was compared to the baseline models (ResNet and VGG) using metrics such as accuracy, precision, recall, and F1 score. The results showed that while baseline models outperformed VLMs on most attributes, VLMs achieved comparable performance on certain attributes, particularly those without strong spatial characteristics.

Qualitative Assessment Using VQA

One of the key advantages of using LVLMs is their ability to perform VQA tasks. This capability allows non-data science experts to improve the models by intuitively adapting prompts based on the model’s interpretations. This iterative process helps refine the model’s predictions and enhance accuracy.

Scalable Star Rating Prediction

The confusion matrix for star rating prediction using Mapillary images demonstrated that V-RoAst effectively identifies high-risk roads with ratings below 3 stars for motorcyclists. The approach’s conservative stance in assessing safety levels highlights its potential for national-scale star rating prediction using crowdsourced imagery.

Overall Conclusion

The V-RoAst approach utilizing VLMs such as Gemini-1.5-flash and GPT-4o-mini offers a promising alternative to traditional CNN models for road safety assessment. While VLMs demonstrate relatively weaker performance in dealing with spatial attributes, their ability to perform VQA and adapt through prompt engineering makes them valuable for local authorities in LMICs. V-RoAst provides a cost-effective, automated method for global road safety assessments, potentially saving lives and reducing economic burdens. Future work will focus on fine-tuning VLMs for robustness and exploring other modalities, such as remote sensing imagery and geographical information data, for reliable road assessment.