Authors:

Paper:

https://arxiv.org/abs/2408.09967

Introduction

Background

Linear programming (LP) has been a fundamental optimization technique since its inception by Dantzig in 1947. It is widely used in various fields such as operations research, economics, and engineering due to its ability to optimize objectives subject to linear constraints. However, traditional LP methods face limitations when dealing with non-linear, high-dimensional, and dynamic environments.

On the other hand, machine learning (ML), particularly deep learning, has shown remarkable success in modeling complex patterns and making predictions from large datasets. Despite these strengths, ML models often lack the explicit interpretability and rigorous constraint satisfaction that LP offers. This has led researchers to explore hybrid approaches that combine the strengths of both LP and ML.

Problem Statement

The primary challenge addressed in this paper is the integration of LP constraints within the loss function of an unsupervised ML model. This hybrid approach aims to leverage the interpretability and constraint satisfaction of LP while benefiting from the flexibility and learning capacity of ML. The proposed method is particularly beneficial in unsupervised or semi-supervised settings where traditional LP methods may struggle due to the lack of labeled data.

Related Work

Linear Programming and Its Applications

Linear programming is a cornerstone in optimization theory, aiming to maximize or minimize a linear objective function subject to a set of linear constraints. It has extensive applications in resource allocation, production planning, transportation, and finance. However, LP is inherently limited to linear relationships, which may not capture the complexities of real-world problems where relationships among variables are often non-linear and dynamic.

Machine Learning and Its Limitations

Machine learning, especially deep learning, has revolutionized the modeling of complex patterns in high-dimensional data spaces. ML models can make predictions, classify data, and identify hidden patterns without explicit programming for each task. However, the black-box nature of deep learning models poses challenges in interpretability and constraint satisfaction. Standard ML models may also struggle to incorporate domain-specific knowledge, such as optimization constraints, into their learning process.

Hybrid Approaches: Combining LP and ML

The integration of LP and ML has been explored in various forms, primarily focusing on supervised learning scenarios. Recent studies have embedded LP solvers within neural networks, allowing the network to learn decision variables that satisfy LP constraints. These approaches typically involve differentiable optimization layers, enabling the gradients of the LP solutions to flow through the network, thus allowing the LP problem to be part of the end-to-end learning process.

Despite these advances, the application of such hybrid approaches in unsupervised learning remains underexplored. This paper addresses this gap by introducing a method that incorporates LP constraints directly into the loss function of an unsupervised ML model.

Research Methodology

Model Overview

The core idea of the proposed method is to integrate the objectives and constraints of an LP problem directly into the loss function of an unsupervised ML model. This is achieved by defining a custom loss function that includes both a reconstruction loss and a penalty term derived from the LP constraints.

Loss Function Design

The proposed loss function for the unsupervised learning model is defined as:

- Reconstruction Loss: Measures the difference between the input data and its reconstruction. This term encourages the model to learn a meaningful representation of the input data.

- LP Loss: Encapsulates the constraints and objectives of the LP problem. This term penalizes the model if the generated solutions violate the LP constraints. The LP loss can be formulated as:

[

\text{LP Loss} = \sum (\text{penalty for constraint violations})

] - Regularization Parameter: ( \lambda ) controls the trade-off between the reconstruction loss and the LP loss. A higher ( \lambda ) places more emphasis on satisfying the LP constraints.

Training Procedure

The model is trained by minimizing the custom loss function. During each training iteration, the model updates its parameters to reduce both the reconstruction error and the LP violation. The gradients for the LP loss are computed using automatic differentiation, allowing the model to learn in an end-to-end fashion.

Experimental Design

Dataset and Experimental Setup

To assess the effectiveness of the proposed method, experiments were conducted on both synthetic and real-world datasets designed to mimic complex optimization problems where LP constraints play a critical role.

Synthetic Dataset

For the synthetic dataset, data points representing resource allocation scenarios in a hypothetical hospital setting were generated. Each data point consisted of features such as available doctors, nurses, equipment units, maximum available time, and treatment time requirements. The objective was to allocate resources to maximize the number of patients treated while satisfying resource constraints. A total of 10,000 samples were generated and normalized using Min-Max scaling.

Real-World Dataset

For the real-world dataset, data from a hospital’s scheduling and resource allocation system was used. The dataset included historical records of resource usage, treatment times, and outcomes over a one-year period, resulting in 5,000 samples.

Training and Implementation Details

The hybrid model was implemented using PyTorch with the following architecture:

- Encoder: A fully connected neural network with two hidden layers (256 and 128 units) with ReLU activation functions.

- Latent Space: A bottleneck layer with 3 units representing the core features used for reconstruction.

- Decoder: A mirror image of the encoder with layers (128 and 256 units) and ReLU activations.

- LP Constraints: Integrated into the loss function with the objective to minimize constraint violations while maintaining high reconstruction accuracy.

The model was trained using the Adam optimizer with a learning rate of 0.0001 over 100 epochs with a batch size of 64.

Results and Analysis

Constraint Satisfaction

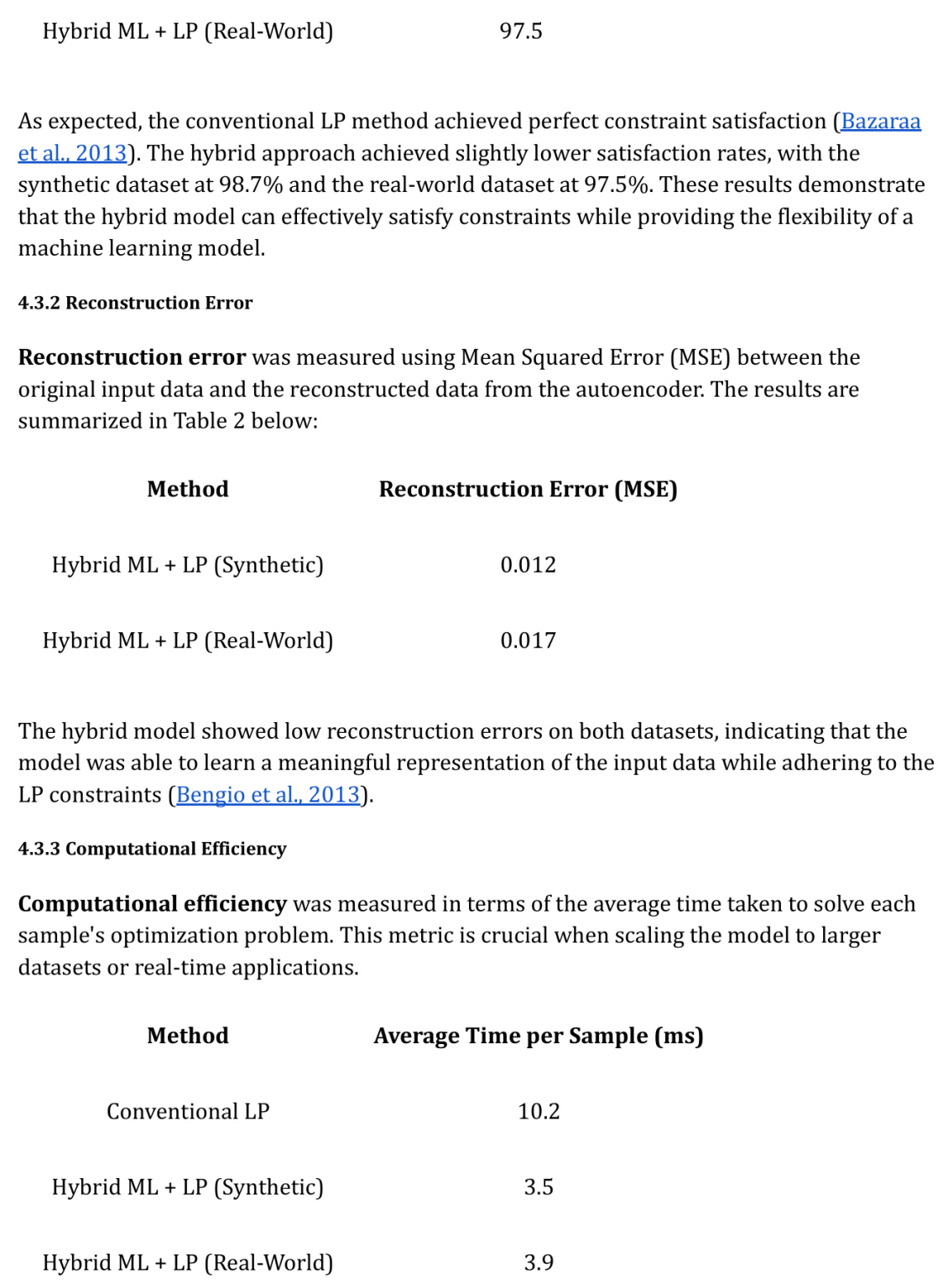

Constraint satisfaction was measured as the percentage of samples for which the LP constraints were satisfied within a small tolerance. The results are summarized in Table 1.

Reconstruction Error

Reconstruction error was measured using Mean Squared Error (MSE) between the original input data and the reconstructed data from the autoencoder. The results are summarized in Table 2.

Computational Efficiency

Computational efficiency was measured in terms of the average time taken to solve each sample’s optimization problem. This metric is crucial when scaling the model to larger datasets or real-time applications.

Robustness to Noise and Incomplete Data

The robustness of the hybrid model was tested by introducing noise into the input data and randomly omitting some feature values. The hybrid model handled noisy and incomplete data more effectively than the conventional LP method, maintaining a high level of constraint satisfaction and low reconstruction error under these conditions.

Comparison with Conventional LP Approach

The conventional LP approach, while optimal for satisfying constraints, lacks the flexibility and adaptability that the hybrid model offers. The hybrid approach not only achieves near-optimal constraint satisfaction but also benefits from the ability to learn from data and generalize to new scenarios. This makes it particularly suited for dynamic environments where traditional LP methods may struggle to adapt to changing conditions.

Overall Conclusion

This paper introduced a novel hybrid approach that integrates linear programming into the loss function of an unsupervised machine learning model. By combining the interpretability and constraint satisfaction of LP with the flexibility and learning capacity of ML, this method offers a robust solution for complex optimization problems. The experimental results demonstrate that this approach is effective in satisfying constraints, achieving low reconstruction error, and improving computational efficiency compared to conventional LP methods. Future work will explore extensions to more complex non-linear constraints and applications in semi-supervised learning settings.