Authors: Richard Ren, Steven Basart, Adam Khoja, Alice Gatti, Long Phan, Xuwang Yin, Mantas Mazeika, Alexander Pan, Gabriel Mukobi, Ryan H. Kim, Stephen Fitz, Dan Hendrycks

Category: Machine Learning, Artificial Intelligence, Computation and Language, Computers and Society

ArXiv: http://arxiv.org/abs/2407.21792v1

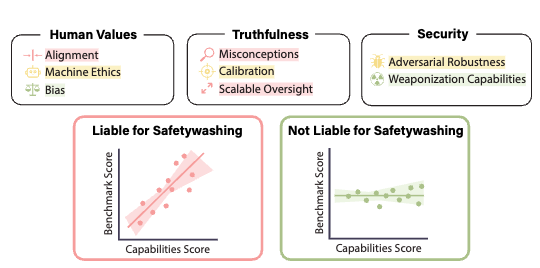

Abstract: As artificial intelligence systems grow more powerful, there has been increasing interest in “AI safety” research to address emerging and future risks. However, the field of AI safety remains poorly defined and inconsistently measured, leading to confusion about how researchers can contribute. This lack of clarity is compounded by the unclear relationship between AI safety benchmarks and upstream general capabilities (e.g., general knowledge and reasoning). To address these issues, we conduct a comprehensive meta-analysis of AI safety benchmarks, empirically analyzing their correlation with general capabilities across dozens of models and providing a survey of existing directions in AI safety. Our findings reveal that many safety benchmarks highly correlate with upstream model capabilities, potentially enabling “safetywashing” — where capability improvements are misrepresented as safety advancements. Based on these findings, we propose an empirical foundation for developing more meaningful safety metrics and define AI safety in a machine learning research context as a set of clearly delineated research goals that are empirically separable from generic capabilities advancements. In doing so, we aim to provide a more rigorous framework for AI safety research, advancing the science of safety evaluations and clarifying the path towards measurable progress.

Summary:

Problems:

– AI safety is poorly defined and inconsistently measured, so it is unclear how researchers can contribute.

– The relationship between AI safety benchmarks and upstream general capabilities (e.g., general knowledge and reasoning) is unclear.

– This ambiguity creates room for “safetywashing,” where general capability improvements are misrepresented as safety advancements.

Solutions:

– Conducted a comprehensive meta-analysis of AI safety benchmarks.

– Empirically analyzed the correlation between safety benchmarks and general capabilities.

– Surveyed existing directions in AI safety research.

Results:

– Many AI safety benchmarks correlate highly with general capabilities, which can enable safetywashing.

– Improving general capabilities (for example, by scaling parameters or training data) can raise scores across safety benchmarks.

– Intuitive arguments from alignment theory can be misleading, so empirical measurement is needed.

– The authors propose a more rigorous framework for AI safety research, built on research goals that are empirically separable from general capabilities advancements.

– The authors recommend that future safety benchmarks report their correlation with general capabilities.

– Empirical measurement should replace reliance on alignment theory for guiding AI safety research.
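
The correlation analysis summarized above can be illustrated with a minimal sketch. This summary does not say how the capabilities score or the correlation is computed, so the choices here are assumptions: the capabilities score is the mean of z-scored capability benchmarks, and the correlation is Spearman's rank correlation. All benchmark names and numbers are hypothetical and exist only to make the example runnable.

```python
from statistics import mean, pstdev


def ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def capabilities_score(benchmark_scores):
    """Assumed proxy: per-model mean of z-scored capability benchmarks."""
    z_columns = []
    for scores in benchmark_scores.values():
        mu, sigma = mean(scores), pstdev(scores)
        z_columns.append([(s - mu) / sigma for s in scores])
    # zip(*...) regroups the per-benchmark columns into per-model rows.
    return [mean(per_model) for per_model in zip(*z_columns)]


# Hypothetical scores for five models, listed in the same model order.
capability_benchmarks = {
    "knowledge": [30, 45, 60, 70, 85],
    "reasoning": [20, 35, 55, 65, 80],
}
safety_benchmark_a = [40, 50, 62, 71, 90]  # rises with capabilities
safety_benchmark_b = [55, 52, 58, 50, 54]  # little relation to capabilities

caps = capabilities_score(capability_benchmarks)
rho_a = spearman(caps, safety_benchmark_a)
rho_b = spearman(caps, safety_benchmark_b)
print(f"benchmark A vs capabilities: {rho_a:+.2f}")
print(f"benchmark B vs capabilities: {rho_b:+.2f}")
```

Under these assumptions, a safety benchmark with a correlation near +1 (like the hypothetical benchmark A) mostly tracks general capabilities, while one with a correlation near zero or below (like benchmark B) measures something that capability gains alone do not deliver. A rank correlation is used here because it depends only on the ordering of models, not on the scale of each benchmark.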