Authors:

Bingyu Li, Da Zhang, Zhiyuan Zhao, Junyu Gao, Yuan Yuan

Paper:

https://arxiv.org/abs/2408.07891

Introduction

The rapid growth of textual information on social media has made sentiment analysis a crucial tool for understanding public opinion and product evaluations. Traditional sentiment analysis models, while effective, often struggle to integrate diverse semantic information and lack interpretability. This paper introduces an approach that combines principles of quantum mechanics (QM) with deep learning models to enhance text sentiment analysis. The proposed Quantum-inspired Interpretable Text Sentiment Analysis Architecture (QITSA) exploits the commonalities between text representation and QM principles to improve both accuracy and interpretability.

Related Work

Text Sentiment Analysis

Text sentiment analysis has evolved through various methods, including lexicon-based approaches and deep learning techniques. Lexicon-based methods rely on predefined sentiment lexicons but struggle to scale and to capture semantic dependencies. Deep learning methods, such as CNNs, RNNs, and attention mechanisms, have substantially improved the modeling of semantic dependencies. However, these models still struggle to integrate multiple sources of sentiment information and lack interpretability.

Quantum-Inspired Deep Learning Models

Principles of quantum mechanics have been increasingly applied to natural language processing (NLP) tasks because of structural similarities between quantum states and text representations. Pure quantum computing algorithms have been extended to text sentiment analysis, but they are limited in their ability to process large-scale textual data. Quantum-inspired deep learning models combine the interpretability of the quantum-mechanical formalism with the scalability of deep learning, yielding models that are both more effective and more interpretable.

Method

Preliminary Concepts

Quantum Superposition

The quantum superposition principle describes the ability of a quantum system to exist in multiple states simultaneously until observed. This principle is mathematically represented as:

\[ |\psi\rangle = \sum_i c_i |i\rangle \]

where \( c_i \) are complex probability amplitudes: a measurement finds the system in state \( |i\rangle \) with probability \( |c_i|^2 \), and \( \sum_i |c_i|^2 = 1 \).
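As a small numerical illustration (a sketch, not code from the paper), the snippet below normalizes arbitrary complex amplitudes \( c_i \) and applies the Born rule \( |c_i|^2 \) to obtain measurement probabilities. The function names `normalize_state` and `measurement_probabilities` are hypothetical.

```python
import math

def normalize_state(amplitudes):
    """Rescale complex amplitudes c_i so that sum_i |c_i|^2 = 1."""
    norm = math.sqrt(sum(abs(c) ** 2 for c in amplitudes))
    if norm == 0:
        raise ValueError("a state vector must be non-zero")
    return [complex(c) / norm for c in amplitudes]

def measurement_probabilities(state):
    """Born rule: the probability of observing basis state |i> is |c_i|^2."""
    return [abs(c) ** 2 for c in state]

# Unnormalized superposition over three basis states |0>, |1>, |2>.
psi = normalize_state([1, 1j, -1])
probs = measurement_probabilities(psi)
print([round(p, 3) for p in probs])  # [0.333, 0.333, 0.333]
```

Only the magnitudes of the amplitudes affect these probabilities; the phases (here 0, \( \pi/2 \), and \( \pi \)) matter once states interfere, which is what the phase-based sentiment encoding later relies on.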

Quantum Density Matrix

The quantum density matrix describes the state of a quantum system in general, including a mixed state (a statistical ensemble of pure states), as arises, for example, when describing part of a multi-particle system. It is expressed as:

\[ \rho = \sum_i p_i |\psi_i\rangle \langle \psi_i| \]

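where \( p_i \ge 0 \) are probabilities with \( \sum_i p_i = 1 \), and \( |\psi_i\rangle \) are pure (superposition) states. The resulting \( \rho \) is Hermitian, positive semidefinite, and has unit trace.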
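The following sketch (plain Python, not the paper's implementation) builds \( \rho \) from an ensemble of pure states and checks the properties listed above. The purity \( \mathrm{tr}(\rho^2) \) is included to show that an equal mixture of \( |0\rangle \) and \( |+\rangle \) is mixed rather than pure; all helper names are hypothetical.

```python
def outer(psi):
    """|psi><psi| as a nested list: entry (a, b) = psi[a] * conj(psi[b])."""
    return [[x * y.conjugate() for y in psi] for x in psi]

def density_matrix(probabilities, states):
    """rho = sum_i p_i |psi_i><psi_i| for normalized pure states |psi_i>."""
    if any(p < 0 for p in probabilities) or abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("p_i must be non-negative and sum to 1")
    dim = len(states[0])
    rho = [[0j] * dim for _ in range(dim)]
    for p, psi in zip(probabilities, states):
        proj = outer(psi)
        for a in range(dim):
            for b in range(dim):
                rho[a][b] += p * proj[a][b]
    return rho

def trace(m):
    return sum(m[k][k] for k in range(len(m)))

def purity(m):
    """tr(rho^2): equals 1 for a pure state and is < 1 for a mixed state."""
    n = len(m)
    return sum(m[a][b] * m[b][a] for a in range(n) for b in range(n)).real

s = 2 ** -0.5
# Equal mixture of |0> and |+> = (|0> + |1>) / sqrt(2).
rho = density_matrix([0.5, 0.5], [[1, 0], [s, s]])
print(round(trace(rho).real, 6), round(purity(rho), 6))  # 1.0 0.75
```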

Quantum-Inspired Language Representation

In sentiment analysis, understanding the meaning of words is crucial. The quantum-inspired view treats a word with multiple meanings as a quantum superposition of those meanings. The semantic state of a sentence is represented by a density matrix, which captures associations and interactions between words. The sentiment polarity scores of words serve as phase information, while their semantic information serves as amplitude.
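To make the word-as-superposition idea concrete, here is a toy sketch under loudly stated assumptions: the 3-dimensional "semantic space", the sense vectors, and the sense weights are invented, and the sentence density matrix is taken as a uniform mixture of word projectors. The paper's own weighting and embedding dimensions are not given here, and `word_state` and `sentence_density_matrix` are hypothetical helpers.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(abs(x) ** 2 for x in v))
    return [x / norm for x in v]

def word_state(sense_vectors, sense_weights):
    """Hypothetical: a polysemous word as a normalized superposition of its sense vectors."""
    dim = len(sense_vectors[0])
    combined = [sum(w * s[k] for w, s in zip(sense_weights, sense_vectors)) for k in range(dim)]
    return normalize(combined)

def sentence_density_matrix(word_states, weights=None):
    """rho_S = sum_j w_j |w_j><w_j|, with uniform w_j by default (an assumption)."""
    n = len(word_states)
    weights = weights if weights is not None else [1.0 / n] * n
    dim = len(word_states[0])
    return [[sum(w * psi[a] * psi[b].conjugate() for w, psi in zip(weights, word_states))
             for b in range(dim)] for a in range(dim)]

# Toy 3-d semantic space; the sense vectors below are invented for illustration.
bank = word_state([[1, 0, 0], [0, 1, 0]], [0.6, 0.4])  # finance sense + river sense
loan = word_state([[1, 0, 0], [0, 0, 1]], [0.9, 0.1])  # mostly the finance sense
rho_s = sentence_density_matrix([bank, loan])
```

The diagonal of `rho_s` gives the weight of each semantic dimension in the sentence, while the non-zero off-diagonal entries record which dimensions are co-activated within the same word, one way to read "word associations and interactions".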

Quantum-Inspired Text Embedding Layer

Using the complex exponential representation from quantum mechanics, the word embedding is treated as the amplitude and the sentiment information as the phase. Fusing the two yields a complex-valued word vector:

\[ v_j = \text{SemInfo}(v_j) \cdot e^{i \cdot \text{EmoPhase}(v_j)} = r_j \cdot e^{i \beta_j} \]

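Euler's formula, \( e^{i\beta_j} = \cos\beta_j + i \sin\beta_j \), converts the complex exponential into its real and imaginary components, \( v_j = r_j \cos\beta_j + i \, r_j \sin\beta_j \), which simplifies subsequent operations.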

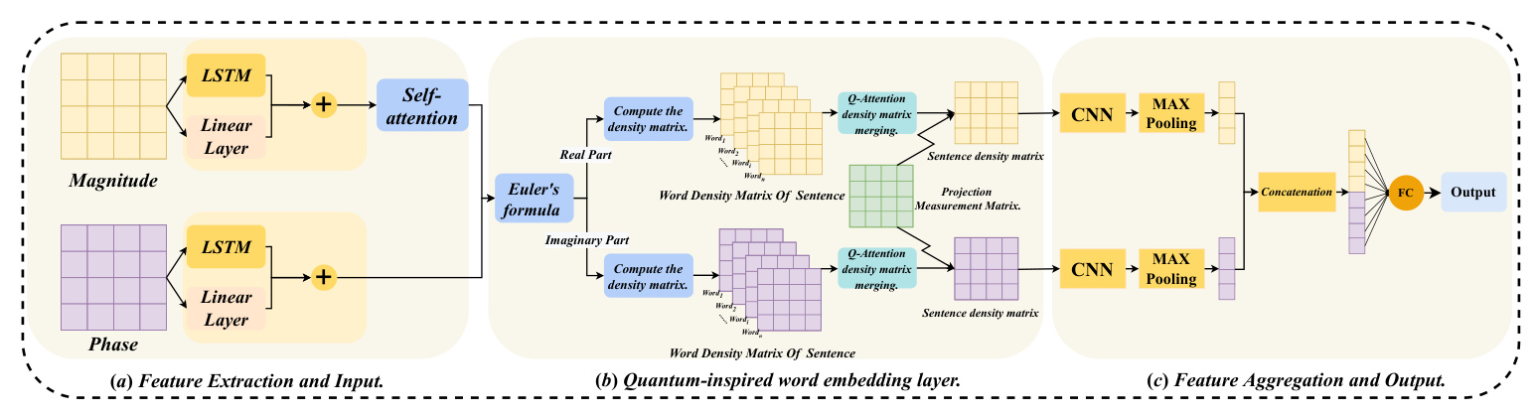
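A minimal sketch of this embedding step, assuming a single scalar phase \( \beta_j \) per word shared by all embedding dimensions. The mapping from a polarity score in [-1, 1] to a phase in [\( -\pi/2 \), \( \pi/2 \)] (`polarity_to_phase`) is a hypothetical choice for illustration, not the paper's definition of EmoPhase.

```python
import cmath
import math

def polarity_to_phase(score):
    """Hypothetical mapping of a polarity score in [-1, 1] to a phase in [-pi/2, pi/2]."""
    return max(-1.0, min(1.0, score)) * math.pi / 2

def complex_word_embedding(amplitude, phase):
    """v_j = r_j * e^{i beta_j}, split into real and imaginary parts via Euler's formula.

    amplitude: semantic vector r_j (e.g. a pre-trained word vector)
    phase:     scalar beta_j shared by all dimensions (an assumption of this sketch)
    """
    real = [r * math.cos(phase) for r in amplitude]
    imag = [r * math.sin(phase) for r in amplitude]
    return real, imag

r_j = [0.5, -1.2, 2.0]            # made-up semantic vector
beta_j = polarity_to_phase(0.5)   # made-up polarity score, giving pi/4
real, imag = complex_word_embedding(r_j, beta_j)

# Sanity check: recombining the parts recovers r_j * e^{i beta_j}.
for re_k, im_k, r in zip(real, imag, r_j):
    assert abs(complex(re_k, im_k) - r * cmath.exp(1j * beta_j)) < 1e-12
```

Keeping the real and imaginary parts as two real-valued vectors lets standard real-valued layers process the complex embedding directly.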

Quantum-Inspired Feature Extraction Layer

The proposed model uses an LSTM-attention mechanism to model dependencies within the text: an LSTM and self-attention extract the semantic and sentiment information of words. The quantum-inspired word embedding layer computes each word's density matrix representation and fuses these into a sentence-level embedding matrix. A Q-Attention mechanism is proposed to strengthen the model's attention to keywords in the text.
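For readers unfamiliar with the self-attention step, the sketch below shows generic scaled dot-product self-attention applied to a sequence of hidden states (stand-ins for LSTM outputs). It is not the paper's Q-Attention, whose details are not given here; as a simplification, queries, keys, and values are the hidden states themselves, with no learned projection matrices.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(hidden):
    """Scaled dot-product self-attention over hidden states h_1..h_n.

    Simplification: queries, keys and values are the hidden states themselves
    (no learned projection matrices).
    """
    d = len(hidden[0])
    outputs, weights = [], []
    for q in hidden:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in hidden]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(w_t * v[k] for w_t, v in zip(w, hidden)) for k in range(d)])
    return outputs, weights

# Stand-ins for LSTM outputs of a 3-token sentence (values are made up).
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, attn = self_attention(H)
```

Each row of `attn` is a probability distribution over tokens, so every context vector is a convex combination of the hidden states.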

Feature Aggregation and Output

The final step uses CNNs to extract features from the text's density matrix representation. This matrix is the weighted sum of the word density matrices, with the attention scores as weights. The resulting text embedding is passed through a fully connected layer to produce the final prediction.
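The aggregation step can be sketched as follows, assuming the attention scores are normalized with a softmax. Because the weights are non-negative and sum to 1, the weighted sum of unit-trace density matrices again has unit trace. The CNN feature extractor is omitted, and `fully_connected_sigmoid` with its weights is a hypothetical stand-in for the output layer.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate_density_matrices(rhos, scores):
    """Output = sum_t a_t * rho_t with a = softmax(scores)."""
    a = softmax(scores)
    dim = len(rhos[0])
    out = [[sum(w * rho[r][c] for w, rho in zip(a, rhos)) for c in range(dim)]
           for r in range(dim)]
    return out, a

def fully_connected_sigmoid(matrix, weights, bias):
    """Hypothetical output head: flatten, one linear unit, sigmoid -> P(positive)."""
    flat = [x for row in matrix for x in row]
    z = sum(w * x for w, x in zip(weights, flat)) + bias
    return 1.0 / (1.0 + math.exp(-z))

rho_1 = [[1.0, 0.0], [0.0, 0.0]]
rho_2 = [[0.5, 0.5], [0.5, 0.5]]
rho_text, a = aggregate_density_matrices([rho_1, rho_2], scores=[2.0, 0.0])
p_pos = fully_connected_sigmoid(rho_text, weights=[0.3, -0.1, -0.1, 0.2], bias=0.0)
```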

Experimental Results

Experimental Details

The experiments were conducted on a single NVIDIA GeForce RTX 3090 GPU with the AdamW optimizer. Binary cross-entropy was used as the loss function, and pre-trained GloVe word vectors served as the embedding model.
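For reference, binary cross-entropy averages \( -[y \log p + (1 - y) \log(1 - p)] \) over a batch. The plain-Python sketch below spells this out. In a PyTorch setup the equivalent would typically be `torch.nn.BCELoss` (or `torch.nn.BCEWithLogitsLoss` on raw logits) paired with `torch.optim.AdamW`; the framework and hyperparameters used in the paper are not stated here.

```python
import math

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Mean of -[y log p + (1 - y) log(1 - p)] over a batch of predictions."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)

loss = binary_cross_entropy([0.9, 0.2], [1, 0])
print(round(loss, 4))  # 0.1643
```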

Dataset

Five binary classification benchmark datasets were used to evaluate the model: MR, SST, SUBJ, CR, and MPQA.

Evaluation Metric

Accuracy, recall, and F1 score were chosen as metrics to evaluate the model’s generalization ability.
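The sketch below shows how these metrics (plus precision, which the recall results also mention) are computed from binary predictions. It reports positive-class precision, recall, and F1. This is an assumption: the summary does not say whether the paper uses positive-class, macro, or weighted averaging.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for the positive class of a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# 2 true positives, 1 false positive, 1 false negative, 2 true negatives.
m = binary_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1])
```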

Results on Different Datasets

The proposed model outperformed the compared models on most datasets, achieving the highest accuracy on the majority of them and demonstrating strong efficacy in text classification; the authors suggest these results offer useful insights for future research.

Recall on Different Datasets

The model showed consistent performance across the benchmark test sets, with high precision and recall, particularly on the SUBJ dataset.

Visualization

Visualization experiments compared the effectiveness of quantum-inspired text embedding layers. The sentiment integration method enabled the model to focus on words with significant sentiment polarity changes.

Ablation Experiment

Impact of Different Embedding Data on Test Accuracy

Experiments showed that integrating BERT word embeddings with sentiment polarity information consistently improved performance across multiple datasets.

Effectiveness Analysis of Q-Attention Mechanism

Q-Attention improved accuracy on several datasets, indicating that it helps the model capture sentiment information carried by keywords.

Analysis of Different Feature Concentration and Extraction Modules

The CNN-Diagonal model demonstrated superior text classification accuracy by effectively capturing local features and long-distance dependencies.

Conclusion

This study explores text sentiment analysis using quantum-inspired deep learning models. The proposed Quantum-Inspired Text Information Representation method efficiently captures semantic features using quantum superposition principles and density matrices. Future research directions include enriching datasets, optimizing the model, expanding the types of embedding data, and enhancing the interpretability of deep learning models.

Appendices

Description of the Chosen Dataset

Detailed descriptions of the selected datasets, including MR, SST, CR, MPQA, and SUBJ, are provided.

Visualization of Sentiment Information Extracted by SentiWordNet

Visualization of sentiment information extracted using SentiWordNet verifies the validity of the input data.

Visualization of Text Semantic Vectors

Further visualization experiments on the datasets showcase the effectiveness of the proposed model.

Training Curve

Training convergence plots of the proposed model on the datasets demonstrate effective error reduction and learning capability.