Authors:

Zhonghang Li, Long Xia, Lei Shi, Yong Xu, Dawei Yin, Chao Huang

Paper:

https://arxiv.org/abs/2408.10269

Introduction

Urban transportation systems are the backbone of modern cities, facilitating the movement of people and goods. Accurate traffic forecasting is essential for effective urban planning and transportation management, enabling efficient resource allocation and better travel experiences. However, existing traffic prediction models often struggle to generalize, particularly in zero-shot prediction for unseen regions and cities and in long-term forecasting. These difficulties stem from the spatial and temporal heterogeneity of traffic data and from significant distribution shifts across time and space.

In this study, we introduce OpenCity, a novel spatio-temporal foundation model designed to address these challenges. OpenCity integrates the Transformer architecture with graph neural networks to model complex spatio-temporal dependencies in traffic data. By pre-training on large-scale, heterogeneous traffic datasets, OpenCity learns rich, generalizable representations that can be applied to a wide range of traffic forecasting scenarios.

Related Work

Deep Urban Traffic Prediction Models

Deep learning has significantly advanced spatio-temporal models for traffic forecasting. These models use deep neural networks to learn representations that capture the spatial and temporal dependencies inherent in urban traffic data. Common approaches model temporal correlations with recurrent neural networks (RNNs), temporal convolutional networks (TCNs), or attention networks, and spatial correlations with graph convolutional networks (GCNs) or graph attention networks (GATs).

Spatio-Temporal Self-Supervised Learning

Self-supervised learning (SSL) has emerged as an effective strategy for enhancing spatio-temporal learning models. SSL paradigms include spatio-temporal contrastive SSL, generative SSL, and predictive SSL, each aiming to improve model performance and generalization. However, these approaches often generalize poorly in zero-shot forecasting.

Leveraging Large Language Models in Urban Computing

Large language models (LLMs) have been integrated into urban computing to enhance spatio-temporal representation learning and model generalization. However, LLM-based approaches face limitations such as high computational demands and reliance on manually collected Point-of-Interest (POI) data, which restrict their generalization capabilities.

Research Methodology

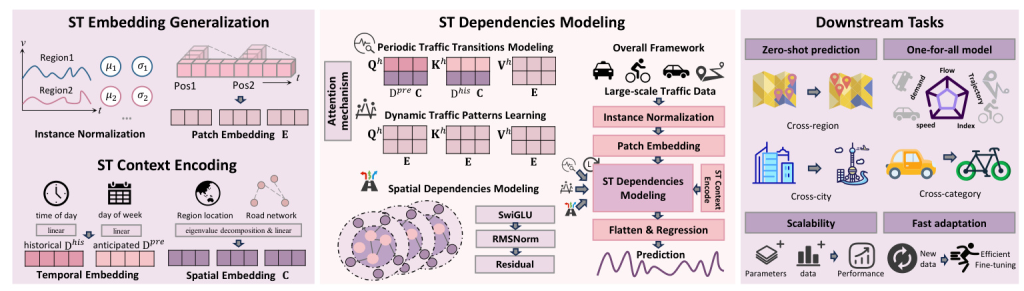

Spatio-Temporal Embedding for Distribution Shift Generalization

To address distribution shifts across spatial and temporal dimensions, OpenCity employs instance normalization and patch-based embedding. Instance normalization normalizes each input instance with its own mean and standard deviation rather than with global training-set statistics, so zero-shot prediction does not rely on statistics that may not match unseen regions or cities. Patch-based embedding splits each series into segments along the temporal dimension, which shortens the input sequence and reduces computational and memory overhead for long-term traffic prediction.
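To make the two mechanisms concrete, here is a minimal NumPy sketch, not the authors' implementation. It assumes input of shape (num_nodes, num_steps), a five-minute sampling interval (288 steps per day), non-overlapping patches of 12 steps, and a plain linear projection per patch; the function names and the randomly initialized projection weights are hypothetical.

```python
import numpy as np


def instance_normalize(x, eps=1e-5):
    """Normalize each node's input window with its own mean and std.

    x: (num_nodes, num_steps). No training-set statistics are used, so the
    same code applies unchanged to regions or cities never seen in training.
    """
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True) + eps  # eps guards against flat series
    return (x - mean) / std, mean, std


def denormalize(y, mean, std):
    """Map normalized outputs back to the original scale."""
    return y * std + mean


def patchify(x, patch_len):
    """(num_nodes, num_steps) -> (num_nodes, num_patches, patch_len).

    Non-overlapping patches; leftover steps that do not fill a patch are dropped.
    """
    num_nodes, num_steps = x.shape
    num_patches = num_steps // patch_len
    return x[:, : num_patches * patch_len].reshape(num_nodes, num_patches, patch_len)


def embed_patches(patches, weight, bias):
    """Hypothetical linear projection of each patch to a d_model token."""
    return patches @ weight + bias


# Usage: 4 sensors, one day at an assumed 5-minute interval (288 steps).
rng = np.random.default_rng(0)
x = rng.normal(loc=300.0, scale=50.0, size=(4, 288))
x_norm, mean, std = instance_normalize(x)
patches = patchify(x_norm, patch_len=12)  # 24 one-hour patches per sensor
d_model = 64
weight = rng.normal(scale=0.1, size=(12, d_model))
bias = np.zeros(d_model)
tokens = embed_patches(patches, weight, bias)
print(tokens.shape)  # (4, 24, 64)
```

Returning the per-instance mean and standard deviation lets predictions be mapped back to the original scale. With 12-step patches, a day of input becomes 24 tokens per node instead of 288, which is where the savings for long horizons come from.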

Spatio-Temporal Context Encoding

OpenCity integrates temporal and spatial context cues to capture complex spatio-temporal patterns. Temporal context encoding uses the patch-based segmentation to extract time-of-day and day-of-week features. Spatial context encoding captures the structure of the traffic network, using eigenvalue decomposition to obtain region embeddings that encode its structural information.
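The sketch below illustrates both kinds of context under stated assumptions: the time features use five-minute sampling with step 0 at midnight on the first day of the week, each patch takes the time features of its first step, and the eigenvalue decomposition is applied to the symmetric normalized graph Laplacian, a common choice for spectral position encodings that the text above does not confirm. All names are hypothetical.

```python
import numpy as np

# Assumption: 5-minute sampling, so one day spans 288 steps.
STEPS_PER_DAY = 288


def time_features(step_index, steps_per_day=STEPS_PER_DAY):
    """Return time-of-day in [0, 1) and day-of-week in {0, ..., 6} per step.

    Assumes step 0 falls at midnight on the first day of the week.
    """
    step_index = np.asarray(step_index)
    time_of_day = (step_index % steps_per_day) / steps_per_day
    day_of_week = (step_index // steps_per_day) % 7
    return time_of_day, day_of_week


def patch_time_context(step_index, patch_len, steps_per_day=STEPS_PER_DAY):
    """Hypothetical choice: each patch takes the features of its first step."""
    tod, dow = time_features(step_index, steps_per_day)
    return tod[::patch_len], dow[::patch_len]


def laplacian_embedding(adj, k):
    """Region embeddings from the k smallest non-trivial eigenvectors of
    L = I - D^{-1/2} A D^{-1/2} (symmetric normalized Laplacian)."""
    adj = np.asarray(adj, dtype=float)
    deg = adj.sum(axis=1)
    # Isolated nodes (degree 0) get a zero scaling instead of dividing by zero.
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(np.maximum(deg, 1e-12)), 0.0)
    lap = np.eye(len(adj)) - inv_sqrt[:, None] * adj * inv_sqrt[None, :]
    eigvals, eigvecs = np.linalg.eigh(lap)  # eigh returns ascending eigenvalues
    return eigvals, eigvecs[:, 1 : k + 1]  # drop the first (trivial) eigenvector


# Usage: a ring of 6 regions and two days of 5-minute steps.
ring = np.zeros((6, 6))
for i in range(6):
    ring[i, (i + 1) % 6] = ring[(i + 1) % 6, i] = 1.0
eigvals, region_emb = laplacian_embedding(ring, k=2)
tod, dow = patch_time_context(np.arange(2 * STEPS_PER_DAY), patch_len=12)
print(region_emb.shape, tod.shape)  # (6, 2) (48,)
```

On graphs with repeated eigenvalues, such as the ring in the usage example, the eigenvectors are only determined up to sign and rotation, so the embedding of an individual node is not unique; this sketch makes no attempt to resolve that ambiguity.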

Spatio-Temporal Dependencies Modeling

OpenCity models both periodic and dynamic traffic patterns using a TimeShift Transformer architecture. This allows the model to capture periodic traffic transitions and dynamic dependencies among different time segments. Spatial dependencies are modeled using graph convolutional networks (GCNs), capturing the strong spatial correlations in transportation networks.
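The TimeShift Transformer's internals are not described here, so the following sketch substitutes generic components: single-head scaled dot-product self-attention across the time patches of each node for the temporal part, and one standard GCN layer with symmetric normalization and self-loops for the spatial part, combined with residual connections. Shapes, weight initialization, and all names are illustrative assumptions, not OpenCity's actual architecture.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def temporal_self_attention(h, wq, wk, wv):
    """Single-head scaled dot-product attention over the patch axis.

    h: (num_nodes, num_patches, d). Each node attends across its own time
    patches, mixing information between different time segments.
    """
    q, k, v = h @ wq, h @ wk, h @ wv
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def gcn_layer(h, adj, w):
    """One GCN step: relu(D^-1/2 (A + I) D^-1/2 H W), applied per patch."""
    a_hat = adj + np.eye(len(adj))  # add self-loops
    inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = inv_sqrt[:, None] * a_hat * inv_sqrt[None, :]
    mixed = np.einsum("ij,jpd->ipd", a_norm, h)  # aggregate over neighbors j
    return np.maximum(mixed @ w, 0.0)


def st_block(h, adj, params):
    """Temporal attention, then spatial GCN, each with a residual connection."""
    h = h + temporal_self_attention(h, params["wq"], params["wk"], params["wv"])
    return h + gcn_layer(h, adj, params["wg"])


# Usage: 5 road segments in a chain, 24 patches, 16-dim tokens.
rng = np.random.default_rng(1)
n, p, d = 5, 24, 16
h = rng.normal(size=(n, p, d))
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
params = {name: rng.normal(scale=d ** -0.5, size=(d, d)) for name in ("wq", "wk", "wv", "wg")}
out = st_block(h, adj, params)
print(out.shape)  # (5, 24, 16)
```

Attention lets every time segment of a node attend to every other segment, whereas each GCN layer only mixes a node with its direct neighbors; stacking layers widens the spatial receptive field.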

Experimental Design

Data Sources and Characteristics

The generalization capabilities and predictive performance of OpenCity were evaluated on a diverse set of large-scale, real-world public datasets spanning traffic flow, taxi demand, bicycle trajectories, traffic speed statistics, and traffic index statistics, collected from regions across the United States and China.

Evaluation Scenarios

The evaluation scenarios included:

– Cross-Region Zero-Shot Evaluation: Assessing OpenCity’s ability to generalize to unseen regions within a city.

– Cross-City Zero-Shot Evaluation: Examining the model’s capacity to adapt to completely new cities.

– Cross-Task Zero-Shot Evaluation: Testing the model’s ability to forecast different types of traffic-related data.

– Unified Model Supervised Evaluation: Evaluating the versatility and adaptability of OpenCity within a supervised learning framework.

– Cross-Data Fast Adaptation Evaluation: Measuring how cost-efficiently the model adapts to new traffic datasets.

Results and Analysis

Zero-shot vs. Full-shot Performance

OpenCity showed strong zero-shot capabilities: without any fine-tuning, it outperformed most baseline models trained in the full-shot setting. This highlights the model’s robustness and its effectiveness at learning complex spatio-temporal patterns from large-scale traffic data. OpenCity ranked first or second on several datasets, underscoring its versatility and adaptability.

Exceptional Supervised Performance

In the supervised evaluation, OpenCity remained strong and led on most evaluation metrics. The model extracted universal periodic and dynamic spatio-temporal representations, mitigating the loss of prediction accuracy caused by distribution shifts across time and location.

Model Fast Adaptation Capabilities

OpenCity adapted rapidly to downstream tasks. After efficient fine-tuning, its performance improved substantially and surpassed all compared models. This rapid adaptability underscores OpenCity’s potential as a foundation model for traffic forecasting.

Ablation Study

An ablation study assessed the individual contributions of various components within OpenCity. The study revealed that each component, including dynamic traffic pattern modeling, periodic traffic transition modeling, spatial dependencies modeling, and spatio-temporal context encoding, significantly enhanced the model’s performance.

Scaling Law Investigation

OpenCity’s zero-shot generalization performance improved steadily as both the parameter count and the data scale increased. This suggests that the model can extract useful knowledge from larger datasets and that adding parameters strengthens its learning capacity.

Comparison with Large Spatio-Temporal Pre-trained Models

OpenCity maintained a significant performance advantage over other prominent large spatio-temporal pre-trained models. It improved on both accuracy and efficiency, highlighting its potential as a strong large-scale model for traffic forecasting benchmarks.

Overall Conclusion

OpenCity represents a significant advancement in traffic prediction, delivering accurate zero-shot predictions across multiple traffic forecasting scenarios. By integrating the Transformer architecture with graph neural networks and pre-training on large-scale traffic datasets, OpenCity generalizes exceptionally well. The model handles data with varying distributions and is computationally efficient, paving the way for a powerful, general-purpose traffic prediction solution applicable to diverse urban environments and transportation networks.