Authors:

Junming Wang, Dong Huang, Xiuxian Guan, Zekai Sun, Tianxiang Shen, Fangming Liu, Heming Cui

Paper:

https://arxiv.org/abs/2408.10618

Introduction

Air-ground robots (AGRs), which can both fly and drive, are increasingly used in applications such as surveillance and disaster response. However, navigating these robots through dynamic environments, such as crowded areas, remains difficult. Traditional navigation systems rely on 3D semantic occupancy networks and Euclidean Signed Distance Field (ESDF) maps, and in such scenarios they suffer from low prediction accuracy and high computational overhead.

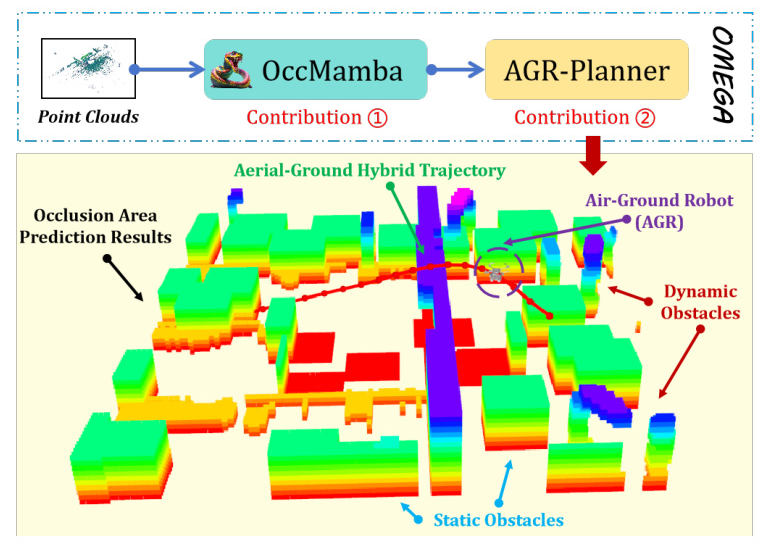

To address these challenges, the paper introduces OMEGA, a navigation system for AGRs that integrates OccMamba, a 3D semantic occupancy network, with AGR-Planner, an efficient path planner. OccMamba separates semantic and occupancy predictions into independent branches, which improves prediction accuracy and reduces computational overhead. AGR-Planner combines kinodynamic A* search with gradient-based trajectory optimization to produce collision-free paths without relying on ESDF maps.

Related Work

Dynamic Navigation Systems for AGRs

Previous research has explored various configurations for AGRs, including passive wheels, cylindrical cages, and multi-limb designs. Notable works include:

- Fan et al.: Utilized the A* algorithm for ground-aerial motion planning, adding energy costs to aerial paths to favor ground paths.

- Zhang et al.: Developed a path planner and controller that rely on ESDF maps; this approach incurs high computational cost and has limited perception in dynamic environments.

- Wang et al.: Introduced AGRNav, the first AGR navigation system with occlusion perception capability, but it struggles in dynamic environments due to its simple perception network and path planner.

3D Semantic Occupancy Prediction

3D semantic occupancy prediction helps a robot interpret partially occluded environments by combining geometric and semantic cues. Research in this area can be categorized into:

- Camera-based approaches: Infer occupancy from images; examples include MonoScene and VoxFormer.

- LiDAR-based approaches: Process LiDAR point clouds of outdoor scenes; examples include S3CNet and JS3C-Net.

- Fusion-based approaches: Combine camera imagery with LiDAR data for better performance, as exemplified by the OpenOccupancy benchmark.

State Space Models (SSMs) and Mamba

State Space Models (SSMs) such as S4 have proven effective in sequence modeling because they capture long-range dependencies. Mamba extends SSMs with a selection mechanism that makes the SSM parameters depend on the input, while still processing long sequences in linear time. Mamba has performed well in various domains, including robot manipulation and point cloud analysis.
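To make the selection mechanism concrete, here is a minimal single-channel sketch of a Mamba-style selective scan. It assumes a diagonal state matrix `A`, zero-order-hold discretization for `A`, and the simplified `delta * B` step for the input term used in Mamba's reference scan; the function and parameter names are hypothetical, and real implementations process many channels with a hardware-aware parallel scan rather than a Python loop.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, A, w_delta, b_delta, w_B, w_C):
    """Single-channel selective SSM scan in O(len(xs) * len(A)) time.

    xs: input sequence (floats)
    A: diagonal state matrix entries (negative floats), one per state
    w_delta, b_delta: produce the input-dependent step size delta_t
    w_B, w_C: produce the input-dependent B_t and C_t (one weight per state)
    """
    h = [0.0] * len(A)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # step size depends on the input
        B = [wb * x for wb in w_B]  # B_t depends on the input
        C = [wc * x for wc in w_C]  # C_t depends on the input
        for i, a in enumerate(A):
            a_bar = math.exp(delta * a)  # zero-order hold for A
            h[i] = a_bar * h[i] + delta * B[i] * x  # simplified (Euler) step for B
        ys.append(sum(c * hi for c, hi in zip(C, h)))
    return ys


ys = selective_scan([1.0, 0.5, -0.2], A=[-1.0, -2.0], w_delta=1.0, b_delta=0.0,
                    w_B=[1.0, 0.5], w_C=[0.3, 1.0])
```

Because `delta`, `B`, and `C` are recomputed from each input, the model can decide per token how much of its state to keep, while the loop still runs in time linear in the sequence length.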

Research Methodology

OccMamba Network Structure

OccMamba features three branches: semantic, geometric, and BEV fusion. The semantic and geometric branches are supervised by multi-level auxiliary losses, which are removed during inference. Multi-scale features generated by these branches are fused in the BEV space to reduce computational overhead.

Semantic Branch

The semantic branch consists of a voxelization layer and three Sem-Mamba encoder blocks. The input point cloud is converted to a multi-scale voxel representation, and the points in each voxel are aggregated by max pooling into a single feature vector per voxel. These features are projected into the BEV space, resulting in dense BEV features.
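The voxelize, max-pool, and project pipeline can be sketched in plain Python as follows. This is an illustration under assumptions: each point carries a small feature list, the BEV projection takes a max over the height axis, empty BEV cells are filled with zeros, and the helper names (`voxelize_max`, `to_dense_bev`, `multi_scale_bev`) and voxel sizes are hypothetical rather than taken from the OccMamba code.

```python
import math


def voxelize_max(points, voxel_size):
    """Group points into voxels and max-pool their features.

    points: list of (x, y, z, features), features being a list of floats.
    Returns {(i, j, k): pooled_feature_list}.
    """
    voxels = {}
    for x, y, z, feat in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        if key in voxels:
            voxels[key] = [max(a, b) for a, b in zip(voxels[key], feat)]
        else:
            voxels[key] = list(feat)
    return voxels


def to_dense_bev(voxels, grid_w, grid_h, n_feat):
    """Project sparse voxel features to a dense BEV grid (max over height)."""
    bev = [[None] * grid_w for _ in range(grid_h)]  # bev[j][i]
    for (i, j, _k), feat in voxels.items():
        if 0 <= i < grid_w and 0 <= j < grid_h:
            cell = bev[j][i]
            bev[j][i] = list(feat) if cell is None else [max(a, b) for a, b in zip(cell, feat)]
    # Empty cells become zero vectors so the grid is dense
    return [[cell if cell is not None else [0.0] * n_feat for cell in row] for row in bev]


def multi_scale_bev(points, voxel_sizes, extent, n_feat):
    """Dense BEV maps at several voxel sizes covering [0, extent) in x and y."""
    out = {}
    for s in voxel_sizes:
        n = math.ceil(extent / s)
        out[s] = to_dense_bev(voxelize_max(points, s), n, n, n_feat)
    return out
```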

Sem-Mamba Block

The Sem-Mamba block uses Mamba's linear-time modeling of long sequences to improve semantic representation learning: it operates on the dense BEV features and enriches them with long-range dependencies.
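A minimal sketch of the Sem-Mamba idea, assuming the dense BEV grid is flattened row by row into a token sequence, each channel is mixed by a causal scalar-state selective scan, and the result is reshaped back and added to the input as a residual. The scan order, the scalar state, the residual connection, and all parameter values are assumptions for illustration; the actual block uses learned Mamba layers over many channels.

```python
import math


def scan_1d(xs, a=-1.0, w_delta=1.0, b=1.0, c=1.0):
    """Causal scalar-state selective scan; the step size depends on each input."""
    h, ys = 0.0, []
    for x in xs:
        z = w_delta * x
        delta = max(z, 0.0) + math.log1p(math.exp(-abs(z)))  # softplus
        h = math.exp(delta * a) * h + delta * b * x
        ys.append(c * h)
    return ys


def sem_mamba_block(bev):
    """bev[row][col] is a list of channel values; returns features of the same shape."""
    rows, cols, n_feat = len(bev), len(bev[0]), len(bev[0][0])
    tokens = [bev[r][col] for r in range(rows) for col in range(cols)]  # row-major flatten
    # Scan each channel independently along the flattened token sequence
    mixed = [scan_1d([t[ch] for t in tokens]) for ch in range(n_feat)]
    out = []
    for r in range(rows):
        row = []
        for col in range(cols):
            idx = r * cols + col
            # Residual connection: input feature plus sequence-mixed feature
            row.append([tokens[idx][ch] + mixed[ch][idx] for ch in range(n_feat)])
        out.append(row)
    return out
```

Because the scan runs over the whole flattened grid, a feature in the first cell can still influence the last cell, which is the long-range dependency the block is meant to capture.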

Geometry Branch and Geo-Mamba Block

The geometric branch processes voxels obtained from the point cloud using Geo-Mamba blocks, each of which combines a residual block, a Mamba module, and a BEV projection module. This branch generates dense BEV features suitable for fusion with the semantic features.
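The Geo-Mamba composition (residual block, then sequence mixing, then BEV projection) might look like the following toy version on a single-channel voxel grid `vox[z][y][x]`. The per-voxel residual unit stands in for real 3D convolutions, the scalar scan stands in for a learned Mamba module, and folding the height axis into channels is one common BEV projection choice; all three are assumptions, not the paper's exact design.

```python
import math


def residual_block(vox, w=0.5, b=0.0):
    """Per-voxel residual unit x + ReLU(w * x + b) (stand-in for 3D convolutions)."""
    return [[[v + max(0.0, w * v + b) for v in row] for row in plane] for plane in vox]


def scan_1d(xs, a=-1.0, w_delta=1.0):
    """Causal scalar-state selective scan (stand-in for a Mamba module)."""
    h, ys = 0.0, []
    for x in xs:
        z = w_delta * x
        delta = max(z, 0.0) + math.log1p(math.exp(-abs(z)))  # softplus
        h = math.exp(delta * a) * h + delta * x
        ys.append(h)
    return ys


def mamba_mix(vox):
    """Flatten the grid in (z, y, x) order, scan, reshape back, and add a residual."""
    nz, ny, nx = len(vox), len(vox[0]), len(vox[0][0])
    flat = [v for plane in vox for row in plane for v in row]
    it = iter(f + m for f, m in zip(flat, scan_1d(flat)))
    return [[[next(it) for _ in range(nx)] for _ in range(ny)] for _ in range(nz)]


def bev_projection(vox):
    """Fold the height axis into channels: bev[y][x] = [vox[z][y][x] for each z]."""
    nz, ny, nx = len(vox), len(vox[0]), len(vox[0][0])
    return [[[vox[z][y][x] for z in range(nz)] for x in range(nx)] for y in range(ny)]


def geo_mamba_block(vox):
    return bev_projection(mamba_mix(residual_block(vox)))
```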

BEV Feature Fusion Branch

The BEV fusion branch adopts a U-Net architecture with 2D convolutions. It fuses semantic and geometric features at multiple scales and produces the final 3D semantic occupancy prediction.
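To illustrate multi-scale fusion in a U-Net layout, the sketch below works on single-channel BEV maps at full, 1/2, and 1/4 resolution. It assumes fusion by element-wise addition, skip connections by addition rather than concatenation, and a fixed placeholder 3x3 kernel (`IDENTITY`) instead of learned weights; the function names are hypothetical.

```python
def conv3x3(grid, kernel):
    """Same-padded 3x3 convolution (cross-correlation) followed by ReLU."""
    h, w = len(grid), len(grid[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            s = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < h and 0 <= cc < w:
                        s += kernel[dr + 1][dc + 1] * grid[rr][cc]
            out[r][c] = max(0.0, s)
    return out


def pool2(grid):
    """2x2 max pooling (assumes even height and width)."""
    return [[max(grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1])
             for c in range(0, len(grid[0]), 2)] for r in range(0, len(grid), 2)]


def up2(grid):
    """Nearest-neighbour 2x upsampling."""
    return [[v for v in row for _ in (0, 1)] for row in grid for _ in (0, 1)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


IDENTITY = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]  # hypothetical placeholder weights


def unet_fuse(sem_scales, geo_scales, kernel=IDENTITY):
    """sem_scales / geo_scales: BEV maps ordered from full to coarsest resolution."""
    skips, x = [], None
    # Encoder: fuse semantic and geometric maps at each scale, then downsample
    for s, g in zip(sem_scales, geo_scales):
        fused = add(s, g)
        x = conv3x3(fused if x is None else add(fused, pool2(x)), kernel)
        skips.append(x)
    # Decoder: upsample and add the skip connection from the matching scale
    for skip in reversed(skips[:-1]):
        x = conv3x3(add(up2(x), skip), kernel)
    return x
```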

Optimization

The overall objective function for training OccMamba includes semantic loss, completion loss, and BEV loss. The network is trained end-to-end to optimize these losses.
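A hedged sketch of how the three terms could be combined into one training objective. It assumes softmax cross-entropy for the semantic and BEV terms, binary cross-entropy for completion (occupied vs. empty), and hypothetical weights `w_sem`, `w_com`, `w_bev` that default to 1.0; the paper's exact loss forms and weights may differ.

```python
import math


def cross_entropy(logits_list, labels):
    """Mean softmax cross-entropy; logits_list[i] holds the class scores of sample i."""
    total = 0.0
    for logits, y in zip(logits_list, labels):
        m = max(logits)
        log_z = m + math.log(sum(math.exp(v - m) for v in logits))  # log-sum-exp
        total += log_z - logits[y]
    return total / len(labels)


def binary_cross_entropy(probs, targets, eps=1e-7):
    """Mean binary cross-entropy between occupancy probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1 - p)
    return total / len(targets)


def total_loss(sem, completion, bev, w_sem=1.0, w_com=1.0, w_bev=1.0):
    """sem and bev are (logits_list, labels); completion is (probs, targets)."""
    l_sem = cross_entropy(*sem)
    l_com = binary_cross_entropy(*completion)
    l_bev = cross_entropy(*bev)
    return w_sem * l_sem + w_com * l_com + w_bev * l_bev
```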

Experimental Design

Evaluation Setup

- 3D Semantic Occupancy Network: OccMamba was trained on the SemanticKITTI dataset using an NVIDIA RTX 3090 GPU. The dataset focuses on semantic occupancy prediction with LiDAR point clouds and camera images.

- Simulation Experiment: Conducted on a laptop with an NVIDIA RTX 4060 GPU in a 20 m × 20 m × 5 m simulated environment. The experiments involved 200 trials with varied obstacle placements.

- Real-world Experiment: Conducted on a custom AGR platform equipped with a RealSense D435i for point cloud acquisition, a T265 camera for visual-inertial odometry, and a Jetson Xavier NX for real-time computation.

AGR Motion Planner

Kinodynamic Hybrid A* Path Searching

AGR-Planner generates an initial trajectory, identifies the segments that collide with obstacles, and uses the kinodynamic A* algorithm to create guidance trajectory segments for them. The search favors ground trajectories, which improves energy efficiency.
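The search step can be illustrated with a simplified A* on a two-layer grid, where layer 0 is ground and layer 1 is air. This is plain geometric A*, not kinodynamic A*: it has no velocity or acceleration states and no motion primitives. Aerial moves pay a hypothetical `air_penalty` and each takeoff or landing pays a hypothetical `takeoff_cost`, so the search drives when it can and flies only when the ground is blocked. It also assumes obstacles are low enough to fly over.

```python
import heapq


def plan_air_ground(rows, cols, blocked, start, goal, air_penalty=2.0, takeoff_cost=3.0):
    """A* over nodes (row, col, layer); layer 0 = ground, 1 = air.

    blocked: set of (row, col) cells that are impassable on the ground.
    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    def heuristic(node):
        # Manhattan distance is admissible: every planar move costs at least 1
        return abs(node[0] - goal[0]) + abs(node[1] - goal[1])

    def neighbors(node):
        r, c, layer = node
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (layer == 1 or (nr, nc) not in blocked):
                yield (nr, nc, layer), 1.0 + (air_penalty if layer == 1 else 0.0)
        if layer == 0:
            yield (r, c, 1), takeoff_cost  # take off
        elif (r, c) not in blocked:
            yield (r, c, 0), takeoff_cost  # land on a free cell

    open_heap = [(heuristic(start), 0.0, start)]
    g = {start: 0.0}
    parent = {start: None}
    while open_heap:
        _, cost, node = heapq.heappop(open_heap)
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1], cost
        if cost > g[node]:
            continue  # stale heap entry
        for nxt, step in neighbors(node):
            new_cost = cost + step
            if new_cost < g.get(nxt, float("inf")):
                g[nxt] = new_cost
                parent[nxt] = node
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None, float("inf")
```

With `rows=1, cols=5` and `blocked={(0, 2)}`, the cheapest route from `(0, 0, 0)` to `(0, 4, 0)` drives one cell, takes off, flies over the obstacle, lands, and drives the last cell, for a total cost of 14.0 with the default parameters.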

Gradient-Based B-spline Trajectory Optimization

The trajectory is parameterized as a uniform B-spline and optimized with costs for collision avoidance, smoothness, dynamic feasibility, and terminal progress. An additional curvature cost accounts for the non-holonomic constraints of ground driving.
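A minimal sketch of gradient-based B-spline optimization in 2D, assuming a uniform cubic B-spline, a smoothness cost equal to the sum of squared third differences of control points (a jerk proxy for uniform knots), and a collision cost against a single circular obstacle with a known center. The feasibility, terminal-progress, and curvature terms are omitted, and the weights, learning rate, and function names are hypothetical. The obstacle distance here is analytic, so no ESDF is needed for this toy case; it is not AGR-Planner's actual collision term.

```python
import math


def bspline_point(ctrl, i, u):
    """Point on segment i (0 <= u <= 1) of a uniform cubic B-spline."""
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6
    b3 = u**3 / 6
    q0, q1, q2, q3 = ctrl[i:i + 4]
    return tuple(b0 * a + b1 * b + b2 * c + b3 * d for a, b, c, d in zip(q0, q1, q2, q3))


def cost_and_grad(ctrl, obstacle, radius, w_smooth=1.0, w_coll=10.0):
    n, dim = len(ctrl), len(ctrl[0])
    grad = [[0.0] * dim for _ in range(n)]
    cost = 0.0
    # Smoothness: squared third differences of the control points
    for i in range(n - 3):
        r = [ctrl[i + 3][k] - 3 * ctrl[i + 2][k] + 3 * ctrl[i + 1][k] - ctrl[i][k] for k in range(dim)]
        cost += w_smooth * sum(x * x for x in r)
        for j, coef in ((i, -2.0), (i + 1, 6.0), (i + 2, -6.0), (i + 3, 2.0)):
            for k in range(dim):
                grad[j][k] += w_smooth * coef * r[k]
    # Collision: penalize control points closer than `radius` to the obstacle center
    for j, q in enumerate(ctrl):
        diff = [q[k] - obstacle[k] for k in range(dim)]
        dist = math.sqrt(sum(x * x for x in diff))
        pen = radius - dist
        if pen > 0 and dist > 1e-9:
            cost += w_coll * pen * pen
            for k in range(dim):
                grad[j][k] += w_coll * (-2.0 * pen) * diff[k] / dist
    return cost, grad


def optimize(ctrl, obstacle, radius, steps=500, lr=0.01, fixed=3):
    """Plain gradient descent; the first and last `fixed` control points stay put."""
    ctrl = [list(q) for q in ctrl]
    for _ in range(steps):
        _, grad = cost_and_grad(ctrl, obstacle, radius)
        for j in range(fixed, len(ctrl) - fixed):
            for k in range(len(ctrl[j])):
                ctrl[j][k] -= lr * grad[j][k]
    return ctrl
```

Keeping the first and last three control points fixed preserves the start and end states of the cubic spline while the interior points bend away from the obstacle.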

Results and Analysis

OccMamba Performance

OccMamba achieved state-of-the-art performance on the SemanticKITTI dataset, with a completion IoU of 59.9% and an mIoU of 25.0%. It is also efficient, with an inference speed of 22.1 FPS and a compact network architecture.
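For reference, the two reported metrics can be computed from flattened per-voxel labels as sketched below. It assumes label 0 means empty, completion IoU treats every non-empty label as occupied, and mIoU averages per-class IoU over the non-empty classes, skipping classes absent from both prediction and ground truth. Real SemanticKITTI evaluation also ignores invalid voxels and accumulates counts over the whole split, which this sketch does not do.

```python
def completion_iou(pred, gt, empty=0):
    """IoU of occupied (non-empty) voxels, ignoring semantic class."""
    tp = fp = fn = 0
    for p, g in zip(pred, gt):
        po, go = p != empty, g != empty
        tp += po and go
        fp += po and not go
        fn += go and not po
    denom = tp + fp + fn
    return tp / denom if denom else 0.0


def mean_iou(pred, gt, num_classes, empty=0):
    """Mean per-class IoU over the non-empty classes."""
    ious = []
    for c in range(num_classes):
        if c == empty:
            continue
        tp = sum(1 for p, g in zip(pred, gt) if p == c and g == c)
        fp = sum(1 for p, g in zip(pred, gt) if p == c and g != c)
        fn = sum(1 for p, g in zip(pred, gt) if p != c and g == c)
        if tp + fp + fn:
            ious.append(tp / (tp + fp + fn))
    return sum(ious) / len(ious) if ious else 0.0
```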

Simulated Air-Ground Robot Navigation

OMEGA achieved a 98% planning success rate in dynamic environments and significantly reduced planning time compared to ESDF-reliant baselines. The combination of OccMamba's detailed environmental perception and AGR-Planner's efficient path computation supports reliable navigation.

Real-world Air-Ground Robot Navigation

OMEGA achieved a 96% average success rate across five dynamic scenarios, with lower average energy consumption than competing systems. Its ability to predict obstacle distributions and generate multiple candidate paths improves both energy efficiency and traversal time.

Overall Conclusion

OMEGA represents a significant advancement in AGR navigation systems, addressing the challenges of dynamic environments with its efficient and accurate 3D semantic occupancy network, OccMamba, and its energy-efficient path planner, AGR-Planner. Extensive testing demonstrates OMEGA’s superior performance in both simulated and real-world scenarios, making it a promising solution for autonomous navigation in complex and dynamic environments.