Authors:

Rafael M. Mamede, Pedro C. Neto, Ana F. Sequeira

Paper:

https://arxiv.org/abs/2408.10175

Introduction

The rapid advancement of machine learning-based biometric applications, particularly facial recognition systems, has brought to light significant concerns regarding their fairness, safety, and trustworthiness. Initial research primarily focused on enhancing recognition performance, but recent studies have shifted towards understanding and mitigating biases within these systems. This shift is driven by incidents of misclassification in critical scenarios, such as criminal trials, and the need for transparency as mandated by regulations like the EU General Data Protection Regulation (GDPR).

This study investigates the impact of occlusions on the fairness of facial recognition systems, with a particular focus on demographic biases. Using the Racial Faces in the Wild (RFW) dataset with synthetically added realistic occlusions, the study evaluates face recognition models trained on the BUPT-Balanced and BUPT-GlobalFace datasets. It also introduces a novel metric, the Face Occlusion Impact Ratio (FOIR), which measures the share of pixels important to a model's decision that fall within occluded regions, making it possible to compare the effect of occlusions across demographic groups.

Related Work

The issue of bias in facial recognition (FR) models has been extensively studied, revealing significant demographic differentials. Studies have shown that these algorithms are generally less accurate for women, older adults, and individuals of African and Asian descent compared to men and individuals of European descent. Research has also highlighted the influence of other soft biometric characteristics, such as face shape and facial hair, on the verification performance of FR systems.

Recent advances in explainable artificial intelligence (xAI) tools have made it easier to detect, and potentially mitigate, biases in FR systems. However, these tools can themselves be influenced by demographic characteristics, so their effectiveness varies across groups. Despite these advances, the impact of bias in occluded face recognition (OCFR) remains underexplored. This study aims to close that gap by combining bias analysis in OCFR with xAI tools that explain model predictions on occluded data.

Research Methodology

Experimental Design

The study’s experimental design involves the following steps:

- Generate Realistic Synthetic Occlusions: Synthetic occlusions are created using two protocols from the 2022 Competition on Occluded Face Recognition. These protocols apply affine transformations to occlusion images (e.g., masks, sunglasses) so that they fit the corresponding facial landmarks. Protocol 1 adds a single occlusion, while Protocol 4 adds one or two occlusions, reflecting different degrees of severity.

- Evaluate Model Performance and Fairness: The baseline performance of the FR models is assessed on unoccluded image pairs by measuring accuracy, False Match Rate (FMR), and False Non-Match Rate (FNMR). Fairness metrics are also computed to establish the baseline disparities. The models are then evaluated on occluded image pairs to identify the changes in performance and fairness caused by the occlusions.

- Utilize Explanation Methods: The xSSAB method is used to identify the regions most important for verification in each demographic group. The Face Occlusion Impact Ratio (FOIR) is then calculated as the percentage of important pixels that fall within occluded areas. Differences in FOIR between demographic groups are tested for statistical significance to characterize how model behavior differs across them.
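The occlusion step can be illustrated with a small NumPy sketch. It is not the competition toolkit: we assume each occluder is an RGBA image with a few hypothetical anchor points (`src_pts`) that should land on given facial landmarks (`dst_pts`). `fit_affine` estimates the affine map by least squares, and `overlay_occluder` pastes the warped occluder using inverse nearest-neighbour sampling and alpha blending. The returned boolean mask marks occluded pixels, the kind of region an FOIR-style computation needs.

```python
import numpy as np


def fit_affine(src_pts, dst_pts):
    """Least-squares 2x3 affine matrix A such that dst ~= A @ [x, y, 1]."""
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    X = np.hstack([src, np.ones((len(src), 1))])  # (n, 3) homogeneous points
    sol, *_ = np.linalg.lstsq(X, dst, rcond=None)  # (3, 2)
    return sol.T  # (2, 3)


def overlay_occluder(face, occluder_rgba, A):
    """Warp an RGBA occluder onto an HxWx3 face image.

    Returns the composited image and a boolean mask of occluded pixels.
    Uses inverse mapping (face pixel -> occluder pixel) with
    nearest-neighbour sampling, then alpha-blends the occluder in.
    """
    h, w = face.shape[:2]
    full = np.vstack([A, [0.0, 0.0, 1.0]])  # 3x3 homogeneous form
    inv = np.linalg.inv(full)[:2]  # maps face coords back to occluder coords
    ys, xs = np.mgrid[0:h, 0:w]
    coords = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # (3, h*w)
    sx, sy = np.rint(inv @ coords).astype(int)
    oh, ow = occluder_rgba.shape[:2]
    valid = (sx >= 0) & (sx < ow) & (sy >= 0) & (sy < oh)

    out = face.astype(float).copy()
    flat = out.reshape(-1, face.shape[2])  # view into `out`
    src = occluder_rgba[sy[valid], sx[valid]].astype(float)
    alpha = src[:, 3:4] / 255.0
    flat[valid] = alpha * src[:, :3] + (1.0 - alpha) * flat[valid]

    mask = np.zeros(h * w, dtype=bool)
    mask[valid] = occluder_rgba[sy[valid], sx[valid], 3] > 0
    return out.reshape(face.shape).astype(face.dtype), mask.reshape(h, w)
```

For example, mapping the anchors (0, 0), (3, 0), (0, 3) onto (2, 2), (5, 2), (2, 5) yields a pure translation by (2, 2), so a fully opaque 4x4 occluder covers a 4x4 patch of the face. A production pipeline would more likely use bilinear interpolation and the landmark sets defined by each protocol.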

Models

The study considers models built on two architectures, ResNet34 and ResNet50. These models are trained on the BUPT-Balanced and BUPT-GlobalFace datasets, which were designed for studying bias in FR models. Training uses the ElasticArcFace loss function together with data augmentation and further optimization techniques to improve model performance.
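For readers unfamiliar with ElasticArcFace: it extends ArcFace's additive angular margin by drawing the margin for each sample from a normal distribution instead of fixing it. The NumPy sketch below computes the resulting logits and a softmax cross-entropy on top of them. The defaults `s=64`, `m=0.5`, `sigma=0.05` and the function names are our assumptions, not the study's reported training settings, and edge cases (such as the angle plus margin exceeding pi) are not handled.

```python
import numpy as np


def elastic_arcface_logits(embeddings, weights, labels, s=64.0, m=0.5,
                           sigma=0.05, rng=None):
    """Scaled logits with a per-sample angular margin drawn from N(m, sigma).

    embeddings: (n, d) features; weights: (d, n_classes) class centres.
    """
    rng = np.random.default_rng() if rng is None else rng
    emb = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = weights / np.linalg.norm(weights, axis=0, keepdims=True)
    cos = np.clip(emb @ w, -1.0, 1.0)  # cosine similarity to each class centre
    theta = np.arccos(cos)
    labels = np.asarray(labels)
    margins = rng.normal(m, sigma, size=len(labels))  # one random margin per sample
    rows = np.arange(len(labels))
    theta[rows, labels] += margins  # penalise only the target-class angle
    return s * np.cos(theta)


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy, computed with the log-sum-exp trick for stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    log_probs = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())
```

Setting `sigma=0` recovers a fixed ArcFace-style margin; the random margin is what distinguishes the elastic variant.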

Group Fairness Metrics

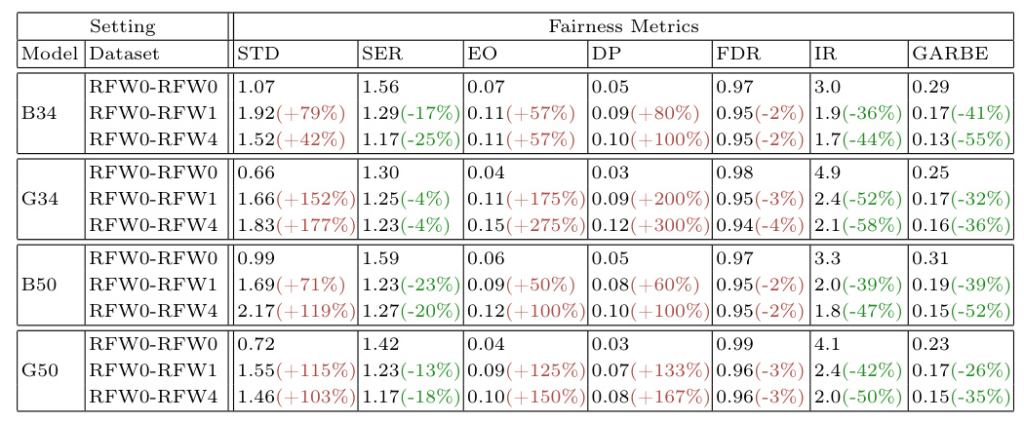

The study employs several fairness metrics to quantify inequalities in model performance across demographics:

- STD of Accuracy (STD): Measures the standard deviation of verification accuracy across protected groups.

- Skewed Error Ratio (SER): Measures the discrepancy in predictive performance between groups as the ratio of the highest to the lowest group error rate.

- Fairness Discrepancy Rate (FDR): Combines the largest between-group differences in FMR and FNMR at a given decision threshold.

- Inequity Rate (IR): Uses ratios between the highest and lowest group FMR and FNMR to assess fairness.

- Gini Aggregation Rate for Biometric Equitability (GARBE): Based on the Gini coefficient of FMR and FNMR across groups, measures fairness in biometric applications.

- Demographic Parity (DP): Assesses whether the model's selection rate is independent of sensitive group membership.

- Equalized Odds (EO): Evaluates whether the model's true-positive and false-positive rates are equal across sensitive groups.
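Several of these metrics fit in a few lines. The sketch below follows the definitions as they are commonly stated in the literature: SER as the ratio of the highest to the lowest group error rate, FDR and IR with a weight α between the FMR and FNMR terms, and GARBE as a weighted sum of Gini coefficients. It also computes group-level FMR and FNMR from genuine and impostor similarity scores at a threshold τ. The choice α = 0.5, the population standard deviation, the "score ≥ τ means match" convention, and all helper names are assumptions; DP and EO are omitted.

```python
import numpy as np


def fmr_fnmr(genuine, impostor, tau):
    """FMR: share of impostor scores >= tau; FNMR: share of genuine scores < tau."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    return float(np.mean(impostor >= tau)), float(np.mean(genuine < tau))


def std_acc(acc):
    """Population standard deviation of per-group accuracy (dict group -> acc)."""
    return float(np.std(list(acc.values())))


def ser(acc):
    """Skewed Error Ratio: highest group error divided by lowest group error."""
    err = [1.0 - a for a in acc.values()]
    return max(err) / min(err)


def fdr(fmr, fnmr, alpha=0.5):
    """Fairness Discrepancy Rate; 1.0 means no between-group difference."""
    a = max(fmr.values()) - min(fmr.values())  # largest pairwise |FMR_i - FMR_j|
    b = max(fnmr.values()) - min(fnmr.values())  # largest pairwise |FNMR_i - FNMR_j|
    return 1.0 - (alpha * a + (1.0 - alpha) * b)


def ir(fmr, fnmr, alpha=0.5):
    """Inequity Rate: weighted geometric mean of max/min FMR and FNMR ratios."""
    r_fmr = max(fmr.values()) / min(fmr.values())
    r_fnmr = max(fnmr.values()) / min(fnmr.values())
    return r_fmr ** alpha * r_fnmr ** (1.0 - alpha)


def gini(values):
    """Gini coefficient with the n / (n - 1) small-sample correction."""
    x = np.asarray(values, dtype=float)
    n = x.size
    pairwise = np.abs(x[:, None] - x[None, :]).sum()
    return float((n / (n - 1)) * pairwise / (2 * n ** 2 * x.mean()))


def garbe(fmr, fnmr, alpha=0.5):
    """GARBE: weighted sum of the Gini coefficients of group FMR and FNMR."""
    return alpha * gini(list(fmr.values())) + (1.0 - alpha) * gini(list(fnmr.values()))
```

In use, each dictionary would be keyed by the four RFW groups (African, Asian, Caucasian, Indian), with FMR and FNMR obtained from `fmr_fnmr` on that group's pairs. Note the direction of each metric: higher FDR means fairer, while higher STD, SER, IR, and GARBE mean less fair.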

Experimental Results

Assessing Model Performance and Fairness

The experimental results reveal significant findings regarding model performance and fairness in occluded scenarios:

- Equalized Odds (EO) and Demographic Parity (DP): Both metrics increase sharply in occluded scenarios, indicating greater bias in the reliability of predictions.

- Fairness Discrepancy Rate (FDR): Decreases slightly in occluded scenarios (a lower FDR means less fair), with larger FMR disparities appearing as occlusion severity increases.

- Standard Deviation (STD): Increases in both occluded scenarios, with African faces showing the largest drop in accuracy.

- Skewed Error Ratio (SER), Inequity Rate (IR), and GARBE: Suggest improved fairness in occluded scenarios, but this apparent improvement is driven mainly by the overall rise in error rates.

Fairness Increase of Ratio-based Metrics in Occluded Scenarios

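The decrease in SER, IR, and GARBE suggests that fairness improves in occluded scenarios. However, this is mainly an effect of the large overall increase in errors: SER and IR are ratios of error rates, and GARBE's Gini coefficients are normalized by the mean error rate, so a broad rise in error rates shrinks all three even when the absolute gaps between groups widen. In fact, the dispersion of error rates also increases, indicating less fair results under occlusion. Future work should refine these metrics so that a lower value reflects a genuine improvement in fairness.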
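To see how a ratio can fall while the gap widens, consider two hypothetical groups with error rates $e_1$ and $e_2$ (illustrative numbers, not results from the study), where $\sigma$ is the population standard deviation of the two error rates:

```latex
\begin{aligned}
\text{unoccluded: } & e_1 = 0.01,\; e_2 = 0.02
  &&\Rightarrow\; \mathrm{SER} = \tfrac{0.02}{0.01} = 2.0,
  \quad \sigma = \tfrac{|e_2 - e_1|}{2} = 0.005 \\
\text{occluded: } & e_1 = 0.10,\; e_2 = 0.15
  &&\Rightarrow\; \mathrm{SER} = \tfrac{0.15}{0.10} = 1.5,
  \quad \sigma = \tfrac{|e_2 - e_1|}{2} = 0.025
\end{aligned}
```

SER drops from 2.0 to 1.5, which reads as improved fairness, while the absolute gap grows from 1 to 5 percentage points and the standard deviation grows fivefold.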

Pixel Attribution in Occluded Scenarios

The study introduces the FOIR metric to analyze how much occluded pixels contribute to model predictions. The results show significant differences in FOIR across ethnicities in False Non-Match (FNM) cases, with occluded pixels carrying more importance for African faces. This coincides with African individuals showing the largest increase in FNMR.
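A minimal sketch of an FOIR-style computation follows. FOIR is described as the percentage of important pixels that fall within occluded areas, but how "important" is decided is not given here. We therefore assume, hypothetically, that the top `top_fraction` of pixels ranked by attribution score count as important. `attribution` stands in for an xSSAB map and `occlusion_mask` for a boolean map of occluded pixels. The function name and parameters are illustrative, not the authors' code.

```python
import numpy as np


def foir(attribution, occlusion_mask, top_fraction=0.1):
    """Percentage of the most important pixels that lie on the occluder.

    attribution: 2-D map of per-pixel importance (higher = more important).
    occlusion_mask: boolean map of the same shape, True where occluded.
    top_fraction: assumed share of pixels treated as "important".
    """
    attr = np.asarray(attribution, dtype=float).ravel()
    occ = np.asarray(occlusion_mask, dtype=bool).ravel()
    k = max(1, int(round(top_fraction * attr.size)))
    top = np.argsort(attr)[-k:]  # indices of the k highest attribution scores
    return 100.0 * float(occ[top].mean())
```

Averaging such values over the False Non-Match pairs of each ethnicity would give per-group FOIR figures that can then be compared statistically.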

Overall Conclusion

The study highlights the significant impact of occlusions on the fairness of facial recognition systems. Occlusions lead to a pronounced increase in error rates, with the impact being unequally distributed across ethnicities. The FOIR metric provides a valuable tool for quantifying these biases, revealing that occlusions disproportionately affect certain demographic groups.

Addressing fairness in occluded scenarios is crucial for developing robust and equitable facial recognition systems. Future research should focus on enhancing training protocols, designing more robust model architectures, and implementing comprehensive fairness evaluations that account for real-world conditions, including occlusions. By ensuring fair performance under occluded conditions, we can build trust in these technologies and ensure they benefit all users equitably.