Authors:

Yuankun Xie, Chenxu Xiong, Xiaopeng Wang, Zhiyong Wang, Yi Lu, Xin Qi, Ruibo Fu, Yukun Liu, Zhengqi Wen, Jianhua Tao, Guanjun Li, Long Ye

Paper:

https://arxiv.org/abs/2408.10853

Evaluating the Effectiveness of Current Deepfake Audio Detection Models on ALM-based Deepfake Audio

Introduction

The rapid advancements in large language models and audio neural codecs have significantly lowered the barrier to creating deepfake audio. These advancements have led to the emergence of Audio Language Models (ALMs), which can generate highly realistic and diverse types of deepfake audio, posing severe threats to society. The ability to generate deepfake audio that is indistinguishable from real audio has raised concerns about fraud, misleading public opinion, and privacy violations. This study investigates the effectiveness of current countermeasures (CMs) against ALM-based deepfake audio by evaluating the performance of the latest detection models on various types of ALM-generated audio.

Related Work

Advances in Audio Deepfake Detection

The field of audio deepfake detection has seen significant progress, particularly through the ASVspoof competition series. Recent research has shifted focus from improving in-distribution performance to enhancing generalization to wild domains. Notable works include:

- Self-Supervised Learning for Front-End Fine-Tuning: Tak et al. proposed a CM based on self-supervised learning for front-end fine-tuning and AASIST for backend processing, demonstrating remarkable generalization capabilities.

- Domain-Invariant Representations: Xie et al. used three source domains for co-training and learned self-supervised domain-invariant representations to improve CM generalization.

- Stable Learning for Distribution Shifts: Wang et al. proposed a CM based on stable learning to address distribution shifts across different domains.

- Reconstruction Learning Method: Wang et al. utilized a masked autoencoder and proposed a reconstruction learning method focused on real data to enhance the model’s ability to detect unknown forgery patterns.

Despite these advancements, one question remains: do current deepfake audio detection models effectively detect ALM-based deepfake audio?

Research Methodology

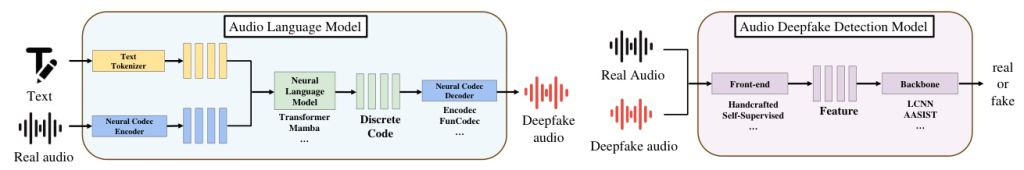

ALM-based Deepfake Audio Generation Pipeline

ALMs have rapidly developed due to advancements in both language models and neural codec models. The typical generation pipeline involves converting the audio waveform into discrete code representations through the encoder part of the neural codec. The language model decoder then performs contextual learning, and the non-autoregressive neural codec decoder generates the audio waveform from the discrete codes.
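The three stages described above (codec encoder, language model, codec decoder) can be illustrated with a toy sketch. Everything here is a stand-in chosen for illustration and is not taken from any ALM: the random codebook, the frame size, the nearest-codeword `encode`, the bigram-based `continue_codes`, and the lookup-table `decode` are all hypothetical simplifications of what are neural networks in a real system.

```python
import random

FRAME = 4  # samples per frame (toy value, hypothetical)

def make_codebook(size, frame=FRAME, seed=0):
    """Random codebook standing in for a trained neural codec quantizer."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(frame)] for _ in range(size)]

def encode(waveform, codebook, frame=FRAME):
    """Codec encoder stand-in: map each frame to its nearest codeword index."""
    codes = []
    for start in range(0, len(waveform) - frame + 1, frame):
        chunk = waveform[start:start + frame]
        dists = [sum((a - b) ** 2 for a, b in zip(chunk, cw)) for cw in codebook]
        codes.append(dists.index(min(dists)))
    return codes

def continue_codes(prompt_codes, n_new):
    """Language-model stand-in: autoregressive continuation from bigram counts."""
    bigrams = {}
    for prev, nxt in zip(prompt_codes, prompt_codes[1:]):
        bigrams.setdefault(prev, []).append(nxt)
    codes = list(prompt_codes)
    for _ in range(n_new):
        followers = bigrams.get(codes[-1])
        if followers:
            # Pick the follower seen most often after the last code in the prompt.
            nxt = max(sorted(set(followers)), key=followers.count)
        else:
            nxt = codes[-1]  # no context available: repeat the last code
        codes.append(nxt)
    return codes

def decode(codes, codebook):
    """Codec decoder stand-in: every code is turned into a frame independently."""
    return [sample for c in codes for sample in codebook[c]]

codebook = make_codebook(8)
prompt_wave = decode([1, 2, 1, 2, 1], codebook)   # pretend this is an audio prompt
prompt_codes = encode(prompt_wave, codebook)      # waveform -> discrete codes
generated = continue_codes(prompt_codes, n_new=3) # codes -> longer code sequence
output_wave = decode(generated, codebook)         # codes -> waveform
```

The point of the sketch is only the data flow: audio becomes a sequence of discrete codes, a sequence model extends that sequence conditioned on the prompt, and a decoder turns all codes back into samples in one pass.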

Data Collection

The study collected 12 types of ALM-based deepfake audio, denoted as A01-A12. Since most ALMs are not open-sourced, the majority of the audio samples were obtained from demo pages. The collected audio samples include:

- A01-AudioLM: High-quality audio generation with long-term consistency.

- A02-AudioLM-music: Coherent piano music continuations.

- A03-VALL-E: Neural codec language model generating discrete codes from textual or acoustic inputs.

- A04-VALL-E X: Cross-lingual neural codec language model.

- A05-SpeechX: Versatile speech generation model leveraging audio and text prompts.

- A06-UniAudio: Versatile audio generation model conditioning on multiple types of inputs.

- A07-LauraGPT: Model taking both audio and text as input and outputting both modalities.

- A08-ELLA-V: Zero-shot TTS framework with fine-grained control over synthesized audio.

- A09-HAM-TTS: TTS system leveraging a hierarchical acoustic modeling approach.

- A10-RALL-E: Robust language modeling method for text-to-speech synthesis.

- A11-NaturalSpeech 3: TTS system enhancing speech quality, similarity, and prosody.

- A12-VALL-E 2: Latest advancement in neural codec language models achieving human parity in zero-shot TTS.

Experimental Design

Countermeasure Training

The study considered two aspects for the countermeasure: the training datasets and the audio deepfake detection (ADD) model. The datasets used were:

- ASVspoof2019LA (19LA): Classic vocoder-based deepfake dataset.

- Codecfake: Latest codec-based deepfake dataset.

The ADD models used handcrafted features (Mel-spectrogram) and pre-trained features (wav2vec2-xls-r). The backbone networks chosen were LCNN and AASIST, commonly used in the field of ADD.

Experimental Setup

The experiments involved training on two different datasets:

- Vocoder-trained CM: Trained using the ASVspoof2019 LA training set.

- Codec-trained CM: Trained using the Codecfake training set.

Testing used the 19LA test set and an in-the-wild (ITW) dataset to evaluate how well the detection models generalize.

Results and Analysis

Results on Vocoder-based Dataset

The vocoder-trained W2V2-AASIST achieved the best equal error rate (EER): 0.122% on the 19LA test set and 23.713% on the ITW dataset, which the study takes as evidence of the CM's generalizability. With cross-domain training, W2V2-AASIST also performed well, with an EER of 3.806% on 19LA and 9.606% on ITW.
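For readers unfamiliar with the metric, the EER is the error rate at the threshold where the false acceptance rate (fake audio accepted as real) equals the false rejection rate (real audio rejected as fake). The following is a minimal sketch, assuming that higher scores mean "more likely genuine"; the function name `compute_eer` and the example scores are hypothetical and not taken from the study's code.

```python
def compute_eer(genuine_scores, spoof_scores):
    """Return (eer, threshold), assuming higher scores mean 'more likely genuine'."""
    # Every observed score is a candidate decision threshold.
    thresholds = sorted(set(genuine_scores) | set(spoof_scores))
    best = None
    for t in thresholds:
        # False rejection rate: genuine samples scored below the threshold.
        frr = sum(s < t for s in genuine_scores) / len(genuine_scores)
        # False acceptance rate: spoofed samples scored at or above the threshold.
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            # With finite data FAR and FRR rarely match exactly,
            # so report their mean at the closest crossing point.
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]

# Hypothetical scores: one genuine and one spoofed sample fall on the wrong side.
genuine = [0.9, 0.8, 0.35, 0.6]
spoof = [0.1, 0.2, 0.3, 0.7]
eer, threshold = compute_eer(genuine, spoof)
```

In this toy example one of four genuine samples and one of four spoofed samples are misclassified at the chosen threshold, giving an EER of 25%. An EER of 0.000% means some threshold separates the two classes perfectly.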

Results on ALM-based Dataset

For the 19LA-trained CM, the overall average (AVG) EER was poor: the lowest AVG was 24.403%, for W2V2-AASIST. The EER performance was consistent with the CM's performance on vocoder-based data. For the codec-trained CM, the results were surprising, with most EER values being 0.000%, indicating a very clear separation between real and fake audio. The best-performing model was W2V2-AASIST, achieving an AVG EER of 9.424%.

Confusion Matrix Analysis

The confusion matrix analysis for the worst cases (A01 and A02) of W2V2-AASIST revealed that the CM failed to detect ALM-based audio and produced false positives on genuine audio. For the codec-trained CM, the poor performance was due to real speech samples being misclassified as fake, while fake samples were correctly identified.
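A confusion matrix of this kind can be tabulated from labels and scores at a fixed threshold. The sketch below is illustrative only: the function names, scores, and threshold are hypothetical, and cells are keyed by (true label, predicted label) to avoid ambiguity about which class counts as "positive". It assumes higher scores mean "more likely real" and that both classes are present.

```python
from collections import Counter

def confusion_counts(labels, scores, threshold):
    """Count (true label, predicted label) pairs; higher score = more likely real."""
    counts = Counter()
    for label, score in zip(labels, scores):
        predicted = "real" if score >= threshold else "fake"
        counts[(label, predicted)] += 1
    return counts

def error_rates(counts):
    """Return (share of real audio flagged as fake, share of fake audio passed as real)."""
    n_real = counts[("real", "real")] + counts[("real", "fake")]
    n_fake = counts[("fake", "fake")] + counts[("fake", "real")]
    return counts[("real", "fake")] / n_real, counts[("fake", "real")] / n_fake

# Hypothetical scores mimicking the failure mode described for the codec-trained CM:
# fake audio is scored low (caught), but most real audio is scored low as well.
labels = ["real", "real", "real", "real", "fake", "fake", "fake", "fake"]
scores = [0.9, 0.4, 0.3, 0.2, 0.1, 0.05, 0.2, 0.15]
counts = confusion_counts(labels, scores, threshold=0.5)
real_as_fake, fake_as_real = error_rates(counts)
```

Here every fake sample is caught while three of four real samples are wrongly flagged, so the error sits entirely in the real-as-fake cell.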

Overall Conclusion

The study asked whether current deepfake audio detection models effectively detect ALM-based deepfake audio. Surprisingly, CMs trained on the Codecfake dataset detect ALM-based audio effectively, with most EERs approaching 0%. However, shortcomings remain, particularly high false negative rates, which point to the need for further research and improved countermeasures.

Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC) (No. 62101553, No. 62306316, No. U21B20210, No. 62201571).

References

- Z. Borsos, et al., “Audiolm: a language modeling approach to audio generation,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2023.

- F. Kreuk, et al., “Audiogen: Textually guided audio generation,” in The Eleventh International Conference on Learning Representations, 2022.

- C. Wang, et al., “Neural codec language models are zero-shot text to speech synthesizers,” arXiv preprint arXiv:2301.02111, 2023.

- A. Agostinelli, et al., “Musiclm: Generating music from text,” arXiv preprint arXiv:2301.11325, 2023.

- Z. Zhang, et al., “Speak foreign languages with your own voice: Cross-lingual neural codec language modeling,” arXiv preprint arXiv:2303.03926, 2023.

- T. Wang, et al., “Viola: Unified codec language models for speech recognition, synthesis, and translation,” arXiv preprint arXiv:2305.16107, 2023.

- J. Copet, et al., “Simple and controllable music generation,” Advances in Neural Information Processing Systems, vol. 36, 2024.

- X. Wang, et al., “Speechx: Neural codec language model as a versatile speech transformer,” arXiv preprint arXiv:2308.06873, 2023.

- Q. Chen, et al., “Lauragpt: Listen, attend, understand, and regenerate audio with gpt,” arXiv preprint arXiv:2310.04673, 2023.

- D. Yang, et al., “Uniaudio: An audio foundation model toward universal audio generation,” arXiv preprint arXiv:2310.00704, 2023.

- S. Chen, et al., “Vall-e 2: Neural codec language models are human parity zero-shot text to speech synthesizers,” arXiv preprint arXiv:2406.05370, 2024.

- H. Tak, et al., “Automatic speaker verification spoofing and deepfake detection using wav2vec 2.0 and data augmentation,” arXiv preprint arXiv:2202.12233, 2022.

- Y. Xie, et al., “Learning a self-supervised domain-invariant feature representation for generalized audio deepfake detection,” in Proc. INTERSPEECH, vol. 2023, 2023, pp. 2808–2812.

- Z. Wang, et al., “Generalized fake audio detection via deep stable learning,” arXiv preprint arXiv:2406.03237, 2024.

- X. Wang, et al., “Genuine-focused learning using mask autoencoder for generalized fake audio detection,” arXiv preprint arXiv:2406.03247, 2024.

- Y. Xie, et al., “Domain generalization via aggregation and separation for audio deepfake detection,” IEEE Transactions on Information Forensics and Security, 2023.

- A. Nautsch, et al., “Asvspoof 2019: spoofing countermeasures for the detection of synthesized, converted and replayed speech,” IEEE Transactions on Biometrics, Behavior, and Identity Science, vol. 3, no. 2, pp. 252–265, 2021.

- X. Liu, et al., “Asvspoof 2021: Towards spoofed and deepfake speech detection in the wild,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2023.

- Y. Xie, et al., “The codecfake dataset and countermeasures for the universally detection of deepfake audio,” arXiv preprint arXiv:2405.04880, 2024.

- A. Défossez, et al., “High fidelity neural audio compression,” arXiv preprint arXiv:2210.13438, 2022.

- Y. Song, et al., “Ella-v: Stable neural codec language modeling with alignment-guided sequence reordering,” arXiv preprint arXiv:2401.07333, 2024.

- C. Wang, et al., “Ham-tts: Hierarchical acoustic modeling for token-based zero-shot text-to-speech with model and data scaling,” arXiv preprint arXiv:2403.05989, 2024.

- D. Xin, et al., “Rall-e: Robust codec language modeling with chain-of-thought prompting for text-to-speech synthesis,” arXiv preprint arXiv:2404.03204, 2024.

- Z. Ju, et al., “Naturalspeech 3: Zero-shot speech synthesis with factorized codec and diffusion models,” arXiv preprint arXiv:2403.03100, 2024.

- D. Oneata, et al., “Towards generalisable and calibrated synthetic speech detection with self-supervised representations,” arXiv preprint arXiv:2309.05384, 2023.

- J. W. Lee, et al., “Representation Selective Self-distillation and wav2vec 2.0 Feature Exploration for Spoof-aware Speaker Verification,” in Proc. Interspeech 2022, 2022, pp. 2898–2902.

- G. Lavrentyeva, et al., “Stc antispoofing systems for the asvspoof2019 challenge,” arXiv preprint arXiv:1904.05576, 2019.

- J.-w. Jung, et al., “Aasist: Audio anti-spoofing using integrated spectro-temporal graph attention networks,” in Proceedings of the ICASSP, 2022, pp. 6367–6371.

Code:

https://github.com/xieyuankun/alm-add