Authors:

Toshihide Ubukata, Jialong Li, Kenji Tei

Paper:

https://arxiv.org/abs/2408.10266

Introduction

Diffusion models have recently emerged as a powerful class of generative models in the field of Generative Artificial Intelligence (GenAI). These models utilize stochastic processes to transform random noise into high-quality data through iterative denoising. Initially, diffusion models demonstrated their capabilities in image-related tasks such as generation, restoration, enhancement, and editing. The fundamental principle involves introducing noise to training data and learning to reverse this process through iterative denoising, effectively capturing complex data distributions.

Technically, diffusion models use stochastic differential equations to progressively refine noisy inputs into coherent outputs, enabling accurate modeling of the underlying data distribution. This iterative refinement process allows diffusion models to explore a wide solution space, generating high-quality, diverse outputs. Due to these characteristics, diffusion models have recently been applied in planning tasks, especially in high-dimensional scenarios like motion planning. Recent studies have increasingly reported advantages of diffusion models, such as robustness, highlighting their potential as planners.
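As a reference point, the discrete-time DDPM formulation is one standard instance of this idea; the SDE view generalizes it to continuous time. It defines a noise schedule $\beta_1,\dots,\beta_T$ and trains a network $\epsilon_\theta$ to predict the injected noise. Sampling then runs the learned reverse step from $t=T$ down to $t=1$. The notation below is the conventional one from the DDPM literature, not taken from the survey:

```latex
\begin{aligned}
q(x_t \mid x_0) &= \mathcal{N}\!\left(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t)\,I\right),
\qquad \bar\alpha_t = \prod_{s=1}^{t} (1-\beta_s),\\
\mathcal{L}(\theta) &= \mathbb{E}_{x_0,\,t,\,\epsilon\sim\mathcal{N}(0,I)}
\left\| \epsilon - \epsilon_\theta\!\left(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t\right) \right\|^2,\\
x_{t-1} &= \frac{1}{\sqrt{1-\beta_t}}\left(x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,\epsilon_\theta(x_t, t)\right) + \sigma_t z,
\qquad z \sim \mathcal{N}(0, I).
\end{aligned}
```

In planning applications, $x_0$ is typically an entire trajectory rather than an image.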

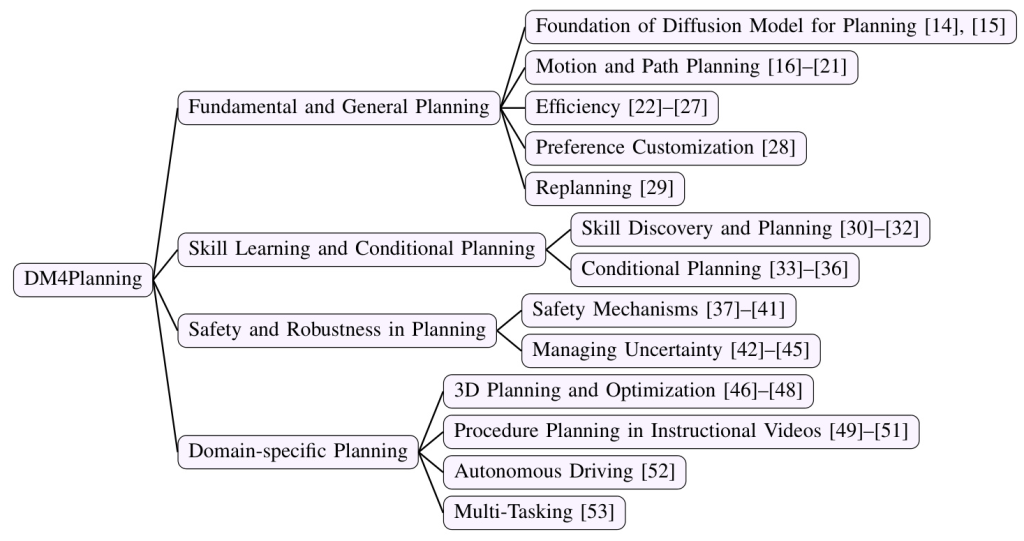

To comprehensively understand the field and promote its development, this paper conducts a systematic literature review of recent advancements in the application of diffusion models for planning. The paper categorizes and discusses the current literature from various perspectives, including datasets and benchmarks, fundamental studies, skill-centric and condition-guided planning, safety and uncertainty management, and domain-specific applications such as autonomous driving. Finally, the paper discusses the challenges and future directions in this field.

Literature Search Methodology

To survey the current state of the art in diffusion models for planning comprehensively, the authors searched IEEE Xplore, ACM Digital Library, DBLP, and arXiv for papers published from 2020 through May 31, 2024. The primary search keywords were “diffusion,” “diffuser,” “DDPM,” “plan,” and “replan.”

The search targeted papers on diffusion-based planning within the reinforcement learning paradigm and explicitly excluded those focused on large language models (LLMs) or natural language processing (NLP). Of the 47 papers initially identified, 41 remained after filtering. The excluded literature included papers on COVID-19 diffusion models, text generation using autoregressive models, sports analytics planning, collision-free motion planning, radon-220 diffusion in mouse tumors, and multimodal LLMs for text-to-image diffusion.

Dataset and Benchmark

The evaluation of diffusion models for planning encompasses a variety of datasets tailored to distinct tasks, ranging from motion planning and maze solving to robotic manipulation and reinforcement learning. Each dataset presents unique challenges that highlight the models’ capabilities and limitations.

Motion and Path Planning

- D4RL Benchmark Suite: Provides standardized datasets and environments for offline reinforcement learning, including tasks such as Maze2D, locomotion tasks like HalfCheetah, Hopper, and Walker2D, and complex multi-step manipulation tasks in kitchen environments.

- Gym-MuJoCo: Simulated continuous control environments built on the MuJoCo physics engine, including HalfCheetah, Hopper, and Walker2D.

- AntMaze: A MuJoCo-based benchmark environment that poses path planning and navigation challenges within complex mazes.

- Franka Kitchen, Real-world Kitchen, and Adroit: Involve complex manipulation tasks in both simulated and real-world environments, used to evaluate models like XSkill and Generative Skill Chaining.

- RLBench: Provides a variety of robotic tasks such as opening and closing boxes, used to evaluate adaptive replanning capabilities in dynamic environments.

- Block Stacking Tasks: Involve stacking blocks to achieve specified configurations, assessing the models’ precision in manipulation and planning under physical constraints.

3D Planning

- PROX and ScanNet: Used for 3D scene understanding, human pose generation, grasp planning, and robot arm motion planning.

Instructional Video

- CrossTask and COIN: Focus on generating action sequences from start to goal visual observations, used to evaluate models like PDPP and ActionDiffusion.

Autonomous Driving

- nuPlan: A closed-loop planning benchmark for autonomous driving that targets real-time decision-making and trajectory optimization. It is used to assess models such as Diffusion-ES on optimizing non-differentiable reward functions and following complex instructions.

Safety-Critical Datasets

- Maze2D and Gym-MuJoCo with added constraints: Constraints are introduced into experiments on these datasets to assess whether models keep planned trajectories safe and feasible. They are used to evaluate models such as SafeDiffuser and Cold Diffusion on the Replay Buffer.

Fundamental and General Planning

Foundation of Diffusion Model for Planning

This section introduces the foundational studies that have shaped the current landscape of planning with diffusion models.

- Diffuser: A pioneering study in this field, positioned within model-based reinforcement learning, integrating trajectory optimization directly into the model learning process through diffusion models. It introduces a diffusion probabilistic model designed for trajectory planning, predicting all timesteps concurrently. This approach is significant for its innovative use of iterative denoising processes, allowing for flexible conditioning and planning.

- Diffusion-QL: Focuses on offline reinforcement learning, presenting a conditional diffusion model for policy representation. This model leverages the expressiveness of diffusion processes to capture complex, multi-modal action distributions, essential for offline RL tasks. By combining behavior cloning with Q-learning guidance during the diffusion model training, Diffusion-QL effectively generates high-value actions through an iterative denoising process guided by Q-values.
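Diffuser's central idea is to denoise an entire trajectory at once, so that every timestep is refined together. Conditioning is applied by fixing known states such as the start and goal. The sketch below illustrates this idea and is not the authors' code. The linear β schedule, the function names, and `dummy_noise_model` are all assumptions; the latter is a hypothetical placeholder for a trained noise-prediction network, so the output only demonstrates the update rule.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumed, commonly used default)."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)
    return betas, alphas, alpha_bars

def dummy_noise_model(traj, t):
    """Hypothetical stand-in for a trained eps_theta(x_t, t); always predicts zero noise."""
    return [[0.0 for _ in state] for state in traj]

def sample_trajectory(horizon, state_dim, start, goal, num_steps=50,
                      noise_model=dummy_noise_model, seed=0):
    rng = random.Random(seed)
    betas, alphas, alpha_bars = make_schedule(num_steps)
    # Begin from Gaussian noise over the whole trajectory (all timesteps at once).
    traj = [[rng.gauss(0.0, 1.0) for _ in range(state_dim)] for _ in range(horizon)]
    for t in reversed(range(num_steps)):
        # Conditioning by inpainting: overwrite the known first and last states.
        traj[0], traj[-1] = list(start), list(goal)
        eps = noise_model(traj, t)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        sigma = math.sqrt(betas[t]) if t > 0 else 0.0  # no noise on the final step
        traj = [
            [(x - coef * e) / math.sqrt(alphas[t]) + sigma * rng.gauss(0.0, 1.0)
             for x, e in zip(state, eps_state)]
            for state, eps_state in zip(traj, eps)
        ]
    traj[0], traj[-1] = list(start), list(goal)
    return traj
```

For example, `sample_trajectory(8, 2, [0.0, 0.0], [1.0, 1.0])` returns eight 2-D states whose endpoints equal the requested start and goal. With a real trained model in place of the placeholder, the interior states would also become a coherent path.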

Motion and Path Planning

This section introduces diverse approaches leveraging diffusion models for motion and path planning, comparing their contributions and proposed methodologies.

- Ensemble-of-costs-guided Diffusion for Motion Planning (EDMP): Focuses on motion planning for robotic manipulation, integrating classical and deep-learning approaches, demonstrating generalization to diverse and out-of-distribution scenes.

- Intermittent Diffusion Based Path Planning: Caters to heterogeneous mobile sensors navigating cluttered environments, introducing random perturbations to gradient flow dynamics, aiding sensors in escaping local minima.

- Contrastive Diffuser: Shifts focus to reinforcement learning for long-term planning, employing contrastive learning to refine the base distribution of diffusion-based RL methods.

- Motion Planning Diffusion: Innovates in robot motion planning by integrating trajectory generative models with optimization-based planning.

- DiPPeR (Diffusion-based 2D Path Planner): Emphasizes scalability and speed for legged robots, employing an image-conditioned diffusion planner and a robust training pipeline using CNNs.

- Diffusion Policy: Advances robot visuomotor control, utilizing a conditional denoising diffusion process.

- Hierarchical Framework by Wu et al.: Integrates diffusion models with reinforcement learning for evasive planning, featuring a high-level diffusion model for global path planning and low-level reinforcement learning algorithms for local evasive maneuvers.

- DiPPeST (Diffusion-based Path Planner for Synthesizing Trajectories): Extends the capabilities of diffusion-based planning to quadrupedal robots, using RGB input for global path generation and real-time refinement.
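The intermittent diffusion idea works by adding random perturbations to gradient flow dynamics so a planner can escape local minima. It can be illustrated on a toy 2-D problem. Here "diffusion" refers to the stochastic noise term, not a learned DDPM. The sketch below is not the cited method's implementation. The cost function, obstacle, noise schedule, and all parameter values are hypothetical. Noise is switched on only during a short window of each period, and the best point seen is kept.

```python
import math
import random

def cost(p, goal=(5.0, 5.0), obstacle=(2.5, 2.5), radius=1.0):
    """Hypothetical cost: squared distance to goal plus a penalty inside an obstacle disc."""
    d_goal = (p[0] - goal[0]) ** 2 + (p[1] - goal[1]) ** 2
    d_obs = math.hypot(p[0] - obstacle[0], p[1] - obstacle[1])
    penalty = 10.0 * (radius - d_obs) ** 2 if d_obs < radius else 0.0
    return d_goal + penalty

def numerical_grad(f, p, h=1e-5):
    """Central-difference gradient of a 2-D function."""
    return [
        (f([p[0] + h, p[1]]) - f([p[0] - h, p[1]])) / (2 * h),
        (f([p[0], p[1] + h]) - f([p[0], p[1] - h])) / (2 * h),
    ]

def intermittent_diffusion(start, steps=2000, dt=0.01, sigma=1.0,
                           period=200, noisy_len=50, seed=0):
    """Euler-Maruyama gradient flow whose noise is active only intermittently."""
    rng = random.Random(seed)
    p = list(start)
    best, best_cost = list(p), cost(p)
    for k in range(steps):
        g = numerical_grad(cost, p)
        # Noise is on only for the first `noisy_len` steps of every `period` steps.
        s = sigma if (k % period) < noisy_len else 0.0
        p = [p[i] - g[i] * dt + s * math.sqrt(dt) * rng.gauss(0.0, 1.0) for i in range(2)]
        c = cost(p)
        if c < best_cost:
            best, best_cost = list(p), c
    return best, best_cost
```

Starting at `(0, 0)`, the straight descent path toward the goal runs into the obstacle penalty. On that path, pure gradient flow can stall at a local minimum in front of the disc. The noisy windows give the point a chance to slip around it.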

Efficiency

This section explores improving computational efficiency and decision-making effectiveness in diffusion model-based planning.

- Latent Diffuser: Addresses offline reinforcement learning by proposing a framework for continuous latent action space representation.

- Equivariant Diffuser for Generating Interactions (EDGI): Enhances sample efficiency and generalization in model-based reinforcement learning by incorporating spatial, temporal, and permutation symmetries.

- Diffused Task-Agnostic Milestone Planner (DTAMP): Utilizes diffusion-based generative sequence models for multi-task decision-making and long-term planning.

- DiffuserLite: Introduces a real-time diffusion planning approach built on a plan refinement process (PRP) that generates trajectories from coarse to fine granularity.

- Hierarchical Diffuser: Combines hierarchical and diffusion-based planning to improve efficiency in long-horizon tasks.
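DiffuserLite's plan refinement process and Hierarchical Diffuser share a skeleton: plan a sparse set of waypoints first, then fill in each gap at finer resolution. Each generation step then handles only a short segment. The sketch below shows only that skeleton. `interpolate` is a hypothetical placeholder that draws straight lines where either method would call a diffusion sampler, and the level sizes are arbitrary.

```python
def interpolate(a, b, n):
    """Hypothetical stand-in for a diffusion sampler: n evenly spaced states strictly between a and b."""
    return [[ai + (bi - ai) * k / (n + 1) for ai, bi in zip(a, b)] for k in range(1, n + 1)]

def coarse_to_fine_plan(start, goal, levels=(3, 3), segment_planner=interpolate):
    """Refine a plan level by level, inserting `n` new states into every segment."""
    plan = [list(start), list(goal)]
    for n in levels:
        refined = []
        for a, b in zip(plan[:-1], plan[1:]):
            refined.append(a)
            refined.extend(segment_planner(a, b, n))
        refined.append(plan[-1])
        plan = refined
    return plan
```

With the default `levels=(3, 3)`, the plan grows from 2 states to 5 and then to 17. Every waypoint fixed at a coarser level is kept as an anchor for the next one.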

Preference Customization

For human preference alignment in reinforcement learning, AlignDiff introduces a framework combining reinforcement learning from human feedback (RLHF) with diffusion models for zero-shot behavior customization. Key contributions include using RLHF to quantify multi-perspective human feedback datasets and developing metrics to evaluate agents’ preference matching, switching, and covering capabilities.

Replanning

Under unforeseen and changing conditions, replanning is crucial for enhancing adaptability and robustness in dynamic environments. Replanning with Diffusion Models (RDM) introduces an approach to determine optimal replanning times, enhancing planning efficiency and effectiveness in stochastic and long-horizon tasks.
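RDM's contribution is deciding *when* to replan rather than replanning at every step. The surrounding control loop can be sketched as follows. The trigger used here is a simple distance threshold between planned and observed states, a hypothetical stand-in. RDM's actual criterion is described in the paper and is not reproduced here. `straight_plan` and `drifting_dynamics` are toy, hypothetical components.

```python
import math

def should_replan(planned_state, observed_state, threshold):
    # Hypothetical trigger (not RDM's criterion): deviation from the plan.
    return math.dist(planned_state, observed_state) > threshold

def run_with_replanning(plan_fn, dynamics, start, steps, threshold):
    """Follow a plan step by step; replan on large deviation or when the plan runs out."""
    state = tuple(start)
    plan = plan_fn(state)
    k, replans = 0, 0
    for _ in range(steps):
        k += 1
        state = dynamics(state, plan[k])
        if k == len(plan) - 1 or should_replan(plan[k], state, threshold):
            plan, k = plan_fn(state), 0
            replans += 1
    return state, replans

def straight_plan(s):
    # Hypothetical planner: advance +1 along x for 4 steps, keeping y fixed.
    return [(s[0] + i, s[1]) for i in range(5)]

def drifting_dynamics(s, target):
    # Hypothetical environment: reaches the planned x, but y drifts by 0.3 per step.
    return (target[0], s[1] + 0.3)
```

With drift and `threshold=0.5`, the deviation exceeds the threshold every second step, so six steps trigger three replans. Without drift, replanning happens only when the current plan is used up.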

Skill Learning and Conditional Planning

This section delves into the state of the art in skill discovery, task planning, and trajectory generation through the lens of diffusion models and conditional generative approaches.

Skill Discovery and Planning

- XSkill: A cross-embodiment skill discovery framework leveraging imitation learning to bridge the gap between human and robot demonstrations.

- Generative Skill Chaining (GSC): Tackles long-horizon task and motion planning by integrating generative modeling with skill chaining.

- SkillDiffuser: Offers an interpretable hierarchical planning framework, combining skill abstractions with diffusion-based task execution.

Conditional Planning

- MetaDiffuser: Introduces a context-conditioned diffusion model for task-oriented trajectory generation in offline meta-reinforcement learning.

- Decision Diffuser: Positions conditional generative modeling as a viable alternative to traditional reinforcement learning methods.

- AdaptDiffuser: Extends diffusion model-based planning methods by incorporating an evolutionary approach.

- Constraint-Guided Diffusion Policies (CDG): Addresses UAV trajectory planning through an imitation learning-based approach.
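Conditional diffusion planners such as Decision Diffuser steer generation toward a condition, for example a target return, using classifier-free guidance. The survey does not spell out this mechanism. The sketch below shows the standard guidance rule in isolation. `toy_noise_model` is a hypothetical placeholder for a trained network that accepts `cond=None` as the unconditional case.

```python
def cfg_combine(eps_uncond, eps_cond, w):
    """Classifier-free guidance: eps_uncond + w * (eps_cond - eps_uncond), elementwise."""
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]

def toy_noise_model(x, cond=None):
    # Hypothetical stand-in for a trained network. cond=None is the unconditional
    # case, which a real model learns by randomly dropping the condition in training.
    if cond is None:
        return [0.1 * xi for xi in x]
    return [0.1 * xi - 0.05 * cond for xi in x]

def guided_eps(model, x, cond, w):
    """Query the model with and without the condition, then combine the predictions."""
    return cfg_combine(model(x, None), model(x, cond), w)
```

Setting `w = 0` ignores the condition and `w = 1` uses the conditional prediction as is. Values above 1 push samples harder toward the condition, trading diversity for adherence.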

Safety and Robustness in Planning

This section explores the safety and robustness of diffusion model planning, building on existing research in offline reinforcement learning robustness.

Safety Mechanisms

- DDPMs with Control Barrier Functions (CBFs): A framework developed for safety-critical optimal control, employing three distinct DDPMs for dynamically consistent trajectory generation, value estimation, and safety classification.

- Restoration Gap Metric: Introduces a metric to evaluate the quality of generated plans, coupled with a gap predictor for refinement.

- SafeDiffuser: Ensures safety constraints are consistently met by incorporating finite-time diffusion invariance and embedding control barrier functions into the diffusion process.

- Cold Diffusion on the Replay Buffer (CDRB): Utilizes cold diffusion to optimize the planning process via an agent’s replay buffer of previously visited states.

- LTLDoG: Modifies the inference steps of the reverse process based on finite linear temporal logic (LTLf) instructions, ensuring adherence to static and temporal safety constraints during trajectory generation.
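Several of these safety mechanisms share one pattern: enforce the constraint inside every denoising step rather than only checking the final plan. The sketch below shows the simplest version of that pattern. After each step, every waypoint is projected onto a single linear constraint `a · x <= b`, which is the closed-form solution of a one-constraint quadratic program. This is a simplified stand-in, not SafeDiffuser's control-barrier-function formulation. `denoise_step` is a hypothetical placeholder for a learned reverse step.

```python
def project_halfspace(x, a, b):
    """Closest point to x (in Euclidean norm) that satisfies a . x <= b."""
    viol = sum(ai * xi for ai, xi in zip(a, x)) - b
    if viol <= 0:
        return list(x)  # already safe
    norm_sq = sum(ai * ai for ai in a)
    return [xi - viol * ai / norm_sq for ai, xi in zip(a, x)]

def safe_denoise(traj, denoise_step, a, b, num_steps):
    """Run reverse steps, projecting every waypoint back into the safe set after each one."""
    for t in reversed(range(num_steps)):
        traj = denoise_step(traj, t)
        traj = [project_halfspace(x, a, b) for x in traj]
    return traj
```

Because the projection runs after every step, no intermediate iterate leaves the safe set, not only the final plan. This loosely mirrors the invariance goal described for SafeDiffuser.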

Managing Uncertainty

- Planning as In-Painting: Introduces a language-conditioned diffusion model for task planning in partially observable environments.

- DYffusion: A dynamics-informed diffusion model designed for spatiotemporal forecasting.

- PlanCP: Introduces conformal prediction for uncertainty-aware planning with diffusion dynamics models.

- DiMSam: Utilizes diffusion models as samplers for task and motion planning (TAMP) under partial observability.
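Conformal prediction, as used by PlanCP, turns held-out prediction errors into an uncertainty radius. Under exchangeability, that radius covers a new error with probability at least 1 − α. The sketch below shows generic split conformal prediction, not PlanCP's exact procedure. It assumes the calibration errors come from comparing a diffusion dynamics model's predictions with held-out ground-truth states. `plan_is_safe` is a hypothetical example of how the radius might be used.

```python
import math

def conformal_radius(cal_errors, alpha):
    """Split conformal prediction: the ceil((n+1)(1-alpha))-th smallest calibration error."""
    n = len(cal_errors)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for this coverage level
    return sorted(cal_errors)[k - 1]

def plan_is_safe(predicted_states, obstacle, min_clearance, radius):
    # Hypothetical check: each predicted state may be off by up to `radius`,
    # so the clearance must hold even in the worst case within that radius.
    return all(math.dist(s, obstacle) - radius >= min_clearance for s in predicted_states)
```

For example, with ten calibration errors `1.0, ..., 10.0` and `alpha=0.25`, the radius is the 9th smallest error, `9.0`. With very few calibration points and a strict α, the radius becomes infinite, which honestly signals that the coverage target cannot be met.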

Domain-Specific Planning

The application of diffusion models is widespread in fields such as robotics, autonomous driving, and instructional content analysis.

3D Planning and Optimization

- SceneDiffuser: A model designed for integrating scene-conditioned generation, physics-based optimization, and goal-oriented planning in 3D scenes.

- 3D Diffusion Policy (DP3): Focuses on visual imitation learning and robot manipulation, integrating 3D visual representations with diffusion policies.

- RGB-based One-Shot View Planning: Uses 3D diffusion models as priors for RGB-based one-shot view planning to optimize object reconstruction tasks.

Procedure Planning in Instructional Videos

- PDPP: Introduces a novel approach to procedural planning by conceptualizing it as a conditional distribution-fitting challenge.

- Masked Diffusion with Task-awareness: Advances procedural planning by integrating task-oriented attention mechanisms to manage decision spaces more effectively.

- ActionDiffusion: Incorporates temporal dependencies between actions within its diffusion model framework to heighten the precision of action plan generation.

Autonomous Driving

- Diffusion-ES: Combines gradient-free evolutionary search with diffusion models to optimize trajectories for autonomous driving and instruction following on non-differentiable, black-box objectives.
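The evolutionary side of Diffusion-ES can be sketched as a loop that scores candidate trajectories with a black-box reward, keeps the best ones, and mutates them. In Diffusion-ES the diffusion model is used, roughly, to keep mutated trajectories plausible by noising and then denoising them. In this sketch `smooth` is a hypothetical placeholder for that denoising. `reach_reward`, the 1-D trajectories, and all parameter values are likewise assumptions.

```python
import random

def smooth(traj):
    """Hypothetical stand-in for diffusion denoising: a 3-point moving average on interior states."""
    out = list(traj)
    for i in range(1, len(traj) - 1):
        out[i] = (traj[i - 1] + traj[i] + traj[i + 1]) / 3.0
    return out

def reach_reward(traj, target=2.0):
    # Hypothetical black-box reward: only evaluated, never differentiated.
    return -(traj[-1] - target) ** 2

def diffusion_es(reward, init_pop, generations=30, elite=4, noise=0.3, seed=0):
    rng = random.Random(seed)
    pop = [list(t) for t in init_pop]
    for _ in range(generations):
        pop.sort(key=reward, reverse=True)  # gradient-free selection by score
        elites = pop[:elite]
        children = []
        while len(elites) + len(children) < len(pop):
            parent = rng.choice(elites)
            # Mutation: perturb with noise, then "denoise" back toward plausible plans.
            noisy = [x + rng.gauss(0.0, noise) for x in parent]
            children.append(smooth(noisy))
        pop = elites + children
    return max(pop, key=reward)
```

Because the elites survive unchanged, the best reward never decreases across generations. The reward only needs to be evaluated, which is why the approach suits non-differentiable driving objectives.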

Multi-Tasking

- Multi-Task Diffusion Model (MTDIFF): Introduces a diffusion-based generative model designed for handling extensive multi-task offline data, showcasing its ability to plan and synthesize data across various tasks.

Research Challenges

Combination with Other Generative Models

Combining diffusion models with other generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), offers the potential for enhancing the performance, robustness, and efficiency of diffusion models used in planning. Integrating these models presents challenges, particularly in ensuring model compatibility and optimization stability.

Scalability and Real-Time Applications

Achieving efficient architectures requires exploring advanced pruning and quantization techniques that reduce model size and computational demands without sacrificing performance. For real-time applications, pairing diffusion models with edge computing could strengthen real-time processing capabilities.

Generalization and Robustness

Generalization and robustness remain challenging for advanced learning models, particularly in meta-learning. A core challenge is developing algorithms that perform reliably across diverse environments and adapt rapidly without overfitting.

Human-Robot Interaction

For human-robot interaction, it would be worth exploring how diffusion-based planners can make robotic actions more interpretable and controllable, which is crucial for effective collaboration. Another key step is refining these models to translate complex and often ambiguous human instructions into precise robotic actions.

Conclusion

This paper systematically reviews the application of diffusion models in planning, underscoring their potential and versatility across various domains. The integration of diffusion models into model-based and offline reinforcement learning, motion and path planning, and hierarchical planning has been shown to enhance efficiency, flexibility, and performance on complex decision-making problems. Recent advances in skill learning, task planning, and trajectory generation with conditional generative approaches have further improved the generalization capabilities of robotic systems. Safety mechanisms and uncertainty management techniques have also been integrated into diffusion-based planners, improving their reliability in safety-critical applications. Diffusion models have also been applied successfully in specific domains such as 3D planning. Future challenges include improving computational efficiency and integrating diverse data sources.